Inference is everything.

San Francisco and New York

Joined March 2021

- Tweets 1,793

- Following 285

- Followers 6,239

- Likes 2,762

Pinned Tweet

We raised a $150M Series D! Thank you to all of our customers who trust us to power their inference.

We're grateful to work with incredible companies like @Get_Writer, @zeddotdev, @clay_gtm, @trymirage, @AbridgeHQ, @EvidenceOpen, @MeetGamma, @Sourcegraph, and @usebland.

This round was led by @bondcap, with @jaysimons joining our Board. We're also thrilled to welcome @conviction and @CapitalG to the round, alongside support from @01Advisors, @IVP, @sparkcapital, @GreylockVC, @ScribbleVC, @BoxGroup, and Premji Invest.

Today, we’re excited to announce our $150M Series D, led by BOND, with Jay Simons joining our Board. We’re also thrilled to welcome Conviction and CapitalG to the round, alongside support from 01 Advisors, IVP, Spark Capital, Greylock Partners, Scribble Ventures, and Premji Invest.

The last eighteen months have been a whirlwind; as the AI application layer has taken off, we've been proud to play a small part in helping world-class companies run their production workloads. Thanks to all our customers, including Abridge, Bland, Clay, Gamma, Mirage, OpenEvidence, Sourcegraph, WRITER, and Zed Industries.

We’re just getting started. If you’re building the next generation of AI products, we’d love to work with you.

Heading to KubeCon next week? Come visit the team at Booth #631 to test your AI knowledge. Top of the leaderboard gets prizes! 🏆

Baseten retweeted

Kimi K2 Thinking is the new leading open weights model: it demonstrates particular strength in agentic contexts but is very verbose, generating the most tokens of any model in completing our Intelligence Index evals

@Kimi_Moonshot's Kimi K2 Thinking achieves a 67 in the Artificial Analysis Intelligence Index. This positions it clearly above all other open weights models, including the recently released MiniMax-M2 and DeepSeek-V3.2-Exp, and second only to GPT-5 amongst proprietary models.

It used the highest number of tokens ever across the evals in Artificial Analysis Intelligence Index (140M), but with Moonshot’s official API pricing of $0.6/$2.5 per million input/output tokens (for the base endpoint), overall Cost to Run Artificial Analysis Intelligence Index comes in cheaper than leading frontier models at $356. Moonshot also offers a faster turbo endpoint priced at $1.15/$8 (driving a Cost to Run Artificial Analysis Intelligence Index result of $1172 for the turbo endpoint - second only to Grok 4 as the most expensive model). The base endpoint is very slow at ~8 output tokens/s while the turbo is somewhat faster at ~50 output tokens/s.
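The cost figures above come from multiplying token counts by per-million-token prices. Here is a minimal sketch of that arithmetic. The function name and the input/output split are hypothetical: the post reports only the 140M total, so the example illustrates the formula and does not try to reproduce the $356 or $1172 results.

```python
def cost_to_run(input_tokens: int, output_tokens: int,
                input_price_per_m: float, output_price_per_m: float) -> float:
    """Return the USD cost of a workload given per-million-token prices."""
    input_cost = (input_tokens / 1_000_000) * input_price_per_m
    output_cost = (output_tokens / 1_000_000) * output_price_per_m
    return input_cost + output_cost


# Prices quoted in the post (USD per million input/output tokens).
BASE_PRICES = (0.60, 2.50)
TURBO_PRICES = (1.15, 8.00)

# Hypothetical split, for illustration only: 10M input and 100M output tokens.
input_tokens, output_tokens = 10_000_000, 100_000_000

base_cost = cost_to_run(input_tokens, output_tokens, *BASE_PRICES)
turbo_cost = cost_to_run(input_tokens, output_tokens, *TURBO_PRICES)
print(f"base: ${base_cost:.2f}, turbo: ${turbo_cost:.2f}")
```

Because output tokens are priced several times higher than input tokens on both endpoints, a verbose reasoning model's bill is driven mostly by how much it generates.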

The model is one of the largest open weights models ever at 1T total parameters with 32B active. K2 Thinking is the first reasoning model release in Moonshot AI’s Kimi K2 model family, following non-reasoning Kimi K2 Instruct models released previously in July and September 2025.

Moonshot AI only refers to post-training in their announcement. This release highlights the continued trend of post-training & specifically RL driving gains in performance for reasoning models and in long horizon tasks involving tool calling.

Key takeaways:

➤ Details: text only (no image input), 256K context window, natively released in INT4 precision, 1T total with 32B active (~594GB)

➤ New leader in open weights intelligence: Kimi K2 Thinking achieves a 67 in the Artificial Analysis Intelligence Index. This is the highest open weights score yet and significantly higher than gpt-oss-120b (61), MiniMax-M2 (61), Qwen 235B A22B 2507 (57) and DeepSeek-V3.2-Exp (57). This release continues the trend of open weights models closely following proprietary models in intelligence achieved

➤ China takes back the open weights frontier: Releases from China based AI labs have led in open weights intelligence offered for most of the past year. OpenAI’s gpt-oss-120b release in August 2025 briefly took back the leadership position for the US. Moonshot AI’s K2 Thinking takes back the leading open weights model mantle for China based AI labs

➤ Strong agentic performance: Kimi K2 Thinking demonstrates particular strength in agentic contexts, as showcased by its #2 position in the Artificial Analysis Agentic Index - where it is second only to GPT-5. This is mostly driven by K2 Thinking achieving 93% in 𝜏²-Bench Telecom, an agentic tool use benchmark where the model acts as a customer service agent. This is the highest score we have independently measured. Tool use in long horizon agentic contexts was a strength of Kimi K2 Instruct and it appears this new Thinking variant makes substantial gains

➤ Top open weights coding model, but behind proprietary models: K2 Thinking does not score a win in any of our coding evals - it lands in 6th place in Terminal-Bench Hard, 7th place in SciCode and 2nd place in LiveCodeBench. Compared to open weights models, it is in first or first equal for each of these evals - and therefore comes in ahead of previous open weights leader DeepSeek V3.2 in our Artificial Analysis Coding Index

➤ Biggest leap for open weights in Humanity’s Last Exam: K2 Thinking’s strongest results include Humanity’s Last Exam, where we measured a score of 22.3% (no tools) - an all time high for open weights models and coming in only behind GPT-5 and Grok 4

➤ Verbosity: Kimi K2 Thinking is very verbose - 140M total tokens are used to run our Intelligence Index evaluations, ~2.5x the number of tokens used by DeepSeek V3.2 and ~2x compared to GPT-5. This high verbosity drives both higher cost and higher latency, compared to less verbose models. On Moonshot’s base endpoint, K2 Thinking is 2.5x cheaper than GPT-5 (high) but 9x more expensive than DeepSeek V3.2 (Cost to Run Artificial Analysis Intelligence Index)

➤ Reasoning variant of Kimi K2 Instruct: The model, as per its naming, is a reasoning variant of Kimi K2 Instruct. The model has the same architecture and same number of parameters (though different precision) as Kimi K2 Instruct. It continues to only support text inputs and outputs

➤ 1T parameters but INT4 instead of FP8: Unlike Moonshot’s prior Kimi K2 Instruct releases that used FP8 precision, this model has been released natively in INT4 precision. Moonshot used quantization aware training in the post-training phase to achieve this. The impact of this is that K2 Thinking is only ~594GB, compared to just over 1TB for K2 Instruct and K2 Instruct 0905 - which translates into efficiency gains for inference and training. A potential reason for INT4 is that pre-Blackwell NVIDIA GPUs do not have support for FP4, making INT4 more suitable for achieving efficiency gains on earlier hardware

➤ Access: The model is available on @huggingface with a modified MIT license. @Kimi_Moonshot is serving an official API (available globally) and third party inference providers are already launching endpoints - including @basetenco, @FireworksAI_HQ, @novita_labs, @parasail_io
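The INT4 versus FP8 size comparison in the takeaways above can be roughly checked with back-of-the-envelope arithmetic. This sketch assumes every parameter is stored at a single precision and ignores metadata; the helper name is hypothetical. The reported ~594GB is above the pure INT4 estimate, presumably because some tensors stay at higher precision and quantization scales take space, though the post does not state this.

```python
def weight_size_gb(num_params: float, bits_per_param: float) -> float:
    """Approximate weight storage in GB (10^9 bytes), ignoring metadata."""
    bytes_total = num_params * bits_per_param / 8
    return bytes_total / 1e9


TOTAL_PARAMS = 1e12  # 1T total parameters, as stated in the post

int4_gb = weight_size_gb(TOTAL_PARAMS, 4)  # lower bound if all weights were INT4
fp8_gb = weight_size_gb(TOTAL_PARAMS, 8)   # estimate if all weights were FP8

print(f"INT4: ~{int4_gb:.0f} GB, FP8: ~{fp8_gb:.0f} GB")
```

The estimates bracket the figures in the post: about 500 GB for uniform INT4 against the reported ~594GB, and about 1,000 GB for uniform FP8 against "just over 1TB".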

Baseten retweeted

Kimi K2 was already the best model for creative writing.

K2 Thinking takes it to the next level for deep research and technical content.

I tested Kimi head-to-head with GPT 5 Pro on highly technical writing with thousands of tokens of context + agentic search.

Results:

🚀 Hello, Kimi K2 Thinking!

The Open-Source Thinking Agent Model is here.

🔹 SOTA on HLE (44.9%) and BrowseComp (60.2%)

🔹 Executes up to 200 – 300 sequential tool calls without human interference

🔹 Excels in reasoning, agentic search, and coding

🔹 256K context window

Built as a thinking agent, K2 Thinking marks our latest efforts in test-time scaling — scaling both thinking tokens and tool-calling turns.

K2 Thinking is now live on kimi.com in chat mode, with full agentic mode coming soon. It is also accessible via API.

🔌 API is live: platform.moonshot.ai

🔗 Tech blog: moonshotai.github.io/Kimi-K2…

🔗 Weights & code: huggingface.co/moonshotai

Baseten retweeted

wow…Kimi K2 thinking 🧠 is scary good…

It’s THE best open source agentic model, with 200-300 continuous tool calls.

I pushed it hard… Here’s what I built in 10min:

Fun fact - we asked people to describe their favorite agent in SF. We got suggestions for a bunch of new agentic apps to try.

Our favorite agent? Probably James Bond.

If you’re living in the new world of agentic AI check out our new deep dive on tool calling in inference.

Check out the blog in the comments

full vid: piped.video/IgFe361zRW4

Baseten retweeted

Just as TikTok started as Musical.ly, you can potentially see a new App Store get created with the initial seed being tools that help users modify apps that their friends use, hyper adapting it to their needs.

With the cost of creation dropping to near zero and folks like @basetenco ensuring reliable inference, this new category of products is one to look out for!

Kudos to team @wabi !

Our team grows when our customers grow, and our customers are on a tear. Please welcome Zane, Allen, Brooke, and Katie to Baseten!

Zane, Allen, and Brooke join our GTM team and Katie joins our Recruiting team. They just started and are already making a ton of impact.

Read more about Baseten Training in our launch blog baseten.co/blog/baseten-trai…

After months of feedback from our early customers and thousands of jobs completed, Baseten Training is officially ready for everyone. 🚀

Access compute on demand, train any model, run multi-node jobs, and deploy from checkpoints with cache-aware scheduling, an ML Cookbook, tool calling recipes, and more.

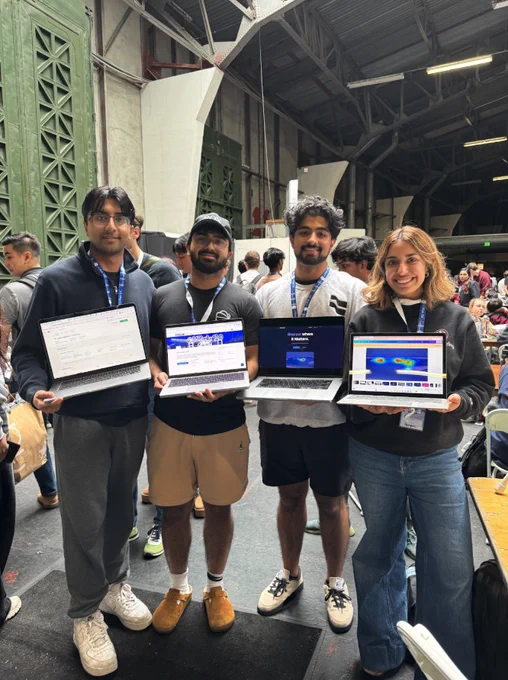

We had a great weekend at @CalHacks 12.0 in SF

Congratulations to the winners of the Baseten prize for best use of open source models: Salient Labs.

The team built a video streaming compression engine using a real-time video saliency model running on Baseten.

We are so excited to be a launch partner for @nvidia Nemotron Nano 2 VL today and offer day-zero support for this highly accurate and efficient vision language model, alongside other models in the Nemotron family.

To learn more, read our blog here

baseten.co/blog/high-perform…

Baseten retweeted

Why does being the fastest on NVIDIA for gpt-oss matter?

1. Many companies are looking for a US-based open source model, and GPT-OSS is the best out right now

2. Custom hardware providers show up well on benchmarks, but capacity is a real concern for companies at scale

3. The research that goes into making something like gpt-oss faster often ripples into other models, so I wouldn't be surprised to see more huge results from @basetenco on the way 👀

This week, Baseten's model performance team unlocked the fastest TPS and TTFT for gpt-oss 120b on @nvidia hardware. When gpt-oss launched we sprinted to offer it at 450 TPS... now we've exceeded 650 TPS and 0.11 sec TTFT... and we'll keep working to keep raising the bar.

We are proud to offer the best E2E latency available with near-limitless scale, incredible performance, and the highest uptime (99.99%).

Another week, another group of new Baseten colleagues to introduce! Please welcome Victoria Jones, Analisa Ruff, Aryan Lohia, and Sarah Zugelder.

All of them join us on the GTM team, talking to our fast-growing customer base every single day. Can't wait to see what you accomplish!

Baseten retweeted

Faster models yield better products! There are so many dimensions to what "performance" means, but we're happy to be pushing the limits on both TTFT and TPS.

There's an obsession with tok/sec as *the* metric in LLM inference. But in latency-sensitive use cases the metric that matters more is time-to-first-token:

- Code edit use cases have short outputs and overall latency is heavily determined by ttft

- Voice AI use cases care about time-to-first-sentence so they can send the sentence to a TTS model. ttfs is largely determined by ttft.

So we optimized for ttft and came out on top of all providers, whether they use GPUs or other accelerators.
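The argument above can be made concrete with a simplified latency model: end-to-end latency is roughly time-to-first-token plus output length divided by decode speed. This is a sketch under that assumption (a constant decode rate after the first token), not a description of how any provider measures latency. The function names and the two output lengths are hypothetical; the 0.11 sec TTFT and 650 TPS figures are the ones quoted earlier in this feed for gpt-oss 120b.

```python
def end_to_end_latency(ttft_s: float, tps: float, output_tokens: int) -> float:
    """Simplified model: time to first token plus steady-state decode time."""
    return ttft_s + output_tokens / tps


def ttft_share(ttft_s: float, tps: float, output_tokens: int) -> float:
    """Fraction of end-to-end latency that is spent waiting for the first token."""
    return ttft_s / end_to_end_latency(ttft_s, tps, output_tokens)


TTFT_S = 0.11  # seconds, figure quoted in the feed
TPS = 650.0    # output tokens per second, figure quoted in the feed

# Hypothetical output lengths: a short code edit and a long generation.
short_share = ttft_share(TTFT_S, TPS, output_tokens=20)
long_share = ttft_share(TTFT_S, TPS, output_tokens=2000)

print(f"short output: {short_share:.0%} of latency is TTFT")
print(f"long output:  {long_share:.0%} of latency is TTFT")
```

Under this model, TTFT accounts for most of the latency on short outputs and only a small fraction on long ones, which is why short-output workloads such as code edits and first-sentence voice responses are more sensitive to TTFT than to TPS.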

Check out our deep dive into all our perf updates: baseten.co/blog/how-we-made-…