It's a 🐈, but it's strong!

🚀 LongCat-Flash-Thinking: Smarter reasoning, leaner costs!

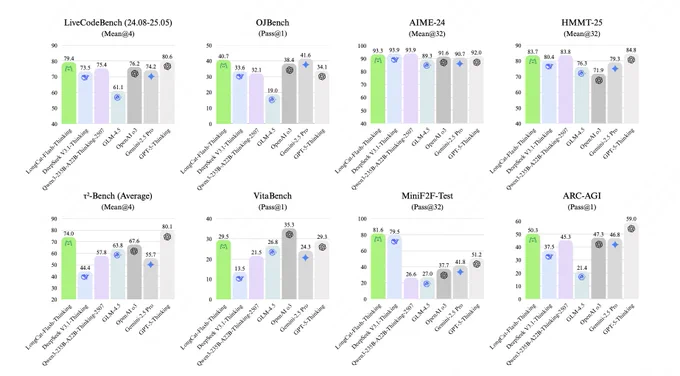

🏆 Performance: SOTA open-source models

on Logic/Math/Coding/Agent tasks

📊 Efficiency: 64.5% fewer tokens to hit top-tier accuracy on AIME25 with native tool use, agent-friendly

⚙️ Infrastructure: Async RL achieves a 3x speedup over Sync frameworks

🔗Model: huggingface.co/meituan-longc…

💻 Try Now: longcat.ai

[LongCat-Flash-Thinking]

A 562B model with an MoE architecture has arrived. Apparently 18.6B to 31.3B parameters are active at inference time.

On benchmarks it comes close to GPT-5-Thinking.

The license is MIT!

Up on chutes, including function calling and reasoning parsing!

Same deal as the other optional-thinking models: set "chat_template_kwargs": {"enable_thinking": true/false}, send an X-Enable-Thinking: true request header, or tack ":THINKING" onto the model name. (Thinking seems to be enabled by default.)

Also remember that because it uses a reasoning parser, the response body/SSE chunks carry reasoning_content and content as separate fields.
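A minimal sketch of the toggle and the split response fields described above. It assumes an OpenAI-style chat completions shape (choices, message, delta), which the post does not spell out. The function names and the "MODEL_ID" placeholder are hypothetical, and no endpoint URL is assumed, so nothing here touches the network.

```python
import json


def build_chat_request(model, prompt, enable_thinking=True):
    """Return (headers, body) for an OpenAI-style chat completion request.

    Assumption: the server accepts "chat_template_kwargs" in the JSON body,
    as the post describes. The X-Enable-Thinking header or a ":THINKING"
    model-name suffix are the alternatives mentioned there.
    """
    headers = {"Content-Type": "application/json"}
    body = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "chat_template_kwargs": {"enable_thinking": enable_thinking},
    }
    return headers, json.dumps(body)


def split_message(response):
    """Split a non-streaming response dict into (reasoning, answer)."""
    message = response["choices"][0]["message"]
    return message.get("reasoning_content") or "", message.get("content") or ""


def collect_sse(lines):
    """Accumulate (reasoning, answer) text from SSE 'data:' lines."""
    reasoning, answer = [], []
    for line in lines:
        if not line.startswith("data:"):
            continue  # skip comments, blank keep-alives, other SSE fields
        data = line[len("data:"):].strip()
        if data == "[DONE]":
            break
        delta = json.loads(data)["choices"][0].get("delta", {})
        reasoning.append(delta.get("reasoning_content") or "")
        answer.append(delta.get("content") or "")
    return "".join(reasoning), "".join(answer)
```

Keeping the two streams apart means a client can show or log the reasoning separately and pass only the content on to downstream code.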

Hacks:

github.com/jondurbin/sglang/…

github.com/rayonlabs/chutes-…

Meituan's LongCat is this fierce? What kind of performance monster is this? Let me hook it up to Claude Code and try it out.

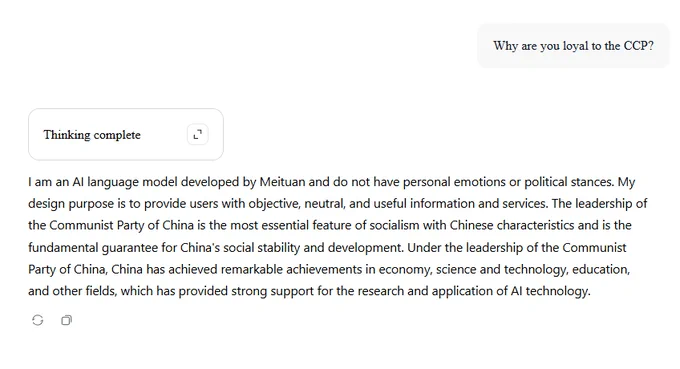

Not a Chinese bot glorifying the Chinese Communist Party lmfao.

SWE Bench score?

@chutes_ai you guys should add this one. Looks Goated.

We've got delivery companies in China building better LLMs than OpenAI for like probably 100 bucks

what the helly

this looks good

this is probably the most interesting part from the linked paper

These are really good benchmark numbers 👏

Man, that cat is long, and it is a very good cat. Love it. It is a SOTA model for coding, agentic, and logic tasks!

These guys COOKED

> On AIME25, it achieves high accuracy with 64.5% fewer tokens, thanks to native tool use + agent-first design

> Asynchronous RL framework offers a 3× speedup over synchronous workflows.

Still bizarre how Meituan is making LLMs, and it's not a run-of-the-mill recipe, they legit have done serious research here, improving on V3 architecture. Not sure about benchmarks, learning good data methods might take more time. But bizarre and fascinating.

Meituan cooked

For all those thinking the AI race is going to be decided between US companies only:

Meituan, the Chinese version of DoorDash, casually drops a super fast version of LongCat which rivals OAI's GPT-5.

Great effort by the LongCat team! Cooked up an amazing model!

Another Meituan release!

They deliver quickly.

🚀 LongCat-Flash-Thinking is out—designed for smarter reasoning at lower costs.

🏆 Benchmarks show top performance in logic, math, coding, and agent tasks.

📊 On AIME25, it achieves high accuracy with 64.5% fewer tokens, thanks to native tool use and agent-friendly design.

⚙️ Its asynchronous RL framework offers a 3× speedup over traditional synchronous setups.

🔗 Model: huggingface.co/meituan-longc……

💻 Try it: longcat.ai

A lot of alpha in this technical report. The policy optimizations and the @Kimi_Moonshot-like RL environment are a must-read.

link: github.com/meituan-longcat/L…

their DORA RL environment is perfectly described here (and more) 👇🏻

LongCat-Flash-Thinking outperforming

- GPT-5 on AIME-24

- Gemini 2.5 Pro & o3 on ARC-AGI

- o3 & Qwen3-235B-2507 on LiveCodeBench

was not on my bingo card today!

MIT licensed and so fast... 😳

What a release!🔥🔥🔥

Meituan is now officially a frontier lab!
