I already shared :)

A confirmation that the JSON function-calling format was a half-baked idea from OpenAI trying to patch plugins/GPTs :D

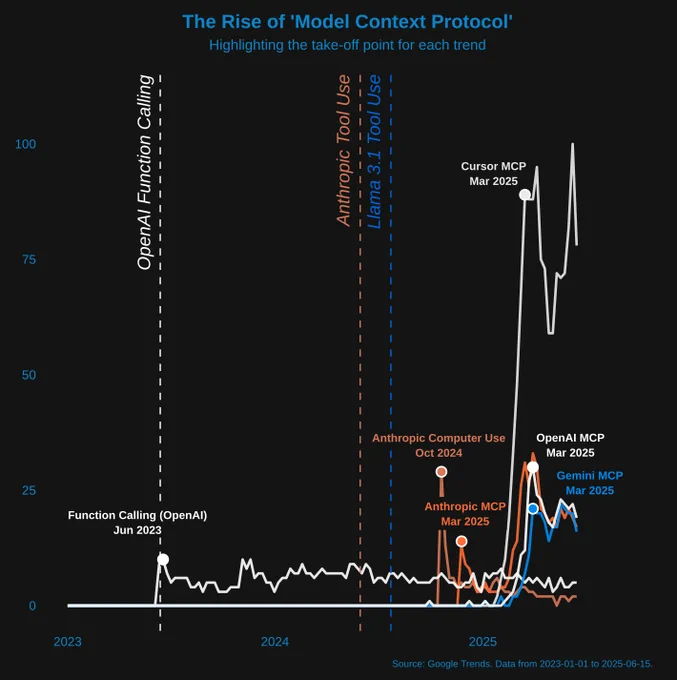

A Brief Visual History of Function Calling and MCP

On June 13, 2023, OpenAI introduced function calling, describing it as “a new way to more reliably connect GPT's capabilities with external tools and APIs.” Remember those GPTs? Anyway, at its core, this simply meant that OpenAI further trained gpt-4-0613 and gpt-3.5-turbo-0613 to “intelligently choose to output a JSON object containing arguments to call [...] functions.” This output could then be parsed, used to run code, and the results (as a string) returned to the LLM. The LLM would then digest the results and respond to the user with this augmented information.
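The parse, run, return-as-string loop described above can be sketched roughly as follows. This is a minimal illustration, not OpenAI's implementation: the `TOOLS` registry, the `get_current_time` tool, the `dispatch_tool_call` helper, and the exact shape of `model_output` are all hypothetical, and no real API is called.

```python
import json
from datetime import datetime


def get_current_time(fmt: str = "%H:%M:%S") -> str:
    """Hypothetical tool: return the current local time as a string."""
    return datetime.now().strftime(fmt)


# Hypothetical registry mapping tool names to plain Python callables.
TOOLS = {"get_current_time": get_current_time}


def dispatch_tool_call(raw: str) -> str:
    """Parse a model-emitted JSON tool call, run it, return the result as a string."""
    call = json.loads(raw)
    func = TOOLS[call["name"]]
    args = call.get("arguments", {})
    # Assumption: arguments may arrive as a JSON-encoded string or as an object.
    if isinstance(args, str):
        args = json.loads(args)
    return str(func(**args))


# Stand-in for the JSON object a fine-tuned model might emit.
model_output = '{"name": "get_current_time", "arguments": {"fmt": "%H:%M"}}'
result = dispatch_tool_call(model_output)
print(result)  # this string would be sent back to the LLM as the tool result
```

The only model-specific part is getting the LLM to emit that JSON reliably; everything after that is ordinary parsing and dispatch.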

It took almost a full year for Anthropic to support a similar feature, but they soon put it to impressive use in computer-control tasks. Shortly after, open-source models (like Llama 3.1) were also fine-tuned to support tool calling.

Not long after introducing computer control, Anthropic launched the Model Context Protocol (MCP).

Ultimately, tools really gained widespread excitement and attention when MCP was integrated into Cursor.

So now, a patch that was originally introduced to give GPTs more power safely has become the standard for tool calling. But this standard is probably much worse than simply telling your LLM to use a markdown code chunk with a special # | run…

```python
# | run
from datetime import datetime

# Get the current date and time
now = datetime.now()

# Print the time in HH:MM:SS format
current_time = now.strftime("%H:%M:%S")
print("Current Time =", current_time)
```
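For comparison, here is a rough sketch of what the host side of the `# | run` convention might look like: scan the model's reply for fenced Python chunks, execute only those whose first line is the marker, and capture stdout so it can be fed back to the model. The `run_marked_chunks` helper and the regex are my own assumptions rather than anything from the article, and bare `exec` is unsafe outside a sandbox.

```python
import contextlib
import io
import re

FENCE = "`" * 3  # built at runtime so this example can sit inside a fenced block

# Matches a fenced python chunk and captures its body.
CHUNK_RE = re.compile(FENCE + r"python\s*\n(.*?)" + FENCE, re.DOTALL)


def run_marked_chunks(reply: str, marker: str = "# | run") -> list[str]:
    """Execute fenced python chunks whose first line is `marker`; return their stdout."""
    outputs = []
    for match in CHUNK_RE.finditer(reply):
        code = match.group(1)
        lines = code.strip().splitlines()
        if not lines or lines[0].strip() != marker:
            continue  # unmarked chunks are shown to the user, not executed
        buffer = io.StringIO()
        with contextlib.redirect_stdout(buffer):
            exec(code, {})  # UNSAFE: a real setup would need a sandbox
        outputs.append(buffer.getvalue())
    return outputs


# Stand-in for a model reply containing one marked chunk.
reply = "Here you go:\n" + FENCE + "python\n# | run\nprint(1 + 1)\n" + FENCE
print(run_marked_chunks(reply))
```

The captured output plays the same role as the tool-result string in JSON function calling; the difference is that the model writes arbitrary code instead of filling in arguments for a predeclared function.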

"But this standard is probably much worse than simply telling your LLM to use a markdown code chunk with a special #"

yeah, it's all completions under the hood anyway. function calling / MCP is a neat trick though.
