𝗔𝗜 𝗔𝗴𝗲𝗻𝘁’𝘀 𝗠𝗲𝗺𝗼𝗿𝘆 is the most important piece of 𝗖𝗼𝗻𝘁𝗲𝘅𝘁 𝗘𝗻𝗴𝗶𝗻𝗲𝗲𝗿𝗶𝗻𝗴. This is how we define it 👇

In general, an agent's memory is anything we provide via context in the prompt passed to the LLM that helps the agent plan and react better, given past interactions or data that is not immediately available.

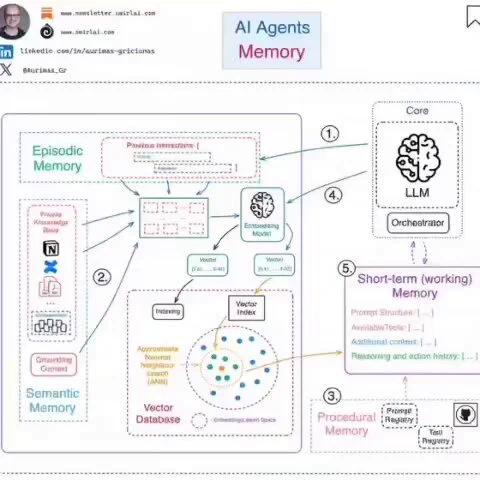

It is useful to group the memory into four types:

𝟭. 𝗘𝗽𝗶𝘀𝗼𝗱𝗶𝗰 - This type of memory contains past interactions and actions performed by the agent. After an action is taken, the application controlling the agent stores it in some kind of persistent storage so that it can be retrieved later if needed. A good example is using a vector database to store the semantic meaning of the interactions.

𝟮. 𝗦𝗲𝗺𝗮𝗻𝘁𝗶𝗰 - Any external information that is available to the agent and any knowledge the agent should have about itself. You can think of this as context similar to that used in RAG applications. It can be internal knowledge available only to the agent, or grounding context that isolates part of the internet-scale data for more accurate answers.

𝟯. 𝗣𝗿𝗼𝗰𝗲𝗱𝘂𝗿𝗮𝗹 - This is systemic information such as the structure of the System Prompt, available tools, guardrails, etc. It is usually stored in Git and in Prompt and Tool Registries.

𝟰. Occasionally, the agent application would pull information from long-term memory and store it locally if it is needed for the task at hand.

𝟱. All of the information pulled from long-term memory or stored in local memory is called short-term or working memory. Compiling all of it produces the prompt that is passed to the LLM, and the LLM's response determines the further actions to be taken by the system.

We usually label 1. - 3. as Long-Term memory and 5. as Short-Term memory.
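
To make the flow from 1. to 5. concrete, here is a minimal Python sketch. Everything in it is an assumption for illustration: the `LongTermMemory` class, the `retrieve` and `build_prompt` helpers, and the sample data are hypothetical, and the word-overlap scoring is only a toy stand-in for the embedding similarity search a vector database would provide.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class LongTermMemory:
    """Hypothetical in-memory stand-ins for the three long-term stores."""
    episodic: list[str] = field(default_factory=list)          # 1. past interactions and actions
    semantic: list[str] = field(default_factory=list)          # 2. external and self knowledge
    procedural: dict[str, str] = field(default_factory=dict)   # 3. system prompt, tools, guardrails

    def record_action(self, action: str) -> None:
        # After the agent acts, the controlling application persists the action.
        self.episodic.append(action)


def _overlap(query: str, text: str) -> int:
    # Toy relevance score: number of shared lowercase words.
    # Assumption: a real system would compare embeddings instead.
    return len(set(query.lower().split()) & set(text.lower().split()))


def retrieve(items: list[str], query: str, k: int = 2) -> list[str]:
    # 4. Pull only the long-term entries relevant to the task at hand.
    scored = [(_overlap(query, item), item) for item in items]
    ranked = sorted((p for p in scored if p[0] > 0), key=lambda p: p[0], reverse=True)
    return [item for _, item in ranked[:k]]


def build_prompt(memory: LongTermMemory, task: str) -> str:
    # 5. Working (short-term) memory: everything compiled into one prompt.
    sections = [
        memory.procedural.get("system_prompt", ""),
        "Tools: " + memory.procedural.get("tools", "none"),
        "Relevant knowledge:\n" + "\n".join(retrieve(memory.semantic, task)),
        "Relevant past actions:\n" + "\n".join(retrieve(memory.episodic, task)),
        "Task: " + task,
    ]
    return "\n\n".join(sections)


memory = LongTermMemory(
    semantic=["Refund policy: refunds are allowed within 30 days.", "Shipping takes 5 days."],
    procedural={"system_prompt": "You are a support agent.", "tools": "lookup_order"},
)
memory.record_action("Looked up order 123 for a refund request.")
memory.record_action("Sent a shipping update.")

prompt = build_prompt(memory, "customer wants refund")
print(prompt)
```

Only the refund-related entries make it into the prompt here, which is the point of step 4: working memory holds what the current task needs, not the whole long-term store.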

And that is it! The rest is all about how you architect the topology of your Agentic Systems.

Do you have any war stories from managing an Agent’s memory? Let me know in the comments 👇

#AI #LLM #MachineLearning

Nov 3, 2025 · 12:18 PM UTC