Giovanni Paolini retweeted

We’re pleased to announce #ItaLean2025: Bridging Formal Mathematics and AI, an international conference dedicated to @LeanProver, Formal Mathematics, and AI4Math.

📍 University of Bologna

🗓 9–12 December 2025

Proudly supported by @HarmonicMath.

#LeanLang #FormalMath #AI4Math

Giovanni Paolini retweeted

How important is the quality, diversity, and complexity (QDC) of synthetic data for LLM performance? What effect does QDC data composition have on self-improvement?

We just released a comprehensive survey discussing these questions (and many more) 🧵

Giovanni Paolini retweeted

🚨Excited to present our work on how unnecessary truncations in conventional LLM pre-training can hurt a model's performance, and how we mitigate this without overhead.

‼️Today 7/23 1:30-3pm at Hall C #802‼️

Reach out if you want to chat about LLMs for code. #ICML2024 #icml24

🚀Introducing "Fewer Truncations Improve Language Modeling" at #ICML2024

We tackle a fundamental issue in LLM pre-training: docs are often broken into pieces. Such truncation hinders the model from learning to compose logically coherent and factually grounded content.

👇🧵1/n

We used AI to develop insights into the game of #7WondersDuel. We also proposed ideas to reduce the first-player advantage.

Our AI has already played against some of the best players in the world. Check out our past live streams at piped.video/playlist?list=PL… and stay tuned for more events!

Our short paper titled "Learning to Play 7 Wonders Duel Without Human Supervision" was accepted at the Conference on Games 2024!

(joint with @lormores, Francesco Veneziano, and @SashaIr93)

arxiv.org/pdf/2406.00741

2024.ieee-cog.org/

#7WondersDuel #BoardGame #AIvsHuman

Giovanni Paolini retweeted

AWS presents Fewer Truncations Improve Language Modeling

Their packing algorithm achieves superior performance (e.g., a relative +4.7% on reading comprehension) and reduces closed-domain hallucination by up to 58.3%.

arxiv.org/abs/2404.10830

Giovanni Paolini retweeted

Follow us at piped.video/kkgxMZiXsDo

Giovanni Paolini retweeted

Not even God can sink the Subotto, and not even Covid can stop the #24oresns!

Giovanni Paolini retweeted

The SNS machine room was feeling the pressure of this #24oresns and decided to blow up.

But the 24-hour event is starting regardless, and we even have a live stream!

piped.video/kkgxMZiXsDo

Giovanni Paolini retweeted

This could be the last 24-hour event for some of our veterans. Will @Zeus1865 come back from the States for one last set of ten? What will become of @SashaIr93? We'll find out next year!

Giovanni Paolini retweeted

arxiv.org/abs/1811.08403v1

G Paolini

Shellability of generalized Dowling posets

Giovanni Paolini retweeted

A reminder to all fans: the #24oresns starts tonight at 22:00!

Giovanni Paolini retweeted

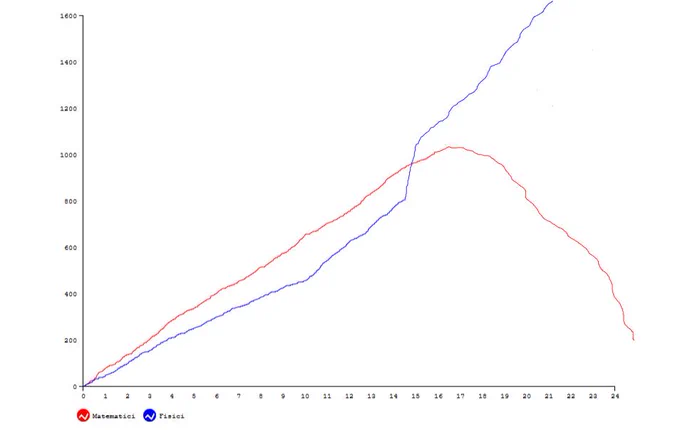

Will the mathematicians manage to overturn Timone's prediction? We'll find out starting at 22:00 tonight!

Giovanni Paolini retweeted

This year the work is well ahead of schedule: the photocells stopped working a day earlier than usual.

Giovanni Paolini retweeted

The Normale team is in the world finals in Beijing: the first Italian team after forty years of attempts. @Corriere covers it today. For a better view: bit.ly/2zfZOSW