📺 Bilingual Reporter @abc7newsbayarea 🏆 Emmy Award Winning Journalist 📍San Francisco 🌉 from Barranquilla 🇨🇴 Telling stories on 📺 & 📲

📍San Francisco 🌉

Joined November 2024

- Tweets 6,384

- Following 5,587

- Followers 186

- Likes 4,859

Luz Peña retweeted

🚨 China just built Wikipedia's replacement and it exposes the fatal flaw in how we store ALL human knowledge.

Most scientific knowledge compresses reasoning into conclusions. You get the "what" but not the "why." This radical compression creates what researchers call the "dark matter" of knowledge: the invisible derivational chains connecting every scientific concept.

Their solution is insane: a Socrates AI agent that generates 3 million first-principles questions across 200 courses. Each question gets solved by MULTIPLE independent LLMs, then cross-validated for correctness.

The result? A verified Long Chain-of-Thought knowledge base where every concept traces back to fundamental principles.

But here's where it gets wild... they built the Brainstorm Search Engine that does "inverse knowledge search." Instead of asking "what is an Instanton," you retrieve ALL the reasoning chains that derive it: from quantum tunneling in double-well potentials to QCD vacuum structure to gravitational Hawking radiation to breakthroughs in 4D manifolds.

They call this the "dark matter" of knowledge finally made visible.

SciencePedia now contains 200,000 entries spanning math, physics, chemistry, biology, and engineering. Articles synthesized from these LCoT chains have 50% FEWER hallucinations and significantly higher knowledge density than a GPT-4 baseline.

The kicker? Every connection is verifiable. Every reasoning chain is checked. No more trusting Wikipedia's citations; you see the actual derivation from first principles.

This isn't just better search. It's externalizing the invisible network of reasoning that underpins all science.

The "dark matter" of human knowledge just became visible.

Luz Peña retweeted

Trump has no plan and no idea how the American insurance and medical system work.

In China, a heart stent procedure costs about $1,300 (that’s less than one month’s salary for most people there); in America it’s $35,000 plus (that’s roughly 6-12 months of the average American salary).

Without health insurance, most Americans only have two options:

Die at home

Or

Go bankrupt for medical bills.

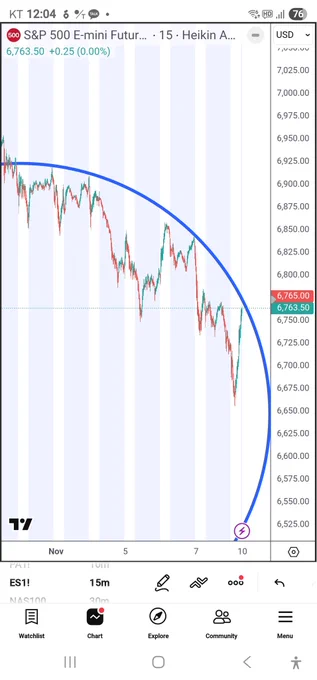

Trump is also on his own with the filibuster thing and is not negotiating with Democrats. The longer he insists on his own way, the higher the chance of a market crash like March 2020. And on the charts we are heading in that direction in a couple of weeks.

Luz Peña retweeted

Daughter: I finally had a feeling of revenge and relief today.

Me: what happened honey? You didn’t hurt anyone did you?

Daughter: No, a couple of kids from GATE (Gifted and Talented Education program) have always boasted they never need to study and they still stay in our honors class. And today, our science teacher gave a pop quiz. Guess what most of them got? 30%

Me: oh that must be a very hard pop quiz. How did you do?

Daughter: I got 85%

Luz Peña retweeted

Weekly sentiment poll time!

The next 4-5% move in the $SPX will be…..

64%

Up. To around 6,950

36%

Down. To around 6,350

2,834 votes • Final results

Luz Peña retweeted

Trade risk for Monday. Yesterday $SPY dipped below its 50 dma by $3 then recovered. Chants of “lows are in for November!” filled the feed on X. (Lots of them). Not so fast. If this shutdown isn’t resolved this weekend & there’s further escalation of the impasse, Monday could yield a complete reversal of Friday’s reversal. I currently have no option positions in my 2 trading accounts. I only took a small position in $MSFT at the close.

Luz Peña retweeted

Agree.

I started charting and have been a student of the market since 2016. I've never seen the promotion of FOMO setups and complacency at this level.

People used to preach what you read in the trading books.

Do not chase if you missed the initial breakout entry. No FOMO.

If you missed the dip on the backtest entry, move on to another setup. No FOMO.

If your trade is invalidated, then move on and wait for another setup with stronger elements.

#trading #Discipline

Luz Peña retweeted

Big Picture View: On Oct 24th #ES_F broke out of a 3-week triangle & squeezed. We sold all this week inside a bull flag & backtested it at 6685-90

Plan next week: As long as 6728-32 (6685-90 lowest) holds, 6806 flag resistance is next. Breakout, then 6851, 6899+

If 6685 fails, new lows at 6632

Luz Peña retweeted

$SPY The most sophisticated charting isn't needed every time. But these are a couple of notable near-term basic top and bottom levels to watch for on the hourly.

Luz Peña retweeted

People think only 20% down sounds manageable until they live through it.

A -20% drop doesn’t just shave numbers off charts. It drains liquidity, breaks weak balance sheets, cracks regional banks further and forces margin selling. That’s not a calm bear market uptrend. That’s where confidence quietly dies before panic begins.

Trends don’t end with fear. They end with disbelief.

Luz Peña retweeted

Maybe it is the bottom, but there's no evidence of that yet. $QQQ still making a pattern of lower highs and lower lows below a declining 5 DMA. Until that pattern is broken, you have to assume it continues.

Luz Peña retweeted

Market stopped in the most ambiguous place imaginable. Deal? Up we go. No deal? Of course we broke trend and now we dump.