A MUST-read interview with a high-ranking $MSFT employee on data centers and what is happening right now ($NVDA/$AMD, liquid cooling, and HDD):

1. The challenges that $MSFT is having right now are energy and liquid cooling. To improve its goodwill with municipalities, $MSFT is setting up wastewater treatment facilities near its data centers, which also benefits the municipalities, not just $MSFT.

2. He mentions that they have been deploying a lot of $NVDA GB200s lately, but not as many as $META or X. There were some design challenges initially, but right now there is a pretty good uptick in GB200 usage among many of their customers. By and large, H100s are probably still their biggest pool.

3. They are seeing a slowdown in training compared to inferencing. Over the last 3-4 months, there has been increased interest in cost savings with inference. $MSFT has built some toolkits to help convert CUDA models to $AMD's ROCm so that you can run them on an $AMD 300X, and they are getting a lot of inquiries about $AMD's path and the 400X and 450X: »We're actually working with AMD on that to see what we can do to maximize that.«

4. According to him, $MSFT hasn't really brushed off OpenAI, but OpenAI is partnering with others and trying to get as much compute as it can. He questions how financially sustainable that is, as OpenAI is still hemorrhaging money, although its balance sheet is actually getting better month over month.

5. He doesn't think that you can overbuild capacity at this point, as it takes time for data centers to be set up. He believes the tipping point of overbuild will be in 2029 or 2030, at least according to their projections.

6. He also gives some clarity as

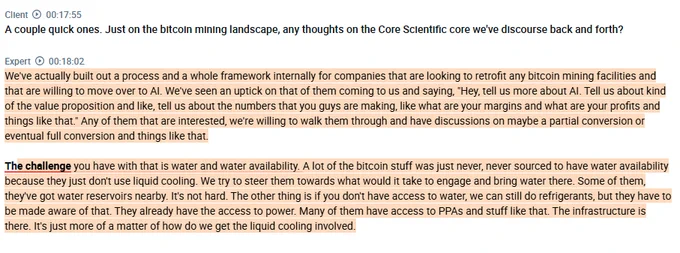

$MSFT is open to working with former bitcoin miners who want to transform to AI. Still, the biggest challenge with them is water and its availability, as many of them do not require liquid cooling for bitcoin mining.

7. He does mention that there is an HDD shortage because a few years ago, many HDD manufacturers cut back production to focus on SSDs and ultra SSDs. That being said, there is a ceiling on what $MSFT's Azure is willing to pay for hard drives, and they are pushing back against the likes of Seagate, Western Digital, Toshiba, and Samsung. He believes capacity is being added and that supply will improve in the first half of 2026.

Found on @AlphaSenseInc