(1/10) Excited to share one of the most elegant projects I’ve worked on: Parallelizing Linear Transformers with the Delta Rule over Sequence Length! 🎉

📄 Published at NeurIPS ’24

📍 Catch my poster in person:

NeurIPS East Exhibit Hall A-C #2009

🗓️ Fri, Dec 13 | 4:30–7:30 p.m.

Dec 9, 2024 · 11:55 AM UTC

(2/10) 📜 Paper: arxiv.org/abs/2406.06484

🤖 Model: huggingface.co/fla-hub

I’ve written a 3-part blog series about DeltaNet!

📖 Part I: The Model

sustcsonglin.github.io/blog/…

📖 Part II: The Algorithm

sustcsonglin.github.io/blog/…

📖 Part III: The Neural Architecture

sustcsonglin.github.io/blog/…

(3/10) Linear attention models are efficient alternatives to softmax Transformers, yet they struggle with in-context associative recall. DeltaNet addresses this with an elegant update rule:

1️⃣ Query the memory with the current key

2️⃣ Retrieve the old value stored under that key

3️⃣ A learned writing strength β decides how much the new value overwrites the old one

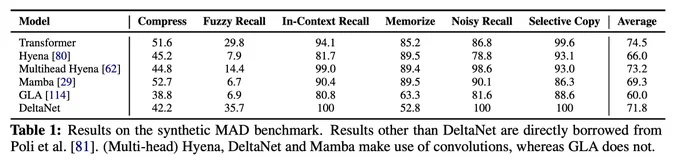
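For readers who want the update in code, here is a minimal NumPy sketch (an illustration, not the paper's kernel). It assumes a memory matrix S of shape (d_v, d_k), unit-norm keys, and a scalar β in (0, 1]; the function names are hypothetical. The three steps collapse to S_t = S_{t−1} − β_t (S_{t−1}k_t − v_t) k_tᵀ. The usage lines write two values under the same key and contrast the result with a purely additive linear-attention write.

```python
import numpy as np


def delta_rule_step(S, k, v, beta):
    """One delta-rule write (hypothetical helper).

    S: (d_v, d_k) memory, k: (d_k,) key, v: (d_v,) value,
    beta: scalar writing strength in (0, 1].
    """
    v_old = S @ k                            # 1-2) query memory with k, read the old value
    v_new = beta * v + (1.0 - beta) * v_old  # 3) beta blends the new and old values
    # Erase the old association under k and write the blended value instead.
    return S - np.outer(v_old, k) + np.outer(v_new, k)


def linear_attention_step(S, k, v):
    """Vanilla linear attention, for comparison: a purely additive write."""
    return S + np.outer(v, k)


rng = np.random.default_rng(0)
k = rng.standard_normal(4)
k /= np.linalg.norm(k)                       # unit-norm key (assumed)
v1 = np.array([1.0, 2.0, 3.0])
v2 = np.array([-1.0, 0.0, 5.0])

S_delta = np.zeros((3, 4))
S_lin = np.zeros((3, 4))
for v in (v1, v2):                           # write two values under the same key
    S_delta = delta_rule_step(S_delta, k, v, beta=1.0)
    S_lin = linear_attention_step(S_lin, k, v)

print(S_delta @ k)  # approximately v2: the old value was overwritten
print(S_lin @ k)    # approximately v1 + v2: the two writes interfere
```

With β = 1 the delta rule retrieves the latest value under a key, while the additive write returns the sum of both values: one intuition for why plain linear attention struggles with associative recall.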

(4/10) Our experiments show DeltaNet excels at in-context recall benchmarks (e.g., MQAR, MAD), outperforming other subquadratic models. But its original training algorithm was fully recurrent (token by token), which made it inefficient to train.

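(5/10) We rewrote DeltaNet as a linear recurrence in its memory state, S_t = S_{t−1}(I − β_t k_t k_tᵀ) + β_t v_t k_tᵀ, whose steps compose with associative ops (matmul, +). A naive parallel scan would store a d×d matrix per token, so we use a chunk-wise algorithm to keep memory and compute costs down.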
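The linear-recurrence view in 5/10 can be sketched as an associative scan (a NumPy illustration under assumed shapes; `token_map`, `combine`, and `sequential_state` are hypothetical names). Each token becomes an affine map S ↦ S A_t + B_t with A_t = I − β_t k_t k_tᵀ and B_t = β_t v_t k_tᵀ, and composing these maps is associative, so they can be combined in any bracketing. Note that every scan element carries a full d_k × d_k matrix A_t: that per-token memory cost is what a chunkwise algorithm avoids.

```python
from functools import reduce

import numpy as np


def token_map(k, v, beta):
    """Per-token affine map S -> S @ A + B for the delta rule:
    A = I - beta k k^T (d_k x d_k), B = beta v k^T (d_v x d_k)."""
    A = np.eye(k.shape[0]) - beta * np.outer(k, k)
    B = beta * np.outer(v, k)
    return A, B


def combine(first, second):
    """Compose two maps (apply `first`, then `second`). Associative, since
    (S @ A1 + B1) @ A2 + B2 == S @ (A1 @ A2) + (B1 @ A2 + B2)."""
    A1, B1 = first
    A2, B2 = second
    return A1 @ A2, B1 @ A2 + B2


def sequential_state(K, V, betas):
    """Reference: run the delta rule token by token from S_0 = 0."""
    S = np.zeros((V.shape[1], K.shape[1]))
    for k, v, b in zip(K, V, betas):
        S = S - b * np.outer(S @ k - v, k)
    return S


rng = np.random.default_rng(0)
T, d_k, d_v = 6, 4, 3
K = rng.standard_normal((T, d_k))
K /= np.linalg.norm(K, axis=1, keepdims=True)  # unit-norm keys (assumed)
V = rng.standard_normal((T, d_v))
betas = rng.uniform(0.1, 0.9, size=T)

maps = [token_map(k, v, b) for k, v, b in zip(K, V, betas)]
A_all, B_all = reduce(combine, maps)  # each element holds a d_k x d_k matrix
S_T = B_all                           # since S_0 = 0, S_T = 0 @ A_all + B_all
```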

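(6/10) The core trick? A WY representation for products of (generalized) Householder matrices: ∏_t (I − β_t k_t k_tᵀ) = I − Σ_t w_t k_tᵀ, so we store vectors instead of d×d matrices. The cumprod becomes a cumsum, which makes a chunkwise algorithm possible.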
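A small NumPy check of the WY identity from 6/10 (an illustrative sketch, not the paper's kernel; the function names are hypothetical). The vectors follow the recurrence w_t = β_t (k_t − Σ_{i<t} w_i (k_iᵀ k_t)), so only T vectors of size d are stored, and every prefix product is just a prefix sum of rank-1 terms.

```python
import numpy as np


def wy_vectors(K, betas):
    """Return W (T, d) with prod_t (I - beta_t k_t k_t^T) == I - W.T @ K.

    Recurrence: w_t = beta_t * (k_t - sum_{i<t} w_i (k_i . k_t)).
    """
    T, d = K.shape
    W = np.zeros((T, d))
    for t in range(T):
        # K[:t] @ K[t] holds the dot products k_i . k_t for i < t.
        W[t] = betas[t] * (K[t] - W[:t].T @ (K[:t] @ K[t]))
    return W


def naive_product(K, betas):
    """Reference: explicit cumulative product of d x d matrices."""
    d = K.shape[1]
    P = np.eye(d)
    for k, b in zip(K, betas):
        P = P @ (np.eye(d) - b * np.outer(k, k))
    return P


rng = np.random.default_rng(1)
T, d = 8, 5
K = rng.standard_normal((T, d))
K /= np.linalg.norm(K, axis=1, keepdims=True)  # unit-norm keys (assumed)
betas = rng.uniform(0.1, 0.9, size=T)

W = wy_vectors(K, betas)    # T x d numbers instead of d x d products
P_wy = np.eye(d) - W.T @ K  # sum of rank-1 terms: the "cumprod -> cumsum" view
```

Because w_t depends only on earlier keys, truncating W after r rows gives the product of the first r transitions, which is exactly what a chunkwise algorithm needs inside each chunk.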

(7/10) The final chunkwise DeltaNet form mirrors chunkwise linear attention and is dominated by matrix multiplications, so it maps well onto tensor-core GPUs and trains fast.

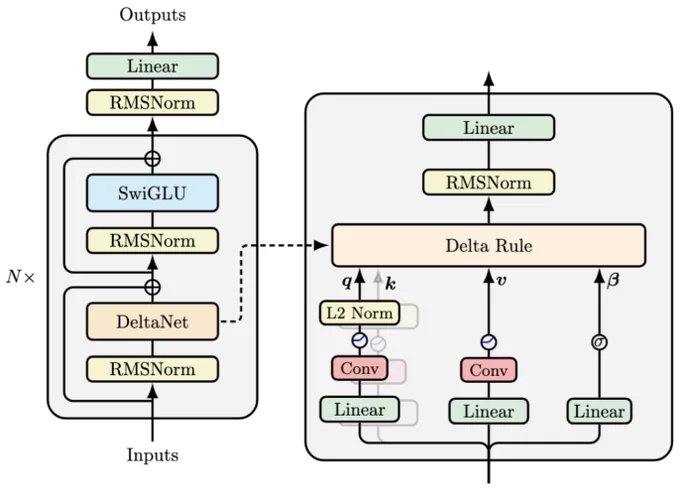
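Putting 5/10 to 7/10 together, here is a compact NumPy sketch of a chunkwise forward pass, checked against the token-by-token recurrence. It is illustrative only: the names and the `chunk_size` default are hypothetical, and a practical implementation would run these steps as batched GPU kernels. Within each chunk, the WY vectors W and U come from a small unit lower-triangular system, and the output O = Q Sᵀ + tril(Q Kᵀ)(U − W Sᵀ) has the same shape as chunkwise linear attention, with V replaced by the corrected values U − W Sᵀ.

```python
import numpy as np


def delta_rule_recurrent(Q, K, V, betas):
    """Reference: token-by-token delta rule, o_t = S_t q_t."""
    S = np.zeros((V.shape[1], K.shape[1]))
    O = np.zeros((K.shape[0], V.shape[1]))
    for t in range(K.shape[0]):
        S = S - betas[t] * np.outer(S @ K[t] - V[t], K[t])
        O[t] = S @ Q[t]
    return O


def delta_rule_chunkwise(Q, K, V, betas, chunk_size=4):
    """Chunkwise form: per chunk, a few matmuls and one small triangular
    system, then a single state update for the next chunk."""
    T, d_k = K.shape
    S = np.zeros((V.shape[1], d_k))  # state entering the current chunk
    O = np.zeros((T, V.shape[1]))
    for start in range(0, T, chunk_size):
        sl = slice(start, min(start + chunk_size, T))
        q, k, v, b = Q[sl], K[sl], V[sl], betas[sl]
        C = k.shape[0]
        # Strictly lower-triangular A[r, i] = beta_r (k_r . k_i) for i < r.
        A = np.tril(b[:, None] * (k @ k.T), -1)
        # WY vectors for the chunk: (I + A) W = diag(beta) K, (I + A) U = diag(beta) V.
        W = np.linalg.solve(np.eye(C) + A, b[:, None] * k)
        U = np.linalg.solve(np.eye(C) + A, b[:, None] * v)
        U_eff = U - W @ S.T  # correct values by what the incoming state already predicts
        # Inter-chunk term plus causal intra-chunk term (mask includes the diagonal).
        O[sl] = q @ S.T + np.tril(q @ k.T) @ U_eff
        S = S + U_eff.T @ k  # state for the next chunk
    return O


rng = np.random.default_rng(2)
T, d_k, d_v = 10, 6, 3
Q = rng.standard_normal((T, d_k))
K = rng.standard_normal((T, d_k))
K /= np.linalg.norm(K, axis=1, keepdims=True)  # unit-norm keys (assumed)
V = rng.standard_normal((T, d_v))
betas = rng.uniform(0.1, 0.9, size=T)

O_ref = delta_rule_recurrent(Q, K, V, betas)
O_chunk = delta_rule_chunkwise(Q, K, V, betas, chunk_size=4)  # chunks of 4, 4, 2
```

Every chunk size should reproduce the recurrent outputs up to floating-point error; larger chunks trade more intra-chunk matmul work for fewer sequential state updates.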

(8/10) We modernized DeltaNet with enhancements like short convolution, SiLU activation, and QK/output normalization—details in my blog series linked above!

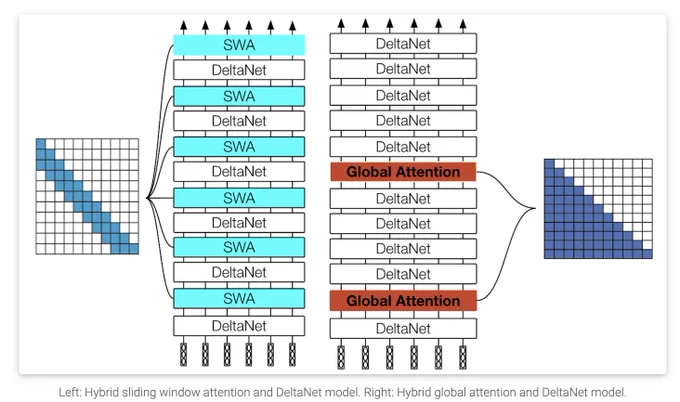
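A rough NumPy sketch of the query/key pre-processing mentioned in 8/10: a short causal depthwise convolution, then SiLU, then L2 normalization. The ordering, the kernel size of 4, and all function names are assumptions for illustration; see the blog series for the actual architecture. The usage lines also check that the convolution stays causal.

```python
import numpy as np


def silu(x):
    """SiLU / swish activation: x * sigmoid(x)."""
    return x / (1.0 + np.exp(-x))


def short_causal_conv(x, weight):
    """Depthwise causal 1D convolution (hypothetical helper).

    x: (T, d) inputs, weight: (kernel, d) per-channel filters.
    out[t] depends only on x[t - kernel + 1 .. t] (zero-padded on the left).
    """
    kernel, d = weight.shape
    padded = np.concatenate([np.zeros((kernel - 1, d)), x], axis=0)
    out = np.empty_like(x)
    for t in range(x.shape[0]):
        out[t] = np.sum(padded[t:t + kernel] * weight, axis=0)
    return out


def l2_normalize(x, eps=1e-6):
    """Scale each row to unit L2 norm, guarding against division by zero."""
    return x / np.maximum(np.linalg.norm(x, axis=-1, keepdims=True), eps)


def qk_feature_map(x, conv_weight):
    """Hypothetical q/k pre-processing: short conv -> SiLU -> L2 norm."""
    return l2_normalize(silu(short_causal_conv(x, conv_weight)))


rng = np.random.default_rng(0)
T, d, kernel = 8, 6, 4  # kernel size 4 is an assumption
x = rng.standard_normal((T, d))
w = rng.standard_normal((kernel, d))
k = qk_feature_map(x, w)

x_perturbed = x.copy()
x_perturbed[5] += 10.0  # change only token 5
k_perturbed = qk_feature_map(x_perturbed, w)
```

Unit-norm keys are what the earlier sketches assumed, and they keep each transition I − β k kᵀ from amplifying the state when β is in (0, 1].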

(9/10) DeltaNet performs strongly against other RNN models, excelling at tasks that require in-context recall, but it still underperforms Transformers. Hybrid models that combine DeltaNet with attention layers address this gap!

(10/10) We scaled DeltaNet to 3B parameters and trained it on 1T tokens. Large-scale hybrid models combining DeltaNet with attention are in the works. Stay tuned!