Associate Prof of Applied Physics @Stanford, and departments of Computer Science, Electrical Engineering and Neurobiology. Venture Partner @GeneralCatalyst

Stanford, CA

Joined December 2013

- Tweets 2,859

- Following 533

- Followers 18,761

- Likes 9,590

Surya Ganguli retweeted

Congratulations to @StanfordHAI Associate Director @SuryaGanguli for being selected as a 2025 #AI2050 Senior Fellow by @SchmidtSciences! 🎉

The prestigious fellowship recognizes scholars advancing responsible AI innovation for the benefit of humanity. schmidtsciences.org/schmidt-…

We're excited to welcome 28 new AI2050 Fellows! This 4th cohort of researchers are pursuing projects that include building AI scientists, designing trustworthy models, and improving biological and medical research, among other areas. buff.ly/riGLyyj

Very grateful to @schmidtsciences for being awarded an #AI2050 senior fellowship. And honored to be part of this select 2025 cohort of 7 senior fellows. This award will support our work on a deeper scientific basis for understanding and improving how artificial intelligence learns, computes, reasons, and imagines.

Our work on how to initialize transformers at the edge of chaos so as to improve trainability is now published in @PhysRevE.

See also thread: x.com/SuryaGanguli/status/17…

This initialization scheme was used in OLMo 2:

Researchers analyzed forward signal and gradient back propagation in deep, randomly-initialized transformers and proposed simple, geometrically-meaningful criteria for hyperparameter initialization that ensure the trainability of deep transformers.

🔗 go.aps.org/3WVMa4R

For more info see the excellent thread by @FedeGhimenti:

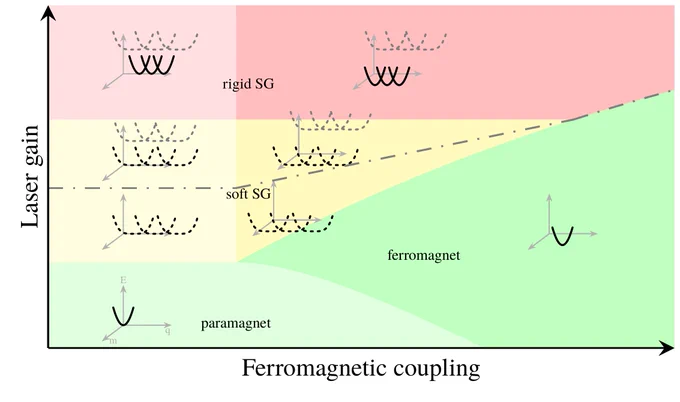

1/ StatPhys helps analog computing! We investigate the interplay between geometry and dynamics of the coherent Ising machine against problems with hidden and planted solutions arxiv.org/abs/2510.21109 w/ @adithya_sriram @atsushi_y1230 @SuryaGanguli @hmabuchi 🧵

Our new paper: "The geometry and dynamics of annealed optimization in the coherent Ising machine with hidden and planted solutions" arxiv.org/abs/2510.21109

How do algorithms like gradient descent negotiate the complex geometry of high-dimensional loss landscapes to find near-global minima, despite the presence of exponentially many bad, high-loss local minima?

We combine statistical physics, replica theory, Kac-Rice formula, and dynamic mean field theory to characterize the geometry and dynamics of gradient descent in high dimensions, revealing how it can avoid exponentially many bad minima!

Work expertly led by @FedeGhimenti @adithya_sriram & @atsushi_y1230 w/ @hmabuchi

Good point. My first paper was on time travel in the Gödel universe. ML was easy to pick up after that :) journals.aps.org/prd/abstrac…

We’ve found a ton of value hiring folks with strong theory backgrounds and little to no production ML experience. One of our members of technical staff got his PhD in pure math/the geometry of black holes and had no prior ML experience. Within days of hiring him we released our first model, Odyssey, and he had already earned himself a co-author position. That’s how invaluable he became.

Surya Ganguli retweeted

I am auditing @SuryaGanguli's incredible course on Explainable AI here at @Stanford and so happy to be a student again surrounded by a tiny sea of junior researchers who're so inquisitive and talented. 💜 No wonder SV is what it is.

The return of the physicists: "CMT-Benchmark: A benchmark for condensed matter theory built by expert researchers." arxiv.org/abs/2510.05228 A set of hard physics problems few AIs can solve. Avg performance across 17 models is 11%. Problems range across topics like:

- Hartree-Fock (HF)
- Exact Diagonalization (ED)
- Density Matrix Renormalization Group (DMRG)
- Quantum Monte Carlo (QMC)
- Variational Monte Carlo (VMC)
- Projected Entangled Pair States (PEPS)
- Statistical Mechanics (SM)
- And more (OTHER).

Table shows performance (percent correct) of models across problem classes. In many cases 0% correct!

General lessons on how to derive hard problems for AI are in the paper.

Was a fun collaboration with 10 condensed matter theory labs across the world.

I recently spoke at the @UM_MICDE (U. of Michigan computational science and engineering) conference. They created a very nice flip book summarizing the event:

online.fliphtml5.com/pkdrz/e…

Some videos of talks: piped.video/playlist?list=PL…

Surya Ganguli retweeted

@E11BIO is excited to unveil PRISM technology for mapping brain wiring with simple light microscopes. Today, brain mapping in humans and other mammals is bottlenecked by accurate neuron tracing. PRISM uses molecular ID codes and AI to help neurons trace themselves. We discovered a new cell barcoding approach exceeding comparable methods by more than 750x. This is the heart of PRISM. We integrated this capability with microscopy and AI image analysis to automatically trace neurons at high resolution and annotate them with molecular features.

This is a key advance towards economically viable brain mapping - 95% of costs stem from neuron tracing. It is also an important step towards democratizing neuron tracing for everyday neuroscience. Solving these problems is critical for curing brain disorders, building safer and human-like AI, and even simulating brain function.

In our first pilot study, we acquired a unique dataset in mouse hippocampus. Barcodes improved the accuracy of tracing genetically labelled neurons by 8x – with a clear path to 100x or more. They also permit tracing across spatial gaps – essential for mitigating tissue section loss in whole-brain scaling. Using molecular annotation, we uncover an intriguing feature of synaptic organization, demonstrating how PRISM can be used for systematic discovery 🧵

This is an exciting endeavor - definitely one to watch! Had interesting conversations with @CarinaLHong about this and their roadmap is great!

Today, I am launching @axiommathai

At Axiom, we are building a self-improving superintelligent reasoner, starting with an AI mathematician.

List of papers: docs.google.com/document/d/1…

Teaching a new course @Stanford this quarter on explainable AI, motivated by neuroscience. I have curated a paper list 4 pages long (link in comment). What are your favorite papers on explainable AI/mechanistic interpretability that I am missing? Please comment or DM. Thanks!

If you want to hurt American competitiveness then this is what you would do.

This new FAQ from the White House makes it clear the $100k fee does apply to cap-exempt organizations.

That includes national labs and other government R&D, nonprofit research orgs, and research universities.

Big threat to US scientific leadership.

whitehouse.gov/articles/2025…

Surya Ganguli retweeted

New faculty position opening @Stanford_ChEMH. Especially interested in candidates with deep expertise and vision for the future of AI/ML/computational models of biomolecules. Please RT

Please apply to our tenure-track faculty position at @Stanford_ChEMH! We are searching for a new colleague working at the interface between computation and molecular sciences. See post below and pls forward widely!

chemh.stanford.edu/opportuni…

Our new paper, "High-capacity associative memory in a quantum-optical spin glass," achieves a 7-fold increase in capacity through a quantum-optical analog of short-term plasticity from neuroscience. Atoms (neurons) couple to motion and photons (synapses)!

arxiv.org/abs/2509.12202

Surya Ganguli retweeted

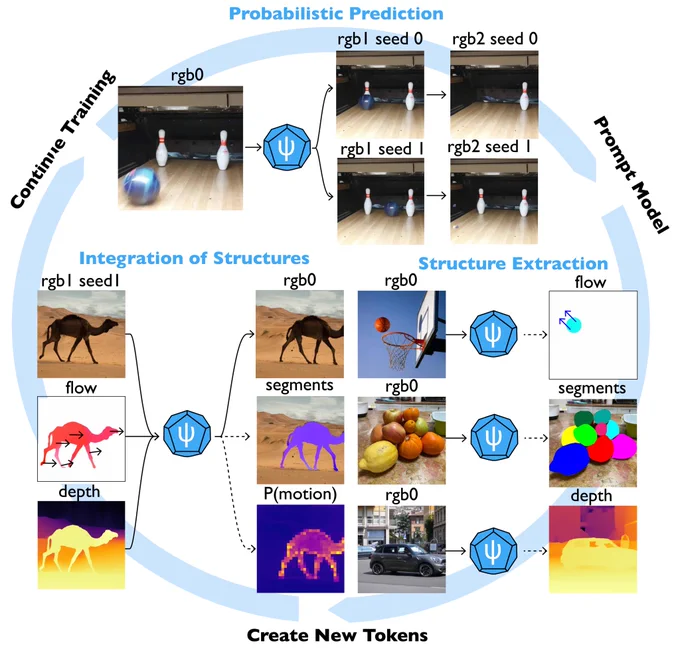

Here is our best thinking about how to make world models. I would apologize for it being a massive 40-page behemoth, but it's worth reading. arxiv.org/pdf/2509.09737