Kind / Bend / HVM / INets / λCalculus

São Paulo

Joined March 2011

- Tweets 16,435

- Following 2,055

- Followers 63,224

- Likes 46,172

Pinned Tweet

RELEASE DAY

After almost 10 years of hard work, tireless research, and a deep dive into the kernels of computer science, I finally realized a dream: running a high-level language on GPUs. And I'm giving it to the world!

Bend compiles modern programming features, including:

- Lambdas with full closure support

- Unrestricted recursion and loops

- Fast object allocations of all kinds

- Folds, ADTs, continuations and much more

To HVM2, a new runtime capable of spreading that workload across 1000's of cores, in a thread-safe, low-overhead fashion. As a result, we finally have a true high-level language that runs natively on GPUs!

Here's a quick demo:

I must change how I work. My setup was made for a different time. All my VIM macros, terminal scripts, keyboard remappings are not so useful now that I spend most of my time writing prompts and managing AIs, and that will only get worse.

I'm clearly being very inefficient. Right now I have:

- 3 terminals with VIM open, calling a thinking AI in-place

- 3 terminals with Codex / Claude Code doing repo-wide work

- 2 pinned Chrome tabs on Gemini and ChatGPT doing research / gathering data that needs web access

And I manually scroll around these to check if they are finished.

More often than not, I lose a lot of time because some AI has ended its work and I didn't notice. Sometimes I lose actual work because I forget I had some VIM tab doing AI stuff.

I still need VIM and I still code directly sometimes (especially on HVM4), but most of the time, I'm not really coding anymore, I'm just managing a bunch of bots. Except I actually suck at this and I never prepared for this, so it is chaotic, unorganized, inefficient.

I think I might spend the next days fundamentally rethinking how I manage my own work. Ideally I should have some monitor where I track all active AI requests. My AIs should have access to sandboxed machines where they can do stuff like browse the web, compile, edit and deploy code, make commits.

I need notifications for when an AI has finished a job.

I need a history of everything that I've done so I don't lose track...

Even my instinct of quickly launching VIM to navigate to a function should probably be replaced by a quick "please show me this function" request, routed to a fast mode agent. Also, having voice involved in such a setup sounds productive
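A minimal sketch of the kind of monitor described above: one place that tracks active AI requests, fires a notification when a job finishes, and keeps a history. Everything here is an assumption for illustration: `JobTracker`, `fake_agent` and the job names are hypothetical, the "agents" are simulated with `asyncio.sleep`, and `notify` just prints where a real setup might send a desktop or phone notification.

```python
import asyncio
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass
class JobTracker:
    """Tracks running agent jobs and keeps a history of finished ones."""
    active: dict = field(default_factory=dict)   # job name -> asyncio.Task
    history: list = field(default_factory=list)  # (finished_at, name, outcome)

    def launch(self, name, coro):
        """Start a job and register a callback that fires when it ends."""
        task = asyncio.ensure_future(coro)
        self.active[name] = task
        task.add_done_callback(lambda t, n=name: self._finished(n, t))
        return task

    def _finished(self, name, task):
        # Move the job from "active" to "history" and notify.
        self.active.pop(name, None)
        if task.cancelled():
            outcome = "cancelled"
        elif task.exception() is not None:
            outcome = f"failed: {task.exception()!r}"
        else:
            outcome = task.result()
        self.history.append((datetime.now(timezone.utc), name, outcome))
        self.notify(name, outcome)

    def notify(self, name, outcome):
        # Placeholder: a real setup would call a notification service here.
        print(f"[done] {name}: {outcome}")


async def fake_agent(reply, seconds):
    """Hypothetical stand-in for a long-running AI request."""
    await asyncio.sleep(seconds)
    return reply


async def demo():
    tracker = JobTracker()
    tracker.launch("repo-refactor", fake_agent("patch ready", 0.05))
    tracker.launch("web-research", fake_agent("3 sources found", 0.01))
    await asyncio.gather(*list(tracker.active.values()))
    await asyncio.sleep(0)  # let any pending done-callbacks run
    return tracker


if __name__ == "__main__":
    asyncio.run(demo())
```

The point of the design is that nothing has to be polled by hand: the done-callback moves each job out of `active` the moment it ends, so "did some AI finish and I didn't notice" becomes a push event rather than something to scroll for.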

if it is expensive to serve, just charge for it. charge as much as needed to cover the costs of setting up an early access link, but do not lock SOTA intelligence privately. given what's at stake (massive competitive advantage), that's the only ethical / responsible thing to do

64%

reasonable

14%

entitled

21%

results

487 votes • Final results

......

I'm deleting my post because people are accusing me of being wrong and lying even though I know for a fact that I'm not, so I'll post it again with proof when I'm allowed to.

ffs

I just wish we had a truly open AI where all the research and models were public domain. Capitalism is important and had its place in history, but what is the point of locking weights when we're on the verge of creating the ultimate automation machine?

I just think that everyone, even those in private companies, would be much richer in the long term, if all research was public and all models were open. Progress would compound multiplicatively and we'd ALL benefit, as a species. Their greed is making them poorer, and they don't even realize it.

Or perhaps I'm just salty for not having access to tech that could fundamentally change my own work and life today, while some people do, just because they do.

I guess that's probably it.

Whatever /:

(nothing groundbreaking / novel, just sharing a small thing)

spent this morning implementing the networking module that will be used by the vibe coded gamedev company, and it works. we can now move smoothly and chase each other even with very high ping.

I'm happy about it :3

just wanted to add a quick fairness note that I had a very good experience with Claude Code today. it was a much simpler / toy project, Claude is definitely not as smart as GPT-5, but the CLI is so much better and more polished. for less dense projects it works really well

Also forgot to mention:

- Bend2 will export to JavaScript / Haskell so you can use it to write normal apps without having to wait for support on Bend's ecosystem

- Bend2 will, sadly, break a promise: "if it can run in parallel, it will run in parallel". That's because this promise is *obviously* incompatible with lazy evaluation (either you wait to see if an expression will be visible, or you reduce it in parallel - can't have both). I still want to offer a full strict mode as a direct update to HVM2 in the future, but time is short and that's not our focus right now ): on the bright side, I believe we'll be able to run lazy mode on GPUs. In practice, I believe this will be much better than full strict parallelism

- our WeFunder campaign is still active but I'm not actively following it, and will close after launch
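The lazy-vs-parallel tension mentioned in the list above can be illustrated with a small sketch. This is plain Python, not Bend or HVM, and every name in it (`Thunk`, `work`, `first`, `run_lazy`, `run_eager_parallel`) is hypothetical. It only shows the trade-off: a lazy evaluator waits to see which expressions are visible and skips the rest, while an eager parallel evaluator starts everything up front and so also pays for work nobody reads.

```python
from concurrent.futures import ThreadPoolExecutor


class Thunk:
    """A delayed computation that runs at most once, only when forced."""

    def __init__(self, fn):
        self._fn = fn
        self._done = False
        self._value = None

    def force(self):
        if not self._done:
            self._value = self._fn()
            self._done = True
            self._fn = None  # drop the closure once evaluated
        return self._value


calls = []  # records which pieces of work actually ran


def work(label, value):
    calls.append(label)
    return value


def first(a, b):
    return a  # b is never visible in the result


def run_lazy():
    """Lazy: only the expression that is actually needed gets reduced."""
    calls.clear()
    a = Thunk(lambda: work("a", 1))
    b = Thunk(lambda: work("b", 2))
    result = first(a, b).force()
    return result, list(calls)


def run_eager_parallel():
    """Eager: both expressions are reduced in parallel, needed or not."""
    calls.clear()
    with ThreadPoolExecutor(max_workers=2) as pool:
        fa = pool.submit(work, "a", 1)
        fb = pool.submit(work, "b", 2)
        result = first(fa, fb).result()
    return result, sorted(calls)


if __name__ == "__main__":
    print(run_lazy())
    print(run_eager_parallel())
```

Both runs return the same value; the difference is that the eager run also evaluated `b`, which is exactly the wasted work a lazy evaluator avoids.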

Quick overview of HOC / HVM / Bend's state:

- about 1 year ago, we launched Bend1

- first lang to run closures + fast obj allocator on GPU

- near-ideal speedup up to 10000+ cores

- based on HVM2, a strict runtime for Interaction Nets

Problems:

- interpretation overhead still significant

- full RTX 4090 to beat 1-core OCaml / JavaScript / etc.

- big practical limitations (int24, no IO, no packages)

- despite Python syntax, it was still hard to use

- turns out most devs can't think recursively

- incompatible with lazy evaluation (not β-optimal!!)

I was disappointed by the problems above. At the same time, I was increasingly optimistic about the application of optimal evaluation to the problem of program synthesis, which is a cornerstone of Symbolic AI - a failed idea, but with a strong feeling of "I can fix it".

I made a decision: throw HVM2 away (💀) and go back to the HVM1 roots, which was based on my "Interaction Calculus", and featured β-optimality. I heavily polished and improved it, resulting in HVM3, a prototype written in Haskell. I then used it to understand and research program synthesis on optimal evaluators. This was HARD, and cost about a year of my life, but results were positive, and our system now beats all published alternatives in efficiency and capabilities.

Now, we're taking all that and solidifying it, by implementing the runtime / compiler in raw C, so it can run as efficiently as possible on our humble Mac Mini cluster (🥹), and serve it to the world via an API.

I expected to launch by October, but there are still some challenges that cost me more time than I anticipated. For one, finding Lean proofs with SupGen requires very careful handling of superpositions, and doing that in C is actually HARD AS HELL - but things are moving steadily and we have a lot done already, and I still expect to launch Bend2 / HVM4 this year or Q1 2026.

Bend2 will have:

- parallel CPU runtime with lazy/optimal mode (!!!)

- 16 / 32 / 64 bit ints, uints and floats (finally)

- arbitrary IO via lightweight C interop (like Zig!)

- no CUDA yet, due to lack of time, very doable though

- most importantly: SupGen integration

SupGen is something new and the main novelty behind Bend2. It is *not* a traditional AI, it is a whole new thing capable of generating code based on examples and specs. I think many (especially those in deep learning) will be caught totally off guard by how much we can accomplish with pure symbolic search, and, more than anything else, I can't wait to watch that reaction
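To give a rough idea of what "generating code based on examples" means, here is a toy brute-force enumerator. It is explicitly not SupGen and uses no superpositions or optimal evaluation; the tiny expression language and the names `LEAVES`, `OPS`, `expressions`, `evaluate` and `synthesize` are all assumptions made up for illustration. It simply tries candidate programs in order and returns the first one that agrees with every input/output example.

```python
from itertools import product

# Hypothetical toy language over one integer variable "x".
LEAVES = ["x", 1, 2]
OPS = ["+", "*", "-"]


def expressions(depth):
    """Yield every expression tree with nesting depth up to `depth`."""
    if depth == 0:
        yield from LEAVES
        return
    smaller = list(expressions(depth - 1))
    yield from smaller  # shallower candidates come first
    for op, lhs, rhs in product(OPS, smaller, smaller):
        yield (op, lhs, rhs)


def evaluate(expr, x):
    """Interpret an expression tree for a given value of x."""
    if expr == "x":
        return x
    if isinstance(expr, int):
        return expr
    op, lhs, rhs = expr
    a, b = evaluate(lhs, x), evaluate(rhs, x)
    if op == "+":
        return a + b
    if op == "*":
        return a * b
    return a - b


def synthesize(examples, max_depth=2):
    """Return the first expression consistent with all (input, output) pairs."""
    for expr in expressions(max_depth):
        if all(evaluate(expr, x) == y for x, y in examples):
            return expr
    return None  # nothing in the search space fits the examples


if __name__ == "__main__":
    # Examples of a "mul2" function: the search settles on x + x.
    print(synthesize([(0, 0), (1, 2), (3, 6)]))
```

This naive search evaluates every candidate from scratch, so it blows up quickly as depth grows. The post's claim is that SupGen gets much further with symbolic search than this kind of enumeration suggests.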

at least it works now, and is much simpler

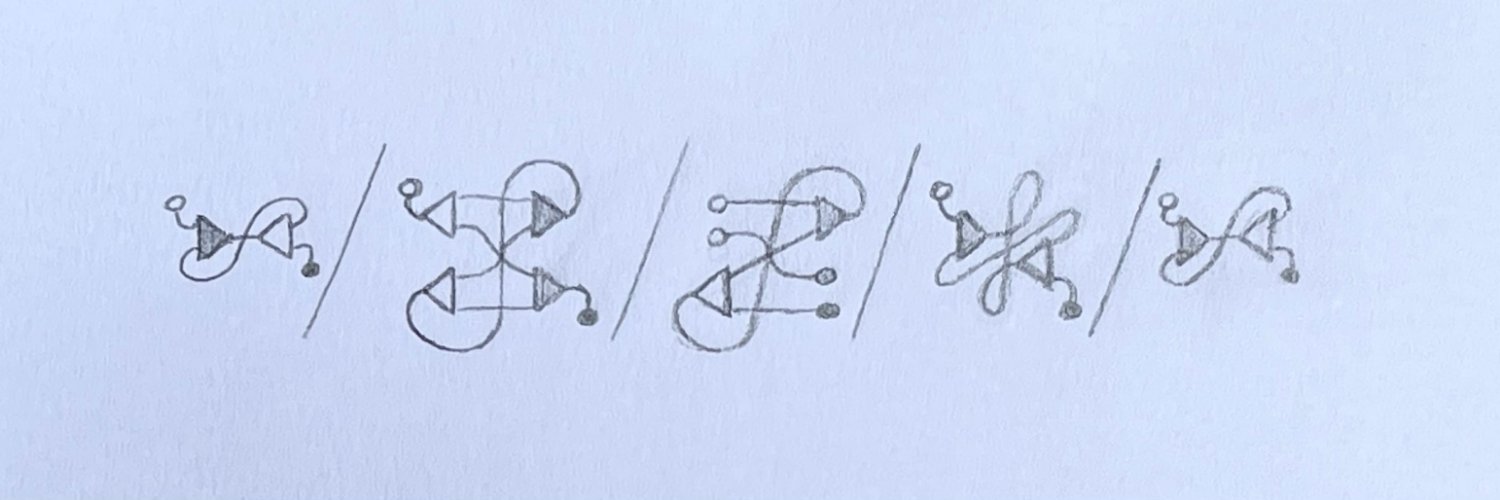

running a "mini-supgen" under compilable mode ↓

(notice it finds mul2 at the end)

bad news: this was really dumb

good news: nobody understands so it doesn't matter

(the entire fork-with-different-labels case is redundant)

also 200 people read this, thought "yeah I have no idea what this means" and still pressed the like button }:

thanks I guess 😭

...

HVM4 now includes a general method to compile Interaction Calculus functions to zero-overhead machine code, including functions with superpositions.

Note that HVM2 (which Bend used originally) always relied on an interpreter. We promised we'd eventually compile it. HVM3 compiled Haskell-like functions only, getting a 100x+ boost in those cases, but still needed to fall back to the "interpreter" when superpositions were involved. Finally, for the first time ever, HVM4 is now capable of running Interaction Calculus functions, even those with superpositions, in full compiled mode, with no overhead.

It is weird how we're pushing Interaction Nets beyond anything that has ever been done before, to the point we don't even have anyone to communicate the progress. The field is all but nonexistent, there are no papers, no conventions, no community. My tweets are basically pushing the edge of what humanity knows about this paradigm. That feels really awkward, and I think this is mostly attributable to it being genuinely unintuitive.

In any case, below is a lazy commit message explaining the last case of this algorithm: how to deal with applying a static (compiled) superposition to a dynamic argument...