CTO/Co-founder @_inception_ai. AI Prof @UCLA. CS PhD @Stanford. Denoising intelligence.

CA

Joined May 2009

- Tweets 990

- Following 521

- Followers 12,223

- Likes 2,689

Pinned Tweet

A few months ago, we started Inception Labs, a new generative AI startup with a rockstar founding team.

At Inception, we are challenging the status quo for language generation. Our first results bring blazing fast speeds at 1000+ tokens/sec while matching the quality of leading speed-optimized frontier LLMs. And all on commodity NVIDIA H100s - an industry first!

Our vision is to extend the frontier of speed, quality, and cost for next-generation language models. Join us!

Elon believes a majority of AI workloads will be diffusion models.

I’d pay close attention to Inception Labs, a team of Stanford professors who are doing foundational work here.

In the history of computing, no single ML architecture has been dominant for more than a decade.

Do we need a re-poll?

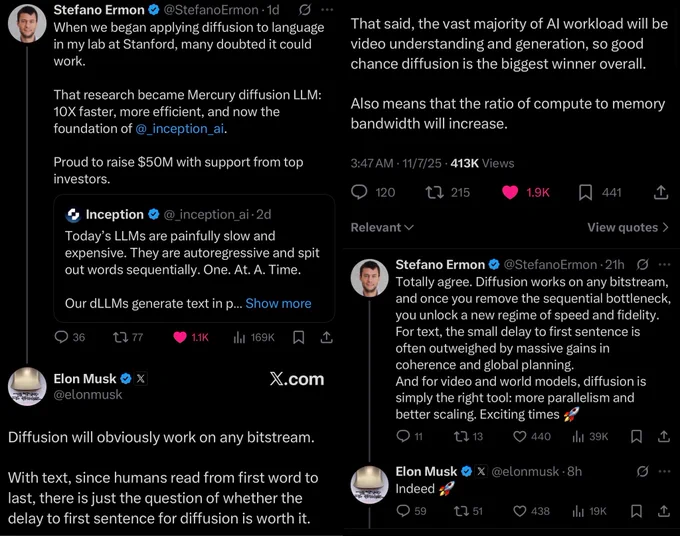

Diffusion will obviously work on any bitstream.

With text, since humans read from first word to last, there is just the question of whether the delay to first sentence for diffusion is worth it.

That said, the vast majority of AI workload will be video understanding and generation, so good chance diffusion is the biggest winner overall.

Also means that the ratio of compute to memory bandwidth will increase.

Experience the magic today with our line of Mercury dLLMs!

Chat: chat.inceptionlabs.ai/

API: platform.inceptionlabs.ai/ (first 10m tokens free)

Mercury is refreshed – with across-the-board improvements in coding, instruction following, math, and knowledge recall.

Start building responsive, in-the-flow AI solutions!

Read more: inceptionlabs.ai/blog/mercur…

Aditya Grover retweeted

Mercury is refreshed – with across-the-board improvements in coding, instruction following, math, and knowledge recall.

Start building responsive, in-the-flow AI solutions!

Read more: inceptionlabs.ai/blog/mercur…

Diffusion LLMs (dLLMs) have come a long way, from an idea in research labs into a cutting-edge tech redefining the frontiers of generative AI.

Excited to announce our $50m seed round led by @MenloVentures and made possible by the tireless efforts of our team @_inception_ai.

Today’s LLMs are painfully slow and expensive. They are autoregressive and spit out words sequentially. One. At. A. Time.

Our dLLMs generate text in parallel, delivering answers up to 10X faster. Now we’ve raised $50M to scale them.

Full story from @russellbrandom in @TechCrunch.

techcrunch.com/2025/11/06/in…

Aditya Grover retweeted

Today’s LLMs are painfully slow and expensive. They are autoregressive and spit out words sequentially. One. At. A. Time.

Our dLLMs generate text in parallel, delivering answers up to 10X faster. Now we’ve raised $50M to scale them.

Full story from @russellbrandom in @TechCrunch.

techcrunch.com/2025/11/06/in…

Thanks @deedydas.

Grateful for the access to an extraordinary group of teachers, friends, and alumni at DPS RK Puram in India.

And Exun in particular is one-of-a-kind group. Many fond memories of trading classes and coursework for programming competitions...Mukesh Kumar's leadership (@ikkumpal) made it all possible!

Welcome Qinqing to @_inception_ai ! So excited to work together (again!) and re-imagine the foundations of generative AI bringing together diffusion LLMs and reinforcement learning.

Hard to see the layoffs at Meta — so many brilliant people and mentors I learned from. I went through those same struggles @tydsh mentioned: the uncertainty, the long nights, the hope things would turn around — and the disappointment before finally deciding to leave.

After two months of rest and reflection, I’m grateful to be joining @_inception_ai to work on diffusion LLMs — continuing my research on discrete diffusion from FAIR and exploring its potential for ultrafast, scalable reasoning in language models. 🚀

Wishing my former teammates all the best as they carry the work forward.

Aditya Grover retweeted

"An hour of planning can save you 10 hours of doing."

✨📝 Planned Diffusion 📝 ✨ makes a plan before parallel dLLM generation.

Planned Diffusion runs 1.2-1.8× faster than autoregressive and an order of magnitude faster than diffusion, while staying within 0.9–5% AR quality.

Aditya Grover retweeted

New paper 📢 Most powerful vision-language (VL) reasoning datasets remain proprietary 🔒, hindering efforts to study their principles and develop similarly effective datasets in the open 🔓.

Thus, we introduce HoneyBee, a 2.5M-example dataset created through careful data curation. It trains VLM reasoners that outperform InternVL2.5/3-Instruct and Qwen2.5-VL-Instruct across model scales (e.g., an 8% MathVerse improvement over QwenVL at the 3B scale). 🧵👇

Work done during my internship at @AIatMeta w/ 🤝 @ramakanth1729, @Devendr06654102, @scottyih, @gargighosh, @adityagrover_, and @kaiwei_chang.

Aditya Grover retweeted

(1/4)In the recent #COLM2025 conference, we presented our work PredGen, an acceleration technique that improves the latency of voice-to-voice chat applications powered by LLM. It leverages free compute available at user input time to perform drafting and text-to-speech synthesis

Sharing Shufan's earlier work on Lavida. Great to see the fantastic growth in the field in such a short time!

A few months ago, @li78658171 released Lavida, the first vision-language model based on discrete diffusion (#NeurIPS2025 spotlight).

And today, Lavida goes Omni! Shufan's latest work on Lavida-O shows dLLMs can serve as an extremely efficient and high-quality backbone for multimodal AI.

Aditya Grover retweeted

(1/n) We are excited to announce LaViDa-O, a state-of-the-art unified diffusion LM for image understanding, generation, and editing. Building on our NeurIPS Spotlight submission LaViDa, LaViDa-O offers up to 6.8x speed compared with AR mdoels with high output quality.

Traditional speculative decoding assumes small drafters than the target model (verifier).

What if we could use a larger draft model to better predict the next token? This only makes sense if the drafter runs faster than the verifier.

In new work led by @danielisrael, we introduce adaptive parallel decoding (APD), a new approach that makes the above possible using intermediate tokens from (large, parallelizable) diffusion LLMs as speculations for autoregressive models. With an enhanced verification scheme that interpolates between drafter and verifier distribution, the approach guarantees great quality and speed.

Also a spotlight paper at #NeurIPS2025!

🔦Adaptive Parallel Decoding (APD) has been accepted as a spotlight paper at @NeurIPSConf ! I thank my collaborators, reviewers, and program organizers for this honor.

A thread for those interested 🧵 (1/n)

Congrats @hbXNov for leading this work!

Aditya Grover retweeted

🔦Adaptive Parallel Decoding (APD) has been accepted as a spotlight paper at @NeurIPSConf ! I thank my collaborators, reviewers, and program organizers for this honor.

A thread for those interested 🧵 (1/n)