OpenAI just dropped their Sora research paper.

As expected, the video-to-video results are flipping spectacular 🪄

A few other gems:

Feb 16, 2024 · 1:51 AM UTC

Another superpower unlocked is the ability to seamlessly blend individual videos together.

Note how the drone transforms into a butterfly as we gradually find ourselves underwater.

Connecting videos is a surprisingly powerful primitive.

Example: Drone POV shots of Jeeps are cool, but how about blending it with another clip of a cheetah?

End result: your Jeep is now being chased by the cheetah, giving me Harold & Kumar vibes 😂

The 3D consistency with long, dynamic camera moves like this continues to impress. So much high-frequency detail, and the scene is just brimming with life.

But it's not perfect. The people are still a little wonky if you pay attention to them, especially the crowd in the distance.

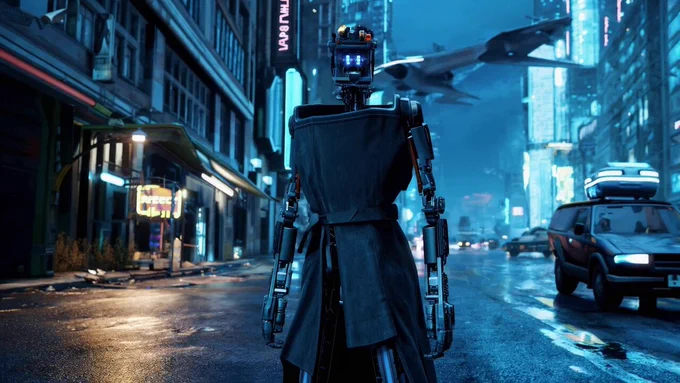

Keeping entities (like this robot character) consistent across scenes is super impressive. Like, how is this not absolutely going to upend how sci-fi VFX is made?

Sure, it's at YouTube quality today. But how soon until it meets the bar for Netflix?

This Minecraft clip was generated entirely by Sora!

"Sora can simultaneously control the player in Minecraft with a basic policy while also rendering the world and its dynamics in high fidelity."

While it's not real-time today, it's a big step towards simulating synthetic 3D worlds we can explore.

I can't help but think of @DavidSHolz's early vision for Midjourney:

'One day consoles will have a giant AI chip, and all the games will be dreams'

Solving AI video itself requires a physics- and geometry-aware generative model.

And while it's not 'real 3D' just yet, we can pull immaculate 3D models out of it.

Can't wait to get hands on with this model. 2024 is off to an amazing start.

Enjoyed this post? You might also enjoy my feed on all things AI and sPaTiaL cOmPuTiNg: @bilawalsidhu