Joined April 2018

hpzhi retweeted

Build a minimalist multi-threaded FTP server in C

- learn socket programming fundamentals

- handle concurrent file transfers with pthreads

- implement basic file serving with sendfile()

perfect for understanding how network file transfers work under the hood!
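As a rough illustration of the sendfile() bullet, here is a minimal Linux-only sketch of the file-serving step. The function name `serve_file` is made up for this example, and a real server would call it from a per-client thread with `out_fd` being the connected data socket; error handling such as retrying on EINTR is left out.

```c
#include <assert.h>
#include <fcntl.h>
#include <sys/sendfile.h>
#include <sys/stat.h>
#include <sys/types.h>
#include <unistd.h>

/* Hypothetical helper: copy the whole file at `path` to `out_fd`
 * using sendfile(), so the data never passes through a user-space
 * buffer. Returns the number of bytes sent, or -1 on any error. */
ssize_t serve_file(int out_fd, const char *path) {
    assert(path != NULL);

    int in_fd = open(path, O_RDONLY);
    if (in_fd < 0)
        return -1;

    struct stat st;
    if (fstat(in_fd, &st) < 0) {
        close(in_fd);
        return -1;
    }

    off_t offset = 0;
    while (offset < st.st_size) {
        /* sendfile() may send fewer bytes than requested; it advances
         * `offset` itself, so we simply loop until the file is done. */
        ssize_t n = sendfile(out_fd, in_fd, &offset,
                             (size_t)(st.st_size - offset));
        if (n <= 0) {
            close(in_fd);
            return -1;
        }
    }

    close(in_fd);
    return (ssize_t)offset;
}
```

In a threaded server, each pthread would typically own one client connection and call something like `serve_file(client_fd, requested_path)` for a download request.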

hpzhi retweeted

llm.c by hand ✍️ C meets Transformer

This combination is perhaps as low as we can get!

Special thanks to @karpathy

--

Part 1: gpt2_forward

Karpathy's llm.c implements the transformer forward step as the following sequence.

(skip) layernorm_forward

1. matmul_forward

2. attention_forward

3. matmul_forward

(skip) residual_forward

(skip) layernorm_forward

4. matmul_forward

5. gelu_forward

6. matmul_forward

(skip) residual_forward

--

Part 2: matmul_forward

B: Batches (of Tokens)

T: Tokens

C: Input Channels

OC: Output Channels
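To make the four dimensions concrete, here is a minimal sketch of a naive matmul_forward loop in C. It is modeled on the straightforward triple loop and assumes row-major layouts that are not spelled out in the post: `inp` is (B, T, C), `weight` is (OC, C), `bias` is (OC) and may be NULL, and `out` is (B, T, OC).

```c
#include <assert.h>
#include <stddef.h>

/* Naive forward pass of a linear layer, one token at a time.
 * Assumed layouts (row-major): inp (B,T,C), weight (OC,C),
 * bias (OC) or NULL, out (B,T,OC). */
void matmul_forward(float *out, const float *inp, const float *weight,
                    const float *bias, int B, int T, int C, int OC) {
    assert(B > 0 && T > 0 && C > 0 && OC > 0);

    for (int b = 0; b < B; b++) {
        for (int t = 0; t < T; t++) {
            const float *x = inp + ((size_t)b * T + t) * C;  /* one token's C inputs  */
            float *y = out + ((size_t)b * T + t) * OC;       /* its OC outputs        */
            for (int o = 0; o < OC; o++) {
                float acc = bias ? bias[o] : 0.0f;
                for (int i = 0; i < C; i++) {
                    /* dot product of the token with row o of weight */
                    acc += x[i] * weight[(size_t)o * C + i];
                }
                y[o] = acc;
            }
        }
    }
}
```

Each of the B*T tokens is a vector of C inputs, and every output channel is one dot product with a weight row plus a bias, which is exactly the calculation one would do by hand.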

--

C programming and matrix multiplication are two of the most important topics. But it is often difficult to get people excited by these topics.

I hope this exercise can help people see further into the LLM black box, and appreciate C programming and matrix multiplication by hand. 😀

---

100% original, made by hand ✍️

Join 45K+ readers of my newsletter: byhand.ai

hpzhi retweeted

C Programming Projects Collection for Beginners!

Here’s what’s inside:

- Notes Manager CLI (C + SQLite)

- SHA-512 Implementation

- Arithmetic Compiler

- Lexical Analyser

- Asteroid Game

- FTP server

- HTTP Server

- UDP Server-Client

- Port Scanner

- Ping Implementation

- Multiplayer Tic-Tac-Toe

- Chat System

hpzhi retweeted

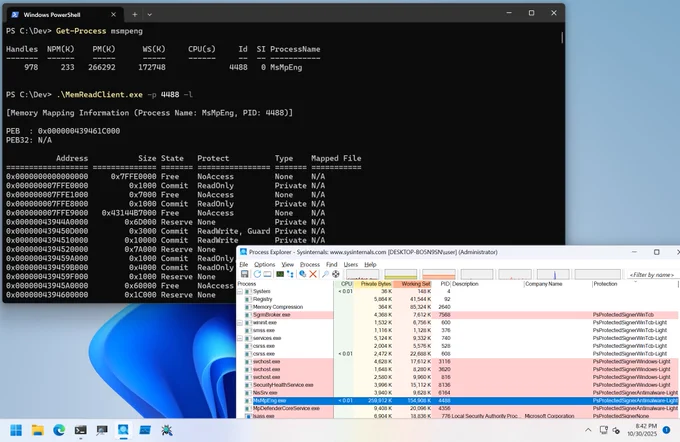

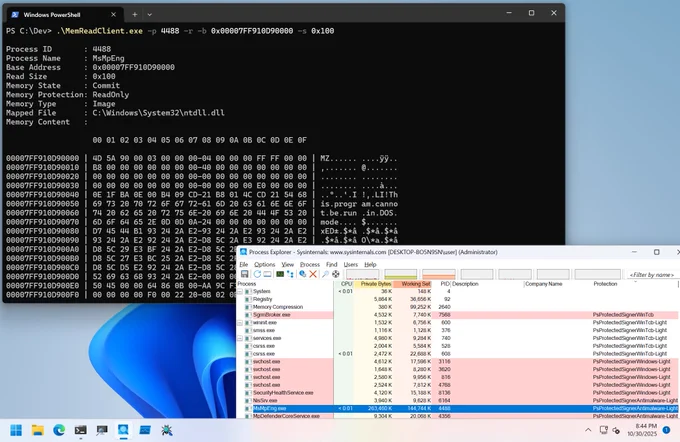

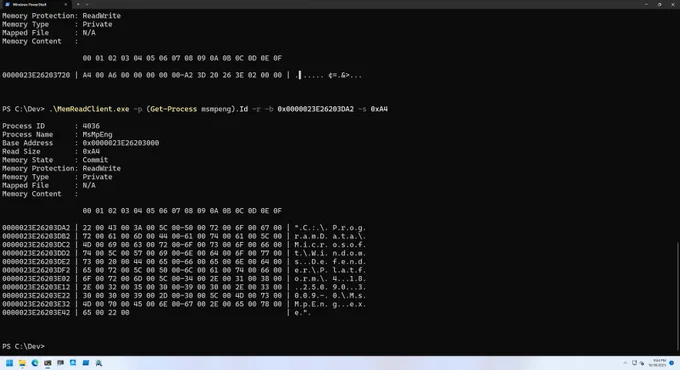

Added a kernel driver to read arbitrary process memory (including Protected Process).

Also implemented a functionality to query memory mapping information.

github.com/daem0nc0re/Vector…

hpzhi retweeted

Ever wondered what a "process" or "container" really is in Linux?

You won't find a single "container" construct in the kernel source code. Instead, Linux provides a set of mechanisms like cgroups, namespaces, and chroot that can be combined to create isolated environments. A "container" is just one such combination.

The line between a process and a thread is similarly blurred; both are implemented as "tasks" in the kernel, just with different rules for sharing resources.
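A small sketch of that blurred line, assuming Linux with glibc's clone() wrapper: the same call creates a thread-like task when CLONE_VM is passed and a process-like task when it is not. The names `run_task`, `bump`, and `counter` are made up for illustration.

```c
#define _GNU_SOURCE
#include <assert.h>
#include <sched.h>
#include <signal.h>
#include <stdlib.h>
#include <sys/types.h>
#include <sys/wait.h>

static volatile int counter = 0;

/* Body of the new task: write to a global and exit. */
static int bump(void *arg) {
    (void)arg;
    counter = 42;
    return 0;
}

/* Create a task with the given sharing flags, wait for it to finish,
 * and return the value of `counter` that the parent observes. */
int run_task(int share_flags) {
    const size_t stack_size = 64 * 1024;
    char *stack = malloc(stack_size);
    assert(stack != NULL);

    counter = 0;
    /* The stack grows downward on common architectures, so pass its top.
     * SIGCHLD makes the child waitable with plain waitpid(). */
    pid_t pid = clone(bump, stack + stack_size, share_flags | SIGCHLD, NULL);
    assert(pid > 0);

    int status = 0;
    waitpid(pid, &status, 0);
    free(stack);
    return counter;
}
```

With `run_task(CLONE_VM)` the child shares the parent's address space, so the parent sees the write; with `run_task(0)` the child gets its own copy, as after fork(), and the parent's `counter` stays 0. Only the sharing flags differ.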

Building a minimal OS kernel from scratch is the best way to truly understand these fundamentals. It reveals that an OS is fundamentally a toolkit for resource virtualization.

Want to build your own to see how it works? Full write-up here: popovicu.com/posts/writing-a…

hpzhi retweeted

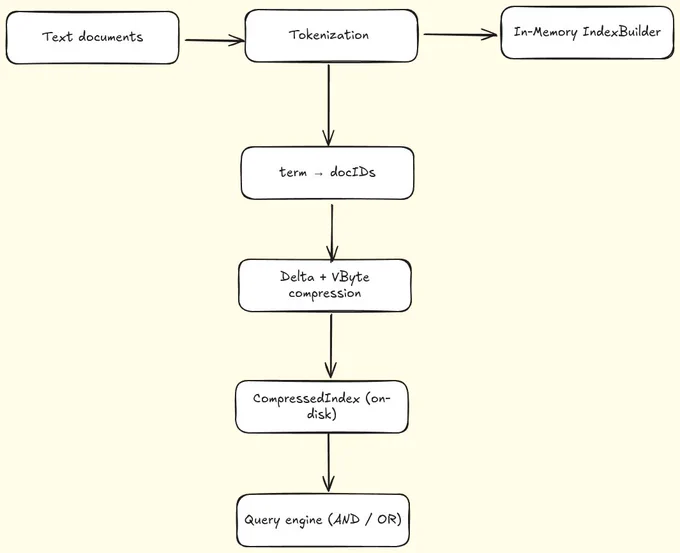

Trying to implement my own Compressed Inverted Index in Rust.

basically, this is the core data structure behind search engines like Google, Elasticsearch, or Lucene. it’s what lets them answer queries like “find all documents and index” in milliseconds.

a normal inverted index maps each term → list of document IDs where it appears.

but a compressed inverted index goes a step deeper:

- It stores those posting lists in compressed form (using variable-byte encoding, bit-packing, etc).

- That means less disk I/O, smaller memory footprint, faster lookups.

- It’s like building your own mini search engine, but you need to think about posting formats, term dictionaries, delta encoding, seek offsets, and more.
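To show what delta encoding plus variable-byte encoding of a posting list can look like, here is a minimal sketch, written in C rather than Rust. It is one common convention, not necessarily the one used in this project: each sorted document ID is stored as the gap from the previous ID, 7 bits per byte, with the high bit set on every byte except the last of a value. The function names are hypothetical.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Encode n strictly increasing doc IDs as variable-byte gaps.
 * `out` must have room for up to 5 bytes per ID.
 * Returns the number of bytes written. */
size_t postings_encode(const uint32_t *ids, size_t n, uint8_t *out) {
    size_t len = 0;
    uint32_t prev = 0;
    for (size_t i = 0; i < n; i++) {
        assert(i == 0 || ids[i] > prev);   /* list must be sorted, no duplicates */
        uint32_t gap = ids[i] - prev;      /* delta encoding */
        prev = ids[i];
        while (gap >= 0x80) {
            out[len++] = (uint8_t)((gap & 0x7f) | 0x80);  /* more bytes follow */
            gap >>= 7;
        }
        out[len++] = (uint8_t)gap;         /* final byte, high bit clear */
    }
    return len;
}

/* Decode `len` bytes produced by postings_encode back into doc IDs.
 * Assumes well-formed input. Returns the number of IDs written. */
size_t postings_decode(const uint8_t *in, size_t len, uint32_t *ids) {
    size_t n = 0, pos = 0;
    uint32_t prev = 0;
    while (pos < len) {
        uint32_t gap = 0;
        int shift = 0;
        uint8_t byte;
        do {
            byte = in[pos++];
            gap |= (uint32_t)(byte & 0x7f) << shift;
            shift += 7;
        } while ((byte & 0x80) && pos < len);
        prev += gap;                       /* undo the delta encoding */
        ids[n++] = prev;
    }
    return n;
}
```

For the IDs 5, 300, 301 the gaps are 5, 295, 1, which take 4 bytes in this scheme instead of 12 bytes as raw 32-bit integers. Small gaps are the common case for frequent terms, which is where the savings come from.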

I’m implementing it all in pure Rust. it's a basic one, just playing with bytes, file I/O, and compression formats to understand how real search engines work under the hood.

It’s such a beautiful mix of:

algorithms + systems + data compression

this project is teaching me so much about how information retrieval systems are actually built from scratch.

hpzhi retweeted

Page tables provide the mapping between virtual memory and physical memory for each process. This means they need to be as efficient and as fast as possible.
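As one concrete example of how such a mapping is indexed, here is a small sketch that assumes x86-64 4-level paging with 4 KiB pages (the post does not name an architecture). A 48-bit virtual address splits into four 9-bit table indices and a 12-bit page offset. The type and function names are invented for this example.

```c
#include <assert.h>
#include <stdint.h>

typedef struct {
    unsigned pml4, pdpt, pd, pt;  /* 9-bit indices into each table level (0..511) */
    unsigned offset;              /* 12-bit byte offset inside the 4 KiB page     */
} va_parts;

/* Split a virtual address into the indices a page-table walk would use. */
va_parts split_va(uint64_t va) {
    va_parts p;
    p.offset = (unsigned)(va & 0xfff);
    p.pt     = (unsigned)((va >> 12) & 0x1ff);
    p.pd     = (unsigned)((va >> 21) & 0x1ff);
    p.pdpt   = (unsigned)((va >> 30) & 0x1ff);
    p.pml4   = (unsigned)((va >> 39) & 0x1ff);
    return p;
}

/* Rebuild the low 48 bits of the address from its parts
 * (sign extension of upper-half addresses is ignored here). */
uint64_t join_va(va_parts p) {
    assert(p.pml4 < 512 && p.pdpt < 512 && p.pd < 512 && p.pt < 512);
    assert(p.offset < 4096);
    return ((uint64_t)p.pml4 << 39) | ((uint64_t)p.pdpt << 30) |
           ((uint64_t)p.pd << 21)   | ((uint64_t)p.pt << 12)   |
           (uint64_t)p.offset;
}
```

A walk through all four levels costs four memory reads per translation, which is why hardware caches recent translations in the TLB and why the efficiency of this structure matters so much.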

I explore the inner workings of page tables in the next episode of the backend engineering show

Episode Live for members.

hpzhi retweeted

3000+ Interview Questions

❯ SQL

interviewquestions.notion.si…

❯ JavaScript

interviewquestions.notion.si…

❯ Java

interviewquestions.notion.si…

❯ Python

interviewquestions.notion.si…

❯ DSA

interviewquestions.notion.si…

❯ OOPs

interviewquestions.notion.si…

❯ HTML/CSS

interviewquestions.notion.si…

❯ React

interviewquestions.notion.si…

❯ Git

interviewquestions.notion.si…

❯ Practice Questions

interviewquestions.notion.si…

* I will be adding new questions to these collections regularly. More topics will be covered.

** Are you looking for some other topic? Please mention below.

hpzhi retweeted

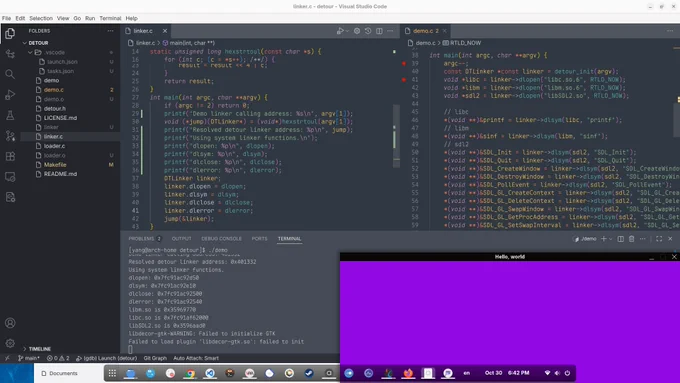

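Elegant! Ingenious!

I had also spent a long time thinking about how to call functions from a dynamically linked library inside a statically linked program.

The reason for wanting this is to reduce the program's library dependencies as much as possible, but libraries such as GPU drivers and GTK/Webkit obviously cannot all be linked statically.

Then this project (github.com/graphitemaster/de…) pulled it off with a very clever approach.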

hpzhi retweeted

Multi-GPU programming by Markus Hrywniak

piped.video/BgeFR4UfajQ

This has to be one of the best GPU programming resources I've found - the GPU Glossary from Modal breaks down complex concepts with clear visuals and explanations, from CUDA architecture to Tensor Cores to CTAs.

modal.com/gpu-glossary

hpzhi retweeted

Video Encoding 101: A Comprehensive Guide

imagekit.io/blog/video-encod…