Creative Engineer at @FAL

Joined January 2017

So excited to share that I’m now part of the @FAL team that I’ve admired for a long time!

If you have questions about video or image models, aren’t sure which one would work best for you, or for anything else along those lines, my DMs are always open!

İlker retweeted

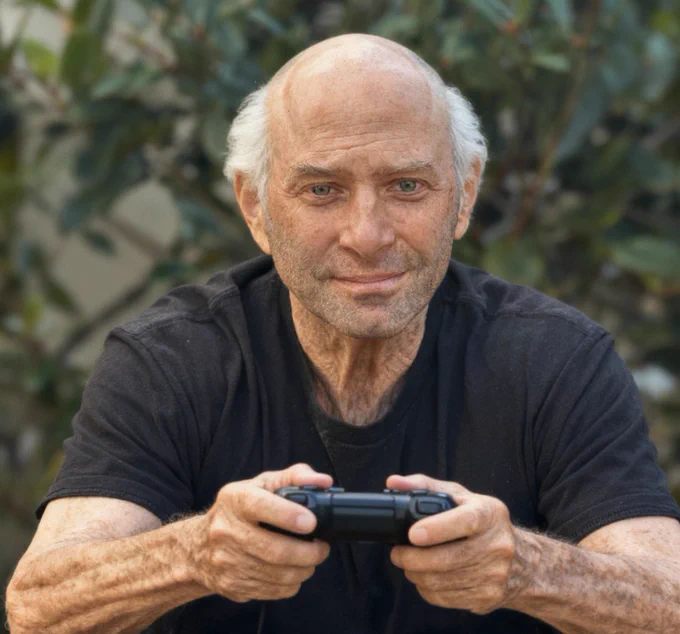

Me when I finally get to play GTA6 (image credit @ilkerigz)

You can try it here: fal.ai/models/fal-ai/image-a…

(If you leave the prompt empty, you’ll get better results.)

🚨🚨🚨 The most wanted feature is finally here

🚀 Introducing fal Platform APIs: programmatic access to everything behind your Model APIs!

🔍 Discover models and metadata

💰 Retrieve real-time pricing and cost estimates

📊 Track usage and analyze performance

⚙️ Build smarter tools, dashboards, and integrations

Explore the docs 👇

🔗docs.fal.ai/platform-apis

İlker retweeted

Happy October 29 Republic Day 🇹🇷

Today is the birthday of the Turkish Republic. Since it was founded by Atatürk, our republic has modernized Türkiye. I am forever grateful to the Turkish Republic and our democracy for the equal opportunities and rights they have provided to me and millions of others.

Note: I wanted to see for myself how easy it is to create a short film using AI tools, so I made this video for our national day in just a few hours. Generated with @fal

SeedVR2 on fal just got WAY faster!

Now upscale images up to 10K px (up from the previous 4K limit), and videos straight to 4K (up from the previous 1080p limit).

First video: the 240p source. Second video: the 4K upscale.

Unbeatable Duo

Really happy we were able to open-source this.

Internally, we have been using early versions of FlashPack to speed up model loading, but @MLPBenjamin improved the loading substantially and packaged it up wonderfully.

tl;dr: 2.5x vs safetensors, here’s why

PyTorch’s standard `load_state_dict` leads to many tiny CUDA allocations and copies.

However, if you flatten the weights to a single tensor, you can do a single device allocation, and use a mmapped buffer to efficiently copy the weights to the device memory.

The model's parameters still need to be separate tensors, though, which can be done efficiently by making each one a view into the flat tensor.
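To make the idea concrete, here is a minimal CPU-only sketch using only the Python standard library. It is not FlashPack's API and involves no PyTorch or CUDA: the helper names `pack` and `load_views`, the file name, and the parameter names are all hypothetical. It only illustrates the layout trick described above: write every parameter back-to-back into one flat float32 buffer, memory-map that buffer once, and hand out per-parameter views that share the same memory instead of copying.

```python
import mmap
import os
import tempfile
from array import array


def pack(params, path):
    """Write all parameters back-to-back as float32 into one flat file.

    Returns a layout dict: name -> (start_element, element_count).
    """
    layout = {}
    offset = 0  # measured in float32 elements, not bytes
    with open(path, "wb") as f:
        for name, values in params.items():
            array("f", values).tofile(f)
            layout[name] = (offset, len(values))
            offset += len(values)
    return layout


def load_views(path, layout):
    """Memory-map the flat file once and slice it into zero-copy views."""
    with open(path, "rb") as f:
        buf = mmap.mmap(f.fileno(), 0, access=mmap.ACCESS_READ)
    # One flat float32 view over the whole mapping; it keeps the mmap alive.
    flat = memoryview(buf).cast("f")
    # Slicing a memoryview does not copy: each entry shares the mapped memory.
    views = {
        name: flat[start:start + count]
        for name, (start, count) in layout.items()
    }
    return flat, views


# Hypothetical toy "model" with two parameters (values exact in float32).
params = {
    "layer1.weight": [0.5, 1.5, -2.0, 4.0],
    "layer1.bias": [0.25, -0.75],
}
path = os.path.join(tempfile.mkdtemp(), "weights.bin")
layout = pack(params, path)
flat, views = load_views(path, layout)
print(views["layer1.bias"].tolist())  # [0.25, -0.75]
```

In the GPU setting the tweet describes, the flat buffer would presumably be copied to the device in a single transfer and the per-parameter views would be taken on the device tensor; that step is left out here because it depends on details the thread does not give.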

Even though I'm not in the photo today, I feel incredibly lucky to be part of this team.

Discovering and experimenting with new things has been my greatest passion since childhood. 'Wasting time' on things others thought were useless was actually what brought me the most joy. A few years ago, the excitement of staying up all night and ending up with 'a photo that looked like a cat' told me to keep going down this path.

@fal is a place where the culture brings together everything I love about this journey. And more importantly, I can truly see that everyone here enjoys walking this path as much as I do.

As a world, we might be at the beginning of this road (Gen media),

but we’re the fastest and coolest vehicle on it.

prompt: A sleek rally car kicks up swirling sand clouds, its tires grip jagged dunes under blazing sun, the vehicle weaves through rocky canyons in a blur of chrome and dust trails.