Farseen retweeted

⚡️🇮🇱🇺🇸 BREAKING

Since Jewish billionaires took control of TikTok, the Juice emoji has officially been BANNED.

Farseen retweeted

American students are now being blocked from enrollment if they refuse to watch Israeli propaganda

Farseen retweeted

THE BALFOUR DECLARATION

—IS ONE WHITE RACIST PROMISING ANOTHER WHITE RACIST A BROWN PERSON'S COUNTRY.

Saying it as it is, not mincing his words.

Farseen retweeted

We keep treating these people as our kings when we should be treating them as what they really are, our employees.

I suggest we propose a counter-law to this:

⚖️ PoliticianControl

Where all European citizens can read any EU politician's chats at any moment

To keep them all in check

🇪🇺 My fellow Europeans

You have to fight ChatControl 💪

Don't let @vonderleyen read your chats!

metalhearf.fr/posts/chatcont…

Farseen retweeted

You can tell intuitively that digital IDs aren't being pushed for the benefit of ordinary people just from the fact that zero ordinary people have been asking for them.

You'll see people clamoring for their government to do all kinds of things depending on where they're at on the political spectrum, from giving them better healthcare to stopping immigration to legalizing weed to making prayer mandatory in public schools. But one thing you never see is ordinary members of the public demanding that the government create a digital ID system and force everyone to participate in it. Literally never. It's a completely top-down initiative with zero grassroots demand.

This is because conventional systems of identification have been working out more or less fine for general members of the public for generations. What digital ID systems provide that those conventional systems do not is a significant increase in the state's ability to surveil and control the population and their online behavior. This doesn't benefit ordinary people, but it does benefit our rulers. The more control they have over us, the easier it will be to keep us propagandized and consenting to the status quo, and the harder it will be for us to rise up against them when it's time to remove them from power.

That's the only reason you're seeing governments scramble to shove this bullshit down our throats without anyone ever asking for it or voting for it.

Farseen retweeted

“HitIer didn’t want to exterminate the Jews ,

but a Muslim convinced him to do it " Netanyahu.

We have to hate Muslims because of Israeli propaganda.

Farseen retweeted

Bethlehem remained a Christian majority area through Islamic rule in the Middle Ages, the Ottoman era, and the British mandate. The Christian population began plummeting after the 1967 war and the start of the Israeli occupation.

Farseen retweeted

@SebAaltonen, I’m the only person I know of who has built an MMO server that supports 100k+ CCU (concurrent users) on a single node. It costs me only about $2k a year to host.

If you parallelize things and have O(N/P) worst-case algorithms in place, compute is not really a major bottleneck to worry about. Network egress bandwidth is.
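Here is a minimal sketch of the O(N/P) idea. It assumes a hypothetical `Player` struct and a per-tick update in which each player reads only its own state. The N players are split into P contiguous chunks, and each chunk is updated on its own scoped thread, so the work per thread is about N/P. Real game logic with cross-player interactions, such as collisions or combat, would need more care, for example reading from an immutable copy of the previous tick.

```rust
use std::thread;

/// Hypothetical per-player state for this sketch (integer positions and velocities).
#[derive(Clone, Copy, Debug, PartialEq)]
struct Player {
    x: i32,
    y: i32,
    vx: i32,
    vy: i32,
}

/// Advances every player by one tick. The N players are split into
/// contiguous chunks of about N/P, and each chunk is updated on its own
/// scoped thread, so per-thread work is O(N/P).
fn tick_parallel(players: &mut [Player], workers: usize) {
    let workers = workers.max(1);
    // Ceiling division so every player lands in some chunk; `chunks_mut`
    // panics on a chunk size of 0, hence the `max(1)`.
    let chunk = ((players.len() + workers - 1) / workers).max(1);
    // All spawned threads are joined when the scope ends.
    thread::scope(|s| {
        for part in players.chunks_mut(chunk) {
            s.spawn(move || {
                for p in part.iter_mut() {
                    p.x += p.vx;
                    p.y += p.vy;
                }
            });
        }
    });
}

fn main() {
    let mut players: Vec<Player> = (0..131_072)
        .map(|i| Player { x: i, y: 0, vx: 1, vy: 2 })
        .collect();
    tick_parallel(&mut players, 8);
    println!("{:?}", players[10]);
}
```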

You must design netcode that is guaranteed never to congest the network, regardless of what users do. Otherwise, server lag and disconnects will affect all users and cause ragequits.

This rules out TCP. The simple act of users disconnecting leads to head-of-line blocking and surges in network egress bandwidth, which can trigger a positive feedback loop of even more users disconnecting if you’re already at max load. Targeting web browsers is therefore tricky: you really want to build on top of raw UDP, but browsers don’t expose it, so you must spend extra bits on QUIC/WebTransport.

You must also determine the maximum amount of packed dynamic data you can send to each client per tick, as well as your target tick rate.

The formula is simple:

Bandwidth = packet data per player per tick * ticks per second * number of concurrent players supported.

To affordably support a very large number of players, especially on a single node, you must optimize the netcode design heavily with a slow tick rate and a limited packet size. RuneScape was designed with 600ms ticks and WoW with 400ms ticks for this very reason.

Once you settle on a target bandwidth for your machine (I chose 1 Gbps) that you’re willing to pay for and can prove you can handle, plus a tick rate (I chose 600ms/tick) and a CCU target (I chose 131,072), simple division gives you the packet size (572 bytes for me). That figure must include all header bytes from every protocol layer except Ethernet.
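The division can be checked with a few lines of integer arithmetic. This sketch assumes 1 Gbps means 10^9 bit/s, which is the reading that reproduces the 572-byte figure. The function names are made up for illustration. The second function runs the bandwidth formula forward to confirm that the rounded-down budget stays under the link at full load.

```rust
/// Per-player, per-tick byte budget from the formula
///   bandwidth = bytes_per_player_per_tick * ticks_per_second * players,
/// solved for bytes_per_player_per_tick. Integer math, rounded down so the
/// budget never exceeds the link.
fn packet_budget_bytes(link_bits_per_sec: u64, tick_ms: u64, players: u64) -> u64 {
    let bytes_per_sec = link_bits_per_sec / 8;
    // ticks_per_second = 1000 / tick_ms, so dividing by it means
    // multiplying by tick_ms and dividing by 1000.
    bytes_per_sec * tick_ms / (1000 * players)
}

/// The same formula run forward: total egress in bits per second (rounded down).
fn egress_bits_per_sec(bytes_per_tick: u64, tick_ms: u64, players: u64) -> u64 {
    bytes_per_tick * 8 * players * 1000 / tick_ms
}

fn main() {
    // Targets from the thread: 1 Gbps (taken as 10^9 bit/s), 600 ms ticks, 131,072 CCU.
    let budget = packet_budget_bytes(1_000_000_000, 600, 131_072);
    let used = egress_bits_per_sec(budget, 600, 131_072);
    println!("{budget} bytes per player per tick, {used} bit/s at full load");
}
```

With these inputs the budget comes out to 572 bytes, and one more byte per player would push total egress past 1 Gbps.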

Your job from then on is to design the data that goes into each fixed-size buffer per player per tick, and to build a fully playable game within that budget. The protocol I use is a simple unreliable stream of the current localized dynamic state of the world around the player. Each packet is a pure snapshot with no temporal deltas, so it has no dependencies on other packets and drops are fine. Bitpack as tightly as you can, and use only integers, not floats.
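Below is a minimal Rust sketch of tight integer bitpacking into a fixed-size buffer. Everything about the format is a hypothetical choice for illustration, not the author's protocol: the field layout (17-bit entity id, 14-bit tile x and y, 7-bit hit points), the 8-bit entity count, and the 544-byte payload, which is 572 bytes minus 20-byte IPv4 and 8-byte UDP headers. QUIC/WebTransport framing would take more. Each packet carries a complete snapshot with its own count, so it decodes without any other packet.

```rust
/// Packs unsigned integers into a fixed-size buffer, least significant bit
/// first, using only as many bits as each field needs.
struct BitWriter<const N: usize> {
    buf: [u8; N],
    bit_pos: usize,
}

impl<const N: usize> BitWriter<N> {
    fn new() -> Self {
        Self { buf: [0; N], bit_pos: 0 }
    }

    fn remaining_bits(&self) -> usize {
        N * 8 - self.bit_pos
    }

    /// Writes the low `bits` bits of `value`. Panics if the field would
    /// overflow the buffer, so callers size the snapshot up front.
    fn write(&mut self, value: u32, bits: u32) {
        assert!(bits <= 32 && (bits as usize) <= self.remaining_bits());
        debug_assert!(bits == 32 || value >> bits == 0, "value does not fit its field");
        for i in 0..bits {
            if (value >> i) & 1 == 1 {
                let pos = self.bit_pos + i as usize;
                self.buf[pos / 8] |= 1u8 << (pos % 8);
            }
        }
        self.bit_pos += bits as usize;
    }
}

/// Reads fields back in the same order and widths they were written.
struct BitReader<'a> {
    buf: &'a [u8],
    bit_pos: usize,
}

impl BitReader<'_> {
    fn read(&mut self, bits: u32) -> u32 {
        let mut value = 0u32;
        for i in 0..bits {
            let pos = self.bit_pos + i as usize;
            if (self.buf[pos / 8] >> (pos % 8)) & 1 == 1 {
                value |= 1u32 << i;
            }
        }
        self.bit_pos += bits as usize;
        value
    }
}

// Hypothetical: 572-byte budget minus IPv4 (20) and UDP (8) header bytes.
const PAYLOAD_BYTES: usize = 572 - 28;
// Hypothetical field widths: entity id (17 bits = 131,072 ids), tile x, tile y, hit points.
const FIELDS: [u32; 4] = [17, 14, 14, 7];
const ENTITY_BITS: usize = (FIELDS[0] + FIELDS[1] + FIELDS[2] + FIELDS[3]) as usize;

/// Encodes as many entities as fit behind an 8-bit count. Every packet is a
/// complete snapshot, so it decodes without any other packet.
fn encode_snapshot(entities: &[[u32; 4]]) -> [u8; PAYLOAD_BYTES] {
    let mut w = BitWriter::<PAYLOAD_BYTES>::new();
    let capacity = (w.remaining_bits() - 8) / ENTITY_BITS;
    let count = entities.len().min(capacity).min(255);
    w.write(count as u32, 8);
    for entity in &entities[..count] {
        for (value, bits) in entity.iter().zip(FIELDS) {
            w.write(*value, bits);
        }
    }
    w.buf
}

fn decode_snapshot(buf: &[u8]) -> Vec<[u32; 4]> {
    let mut r = BitReader { buf, bit_pos: 0 };
    let count = r.read(8) as usize;
    (0..count)
        .map(|_| [r.read(FIELDS[0]), r.read(FIELDS[1]), r.read(FIELDS[2]), r.read(FIELDS[3])])
        .collect()
}

fn main() {
    let snapshot = encode_snapshot(&[[5, 1200, 900, 99], [131_071, 16_383, 0, 1]]);
    println!("{} bytes: {:?}", snapshot.len(), decode_snapshot(&snapshot));
}
```

At 52 bits per entity, this layout fits 83 entities per packet. Anything beyond that is simply left out of the snapshot, which is where interest management comes in.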

You also need an interest-management strategy to network-cull players when too many are in one location. Inevitably, this means some players will be invisible to others.
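One simple culling policy, sketched here as an assumption rather than the author's method: each viewer receives only the nearest `max_visible` players within a radius, and everyone else is dropped from that viewer's snapshot. `Pos`, `visible_players`, the radius and the cap are all hypothetical.

```rust
/// Hypothetical integer tile coordinates (the thread recommends integers over floats).
#[derive(Clone, Copy, Debug, PartialEq)]
struct Pos {
    x: i32,
    y: i32,
}

/// Returns the indices of at most `max_visible` other players within
/// `radius` tiles of `players[viewer]`, nearest first. Players past the cap
/// are culled from this viewer's snapshot even when they are in range.
fn visible_players(viewer: usize, players: &[Pos], radius: i32, max_visible: usize) -> Vec<usize> {
    let me = players[viewer];
    let r2 = i64::from(radius) * i64::from(radius);
    let mut in_range: Vec<(i64, usize)> = players
        .iter()
        .enumerate()
        .filter(|&(i, _)| i != viewer)
        .map(|(i, p)| {
            // Widen to i64 so the difference of any two i32 coordinates fits.
            let dx = i64::from(p.x) - i64::from(me.x);
            let dy = i64::from(p.y) - i64::from(me.y);
            (dx * dx + dy * dy, i)
        })
        .filter(|&(d2, _)| d2 <= r2)
        .collect();
    // Sorting by (squared distance, index) keeps tie-breaking deterministic.
    in_range.sort_unstable();
    in_range.truncate(max_visible);
    in_range.into_iter().map(|(_, i)| i).collect()
}

fn main() {
    let players = [
        Pos { x: 0, y: 0 },
        Pos { x: 3, y: 0 },
        Pos { x: 1, y: 1 },
        Pos { x: 100, y: 0 },
        Pos { x: 0, y: 2 },
    ];
    // Viewer 0 sees players 2 and 4; player 1 is in range but over the cap of 2.
    println!("{:?}", visible_players(0, &players, 5, 2));
}
```

This scans every player for each viewer, which is O(N) per viewer. At 100k+ CCU you would want a spatial grid so that only nearby cells are scanned. `select_nth_unstable` could also replace the full sort when only the top K matter.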

Lastly, I highly recommend getting a dedicated bare-metal server from OVH. They are the only hosting provider that won’t charge you an arm and a leg for network egress bandwidth. You get guaranteed, unmetered bandwidth, meaning you don’t pay a cent extra even if you use full capacity all month. I pay $172 a month; the same server at full load hosted on AWS would cost me ~$18k a month.

Farseen retweeted

Pratt parsing in Crafting Interpreters powers a table-driven, AST-free, single-pass expression compiler that’s easy to extend: just add rules to the table with their precedence and parsing behavior, without modifying the core parsing algorithm. Excellent for extensibility.

The Rust implementation switched from macros to const fn to model a parse rule; a collection of these rules drives the rule table.
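Below is a compact Rust sketch of the table-driven idea under stated assumptions. It uses a toy grammar of numbers, `+ - * /`, unary minus and parentheses, with whitespace-separated tokens and an invented stack instruction set (`Op`). The names `ParseRule` and `parse_precedence` loosely follow the book's C version (`ParseRule`, `getRule`, `parsePrecedence`), but this is not the Rust implementation the post refers to. `rule` is a `const fn`, so the table is a compile-time constant; fn pointers in `const fn` signatures need Rust 1.61 or later. Malformed input panics to keep the sketch short.

```rust
/// Token kinds; declaration order must match the rows of `RULES`.
#[derive(Clone, Copy, PartialEq, Debug)]
enum Kind { Number, Plus, Minus, Star, Slash, LParen, RParen, Eof }
#[derive(Clone, Copy, Debug)]
struct Token { kind: Kind, value: f64 }

/// Stack-machine instructions, emitted directly while parsing (no AST).
#[derive(Clone, Copy, PartialEq, Debug)]
enum Op { Const(f64), Add, Sub, Mul, Div, Neg }

/// Binding power, lowest first; derived `PartialOrd` follows declaration order.
#[derive(Clone, Copy, PartialEq, PartialOrd)]
enum Prec { None, Term, Factor, Unary }

type ParseFn = fn(&mut Parser);
#[derive(Clone, Copy)]
struct ParseRule { prefix: Option<ParseFn>, infix: Option<ParseFn>, prec: Prec }

/// A `const fn` constructor, so the whole table is a compile-time constant.
const fn rule(prefix: Option<ParseFn>, infix: Option<ParseFn>, prec: Prec) -> ParseRule {
    ParseRule { prefix, infix, prec }
}

/// Supporting a new operator means adding a row here, not editing `parse_precedence`.
const RULES: [ParseRule; 8] = [
    rule(Some(number), None, Prec::None),        // Number
    rule(None, Some(binary), Prec::Term),        // Plus
    rule(Some(unary), Some(binary), Prec::Term), // Minus
    rule(None, Some(binary), Prec::Factor),      // Star
    rule(None, Some(binary), Prec::Factor),      // Slash
    rule(Some(grouping), None, Prec::None),      // LParen
    rule(None, None, Prec::None),                // RParen
    rule(None, None, Prec::None),                // Eof
];

struct Parser { tokens: Vec<Token>, pos: usize, code: Vec<Op> }

impl Parser {
    fn previous(&self) -> Token { self.tokens[self.pos - 1] }
    fn current(&self) -> Token { self.tokens[self.pos] }

    /// Core Pratt loop: run one prefix rule, then keep running infix rules
    /// while the next operator binds at least as tightly as `prec`.
    fn parse_precedence(&mut self, prec: Prec) {
        self.pos += 1;
        let prefix = RULES[self.previous().kind as usize].prefix.expect("expected expression");
        prefix(self);
        while prec <= RULES[self.current().kind as usize].prec {
            self.pos += 1;
            let infix = RULES[self.previous().kind as usize].infix.expect("expected operator");
            infix(self);
        }
    }
}

fn number(p: &mut Parser) {
    let value = p.previous().value;
    p.code.push(Op::Const(value));
}

fn grouping(p: &mut Parser) {
    p.parse_precedence(Prec::Term);
    assert_eq!(p.current().kind, Kind::RParen, "expected ')'");
    p.pos += 1;
}

fn unary(p: &mut Parser) {
    p.parse_precedence(Prec::Unary);
    p.code.push(Op::Neg);
}

fn binary(p: &mut Parser) {
    let kind = p.previous().kind;
    // Parsing the right operand one level tighter makes operators left-associative.
    let next = match RULES[kind as usize].prec { Prec::Term => Prec::Factor, _ => Prec::Unary };
    p.parse_precedence(next);
    p.code.push(match kind { Kind::Plus => Op::Add, Kind::Minus => Op::Sub, Kind::Star => Op::Mul, _ => Op::Div });
}

/// Single pass from whitespace-separated source straight to bytecode.
fn compile(src: &str) -> Vec<Op> {
    let mut tokens: Vec<Token> = src.split_whitespace().map(|w| {
        let kind = match w {
            "+" => Kind::Plus, "-" => Kind::Minus, "*" => Kind::Star,
            "/" => Kind::Slash, "(" => Kind::LParen, ")" => Kind::RParen,
            _ => Kind::Number,
        };
        let value = if kind == Kind::Number { w.parse::<f64>().expect("bad number") } else { 0.0 };
        Token { kind, value }
    }).collect();
    tokens.push(Token { kind: Kind::Eof, value: 0.0 });
    let mut p = Parser { tokens, pos: 0, code: Vec::new() };
    p.parse_precedence(Prec::Term);
    assert_eq!(p.current().kind, Kind::Eof, "unexpected trailing token");
    p.code
}

fn main() {
    println!("{:?}", compile("- 1 + 2 * ( 3 - 4 )"));
}
```

To add, say, a `%` operator, you would add a `Kind` variant, a table row, an `Op`, and an arm in `binary`. `parse_precedence` itself stays untouched, which is the extensibility the post is describing.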

Only expressions use Pratt; statements and declarations stay as recursive-descent functions. That separation keeps the code easy to read: Pratt where it shines (expressions), plain functions elsewhere.

A great lesson in implementing parsers and bytecode interpreters ..

Farseen retweeted

The Gates Foundation is the initial major stakeholder in the ‘Global Digital ID’ scheme being rolled out by the World Economic Forum.

This is the very same ‘ID’ Bill Gates said was a ‘Conspiracy Theory’.

Farseen retweeted

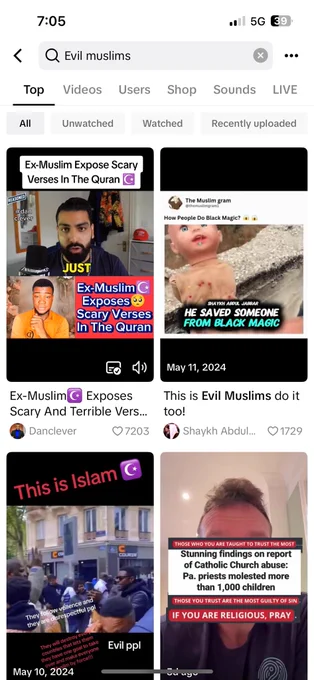

Americans were told that the problem with Tik-Tok was about "Chinese ownership."

Ta-da!

It was never about that at all.

Israeli PM Benjamin Netanyahu briefed American influencers on TikTok, calling it the “most important” weapon in securing support for Israel on the right-wing.

He went on to say, “Weapons change over time... the most important ones are the social media,” and, “the most important purchase that is going on right now is TikTok... I hope it goes through because it can be consequential."

Netanyahu mentioned X and Elon Musk as well, saying Musk is ”not an enemy, he's a friend. We should talk to him.”

Follow: @AFpost