I build sane open-source RL tools. MIT PhD, creator of Neural MMO and founder of PufferAI. DM for business: non-LLM sim engineering, RL R&D, infra & support.

Joined March 2019

- Tweets 7,964

- Following 110

- Followers 22,115

- Likes 6,884

Pinned Tweet

PufferLib 3.0: We trained reinforcement learning agents on 1 Petabyte / 12,000 years of data with 1 server. Now you can, too! Our latest release includes algorithmic breakthroughs, massively faster training, and 10 new environments. Live demos on our site. Volume on for trailer!

Today, I wrote three kernels fusing various gates and scans in our recurrent cell and a fused PPO loss kernel. Result is +2M steps/second training before I even start optimizing. Streamed >12 hours of dev today. Tomorrow, I lift weights all day and relax. Star pufferlib. Gn

Live RL Research on PufferLib w/ Joseph Suarez x.com/i/broadcasts/1YpKkkdMb…

Live RL Research on PufferLib w/ Joseph Suarez x.com/i/broadcasts/1kvKpMngo…

Live RL Research on PufferLib w/ Joseph Suarez x.com/i/broadcasts/1YpKkkdby…

PufferLib is leading small-model RL, all OSS! The government isn't feeding the puffer any gold bars, but you can feed him a gold star on github

Live RL Research on PufferLib w/ Joseph Suarez x.com/i/broadcasts/1kvJpMnPj…

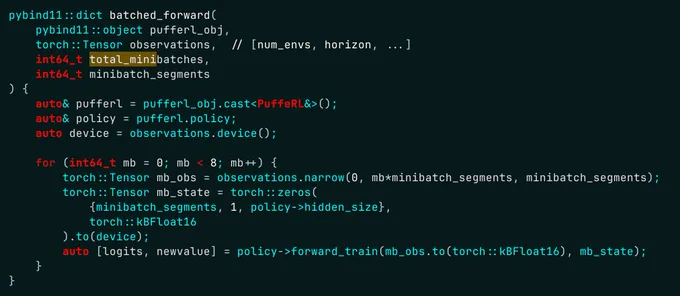

Wrong return type. Pretty simple bug, doesn't compile right? Nope, it compiles and the for loop increments forever. What the fuck.

Live RL Research on PufferLib w/ Joseph Suarez x.com/i/broadcasts/1eaKbjPbY…

Live RL Research on PufferLib w/ Joseph Suarez x.com/i/broadcasts/1ynJOMNMw…

Q: My instinct is to avoid extra libraries unless absolutely necessary. Really, really don't like Triton from what I see, for instance (though I'd be less annoyed if it would generate the kernels once which I could then include statically in my project). I do need some level of tile size tuning. What do?

Q: So far, fp32 kerns are pretty easy. Pretty much just writing C. What's the easiest way to do TF32, FP16, BF16 support without making a bloody mess?

Q: I have small nets and need to reduce kernel launches. My options are 1) suffer through cudagraph hell 2) write some big fused kernels or 3) both. Fused kernels seem cool, but NVIDIA's cublas matmul isn't open source. What do?

Torch C++ & CUDA optimization dev all day today + tomorrow, streaming here/yt/twitch. The goal is to

1) Make PufferLib do RL at 10M steps/second

2) Eliminate hard to profile sources of potential bottlenecks

3) See how simple we can make it

Some questions for GPU devs below

Joseph Suarez 🐡 retweeted

Leaving Meta and PyTorch

I'm stepping down from PyTorch and leaving Meta on November 17th.

tl;dr: Didn't want to be doing PyTorch forever, seemed like the perfect time to transition right after I got back from a long leave and the project built itself around me.

Eleven years at Meta. Nearly all my professional life. Making many friends for life. Almost eight years leading PyTorch, taking it from nothing to 90%+ adoption in AI. Walking away from this was one of the hardest things I've ever done. But I'm leaving with a full heart.

PyTorch handles exascale training now. It powers foundation models that are redefining intelligence. It's in production at virtually every major AI company. It's taught in classrooms from MIT to rural India. The tools I dreamed about making accessible? They are. The barrier to entry I wanted to lower? It's almost gone.

To be clear, there’s so much more to do. As long as AI evolves at a breakneck pace, PyTorch will continue to play catch up. Obsessing over the yet-to-come sometimes makes us forget how much we’ve already done.

To everyone who built this with me—who believed research should be joyful, that tools should be elegant, that open source changes everything—thank you. This wasn't my journey. It was ours.

What's next for me? Something small. Something new. Something I don't fully understand yet. Something uncomfortable. I could have moved to something else inside Meta. But I needed to know what's out there. I needed to do something small again. I couldn't live with the counterfactual regret of never trying something outside Meta.

It's very hard to leave. I probably have one of the AI industry’s most leveraged seats: I lead the software layer that powers the entire AI industry. Every major AI company and hardware vendor is on speed dial. This kind of power is really hard to give up. But curiosity ultimately won out in my head.

Keep making AI delicious and accessible. I'll be watching. Probably filing issues. Definitely staying involved.

Is PyTorch going to be okay?

I don't want to be doing PyTorch forever. I don't want to be like Guido or Linus— bound to a single thing for decades. Last November, coinciding with the birth of my daughter, I started planning my exit with Aparna. My goal was to leave PyTorch in a good and stable place.

By this August, during the second half of my parental leave, I knew: Edward, Suo, Alban, Greg, John, Joe and Jana were ready. The team faced hard people, product, technical and organizational problems and didn’t feel the need to lean back on me to solve these for them (unlike in the past). The product story they crafted for the PyTorch Conference was coherent, really coherent. The things I'd flagged red were turning healthy. The project didn't need me anymore.

Unlike 2020-2022 (when I stepped down to go do robotics and came back when Lin, Dima and Dwarak left), I have strong confidence that this time PyTorch is truly resilient. The most aligned culture carriers of PyTorch (Greg, Alban, Ed, Jason and Joe) are at the decision table now, and people with strong value alignment (Suo, John and Jana) have joined them at the table. And there’s a long list of equally value-aligned people willing to sit at the table should any of these people leave. There are many little things that make up my confidence in the people: John worked on Julia and open-source for a very long time (in fact we hacked a Torch.jl in 2015), Suo has been the strongest systems builder and strategic partner I’ve had for the past two years, and Jana worked on resilient core systems for a very long time. I’ve had long technical and organizational discussions with her over the past few months that give me confidence. And the product lineup and execution in 2025 should be sufficient evidence for any remaining doubt.

I’m confident that this band of PyTorchers are going to do exceptionally well. PyTorch might change in flavor because I no longer impose my own taste from the top, but I’m confident that the values are going to stay intact and the product is going to be awesome.

My time at Meta

The early years of FAIR were absolutely magical. I was part of a small family of absolutely brilliant people building state-of-the-art AI out in the open. From working on GANs with Emily Denton, Rob Fergus, Leon Bottou, Martin Arjovsky and the (now legendary) Alec Radford to building Starcraft bots with Gabriel Synnaeve, to building the first FAIR Cluster with Howard Mansell, to working on object detection with Adam Lerer and Piotr Dollar, to building PyTorch. It was more fun than I can describe in words. 2015 and 2016 were probably the most productive and professionally enjoyable years of my life. I’ll probably romanticize this period of my life forever.

When I joined FAIR, I had massive impostor syndrome, and the first 3 months were very very difficult. I can’t credit Andrew Tulloch enough for being the most thoughtful, kind and welcoming mentor, without whom I wouldn’t have made it. I’m so damn bullish for Meta just from the fact that he’s back.

---

My time on PyTorch was special.

I loved every part of building it—designing it, managing it, being the PM, TL, comms lead, doc engineer, release engineer, squashing bugs, growth hacking, turning it into a coherent product with hundreds of people, transitioning it to industry stakeholdership – the whole nine yards.

To the core PyTorch team at Meta: the engineers, researchers, open-source maintainers, docs writers, CI infrastructure folks, hardware partners, the community builders. To the hundreds more inside and outside Meta—thank you. You turned a library into a movement.

There are too many people to credit and thank, but I can't not mention Adam Paszke, Sam Gross, Greg Chanan, Joe Spisak, Alban Desmaison, Edward Yang, Richard Zou, Tongzhou Wang, Francisco Massa, Luca Antiga, Andreas Köpf, Zach DeVito, Zeming Lin, Adam Lerer, Howard Mansell and Natalia Gimelshein. And Schrep. They made the launch happen. And so many more people became centrally important later: Lu Fang, Xiaodong Wang, Junjie Bai, Nikita Shulga, Horace He, Mark Saroufim, Jason Ansel, Dmytro Dzhulgakov, Yangqing Jia, Geeta Chauhan, Will Constable, Brian Hirsh, Jane Xu, Mario Lezcano, Piotr Bialecki, Yinghai Lu, Less Wright, Andrew Tulloch, Bruce Lin, Woo Kim, Helen Suk, Chris Gottbrath, Peng Wu, Joe Isaacson, Eli Uriegas, Tristan Rice, Yanan Cao, Elias Ellison, Animesh Jain, Peter Noordhuis, Tianyu Liu, Yifu Wang, Lin Qiao and hundreds more. It’s criminal of me to not take the space to list out everyone else I should be mentioning here. PyTorch is nothing without its people ❤️.

The most joyful moments of building PyTorch were meeting users eager to share their happiness, love and feedback. I remember a grad student coming to me at NeurIPS 2017; in a slurring, emotional voice, he said he’d been trying to make progress on his research for 3 years, but within 3 months of using PyTorch he made so much progress that he was ready to graduate. That moment made it tangible that what we do matters, a lot, to a lot of people, even if you don't constantly hear from them. I do miss the intimacy of the PyTorch community, with a 300-person conference that felt like an extended family gathering, but I feel that’s a small price to pay considering the scale of impact PyTorch is truly having today – yes, the Conference is now 3,000 people where market-moving deals get brokered, but it’s helping orders of magnitude more people to do their best AI work. I miss the intimacy, but I'm proud of that growth.

---

To Mark Zuckerberg and Mike Schroepfer, who believed that open-sourcing is fundamentally important and is a sound business strategy. This is so hard to understand for most people within the course of business, but we’ve run lock-step on this strategy without ever having to discuss it. Without you two, neither FAIR nor PyTorch would’ve happened. And those mean so much to me.

To Yann LeCun and Rob Fergus, for building the magical early FAIR that I so revere.

To Aparna Ramani, a leader of a kind I find rare at Meta: able to hold a really high bar for the org, technically brilliant, with the span to discuss deep infra systems and industry strategy within the same conversation, and an absolute execution machine! I’ve learned so much from you.

To Santosh, Kaushik, Delia, Oldham and Ben for being so welcoming to Infra. For someone coming over from FAIR with a wildly different culture, you all made me feel at home and made me part of the family, and thank you for that.

To all my managers who've championed me through the PSC video game – Serkan, Howard, Jerome, Abhijit, Yoram, Joelle, Aparna and Damien – I owe you a lifetime of drinks.

---

Signing off for now.

—Soumith

Live RL Research on PufferLib w/ Joseph Suarez x.com/i/broadcasts/1MYxNlYgz…

Live RL Research on PufferLib w/ Joseph Suarez x.com/i/broadcasts/1eaJbjRnA…

Welcome to academia. I submitted PufferLib when it made RL 10x faster. Rejected. 100x? Rejected. 1000x? Okay fine sry published + award. You have to be really, really stubborn

What are these reviewers doing?? We've been doing something like this since @dmayhem93 joined as RL lead and it's the single biggest efficiency gain you can add to an rl infra right now if you don't already use it.

We're going to have apps that take a minute to load in 2100. Programming peaked before I was born.

Change my mind: Floating point should not be the default number representation in high-level programming languages.

Floating point is useful because it's so fast. But the whole point of high-level languages is to trade performance for saving programmer time. A python program is never going to be as fast as one in C, but it will be a lot easier to write.

Floating point is already a tradeoff in this direction; integer arithmetic is even faster, but less useful, so floating point was a reasonable compromise... 80 years ago. Nowadays, hardware improvements have made performance a non-issue for a huge number of cases. I wouldn't be surprised if more than half of today's actively-developed software could take a 10x speed penalty on its arithmetic operations without breaking. (Just look at how slow most modern apps and webpages are; performance is clearly not a priority for most large companies.)

Floating point has severe drawbacks! The fact that you can't use it for exact math with non-integers is something most programmers have gotten used to, but in reality it's an absolutely *massive* cost. Millions of person-hours have been wasted on learning, debugging, and implementing workarounds for floating point's quirks; all of which would be unnecessary if programming languages supported arbitrary-precision arithmetic out-of-the-box.

Indeed, many programming languages already have separate types for integers vs. floating point. I don't think this division makes sense. The default number type in any high-level language should be one that roughly matches how numbers actually work in the real world, with floating point as a secondary alternative for performance-critical cases.
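To make the thread's complaint concrete, here is a minimal sketch using only Python's standard library. It is an illustration added here, not part of the original thread: it compares the default binary floats against the exact `fractions.Fraction` and `decimal.Decimal` types that Python already ships as opt-in alternatives. The variable names are made up for this example.

```python
from fractions import Fraction
from decimal import Decimal

# Binary floating point cannot represent 0.1, 0.2, or 0.3 exactly,
# so the rounded sum does not compare equal to the rounded literal.
float_sum_matches = (0.1 + 0.2 == 0.3)

# Exact rational arithmetic: 1/10 + 2/10 is exactly 3/10.
fraction_sum_matches = (Fraction(1, 10) + Fraction(2, 10) == Fraction(3, 10))

# Decimal values built from strings keep their base-10 digits exactly.
decimal_sum_matches = (Decimal("0.1") + Decimal("0.2") == Decimal("0.3"))

print(float_sum_matches, fraction_sum_matches, decimal_sum_matches)
# prints: False True True
```

The exact types are slower than hardware floats, which is the tradeoff the thread argues most high-level code could afford.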

For trying out new ideas, PufferLib is the only thing out there that is fast and hackable. I've ported and run several hundred experiments on MinGRU and Mamba in the last 48 hours alone. Shipping new tools to help with this in the next version, too!

Point - experimental work represents multiple months of the dev effort on PufferLib. Probably around 1/3, not 10%. The puffer 2.0->3.0 capabilities leap was mostly algo-side. It's just that the sexy stuff usually doesn't work as written on paper.

Aight let's talk about frameworks, libraries, RL, and why I probably don't like your favorite RL codebase. Yes, including that one.

The unusual thing about RL is that the algorithm is the easy part. GRPO is a single-line equation on some logprobs. If you have the data, computing the loss is trivial, and then presumably you're using it with a backprop library of your choice.
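As a rough illustration of how small the loss really is, here is a simplified sketch of a GRPO-style objective in plain Python. Several things here are assumptions for illustration and not taken from the thread: one sequence-level logprob per sample (real implementations typically work per token), a clip range of 0.2, no KL penalty term, and the function names `grpo_advantages` and `grpo_loss`.

```python
import math
from statistics import mean, pstdev

def grpo_advantages(rewards, eps=1e-8):
    """Group-relative advantages: standardize rewards within one group of samples."""
    mu = mean(rewards)
    sigma = pstdev(rewards)
    return [(r - mu) / (sigma + eps) for r in rewards]

def grpo_loss(new_logprobs, old_logprobs, rewards, clip=0.2):
    """Clipped surrogate loss over one group (simplified: no KL penalty)."""
    advantages = grpo_advantages(rewards)
    terms = []
    for new_lp, old_lp, adv in zip(new_logprobs, old_logprobs, advantages):
        ratio = math.exp(new_lp - old_lp)                   # importance ratio
        clipped = min(max(ratio, 1.0 - clip), 1.0 + clip)   # keep ratio near 1
        terms.append(min(ratio * adv, clipped * adv))       # pessimistic bound
    return -mean(terms)                                     # minimize the negative

# Four completions for one prompt, two of them rewarded.
rewards = [1.0, 0.0, 1.0, 0.0]
logprobs = [-2.0, -3.0, -1.5, -2.5]
advantages = grpo_advantages(rewards)
loss = grpo_loss(logprobs, logprobs, rewards)  # same policy, so every ratio is 1
```

Everything that feeds these two functions (sampling the completions, scoring them, keeping the old logprobs around) is the part the thread calls the hard one.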

But that's the problem -- getting the data. It's a pain in the ass. In regular RL you have to do rollouts, perhaps truncate some episodes, and handle the ends accordingly. If you don't want to be a snail, you'll want to vectorize the environment and adapt the algorithm for that. If you want to do an LLM, you need to do all the nonsense that makes LLMs fit in memory. You need to be careful about your prompts, mask out the right parts for the loss. You need a decent generation engine (vLLM), which then makes it a pain to update the weights. If you want to do multi-agent multi-turn LLM RL, might as well do commit sudoku.
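One example of "handling the ends accordingly", sketched in plain Python under stated assumptions: when an episode truly terminates there is no future value, but when it is merely truncated (say, by a time limit) the return target should bootstrap from a value estimate. The function name, the flag layout, and the `next_values` list (standing in for a critic's output) are hypothetical and not taken from any particular library.

```python
def discounted_returns(rewards, terminated, truncated, next_values, gamma=0.99):
    """Per-step return targets for one rollout buffer that may span episodes.

    terminated[t]: the episode really ended at step t, so future value is 0.
    truncated[t]:  the episode was cut off at step t, so bootstrap from
                   next_values[t], the value estimate of the state after step t.
    """
    returns = [0.0] * len(rewards)
    running = next_values[-1]  # buffer may end mid-episode: bootstrap there too
    for t in reversed(range(len(rewards))):
        if terminated[t]:
            running = 0.0
        elif truncated[t]:
            running = next_values[t]
        returns[t] = rewards[t] + gamma * running
        running = returns[t]
    return returns

# Two episodes in one buffer: the first terminates at step 1,
# the second is truncated at step 2 with an estimated value of 10.
targets = discounted_returns(
    rewards=[1.0, 1.0, 1.0],
    terminated=[False, True, False],
    truncated=[False, False, True],
    next_values=[0.0, 0.0, 10.0],
    gamma=0.5,
)
print(targets)  # prints: [1.5, 1.0, 6.0]
```

Treating the truncated step as a termination would give a target of 1.0 there instead of 6.0, which is the kind of quiet error that makes rollout code tedious to get right.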

While we have many disagreements on just about anything RL-related, I think @jsuarez5341's Pufferlib exemplifies this point beautifully. It's without a doubt incredible at what it does - training RL algos on simulated environments very very quickly.

But most of its novelty is pure infra. The core algorithms are largely the same as they've been for years, and I'm willing to bet they represent less than 10% of the overall engineering effort.

Naturally, this has implications for the code you need to write to do anything beyond running the built-in examples. What I find time and time again is that for many sufficiently nontrivial (read: interesting) research problems, it takes a similar amount of time to (a) write the thing from scratch/from simple primitives, or (b) adapt an existing framework to accommodate crazy ideas.

In the former, you focus on writing the actual logic. In the latter, you wrangle the framework to allow you to add the logic. I know what I like better.

All of this is because the algorithm is the easy part.

The infra is the pain in the ass. So whenever you're in a position to choose - use the tools that simplify infra, and write the training loop yourself. Don't build frameworks, build libraries. You'll thank yourself later.

Big shout out to my Master's supervisor from back in the day, who was the first one to tell me to drop rllib and just write PPO myself in PyTorch. And to @hallerite for inspiring me to finally write up this rant. I might write a proper effortpost with examples at some point in the future if the people demand it.