Meet BFM-Zero: A Promptable Humanoid Behavioral Foundation Model w/ Unsupervised RL 👉 lecar-lab.github.io/BFM-Zero…

🧩ONE latent space for ALL tasks

⚡Zero-shot goal reaching, tracking, and reward optimization (any reward at test time), from ONE policy

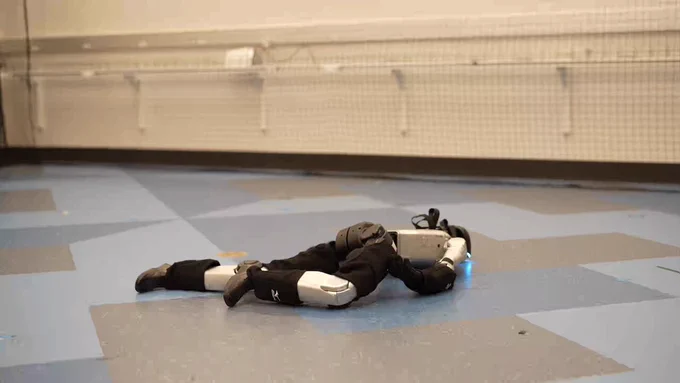

🤖Natural recovery & transition

Nov 7, 2025 · 3:32 PM UTC

How it works 👉 lecar-lab.github.io/BFM-Zero…

🧠 Unsupervised RL — no specific rewards in training

🔁 Forward–Backward Representation — builds a dynamics-aware space

🎯 Zero-shot Inference — reach goals, track motions, or optimize any reward function at test time without retraining

Goal Reaching: z = B(s_g) (🤔B can be viewed as "inverse dynamics": it maps a desired state to the latent skill needed to reach it) lecar-lab.github.io/BFM-Zero…
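A minimal sketch of the goal-reaching prompt, to make z = B(s_g) concrete. This is not the BFM-Zero code: `backward_embed` is a hypothetical toy stand-in (random linear map + tanh) for the learned backward map B, and the dimensions and names are assumptions for illustration only.

```python
import numpy as np

rng = np.random.default_rng(0)
STATE_DIM, LATENT_DIM = 8, 4  # assumed toy sizes, not the real model's

# Hypothetical stand-in for the learned backward map B (NOT the trained network).
W = rng.normal(size=(LATENT_DIM, STATE_DIM))

def backward_embed(states):
    """Toy B: maps states of shape (..., STATE_DIM) to latents (..., LATENT_DIM)."""
    return np.tanh(states @ W.T)

def goal_prompt(goal_state):
    """Goal reaching: the prompt is just the embedding of the goal, z = B(s_g)."""
    return backward_embed(goal_state)

s_g = rng.normal(size=STATE_DIM)   # a desired robot state (random placeholder)
z_goal = goal_prompt(s_g)          # latent skill that would be fed to the policy
```

In a real system, z_goal would condition the single pretrained policy; any rescaling of z before that step is left out here.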

See how natural it can be 👇:

Reward Optimization: z = \sum_i B(s_i)r(s_i) lecar-lab.github.io/BFM-Zero…

We do not use any labeled rewards in training!

At test time, users can prompt with ANY type of reward defined on the robot states, and the policy outputs the optimized skill zero-shot, without retraining.
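A rough sketch of how a reward prompt z = \sum_i B(s_i)r(s_i) could be computed from a batch of sampled states. Everything here is assumed for illustration: `backward_embed` is a toy stand-in for the learned B, `example_reward` is a made-up reward, and the states are random placeholders rather than real robot data.

```python
import numpy as np

rng = np.random.default_rng(0)
STATE_DIM, LATENT_DIM = 8, 4  # assumed toy sizes

# Hypothetical stand-in for the learned backward map B (NOT the trained network).
W = rng.normal(size=(LATENT_DIM, STATE_DIM))

def backward_embed(states):
    """Toy B: maps states of shape (..., STATE_DIM) to latents (..., LATENT_DIM)."""
    return np.tanh(states @ W.T)

def reward_prompt(states, reward_fn):
    """Reward optimization: z = sum_i B(s_i) * r(s_i) over the sampled states."""
    rewards = reward_fn(states)               # shape (N,)
    return rewards @ backward_embed(states)   # shape (LATENT_DIM,)

def example_reward(states):
    """Made-up reward: prefer states whose first coordinate is large."""
    return states[:, 0]

samples = rng.normal(size=(256, STATE_DIM))   # placeholder for sampled robot states
z_reward = reward_prompt(samples, example_reward)
```

Swapping in a different `reward_fn` changes z without touching the policy, which is the sense in which the reward is a test-time prompt.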

Motion Tracking: z = \sum_n \lambda_n B(s_{t+n}) (this can be viewed as a "moving horizon", MPC-style version of goal reaching) lecar-lab.github.io/BFM-Zero…
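A small sketch of the tracking prompt z = \sum_n \lambda_n B(s_{t+n}) as a sliding window over a reference motion. The assumptions are loud ones: `backward_embed` is a toy stand-in for the learned B, the window starts at n = 1, and the weights \lambda_n are taken to be normalized geometric decay. The thread does not specify either choice.

```python
import numpy as np

rng = np.random.default_rng(0)
STATE_DIM, LATENT_DIM = 8, 4  # assumed toy sizes

# Hypothetical stand-in for the learned backward map B (NOT the trained network).
W = rng.normal(size=(LATENT_DIM, STATE_DIM))

def backward_embed(states):
    """Toy B: maps states of shape (..., STATE_DIM) to latents (..., LATENT_DIM)."""
    return np.tanh(states @ W.T)

def tracking_prompt(reference, t, horizon=5, decay=0.9):
    """Motion tracking: z = sum_n lambda_n * B(s_{t+n}) for n = 1..horizon.

    lambda_n is ASSUMED to be geometric decay normalized to sum to 1.
    The window is truncated near the end of the reference motion.
    """
    window = reference[t + 1 : t + 1 + horizon]
    if len(window) == 0:
        raise ValueError("no future reference frames left after step t")
    weights = decay ** np.arange(len(window))
    weights /= weights.sum()
    return weights @ backward_embed(window)   # shape (LATENT_DIM,)

reference_motion = rng.normal(size=(100, STATE_DIM))  # placeholder motion clip
z_track = tracking_prompt(reference_motion, t=10)
```

Recomputing z at every control step slides the window forward; with `horizon=1` this reduces to goal reaching toward the next reference frame.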

Diverse motion tracking + natural & smooth recovery even under unexpected falls🫣

Deeply grateful to @zhengyiluo, a dream collaboration😍; to the Motivo (metamotivo.metademolab.com/) team at @AIatMeta for making this possible; to @TongheZhang01 @JimmyDai218776 @ElijahGalahad for the countless late nights; and to @GuanyaShi @teopir for the invaluable advice.