I'm in Montréal to present new work on XAI for RL agents using world models at the IJCAI Workshop on XAI on Aug 17.

arxiv.org/abs/2505.08073

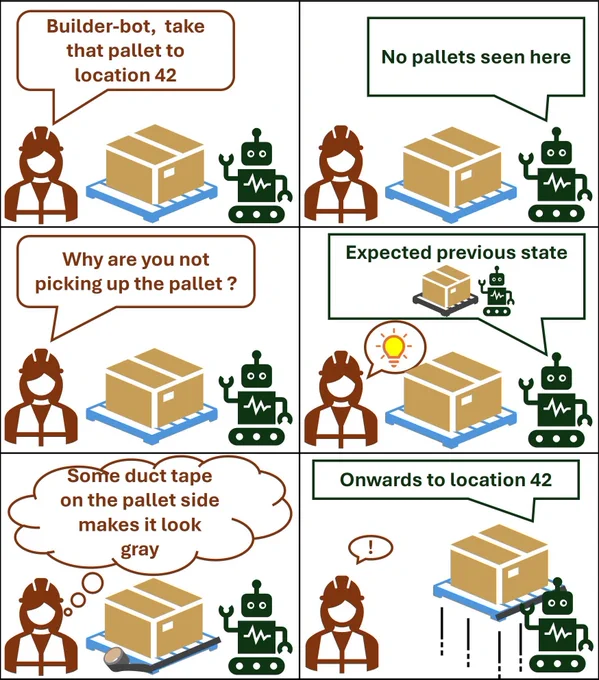

When an agent doesn't perform as expected, we run the world model backwards to generate counterfactual states from which the agent would have met expectations.

Aug 16, 2025 · 6:47 PM UTC
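To make the counterfactual idea concrete, here is a toy sketch in Python. It is not the method from the paper. The corridor environment and every name in it (`N`, `GOAL`, `policy`, `forward`, `backward`, `counterfactuals`, `explain`) are hypothetical. The exact inverse `backward` stands in for a learned reverse world model. The sketch starts from states where the expectation is already met (the agent is at the goal) and steps backwards, keeping only steps the agent's own policy would take. It then reports which environment changes at the agent's actual cell would have led to success.

```python
# Toy sketch only: a hypothetical 1-D corridor, not the paper's setup.
N = 5         # cells 0..4 (assumption)
GOAL = N - 1  # expectation: the agent reaches cell 4

# A state is (agent_pos, wall_pos); wall_pos is a cell index or None.

def policy(state):
    """Hypothetical agent: step right unless the next cell holds a wall."""
    pos, wall = state
    return "wait" if pos + 1 == wall else "right"

def forward(state, action):
    """Toy deterministic world model: s' = f(s, a)."""
    pos, wall = state
    if action == "right" and pos + 1 < N and pos + 1 != wall:
        return (pos + 1, wall)
    return state

def backward(state):
    """Stand-in for a reverse world model: every (s, a) with forward(s, a) == state."""
    pos, wall = state
    preds = []
    for prev_pos, action in [(pos - 1, "right"), (pos, "wait"), (pos, "right")]:
        if 0 <= prev_pos < N and prev_pos != wall:
            prev = (prev_pos, wall)
            if forward(prev, action) == state:
                preds.append((prev, action))
    return preds

def counterfactuals(horizon):
    """Run the model backwards from goal states, keeping only on-policy steps."""
    walls = [None] + [w for w in range(N) if w != GOAL]
    frontier = {(GOAL, w) for w in walls}
    found = set(frontier)
    for _ in range(horizon):
        nxt = set()
        for s in frontier:
            for prev, action in backward(s):
                # Keep the step only if the agent would actually choose it.
                if policy(prev) == action and prev not in found:
                    nxt.add(prev)
        found |= nxt
        frontier = nxt
    return found

def explain(actual, horizon=N):
    """Wall placements (None = no wall) under which the agent, from its
    actual cell, would have reached the goal."""
    pos, wall = actual
    edits = {w for (p, w) in counterfactuals(horizon) if p == pos and w != wall}
    return sorted(edits, key=lambda w: -1 if w is None else w)

print(explain((0, 2)))  # [None]
```

Here the agent starts in cell 0 with a wall in cell 2 and gets stuck. The only counterfactual with the agent still in cell 0 has no wall at all, which a user can read as "clear the path". That is a tiny instance of steering an agent by changing its environment rather than its policy.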

Explaining RL agent behavior is wickedly hard. It's even harder to give non-technical end users *actionable* insights.

We show that our explanations are actionable, in that people can recognize what they need to do to get the desired behavior from an agent.

We also show that telling users what they need to do to get the desired behavior helps them *control* the agent's behavior by manipulating its environment.

You can "program" an agent by manipulating its environment in just the right way.