RIP fine-tuning ☠️

This new Stanford paper just killed it.

It’s called “Agentic Context Engineering” (ACE), and it shows you can make models smarter without touching a single weight.

Instead of retraining, ACE evolves the context itself.

The model writes, reflects, and edits its own prompt over and over until it becomes a self-improving system.

Think of it like the model keeping a growing notebook of what works.

Each failure becomes a strategy. Each success becomes a rule.
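Here’s roughly what that loop could look like in code. This is a minimal sketch, not the paper’s implementation: `Playbook`, `evolve_context`, and the `toy_*` functions are hypothetical names, and the toy functions are stand-ins for real model calls and real environment feedback.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Optional


@dataclass
class Playbook:
    """The 'growing notebook': lessons that get prepended to every prompt."""
    entries: List[str] = field(default_factory=list)

    def add(self, lesson: str) -> None:
        # Append-only: new lessons are added, old ones are never rewritten.
        # Exact duplicates are skipped so the notebook does not bloat.
        if lesson and lesson not in self.entries:
            self.entries.append(lesson)

    def render(self) -> str:
        return "\n".join(f"- {entry}" for entry in self.entries)


def evolve_context(
    tasks: List[str],
    generate: Callable[[str], str],
    check: Callable[[str, str], bool],
    reflect: Callable[[str, str, bool], str],
    playbook: Optional[Playbook] = None,
) -> Playbook:
    """Write, reflect, edit: update the context after each task, never the weights."""
    if playbook is None:
        playbook = Playbook()
    for task in tasks:
        prompt = f"Playbook:\n{playbook.render()}\n\nTask: {task}"
        answer = generate(prompt)                   # the model attempts the task
        succeeded = check(task, answer)             # feedback signal, not a label
        lesson = reflect(task, answer, succeeded)   # turn the outcome into a lesson
        playbook.add(lesson)                        # edit the context
    return playbook


# Toy stand-ins (assumptions for illustration only; a real system would call an LLM).
def toy_generate(prompt: str) -> str:
    # Pretend the model only gets it right once the playbook tells it to double-check.
    return "4" if "double-check arithmetic" in prompt else "5"


def toy_check(task: str, answer: str) -> bool:
    return answer == "4"


def toy_reflect(task: str, answer: str, succeeded: bool) -> str:
    if succeeded:
        return "Rule: double-checking arithmetic worked, keep doing it"
    return "Strategy: double-check arithmetic before answering"


if __name__ == "__main__":
    notebook = evolve_context(["2+2", "2+2"], toy_generate, toy_check, toy_reflect)
    print(notebook.render())
```

In the toy run, the first attempt fails and adds a strategy, and the second attempt sees that strategy in its prompt, succeeds, and adds a rule. The model itself never changes. Only the text in front of it does.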

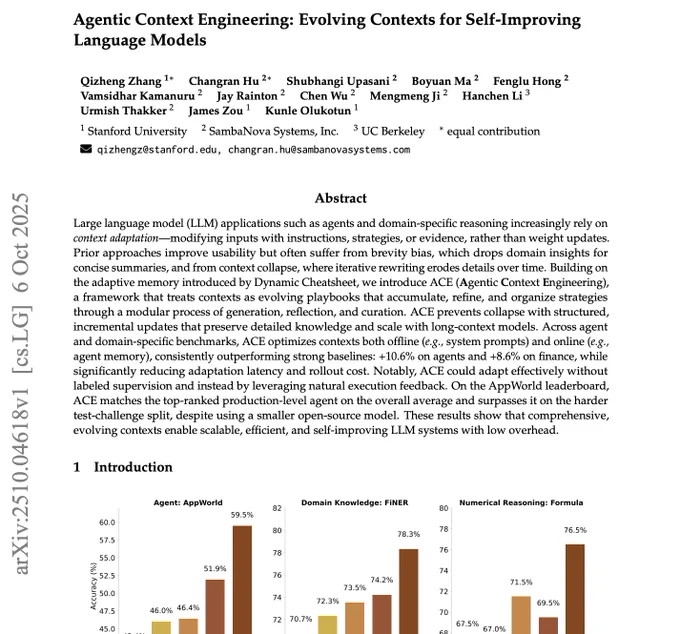

The results are absurd:

+10.6% better than GPT-4–powered agents on AppWorld.

+8.6% on finance reasoning.

86.9% lower cost and latency.

No labels. Just feedback.

Everyone’s been obsessed with “short, clean” prompts.

ACE flips that. It builds long, detailed, evolving playbooks that never forget. And it works because LLMs don’t want simplicity, they want *context density*.

If this scales, the next generation of AI won’t be “fine-tuned.”

It’ll be self-tuned.

We’re entering the era of living prompts.

Context engineering and model fine-tuning address distinct challenges and operate under different sets of constraints.

Oct 9, 2025 · 7:48 PM UTC