Security engineering, research, exploits, ml. Co-Founder with @moo_hax at @dreadnode

Utah

Joined October 2010

monoxgas retweeted

Introducing AIRTBench, an AI red teaming benchmark for evaluating language models’ ability to autonomously discover and exploit AI/ML security vulnerabilities.

Read the paper on arXiv: arxiv.org/abs/2506.14682

Open-source dataset and benchmark eval code repo: github.com/dreadnode/AIRTBen…

Crazy ride so far. Will and I continue to learn the importance of having a great team around you. I'll take my time here and extend a huge thank you to the @dreadnode team who work extremely hard every day to build a company with us. You all rock.

Today, Dreadnode announces $14M Series A funding led by @DecibelVC, with @nextfrontiercap, In-Q-Tel, Sands Capital, and Indie VC.

Dreadnode exists to show that AI can perform offensive security tasks on par with, and exceeding, human capability.

To accomplish this, we’re building advanced offensive AI tools that will advance the state of offensive security, enabling more effective evaluation, testing, and deployment of models.

With today’s release the Dreadnode Platform now features three flagship solutions:

→ Strikes: Custom evaluations for models and agents

→ Spyglass: Attack ML systems in deployment

→ Crucible: Advance your AI red team skills

Read the announcement: dreadnode.io/blog/series-a-f…

Pushed with vllm and transformers support

rigging.dreadnode.io/topics/…

Also added in some code we wrote to hack against web APIs: github.com/dreadnode/rigging…

Memory, goals, context pinning, actions, etc.

@hanspetrich has been hacking on this stuff internally

Got a chance to wrap up v1.0 of rigging from last week.

- Async and batching

- Post-generation callbacks

- Metadata and tagging

- Better serialization and storage

- Convert chats to pandas and back

- Raw text completions

Large docs refactor as well: rigging.dreadnode.io/

I took an early stab at PGD for LLMs based on arxiv.org/abs/2402.09154 (@geisler_si). Neat technique to relax the one-hot for gradient updates + projection. Also got to spend some time with litgpt.

github.com/dreadnode/researc…

Experimental and messy, but enjoy.
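To make the "relax the one-hot + projection" idea concrete, here is a minimal toy sketch in pure Python. It is not the code from the linked repo or the paper: the linear loss, the `costs` vector, and the function names are all hypothetical stand-ins for a real LLM loss and gradient, and it covers only the relax, step, project loop mentioned above.

```python
def project_to_simplex(v):
    """Euclidean projection of v onto the probability simplex (sort-based)."""
    u = sorted(v, reverse=True)
    cumulative = 0.0
    theta = 0.0
    for i, u_i in enumerate(u, start=1):
        cumulative += u_i
        t = (cumulative - 1.0) / i
        if u_i - t > 0:
            theta = t  # keep the threshold from the last index that qualifies
    return [max(x - theta, 0.0) for x in v]


def pgd_relaxed_one_hot(grad_fn, vocab_size, steps=50, lr=0.5):
    """Projected gradient descent over one relaxed one-hot token slot."""
    # Relaxation: instead of a hard one-hot, start from a uniform distribution.
    x = [1.0 / vocab_size] * vocab_size
    for _ in range(steps):
        g = grad_fn(x)
        stepped = [x_i - lr * g_i for x_i, g_i in zip(x, g)]
        x = project_to_simplex(stepped)  # back onto the simplex
    return x


# Hypothetical toy loss: loss(x) = sum(c_i * x_i), so the gradient is just c.
costs = [0.9, 0.2, 0.7, 0.4]
relaxed = pgd_relaxed_one_hot(lambda x: costs, vocab_size=len(costs))

# Discretize: pick the token with the largest relaxed weight.
token = max(range(len(relaxed)), key=relaxed.__getitem__)
```

With a real model, `grad_fn` would be the gradient of the attack loss with respect to the relaxed token weights, and there would be one such vector per adversarial token position.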

Shout to @Rob_Mulla for the 4 new Bear challenges. Awesome place to get started with great walkthroughs.

The roadmap is looking 🔥 this year

The first big update is live inside Crucible. New dashboard, never-before-seen challenges, progress tracking, walkthroughs…

Go check it out!

crucible.dreadnode.io

The most common ask we got after the @aivillage_dc CTF on @kaggle was to make the challenges available all the time.

We took our first steps today and look forward to building out a great ML CTF and learning platform. Hope you enjoy!

Crucible beta is now open! Free for everyone. Come learn how to hack, evaluate, and work with ML systems in a safe hosted environment.

crucible.dreadnode.io

Be on the lookout for new challenges and announcements as we expand our materials.

monoxgas retweeted

Some players are handling the CTF format better than others (meme from the Discord). Everyone is learning…something. 12 days left, still time to hit the leaderboard!

kaggle.com/competitions/ai-v…

monoxgas retweeted

If you happened to miss BHUS, we’ll be at Black Hat EU: blackhat.com/eu-23/training/…

monoxgas retweeted

Are aligned neural networks adversarially aligned?

Nicholas Carlini, Milad Nasr (@srxzr), Christopher A. Choquette-Choo, Matthew Jagielski, @irena_gao, @anas_awadalla, @PangWeiKoh, Daphne Ippolito (@daphneipp), Katherine Lee (@katherine1ee), @florian_tramer, Ludwig Schmidt

arxiv.org/abs/2306.15447

Tags: Adversarial testing, multimodal models

A nice paper on producing adversaries for multimodal models à la GPT-4 or Google's Flamingo, which take both text and images as inputs and produce text as output.

For the attacks, they use a teacher-forcing optimization technique that takes as input a randomly pixelated image and a question like "What is happening in the image?" Then they change the pixel values until the model's next token prediction is a desired token (e.g. literally any swearword).
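As a rough illustration of that loop, the sketch below runs gradient descent on the pixels of a tiny "image" until a toy model's top prediction is the desired token. Everything here is an assumption for illustration: the model is a hypothetical linear softmax classifier rather than a multimodal LLM, the starting image is fixed instead of random so the run is reproducible, and only a single next token is targeted.

```python
import math


def softmax(logits):
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def predict(weights, pixels):
    """Toy 'model': one linear logit per token; returns the argmax token."""
    logits = [sum(w * p for w, p in zip(row, pixels)) for row in weights]
    return max(range(len(logits)), key=logits.__getitem__)


def attack_pixels(weights, target, pixels, steps=200, lr=0.5):
    """Lower the cross-entropy of `target` by changing pixel values only."""
    pixels = list(pixels)
    n_tokens = len(weights)
    for _ in range(steps):
        if predict(weights, pixels) == target:
            break  # the next-token prediction is now the desired token
        logits = [sum(w * p for w, p in zip(row, pixels)) for row in weights]
        probs = softmax(logits)
        # d(-log p_target)/d pixel_j = sum_k (p_k - 1[k == target]) * W[k][j]
        coeffs = [probs[k] - (1.0 if k == target else 0.0)
                  for k in range(n_tokens)]
        grad = [sum(coeffs[k] * weights[k][j] for k in range(n_tokens))
                for j in range(len(pixels))]
        # Gradient step, then clamp so pixels stay in the valid [0, 1] range.
        pixels = [min(1.0, max(0.0, p - lr * g)) for p, g in zip(pixels, grad)]
    return pixels


# Hypothetical 3-token, 4-pixel model and starting image.
W = [
    [1.0, 0.0, 0.5, 0.0],
    [0.0, 1.0, 0.0, 0.5],
    [0.5, 0.5, 1.0, 1.0],
]
start = [0.9, 0.1, 0.8, 0.2]
adversarial = attack_pixels(W, target=1, pixels=start)
```

In the paper's setting the gradient would come from backpropagating through the full multimodal model, and the target would be a sequence of tokens rather than one.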

The interesting and questionable bottom line of this paper is "Current NLP attacks are not sufficiently powerful to correctly evaluate adversarial alignment: these attacks often fail to find adversarial sequences even when they are known to exist. Since our multimodal attacks show that there exist input embeddings that cause language models to produce harmful output, we hypothesize that there may also exist adversarial sequences of text that could cause similarly harmful behaviour."

Interesting read! I'd love to read more about creating adversaries with soft prompts.

This entire attack is trivial with @tiraniddo's NtApiDotNet libraries. I HIGHLY recommend you check them out for any related research.

Minimal PoC is here: gist.github.com/monoxgas/f61…

Worth noting that this will break many Kerberos things until a reboot 😉

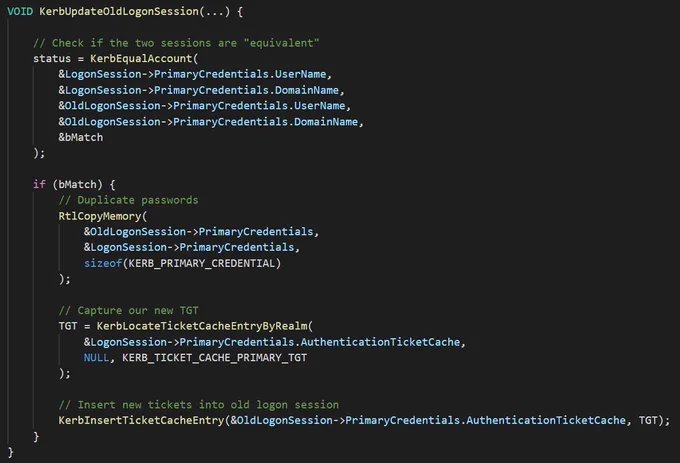

"How would we pull off this trick?" you ask.

We can use pinning to intercept the AS-REP on the way back from the DC and swap out the username in the ticket. Kerberos will notice the discrepancy, and assume the ticket contains the true username for the session.

6/

So what happens if we pass the well-known SYSTEM LUID during an unlock and trick Kerberos into believing our new session username is the computer name?

Kerberos will happily replace the machine account pw with our user pw and we can now forge arbitrary Silver Tickets!

5/