Compiling in real-time, the race towards AGI. The Largest Show on X for AI. 🗞️ Get my daily AI analysis newsletter to your email 👉 rohan-paul.com

Ex Inv Banking (Deutsche)

Joined June 2014

- Tweets 59,306

- Following 8,379

- Followers 108,573

- Likes 52,647

Somebody wrote a prompt that's supposed to reduce ChatGPT hallucinations.

A Reality Filter

Has a Google Gemini and Claude version too.

It’s a directive scaffold that makes the models more likely to admit when they don’t know.

"Reduce hallucinations mechanically, through repeated instruction patterns, not by teaching them “truth.”"

The Reality Filter here is a permanent directive with versions for GPT-4, Gemini Pro, Claude, and a universal one. It requires labeling any content that is not directly verifiable with tags like [Unverified] or [Inference], and it mandates “I cannot verify this” when the model lacks data.
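A minimal sketch of how a directive like this could be attached to a chat request and spot-checked afterwards. The directive text is a hypothetical, shortened paraphrase (not the actual Reality Filter prompt), `build_messages` and `audit_reply` are illustrative helpers, and the role/content message shape is just the common chat-API convention.

```python
import re

# Hypothetical, abbreviated paraphrase; the real Reality Filter prompt is longer.
REALITY_FILTER = (
    "Label any content that is not directly verifiable with [Unverified] "
    "or [Inference]. If you lack data, say: I cannot verify this."
)
LABELS = ("[Unverified]", "[Inference]")
REFUSAL = "I cannot verify this"


def build_messages(user_prompt: str) -> list[dict[str, str]]:
    """Prepend the directive as a system message ahead of every user turn."""
    return [
        {"role": "system", "content": REALITY_FILTER},
        {"role": "user", "content": user_prompt},
    ]


def audit_reply(reply: str) -> dict[str, int]:
    """Count how often each label and the refusal phrase appear in a reply."""
    counts = {label: reply.count(label) for label in LABELS}
    counts[REFUSAL] = len(re.findall(re.escape(REFUSAL), reply, flags=re.IGNORECASE))
    return counts
```

A reply with zero labels and no refusal on a question about obscure facts is a cue to double-check it; the counts say nothing about whether the labeled content is actually true.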

---

From r/PromptEngineering/

Rohan Paul retweeted

This looks fantastic: MicroFactory DevKit for Robots.

The big idea is to standardize the physical workcell, then scale imitation learning across identical copies.

A single, tightly controlled, exactly reproduced robot hardware cell removes the environmental randomness that usually breaks imitation learning.

With the same fixtures, sensors, lighting, and calibration, demonstrations gathered in 1 cell can be reused, and the trained policy transfers to every cloned cell with little extra data.

It sharply reduces domain shift and makes data and policies reusable across sites.

Rohan Paul retweeted

The U.S. House Select Committee released a bombshell report titled “Selling the Forges of the Future” on October 7, 2025, detailing how China is stockpiling semiconductor manufacturing equipment and tools from leading Western companies.

China spent $38B on these tools in 2024, about 39% of the major makers’ total sales.

The report covers the machines that build chips, such as lithography (pattern-printing) tools, etchers, coaters, cleaners, testers, and repair systems.

The committee gathered sales and license data from 5 major tool makers and mapped where the machines went.
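Taking the two figures at face value, and assuming the 39% is a share of the same makers’ combined 2024 sales, they imply the overall size of that market:

$$
\text{combined 2024 sales} \approx \frac{\$38\,\text{B}}{0.39} \approx \$97\,\text{B}
$$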

Those companies are Applied Materials, ASML, KLA, Lam Research, and Tokyo Electron.

The report says many Chinese factories still depend on foreign tools, so they import a lot while trying to build local versions.

China cannot buy extreme ultraviolet (EUV) machines, so buyers shifted to advanced deep ultraviolet (DUV) immersion units such as the NXT:1980i, adding more patterning steps to reach around 7nm at lower yield.

Sales data shows China became a major buyer of those deep ultraviolet units while service teams kept older systems productive.

The report also says a big share of sales in China went to state-owned firms, so buying and expansion link back to the government.

A good doctor, imo. 😀

He is probably taking a second opinion from AI.

Just hoping he is using ChatGPT Pro at least. 😄

---

From r/ChatGPT

"All AI activity in all global data centers in 2024 emitted about 2% as much as cement production. If we could optimize cement production just 2% more, this would do as much good as stopping all AI services globally."

Good reminder.
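The quote rests on one line of arithmetic. Writing $E$ for annual emissions (notation introduced here) and reading "optimize 2% more" as a 2% cut in cement emissions:

$$
E_{\text{AI}} \approx 0.02\,E_{\text{cement}}
\quad\Longrightarrow\quad
\underbrace{0.02\,E_{\text{cement}}}_{\text{saved by a 2\% cement cut}} \approx E_{\text{AI}}
$$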

"Using ChatGPT is not bad for the environment - a cheat sheet"

A viral blog post with lots of graphs.

More articles are now written by AI than humans.

New research by Graphite.

Even though these AI-made pieces dominate the web, their research shows that they barely show up in Google results or in ChatGPT responses.

---

graphite.io/five-percent/more-articles-are-now-created-by-ai-than-humans

Rohan Paul retweeted

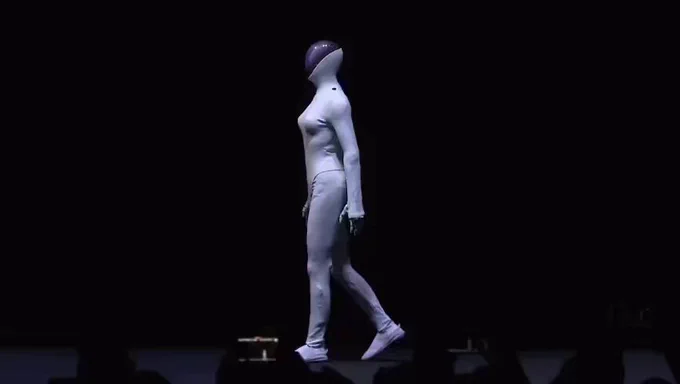

Wow, this is just so incredibly cool 👏👏

You thought it was a person in a suit because the motion finally hits human cadence.

That takes clean torque control, low-jerk trajectories, and stable center-of-mass tracking.
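For a feel of what "low-jerk" means, here is the classic minimum-jerk profile from the motor-control literature (Flash and Hogan) for a single joint moving between two positions. It is a textbook illustration, not XPeng's controller; `min_jerk` and `sample` are hypothetical helper names.

```python
def min_jerk(x0: float, xf: float, duration: float, t: float) -> float:
    """Position at time t on the minimum-jerk path from x0 to xf."""
    tau = min(max(t / duration, 0.0), 1.0)  # normalized time, clamped to [0, 1]
    # Quintic blend 0 -> 1 with zero velocity and zero acceleration at both ends,
    # which is what minimizes the integral of squared jerk for a point-to-point move.
    s = 10 * tau**3 - 15 * tau**4 + 6 * tau**5
    return x0 + (xf - x0) * s


def sample(x0: float, xf: float, duration: float, n: int) -> list[float]:
    """n evenly spaced samples of the path, including both endpoints."""
    return [min_jerk(x0, xf, duration, duration * i / (n - 1)) for i in range(n)]


path = sample(0.0, 1.0, 1.0, 11)  # unit move in 1 s, sampled every 0.1 s
```

The samples bunch up near the start and end (gentle acceleration and braking) and spread out mid-move, which is the smooth ease-in/ease-out that reads as natural rather than robotic.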

Let this stand as the final proof: the robot that mastered the catwalk is built by a Chinese startup.

The journey continues, and we will advance, step by step.

@XPengMotors

Rohan Paul retweeted

Tesla Optimus pilot production line in Fremont.

Elon Musk has said the pilot line is aimed at up to 1 million units per year.

Internally, the goal is $20k cost of goods per robot at scale, which would undercut many industrial humanoid efforts if achieved.

New @AIatMeta paper shows a way to train agents using synthetic experiences instead of real rollouts.

It makes RL practical when real websites are slow, noisy, or hard to reset.

Policies pretrained this way reached 40% higher performance in real environments while using under 10% of the real-environment data.

The core problem: reinforcement learning needs many slow interactions and suffers from unstable rewards.

DreamGym builds a text-based experience model that predicts the next state and a reward.

It uses step-by-step reasoning, the task goal, and similar past trajectories to keep transitions consistent.

A replay buffer starts with offline demos and keeps adding new synthetic rollouts that match the current policy.

A task generator picks tasks with mixed success and makes harder variations to teach missing skills.

The agent then trains with PPO or GRPO using dense and consistent signals.
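A toy sketch of how these pieces fit together, under heavy assumptions: the LLM-based experience model is replaced by a hypothetical rule (`experience_model`), "mixed success" is scored as p * (1 - p) (an assumed heuristic, not necessarily the paper's criterion), `harder_variant` merely tags a task name, and the PPO/GRPO update itself is omitted.

```python
from dataclasses import dataclass, field


@dataclass
class Transition:
    task: str
    state: str
    action: str
    next_state: str
    reward: float


@dataclass
class ReplayBuffer:
    """Seeded with offline demos; synthetic rollouts are appended as the policy changes."""
    items: list[Transition] = field(default_factory=list)
    capacity: int = 10_000

    def add(self, t: Transition) -> None:
        self.items.append(t)
        if len(self.items) > self.capacity:
            self.items.pop(0)  # evict the oldest transition

    def similar(self, task: str, k: int = 3) -> list[Transition]:
        """Naive retrieval of past trajectories: the k most recent for the same task."""
        return [t for t in self.items if t.task == task][-k:]


def experience_model(task, state, action, examples):
    # Hypothetical stand-in: a real experience model would prompt an LLM with the goal,
    # the current step, and the retrieved `examples`, then reason to a next state and reward.
    next_state = f"{state} -> {action}"
    reward = 1.0 if action == f"solve:{task}" else 0.0
    return next_state, reward


def pick_tasks(success: dict[str, float], n: int) -> list[str]:
    """Prefer tasks the agent sometimes solves: p * (1 - p) peaks at p = 0.5."""
    return sorted(success, key=lambda t: success[t] * (1.0 - success[t]), reverse=True)[:n]


def harder_variant(task: str) -> str:
    return f"{task}+hard"  # hypothetical; the paper's generator produces real task variations


def synthesize(policy, tasks, buffer, horizon=3):
    """Roll the policy out inside the experience model and store the synthetic transitions."""
    for task in tasks:
        state = f"start:{task}"
        for _ in range(horizon):
            action = policy(task, state)
            next_state, reward = experience_model(task, state, action, buffer.similar(task))
            buffer.add(Transition(task, state, action, next_state, reward))
            state = next_state
            if reward > 0:
                break


def toy_policy(task: str, state: str) -> str:
    return f"solve:{task}" if "search" in task else "click"  # pretends it only knows "search"


buffer = ReplayBuffer()
buffer.add(Transition("login", "start:login", "solve:login", "done", 1.0))  # offline demo
chosen = pick_tasks({"login": 1.0, "search": 0.5, "checkout": 0.2}, 2)
chosen += [harder_variant(t) for t in chosen]
synthesize(toy_policy, chosen, buffer)
# A real system would now run a PPO or GRPO update on batches drawn from `buffer`.
```

The design point the sketch tries to surface: tasks the agent always solves (p = 1) or never solves (p = 0) carry little learning signal, so the curriculum spends synthetic rollouts where outcomes are still uncertain.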

On web tasks without RL support and on RL-ready setups, it beats or matches strong baselines.

The core change is to treat environments as structured reasoning experiences, not exact simulators.

---

Paper – arxiv.org/abs/2511.03773

Paper Title: "Scaling Agent Learning via Experience Synthesis"

Rohan Paul retweeted

🦿Xpeng showed a humanoid robot called IRON whose movement looked so human that the team literally cut it open on stage to prove it is a machine.

IRON uses a bionic body with a flexible spine, synthetic muscles, and soft skin so joints and torso can twist smoothly like a person.

The system has 82 degrees of freedom in total with 22 in each hand for fine finger control.

Compute runs on 3 custom AI chips with a combined 2,250 TOPS (tera operations per second), far above typical laptop neural accelerators, so vision and motion planning can run on the robot itself.

The AI stack focuses on turning camera input directly into body movement without routing through text, which reduces lag and makes the gait look natural.

Xpeng staged the cut-open demo at AI Day in Guangzhou this week, addressing rumors that a performer was inside by exposing internal actuators, wiring, and cooling.

Company materials also mention a large physical-world model and a multi-brain control setup for dialogue, perception, and locomotion, hinting at a path from stage demos to service work.

Production is targeted for 2026, so near-term tasks will be limited, but the hardware shows a serious step toward human-scale manipulation.

Rohan Paul retweeted

🧮 Google just published DS-STAR

A data science agent that can automate a range of tasks — from statistical analysis to visualization and data wrangling.

It reads messy files, plans steps, writes and runs code, and verifies its own work, reaching state-of-the-art results on tough multi-file tasks.

It lifts accuracy to 45.2% on DABStep, 44.7% on KramaBench, and 38.5% on DA-Code, and holds first place on DABStep as of September 2025.

Earlier agents lean on clean CSVs and struggle when answers are split across JSON, markdown, and free text.

DS-STAR begins by scanning a directory and producing a plain language summary of each file’s structure and contents that becomes shared context.

A Planner proposes steps, a Coder writes Python, a Verifier checks sufficiency, a Router fixes mistakes or adds steps, and the loop stops when it passes or reaches 10 rounds.
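A minimal sketch of that control loop, assuming each agent is a plain Python callable (all names are hypothetical; in DS-STAR they are LLM calls, and `run` would execute the generated Python). The router here either asks for one more step or truncates the plan at a faulty step before the planner proposes a replacement, which is one reading of "fixes mistakes or adds steps".

```python
MAX_ROUNDS = 10  # the loop stops once the verifier passes or after 10 rounds


def ds_star_loop(question, summaries, planner, coder, run, verifier, router, max_rounds=MAX_ROUNDS):
    """Plan -> code -> execute -> verify, routing failures back into the plan."""
    plan = [planner(question, summaries, [])]  # first step, grounded in the file summaries
    result = None
    for round_no in range(1, max_rounds + 1):
        code = coder(plan, summaries)
        result = run(code)
        if verifier(question, plan, result):
            return result, round_no
        decision, bad_step = router(question, plan, result)
        if decision == "fix":
            plan = plan[:bad_step]  # drop the faulty step and everything after it
        plan = plan + [planner(question, summaries, plan)]  # propose the next step
    return result, max_rounds


# Toy agents so the loop can run end to end.
def toy_planner(question, summaries, plan):
    return f"step{len(plan) + 1}"


def toy_coder(plan, summaries):
    return "; ".join(plan)


def toy_run(code):
    return code.count(";") + 1  # "result" = number of steps executed


def toy_verifier(question, plan, result):
    return result >= 3  # pretend three steps are needed to answer


def toy_router(question, plan, result):
    return ("add", None)


summaries = {"sales.json": "keys: region, amount"}  # stand-in for Data File Analyzer output
result, rounds = ds_star_loop("total by region?", summaries, toy_planner, toy_coder, toy_run, toy_verifier, toy_router)
```

Keeping the file summaries as an explicit argument to every agent mirrors the post's point: plans and code refer to fields the analyzer actually found rather than guessed ones.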

This setup handles heterogeneous data because the summaries surface schema, types, keys, and hints, so plans refer to real fields instead of guessing.

On benchmarks the gains are steady, moving from 41.0% to 45.2% on DABStep, 39.8% to 44.7% on KramaBench, and 37.0% to 38.5% on DA-Code.

Ablations explain the lift: removing the Data File Analyzer drops hard-task accuracy on DABStep to 26.98%, and removing the Router also hurts across easy and hard tasks.

Refinement depth matches difficulty: hard tasks average 5.6 rounds, easy tasks average 3.0 rounds, and over 50% of easy tasks finish in 1 round.

The framework generalizes across base models, with a GPT-5 version doing better on easy items and a Gemini-2.5-Pro version doing better on hard items.

Net effect: DS-STAR narrows the gap between messy data and reliable answers across CSV, XLSX, JSON, markdown, and plain text.

Rohan Paul retweeted

How does Xpeng's IRON walk almost like a human?

It comes from a mix of hardware that copies human anatomy and software that copies human motion.

On the hardware side, IRON uses a bionic spine and “bone-muscle-skin” layout. The added waist and spine freedom lets the torso and hips sway together as humans do, which produces natural weight transfer and hip rotation.

XPeng’s team also added passive degrees of freedom at the toes, so each step rolls off the forefoot instead of stamping flat, which smooths mid-stance and gives that catwalk rhythm.

High joint count is the second pillar. The new generation lists 82 total degrees of freedom across the body, with dexterous hands listed at 22 DoF, so the arms swing, shoulders shrug, and fingers micro-adjust the balance just like a person.

Those small stabilizing motions are what make the whole posture read as human rather than “robot stiff.”

On the software side, IRON is trained with imitation learning on human motion data, then refined by XPeng’s VLA model, short for Vision-Language-Action.

That stack turns what the cameras see straight into whole-body actions, which avoids hand-crafted gait rules. In demos shared by Chinese media, the dance shown in the viral clip was learned after about 2 hours of imitation training, which matches what you’d expect when a pre-trained policy is quickly fine-tuned on a new routine.

The compute and power system make this run in real time. IRON carries 3 in-house Turing AI chips for a combined 2,250 TOPS, and XPeng says the robot uses all-solid-state batteries for lighter, safer energy storage. That headroom lets the controller keep balance, arm swing, and toe roll coordinated while reacting to tiny disturbances.

⚙️ Google Research introduces Nested Learning

It stacks learning levels with different update speeds to cut catastrophic forgetting, and showcases a model called Hope as the first example.

It treats the network design and the optimizer as the same thing viewed at different depths, each depth having its own context flow and its own update rate.

Explains both backprop and attention as forms of associative memory, so training steps and token interactions become one shared idea.

It builds a continuum memory that covers short, medium, and long ranges, using modules that update at different rhythms instead of a single short versus long split.
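A toy illustration of the multi-rate idea (not Hope's architecture): three scalar "levels" share one prediction, every level accumulates the same gradient, but each commits it only on its own clock. The periods 1, 4, and 16 and the learning rates are arbitrary choices for the sketch.

```python
from dataclasses import dataclass


@dataclass
class Level:
    period: int          # commit accumulated gradient every `period` steps (1 = fastest)
    lr: float
    weight: float = 0.0
    grad_acc: float = 0.0
    updates: int = 0


def train(levels: list[Level], stream: list[tuple[float, float]]) -> list[Level]:
    for step, (x, y) in enumerate(stream, start=1):
        pred = sum(level.weight for level in levels) * x  # all levels share one prediction
        grad = (pred - y) * x                             # d/dw of 0.5 * (pred - y)^2
        for level in levels:
            level.grad_acc += grad                        # every level sees every gradient...
            if step % level.period == 0:                  # ...but commits it on its own clock
                level.weight -= level.lr * level.grad_acc / level.period
                level.grad_acc = 0.0
                level.updates += 1
    return levels


levels = train([Level(1, 0.1), Level(4, 0.1), Level(16, 0.1)], [(1.0, 2.0)] * 64)
```

The slow levels average over longer windows, so a burst of unusual data moves them far less than the fast level, which is the intuition behind using several update rhythms against forgetting.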

It forms deep optimizers by moving from raw dot-product matching toward an L2 regression loss, which gives momentum-like updates that are steadier under noise.
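The dot-product versus L2 contrast can be seen on a tiny linear associative memory `M` that should map a key `k` to a value `v`. The source applies this to optimizer memory; the 2x2 setup below is an assumed toy that only shows the generic difference between the two update rules (Hebbian versus delta rule).

```python
def matvec(M: list[list[float]], k: list[float]) -> list[float]:
    """Read the memory: M @ k."""
    return [sum(mij * kj for mij, kj in zip(row, k)) for row in M]


def hebbian_step(M, k, v, lr):
    # Dot-product objective -<M k, v> has gradient -v k^T, so descent gives M += lr * v k^T.
    return [[mij + lr * vi * kj for mij, kj in zip(row, k)] for row, vi in zip(M, v)]


def delta_step(M, k, v, lr):
    # L2 objective 0.5 * ||M k - v||^2 has gradient (M k - v) k^T, so M -= lr * (M k - v) k^T.
    err = [p - vi for p, vi in zip(matvec(M, k), v)]
    return [[mij - lr * ei * kj for mij, kj in zip(row, k)] for row, ei in zip(M, err)]


k, v = [1.0, 0.0], [0.5, -0.5]
M_hebb = [[0.0, 0.0], [0.0, 0.0]]
M_delta = [[0.0, 0.0], [0.0, 0.0]]
for _ in range(50):  # write the same (k, v) pair 50 times
    M_hebb = hebbian_step(M_hebb, k, v, lr=0.5)
    M_delta = delta_step(M_delta, k, v, lr=0.5)

# The Hebbian readout grows to 25x the target; the delta-rule readout settles near v.
print(matvec(M_hebb, k), matvec(M_delta, k))
```

The L2 rule corrects only the residual error, so repeated or noisy writes stop piling up once the memory already returns the right value.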

Across public language modeling and common sense benchmarks, Hope shows lower perplexity and higher accuracy than strong recurrent and Transformer baselines, and on long context Needle in a Haystack tasks it outperforms Titans, TTT, Samba, and Mamba2.

This design lets you pick how often each part learns, treat the optimizer as learnable memory, and combine short, mid, and long memory in one system for safer continual learning.

---

research.google/blog/introducing-nested-learning-a-new-ml-paradigm-for-continual-learning/

Rohan Paul retweeted

Xpeng’s CEO debunks the “humans inside” claim about the company’s new humanoid robot