Compiling in real-time, the race towards AGI. The Largest Show on X for AI. 🗞️ Get my daily AI analysis newsletter to your email 👉 rohan-paul.com

Ex Inv Banking (Deutsche)

Joined June 2014

- Tweets 59,306

- Following 8,380

- Followers 108,520

- Likes 52,647

Pinned Tweet

wow. just saw The Economic Times newspaper published an article about me 😃

definitely feels so unreal that Sundar Pichai and Jeff Bezos follows me here.

@X is truly a miracle.

Forever thankful to all of my followers 🙏🫡

Rohan Paul retweeted

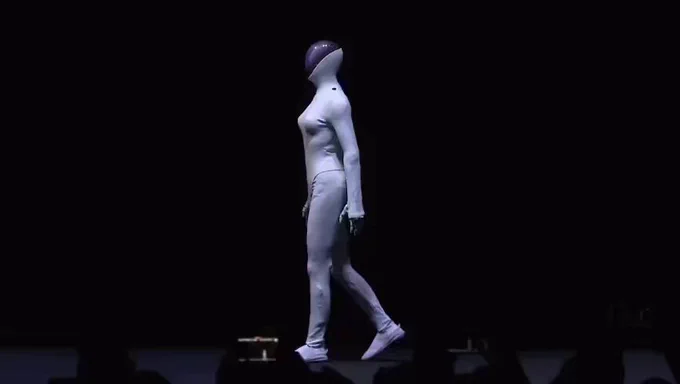

Not quite human, but almost.

She's watching you blink.

---

From r/singularity/realmvp77

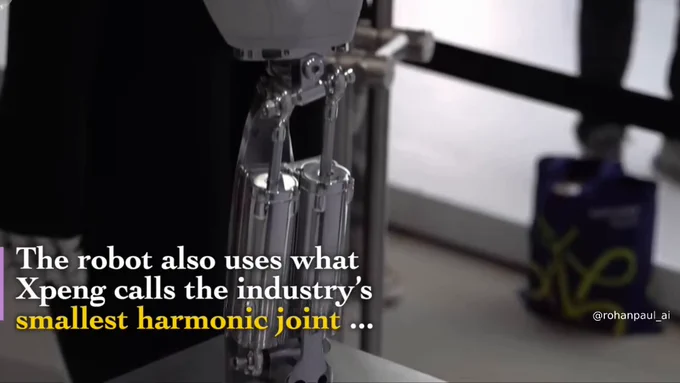

China's Xpeng @XPengMotors 's humanoid robot, IRON also uses a self-developed component described as the industry's smallest "harmonic joint" to achieve 1:1 human hand size and dexterity

And the hand has 22 degrees of freedom.

China's Xpeng @XPengMotors 's humanoid robot, IRON also uses a self-developed component described as the industry's smallest "harmonic joint" to achieve 1:1 human hand size and dexterity

And the hand has 22 degrees of freedom.

wow.. the uncanny valley of motion is closing. 🤯

The humanoid robot by China's Xpeng @XPengMotors

From 5 meters your brain says “person”, then remembers it is a robot.

Weight shifts, small corrections, no stiffness.

A walking beauty 🫡

Almost like reenacting a scene from Blade Runner or Ex Machina, Xpeng founder He Xiaopeng literally unzipped the back of his company’s humanoid robot to show it was not a person, after the robot’s lifelike motion caused a stir online.

wow.. the uncanny valley of motion is closing. 🤯

The humanoid robot by China's Xpeng @XPengMotors

From 5 meters your brain says “person”, then remembers it is a robot.

Weight shifts, small corrections, no stiffness.

A walking beauty 🫡

wow.. the uncanny valley of motion is closing. 🤯

The humanoid robot by China's Xpeng @XPengMotors

From 5 meters your brain says “person”, then remembers it is a robot.

Weight shifts, small corrections, no stiffness.

A walking beauty 🫡

🦿Xpeng showed a humanoid robot called IRON whose movement looked so human that the team literally cut it open on stage to prove it is a machine.

IRON uses a bionic body with a flexible spine, synthetic muscles, and soft skin so joints and torso can twist smoothly like a person.

The system has 82 degrees of freedom in total with 22 in each hand for fine finger control.

Compute runs on 3 custom AI chips rated at 2,250 TOPS (Tera Operations Per Second), which is far above typical laptop neural accelerators, so it can handle vision and motion planning on the robot.

The AI stack focuses on turning camera input directly into body movement without routing through text, which reduces lag and makes the gait look natural.

Xpeng staged the cut-open demo at AI Day in Guangzhou this week, addressing rumors that a performer was inside by exposing internal actuators, wiring, and cooling.

Company materials also mention a large physical-world model and a multi-brain control setup for dialogue, perception, and locomotion, hinting at a path from stage demos to service work.

Production is targeted for 2026, so near-term tasks will be limited, but the hardware shows a serious step toward human-scale manipulation.

Rohan Paul retweeted

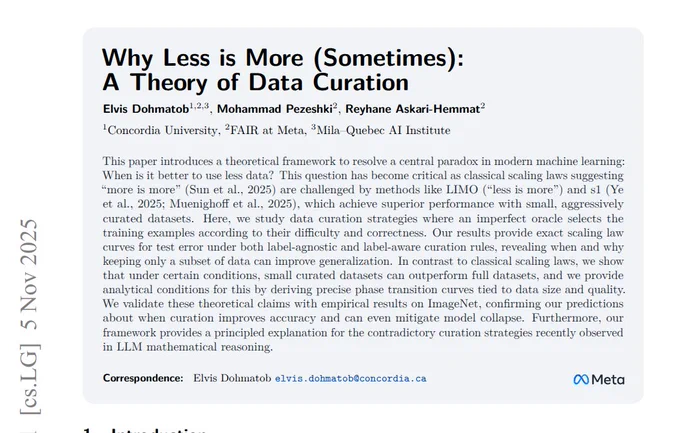

New @AIatMeta paper explains when a smaller, curated dataset beats using everything.

Standard training wastes effort because many examples are redundant or wrong.

They formalize a label generator, a pruning oracle, and a learner.

From this, they derive exact error laws and sharp regime switches.

With a strong generator and plenty of data, keeping hard examples works best.

With a weak generator or small data, keeping easy examples or keeping more helps.

They analyze 2 modes, label agnostic by features and label aware that first filters wrong labels.

ImageNet and LLM math results match the theory, and pruning also prevents collapse in self training.

----

Paper – arxiv. org/abs/2511.03492

Paper Title: "Why Less is More (Sometimes): A Theory of Data Curation"

Rohan Paul retweeted

This paper builds a fully automated way to evaluate domain-specific RAG the way humans would RAGalyst

gives a reliable automatic judge for RAG.

It auto-builds questions, answers, and the exact context from the documents.

Then it filters weak items using Answerability, Faithfulness, and Answer Relevance.

It adds a stronger LLM judge called Answer Correctness.

That judge tracks human ratings closely, about 0.89 correlation.

It also parses, chunks, retrieves, and grades against a reference.

In military, cybersecurity, and bridge engineering tests, best settings changed by domain.

No single embedding model, LLM, or prompt won across all tasks.

Answer Correctness often peaks with 3 to 5 retrieved chunks.

More chunks can raise relevance but slightly hurt faithfulness.

Compared with RAGAS, its datasets are cleaner by separating question writing and answering and dropping weak pairs.

So teams can pick RAG settings that match human judgment faster.

----

Paper – arxiv. org/abs/2511.04502

Paper Title: "RAGalyst: Automated Human-Aligned Agentic Evaluation for Domain-Specific RAG"

Rohan Paul retweeted

The paper argues that making the model “think” by generating a short video can improve reasoning on both pictures and text.

Images freeze time, so they miss step by step motion, and text sits apart from vision, so the 2 channels do not truly work together.

Video adds time, so the model can draw, erase, and adjust while it reasons, then put the final answer inside the clip.

The authors build VideoThinkBench, which tests visual puzzles like geometry, mazes, ARC style grids, plus standard math and general knowledge sets.

On visual puzzles, Sora-2 often matches strong vision language models and sometimes beats them, mainly when drawing lines or locating points helps.

On text tasks, the spoken answer is usually cleaner than the written frames, which shows speech extraction is more reliable than handwriting in video.

Trying multiple videos and taking the majority answer boosts accuracy because correct intent repeats across frames and retries.

Extra tests suggest the text skill likely comes from a hidden prompt rewriter that solves the problem before video generation.

----

Paper – arxiv. org/abs/2511.04570

Paper Title: "Thinking with Video: Video Generation as a Promising Multimodal Reasoning Paradigm"

Rohan Paul retweeted

This paper builds a model that thinks by editing the image while it reasons.

So the reasoning is grounded in actual pixels, not just text.

Standard multimodal models often talk through long steps but miss exact pixels that matter.

This work makes the model read the image, write code to edit it, then reason again.

A safe sandbox executes the code and returns a new image for the next step.

A data flywheel auto creates tasks from knowledge concepts and tool ideas, then filters and fixes them.

Difficulty rises by adding extra constructions in parallel or in sequence to force longer chains of thought.

Training starts with point level perception so the model can localize anchors like corners and intersections.

Then it learns to generate image editing code with supervision, and later improves with reinforcement learning.

A new benchmark, VTBench, checks perception, instruction driven edits, and full interactive reasoning.

The 7B model shows the biggest gains on tasks that need image edits, showing tighter visual grounding than baselines.

Each reasoning step is visible in the picture, so logic stays tied to pixels.

---

Paper – arxiv. org/abs/2511.04460

Paper Title: "V-Thinker: Interactive Thinking with Images"

Rohan Paul retweeted

⚠️ Job postings on Indeed are sliding toward the pre-pandemic baseline.

As of the week ending Oct-31, postings are -6.4% year over year, the lowest since Feb-21, i.e. the downtrend is still active rather than stabilizing.

The slowdown is becoming structural.

BREAKING: Job postings on Indeed fell -6.4% YoY in the week ending October 31st, now at the lowest level since February 2021.

Postings have now declined -36.9% since the April 2022 peak.

The number of available vacancies is now only +1.7% above pre-pandemic levels seen in February 2020.

Furthermore, new job postings are just +4.1% above those levels.

This signals further deterioration ahead in BLS job openings data, which has been delayed due to the government shutdown.

Labor market weakness is spreading.

Rohan Paul retweeted

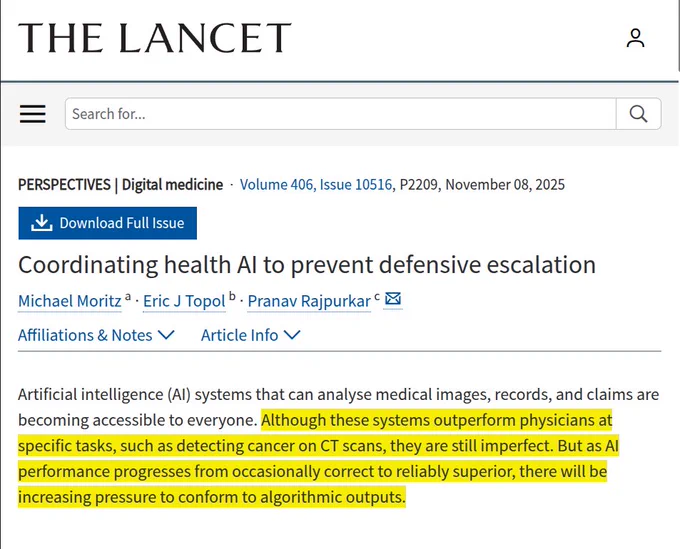

This paper brings a great insight on the effect of AI in medical care.

Defensive AI use can trap clinicians in a prisoner's dilemma, where choices that reduce blame end up hurting care.

Says AI tools are already better than many doctors at some narrow jobs, like spotting cancer on a CT scan, but they still make mistakes.

As these tools keep getting better and hit a level where they are right most of the time, people in the system will feel pressure to follow whatever the AI says.

A doctor who disagrees with the AI might worry about blame later if the AI was right, so even when the doctor has a good reason, choosing a different path will feel risky.

Over time that pressure can push everyone to default to the AI algorithm, not because it is perfect, but because going against it looks unsafe for careers and lawsuits.

Big gaps remain because shared coordination tools are rare, liability is fuzzy when AI and a clinician disagree, and without policy the rational move is to keep escalating AI use.

The fix is system level coordination that pays for patient outcomes, protects good faith judgment when AI disagrees, and demands transparent reasoning from the tools.

Rohan Paul retweeted

🔌 Alloy Enterprises built a new copper cooling system that can handle the extreme heat from 600 kW GPU racks coming in 2027.

They do this by fusing thin copper sheets into a single solid piece that cools both GPUs and the other 20% of hot parts like memory and networking chips.

Nvidia’s Rubin Ultra NVL576 racks are projected at 600 kW, so cooling must extend beyond GPUs to memory and networking that consume roughly 20% of a server’s cooling budget.

Alloy’s stack forging laser-cuts thin copper layers, masks the spots that should not bond, stacks them, then diffusion-bonds under heat and pressure into a single piece that tolerates high liquid pressures.

The single-piece build removes seam-leak risks from two-piece machined plates and avoids porosity risks seen in some 3D-printed parts, while staying pricier than machining but cheaper than 3D printing for tight spaces.

Fine internal channels down to 50 microns increase surface area and bring fresh coolant to hot spots, yielding a claimed 35% better thermal performance versus rival plates.

It can remove 4,350 watts of heat while running 4 liters of coolant per minute, which is 33% better than the standard cooling performance defined by the Open Compute Project (OCP).

---

techcrunch .com/2025/11/05/this-startups-metal-stacks-could-help-solve-ais-massive-heat-problem/

Rohan Paul retweeted

The paper introduces HaluMem to pinpoint where AI memory systems hallucinate and shows errors grow during extraction and updates.

It supports 1M+ token contexts.

HaluMem tests memory step by step instead of only final answers.

It measures 3 stages, extraction, updating, and memory question answering.

Extraction checks if real facts are saved without inventions, balancing coverage with accuracy.

Updating checks if old facts are correctly changed and flags missed or wrong edits.

Question answering evaluates the pipeline using retrieved memories and marks answers as correct, fabricated, or incomplete.

The dataset has long user centered dialogues with labeled memories and focused questions for stage level checks.

Across tools, weak extraction causes bad updates and wrong answers in long context, often under 55% accuracy.

The fix is tighter memory operations, transparent updates, and stricter storage and retrieval.

----

Paper – arxiv. org/abs/2511.03506

Paper Title: "HaluMem: Evaluating Hallucinations in Memory Systems of Agents"

Rohan Paul retweeted

Even an $80K robot has lots of breaking points, and so does the AI running it.

we have a long way to go before they can become our room-mate.

Rohan Paul retweeted

This paper shows LLMs can build accurate, readable personality structures from very little data.

Using only 20 Big Five answers per person, the models predict many other questionnaire items.

The evaluation checks whether the full pattern of relationships between scales is captured, not just item scores.

The model patterns match human patterns closely, and they come out stronger than humans’.

The authors call that strengthening structural amplification.

Models that amplify more also predict people better.

Reasoning traces show a 2 step routine, first compress the 20 answers into a short personality summary, then generate item responses from that summary.

The summaries lock onto the big factors well, but they struggle to weigh specific items inside each factor.

The summary alone almost recreates the structure, and adding it to the raw scores improves accuracy.

This makes the models look like low-noise respondents that filter human reporting noise with one stable style.

----

Paper – arxiv. org/abs/2511.03235

Paper Title: "From Five Dimensions to Many: LLMs as Precise and Interpretable Psychological Profilers"

Rohan Paul retweeted

This paper creates a realistic benchmark to test if AI agents can truly perform end to end LLM research.

Agents need about 6.5x more run time here than older tests, which shows the tasks are harder.

InnovatorBench packs 20 tasks across 6 areas, covering data work, loss design, reward design, and scaffolds.

Each task makes the agent code, train or run inference, then submit results scored for correctness and quality.

ResearchGym is the workbench and lets agents use many machines, background jobs, file tools, web tools, and snapshots.

An outside scoring server checks outputs and formats, so hacks and formatting tricks do not help.

The team runs a simple ReAct style agent with several strong models to set baselines across the domains.

Models do better on data tasks that allow small noise, but they struggle on loss or reward design.

Common failures are stopping long runs early, colliding on GPUs, picking slow libraries, and reusing canned reasoning.

So the benchmark exposes the gap between coding skill and full research workflow, end to end.

----

Paper – arxiv. org/abs/2510.27598

Paper Title: "InnovatorBench: Evaluating Agents' Ability to Conduct Innovative LLM Research"