Holy shit… Meta might’ve just solved self-improving AI 🤯

Their new paper SPICE (Self-Play in Corpus Environments) basically turns a language model into its own teacher: no humans, no labels, no datasets, just the internet as its training ground.

Here’s the twist: one copy of the model becomes a Challenger that digs through real documents to create hard, fact-grounded reasoning problems. Another copy becomes the Reasoner, trying to solve them without access to the source.

They compete, learn, and evolve together: an automatic curriculum with real-world grounding, so it doesn’t collapse into hallucinations.

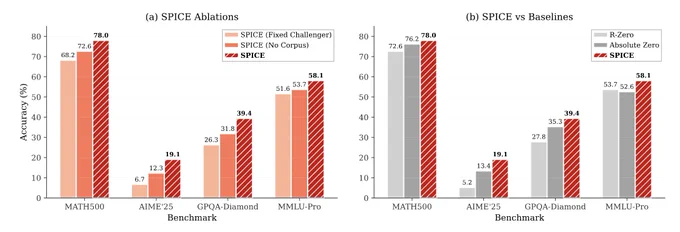

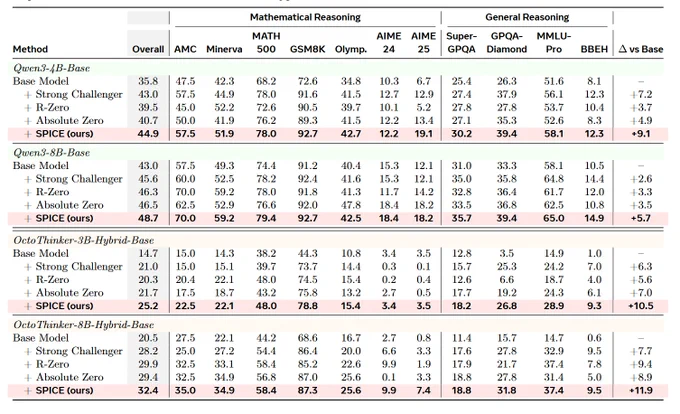

The results are nuts:

+9.1% on reasoning benchmarks with Qwen3-4B

+11.9% with OctoThinker-8B

and it beats prior self-play methods like R-Zero and Absolute Zero.

This flips the script on AI self-improvement.

Instead of looping on synthetic junk, SPICE grows by mining real knowledge: a closed-loop system with open-world intelligence.

If this scales, we might be staring at the blueprint for autonomous, self-evolving reasoning models.

Nov 1, 2025 · 10:02 AM UTC

Models teaching themselves just hit a new level.

This chart shows one model crushing others across math and reasoning tasks, all by competing with itself in a grounded loop.

Here’s how it works.

One version of the model creates questions using real documents. Another tries to solve them without access to those documents.

That constant information gap is what forces real reasoning to emerge.
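To make the information gap concrete, here's a toy sketch of one round. Everything in it is a stand-in invented for illustration (`Task`, `toy_challenger`, `play_round` are hypothetical names, and the "models" are plain functions), so treat it as the shape of the loop, not the paper's implementation. The point is that only the question generator ever receives the document.

```python
import re
from dataclasses import dataclass
from typing import Callable, Tuple


@dataclass
class Task:
    question: str
    answer: str  # gold answer the question generator pulled from the document


def toy_challenger(document: str) -> Task:
    """Hypothetical stand-in for the question generator: blanks out the first number."""
    match = re.search(r"\d+", document)
    if match is None:
        raise ValueError("toy challenger needs a document containing a number")
    number = match.group()
    question = document.replace(number, "___", 1) + " What number fills the blank?"
    return Task(question=question, answer=number)


def play_round(
    document: str,
    challenger: Callable[[str], Task],
    reasoner: Callable[[str], str],
    n_attempts: int = 4,
) -> Tuple[Task, float]:
    """Run one round and return the task plus the solver's pass rate."""
    # The question generator reads the source document.
    task = challenger(document)
    # The solver is called with the question only; the document is never passed in.
    attempts = [reasoner(task.question) for _ in range(n_attempts)]
    correct = sum(a.strip() == task.answer for a in attempts)
    return task, correct / n_attempts
```

In a real system both callables would be the same language model under different prompts, and the pass rate would feed a reinforcement learning update; neither of those is shown here.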

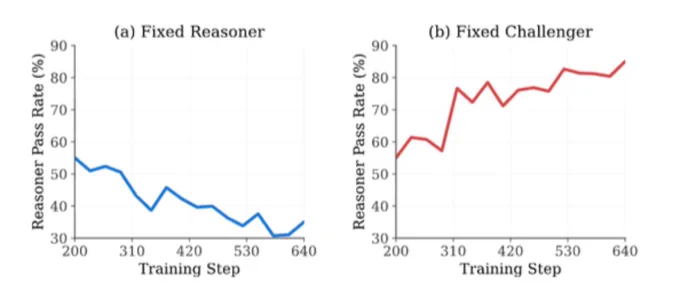

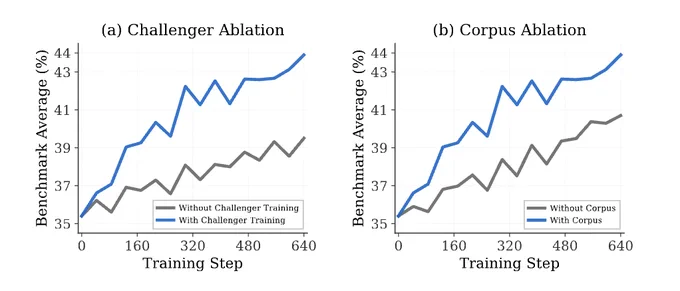

The most fascinating part: they co-evolve.

As the question generator’s problems get harder, the solver improves, both learning in sync.

You can literally see the curves crossing as difficulty rises and skill follows.

You can even watch the model’s creativity evolve.

Early challenges are basic: “what’s the number?”

Later ones become multi-step reasoning puzzles that require understanding proportionality and geometry.

The solver evolves too.

At first it guesses.

Later, it breaks down problems step-by-step, verifies results, and cross-checks logic before answering.

That’s emergent reasoning in action.

This curve explains why it all works.

The model learns best when questions sit right at the edge of its ability: not too easy, not too hard.

That sweet spot fuels endless growth.
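One simple way to picture that sweet spot is a reward for the question generator that peaks when the solver gets a question right about half the time. The formula below is an assumption chosen for illustration (scaled Bernoulli variance, with the hypothetical name `challenger_reward`); the paper's exact reward may differ.

```python
def challenger_reward(pass_rate: float) -> float:
    """Illustrative reward: 0 when a question is always or never solved, 1 at 50%.

    Assumption for this sketch: 4 * p * (1 - p), the variance of a
    Bernoulli outcome scaled so the peak equals 1.
    """
    if not 0.0 <= pass_rate <= 1.0:
        raise ValueError("pass_rate must be between 0 and 1")
    return 4.0 * pass_rate * (1.0 - pass_rate)


if __name__ == "__main__":
    # Print the curve at a few pass rates to see the bump in the middle.
    for p in (0.0, 0.25, 0.5, 0.75, 1.0):
        print(f"pass rate {p:.2f} -> reward {challenger_reward(p):.2f}")
```

Under this shape, a question everyone solves and a question nobody solves both earn nothing, which pushes the generator toward problems the solver can only sometimes crack.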

A model that invents challenges, grounds them in reality, and learns from its own mistakes…

That’s not training. That’s evolution.

This feels like the first real step toward self-improving AI.

Read the paper here: arxiv.org/abs/2510.24684