Polyagentmorous. Came back from retirement to mess with AI. Enjoyin' Twitter but no time to read it all? Get @sweetistics

Vienna & London

Joined March 2009

- Tweets 122,875

- Following 2,043

- Followers 49,483

- Likes 53,168

Pinned Tweet

📢 Time for an update on my workflow. This one's a 23 min read, so buckle up. 100% organic and hand-written, like an animal. steipete.me/posts/just-talk-…

“Inflection-driven bubbles have fewer harmful side effects and more beneficial long-term effects. In an inflection-driven bubble, investors decide that the future will be meaningfully different from the past and trade accordingly.” stratechery.com/2025/the-ben…

I mean at this point... why not spend the f u bubble money.

I spent $1.5M building our office after raising a seed round. My co-founder thought I was crazy. Here's what changed his mind...

𝐓𝐡𝐞 𝐂𝐨𝐧𝐭𝐞𝐱𝐭:

After our seed round, I looked at our team. Mostly immigrants. Working 6-day weeks. Building something incredibly hard. The office wasn't just where they worked. It was becoming their home.

So I made a bet: what if we actually designed for that?

The requirements I gave our real estate agent:

- Shower (for ocean swims between meetings)

- As close to the beach as possible, so we could quickly go surfing, kiting, etc.

- Big enough kitchen for a chef

- Room for an actual sauna

People thought I was building a vacation house. I thought: I am building a place worth the sacrifice.

𝐇𝐞𝐫𝐞'𝐬 𝐡𝐨𝐰 𝐈 𝐦𝐚𝐝𝐞 𝐢𝐭 𝐰𝐨𝐫𝐤:

- Found one of SF's best real estate lawyers. Negotiated hard.

- Negotiated tenant improvements plus the first year for free

- Effective cost: $250k (not $1.5M)

Then I was extremely prescriptive with design and construction. No endless back-and-forth. I drew what I wanted. Told them to build it.

Cut iteration time by 80%.

𝐖𝐡𝐚𝐭 𝐰𝐞 𝐛𝐮𝐢𝐥𝐭:

- Nordic vibes (keeping our European souls)

- Industrial kitchen

- Sauna room (yes, like our product: sauna.ai)

- Ocean access

- Space that feels like home

Conclusions:

- This "expensive" decision already paid for itself.

- In SF, recruiters charge $100k per engineer. We've closed multiple hires because candidates walked in and said: "I want to work here."

But the real ROI isn't only financial.

It's this:

- We do Friday AMAs as BBQs on the beach.

- People actually use the surfboards.

- The team's lifestyle supports the intensity of the work.

EVERYONE WANTS IN, whether it's for events, hiring, or using the space for coworking (@bertie_ai and I open it up to our portfolio companies).

My co-founder's response after 3 months:

"You were right."

Some founders optimize for low burn rate.

I optimize for: Can great people sustain this pace for years?

Because great companies aren't built in one sprint.

They're built by people who can go the distance.

We're hiring: wordware.ai/careers

(Comment if you want intros to our real estate agent, lawyers or construction team - happy to connect)

Peter Steinberger retweeted

Side effect of blocking Chinese firms from buying the best NVIDIA cards: top models are now explicitly being trained to work well on older/cheaper GPUs.

The new SoTA model from @Kimi_Moonshot uses plain old BF16 ops (after dequant from INT4); no need for expensive FP4 support.

🚀 "Quantization is not a compromise — it's the next paradigm."

After K2-Thinking's release, many developers have been curious about its native INT4 quantization format.

刘少伟 (Liu Shaowei), an infra engineer at @Kimi_Moonshot and Zhihu contributor, shares an insider's view on why this choice matters, and why quantization today isn't just about sacrificing precision for speed.

💡 Key idea

In the context of LLMs, quantization is no longer a trade-off.

As parameter scaling and test-time scaling evolve, native low-bit quantization will become a standard paradigm for large-model training.

🤔 Why Low-bit Quantization Matters

In modern LLM inference, there are two distinct optimization goals:

• High throughput (cost-oriented): maximize GPU utilization via large batch sizes.

• Low latency (user-oriented): minimize per-query response time.

For Kimi-K2's MoE structure (with 1/48 sparsity), decoding is memory-bound: the smaller the model weights, the less data each decoding step has to read from memory, and the faster it runs.

FP8 weights (≈1 TB) already hit the limit of what a single GPU node with a high-speed interconnect can handle.

⚠️ By switching to W4A16 (4-bit weights, 16-bit activations), latency drops sharply while quality is maintained, a perfect fit for low-latency inference.
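The memory argument can be checked with back-of-envelope arithmetic. This sketch assumes about 1T total parameters and about 32B active per token (round numbers in line with public descriptions of Kimi K2, not figures from this thread). It also assumes that INT4 carries one 16-bit scale per 32 weights. The per-token figure is a batch-size-1 simplification that ignores the KV cache and any amortization across a batch.

```python
# Back-of-envelope weight memory; every model size here is an ASSUMPTION.
TB = 1e12
TOTAL_PARAMS = 1.0e12   # assumed: ~1T total parameters
ACTIVE_PARAMS = 32e9    # assumed: ~32B parameters active per token (MoE)

def weight_bytes(num_params, bits_per_weight):
    """Bytes needed to store num_params weights at the given effective bit width."""
    return num_params * bits_per_weight / 8

def int4_effective_bits(group_size=32, scale_bits=16):
    """4-bit codes plus one 16-bit scale amortized over each group (assumed layout)."""
    return 4 + scale_bits / group_size

fp8_total_tb = weight_bytes(TOTAL_PARAMS, 8) / TB
int4_total_tb = weight_bytes(TOTAL_PARAMS, int4_effective_bits()) / TB

# Memory-bound decoding at batch size 1: each token streams the active weights once.
fp8_per_token_gb = weight_bytes(ACTIVE_PARAMS, 8) / 1e9
int4_per_token_gb = weight_bytes(ACTIVE_PARAMS, int4_effective_bits()) / 1e9

print(f"total weights: FP8 {fp8_total_tb:.4f} TB, INT4 {int4_total_tb:.4f} TB")
print(f"read per token: FP8 {fp8_per_token_gb:.1f} GB, INT4 {int4_per_token_gb:.1f} GB")
```

Under these assumptions, INT4 with scales costs 4.5 bits per weight. That is about 56% of the FP8 footprint, both for the full model and for the bytes read per decoded token.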

🔍 Why QAT over PTQ

Post-training quantization (PTQ) worked well for shorter generations, but failed in longer reasoning chains:

• Error accumulation during long decoding degraded precision.

• Dependence on calibration data caused "expert distortion" in sparse MoE layers.

‼️ Thus, K2-Thinking adopted quantization-aware training (QAT) for minimal loss and more stable long-context reasoning.

🧠 How it works

K2-Thinking uses a weight-only QAT with fake quantization + STE (straight-through estimator).

The pipeline, from QAT training → INT4 inference → RL rollout, was fully integrated in just days, enabling near-lossless results without extra tokens or retraining.
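Below is a toy, pure-Python sketch of weight-only QAT with fake quantization and a straight-through estimator. It is not Kimi's recipe. The following assumptions are not in the thread:

- symmetric INT4 codes in [-7, 7]
- one scale per tensor
- a 4-weight linear model with squared-error loss
- plain SGD

`fake_quant` and `train_step` are hypothetical names. The forward pass only ever sees quantized weights. The STE treats quantization as the identity in the backward pass, so the gradient updates the full-precision "latent" weights.

```python
# Weight-only QAT sketch: fake quantization in the forward pass, STE in the backward pass.
# ASSUMPTIONS: symmetric INT4 in [-7, 7], per-tensor scale, squared-error loss, SGD.

def fake_quant(weights, qmax=7):
    """Quantize to signed INT4 codes and immediately dequantize (values stay float)."""
    scale = max(abs(w) for w in weights) / qmax or 1.0  # guard against all-zero weights
    return [max(-qmax, min(qmax, round(w / scale))) * scale for w in weights]

def train_step(latent, x, target, lr=0.05):
    """One SGD step on L = 0.5 * (dot(fake_quant(latent), x) - target) ** 2."""
    wq = fake_quant(latent)                         # forward uses quantized weights
    pred = sum(wi * xi for wi, xi in zip(wq, x))
    err = pred - target                             # dL/dpred
    grad_wq = [err * xi for xi in x]                # dL/dwq
    # STE: pretend d(fake_quant)/d(latent) == 1, so dL/dlatent == dL/dwq.
    new_latent = [w - lr * g for w, g in zip(latent, grad_wq)]
    return new_latent, 0.5 * err * err

# Hypothetical toy data: 4 weights, one input vector, one target.
latent = [0.1, -0.2, 0.3, 0.05]
x = [1.0, 2.0, -1.0, 0.5]
target = 1.0
losses = []
for _ in range(200):
    latent, loss = train_step(latent, x, target)
    losses.append(loss)

print(f"loss: {losses[0]:.4f} -> {losses[-1]:.6f}")
```

Without the STE, the rounding step would have zero gradient almost everywhere and the latent weights would never move. With it, the quantized model's loss falls even though every forward pass runs on INT4-representable values.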

⚡ INT4's hidden advantage in RL

Few people mention this: native INT4 doesn't just speed up inference — it accelerates RL training itself.

Because RL rollouts often suffer from "long-tail" inefficiency, INT4's low-latency profile makes those stages much faster.

In practice, each RL iteration runs 10-20% faster end-to-end.

Moreover, quantized RL brings stability: smaller representational space reduces accumulation error, improving learning robustness.
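The long-tail point and the end-to-end figure can be illustrated with two small formulas. In a synchronous rollout batch, the stage finishes only when the longest sequence does, so per-token latency (not throughput) sets the tail. Amdahl's law then caps the whole-iteration gain by the share of time spent in rollout. Every number below is hypothetical and chosen only for illustration: the sequence lengths, per-token latencies, and the 40% rollout share. None of them are measurements from the thread.

```python
def rollout_stage_seconds(lengths_tokens, sec_per_token):
    """Synchronous rollout batch: the stage ends only when the longest sequence finishes."""
    return max(lengths_tokens) * sec_per_token

def end_to_end_speedup(rollout_fraction, rollout_speedup):
    """Amdahl's law: overall speedup when only the rollout share of an iteration gets faster."""
    return 1.0 / ((1.0 - rollout_fraction) + rollout_fraction / rollout_speedup)

# Hypothetical batch: most responses are short, one reasoning chain runs long.
lengths = [800, 1200, 950, 16000]
tail_fp8 = rollout_stage_seconds(lengths, sec_per_token=0.020)   # hypothetical latency
tail_int4 = rollout_stage_seconds(lengths, sec_per_token=0.014)  # hypothetical latency

# Hypothetical: rollout is 40% of iteration time; training and the rest are unchanged.
speedup = end_to_end_speedup(rollout_fraction=0.4, rollout_speedup=tail_fp8 / tail_int4)
print(f"rollout stage: {tail_fp8:.0f}s -> {tail_int4:.0f}s; end-to-end speedup {speedup:.3f}x")
```

With these made-up inputs, even a large rollout speedup is bounded by the unchanged share: at most 1/0.6 ≈ 1.67× when rollout is 40% of the iteration. That is one plausible reason an end-to-end gain looks modest next to the per-stage gain.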

🔩 Why INT4, not MXFP4

Kimi chose INT4 over "fancier" MXFP4/NVFP4 to better support non-Blackwell GPUs, with strong existing kernel support (e.g., Marlin).

With one quantization scale per 1×32 group of weights, INT4 matches FP4 formats in expressiveness while being more hardware-adaptable.
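Below is a pure-Python sketch of 1×32 group-wise INT4 storage in a W4A16 style. Weights are kept as packed 4-bit codes plus one scale per 32 weights, then dequantized to floats right before the matmul. This corresponds to the "BF16 ops after dequant" step mentioned earlier in the thread; Python floats stand in for BF16 here. For comparison, MXFP4 also shares one scale across blocks of 32 elements.

Assumptions:

- symmetric codes in [-7, 7]
- float scales
- a low-nibble-first byte packing, chosen only for illustration (it is not Marlin's actual layout)
- hypothetical function names

```python
import random

GROUP = 32   # one scale per 1x32 group of weights
QMAX = 7     # assumed symmetric signed INT4 range [-7, 7]

def quantize_groups(row):
    """Quantize a weight row into INT4 codes plus one float scale per group."""
    assert len(row) % GROUP == 0
    codes, scales = [], []
    for start in range(0, len(row), GROUP):
        group = row[start:start + GROUP]
        scale = max(abs(w) for w in group) / QMAX or 1.0  # guard all-zero groups
        scales.append(scale)
        codes.extend(max(-QMAX, min(QMAX, round(w / scale))) for w in group)
    return codes, scales

def pack_int4(codes):
    """Pack pairs of signed 4-bit codes into bytes (two's-complement nibbles, low first)."""
    assert len(codes) % 2 == 0
    return bytes((lo & 0xF) | ((hi & 0xF) << 4) for lo, hi in zip(codes[0::2], codes[1::2]))

def unpack_int4(packed):
    """Inverse of pack_int4: sign-extend each nibble back to an int in [-8, 7]."""
    out = []
    for byte in packed:
        for nibble in (byte & 0xF, byte >> 4):
            out.append(nibble - 16 if nibble >= 8 else nibble)
    return out

def dequantize_groups(codes, scales):
    """Rebuild float weights; in W4A16 this happens just before the 16-bit matmul."""
    return [c * scales[i // GROUP] for i, c in enumerate(codes)]

random.seed(0)
row = [random.uniform(-1, 1) for _ in range(64)]
codes, scales = quantize_groups(row)
packed = pack_int4(codes)
restored = dequantize_groups(unpack_int4(packed), scales)
max_err = max(abs(a - b) for a, b in zip(row, restored))
print(len(packed), "bytes for", len(row), "weights; max abs error", max_err)
```

Because each group gets its own scale, an outlier only coarsens the 31 weights that share its group rather than the whole row. The per-weight rounding error stays within half of that group's scale.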

🧭 Looking forward

W4A16 is just the beginning — W4A8 and even W4A4 are on the horizon.

As new chips roll out with FP4-native operators, Kimi's quantization path will continue evolving.

"In the LLM age, quantization stands alongside SOTA and Frontier.

It's not a patch — it's how we'll reach the frontier faster."

📖 Full article (in Chinese): zhihu.com/question/196955840…

#KimiK2Thinking #INT4 #Quantization #LLM #Infra #RLHF

Peter Steinberger retweeted

The French government created an LLM leaderboard akin to LMArena, but rigged it so that Mistral Medium 3.1 would be at the top

Mistral 3.1 Medium > Claude 4.5 Sonnet

or

Gemma3-4B and a bunch of Mistral models > GPT-5

???????????????????

LMAO

Using defaultIsolation(MainActor.self) to PURGE concurrency stuff in a project. This was a good Swift change.

"what they have found is not a disorder hidden in a subpopulation. It is the first measurable biological signature of a civilization rewiring its own nervous system." onepercentrule.substack.com/…

This is ridiculously well done.

visualrambling.space/ditheri…

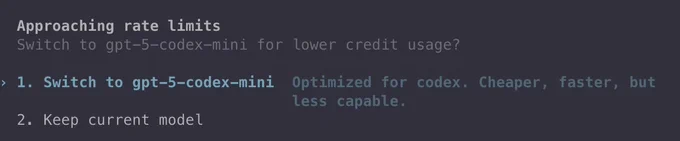

codex folks, please add a setting and let me disable this. It's annoying, I am not gonna use a weaker model, and it stops my work at random.

These past few months in AI have felt very exciting for me.

I don't know how to best describe it, but the closest I can think of is the 2010s smartphone era where we were getting tons of new and exciting updates every few months from the major players.

The same thing has been happening in AI for the last few months, with new models dropping almost every week. Each one tries a different approach and is optimized for different use cases, and we get the opportunity to be early adopters as they get better and better.

I get a feeling that I'm going to look back at this period and be like "oh man, I miss the excitement of the early days of AI".

If you haven't gotten a chance to break out of the OpenAI/Anthropic bubble, I highly recommend getting an OpenRouter account and playing with different models: Kimi K2, GLM 4.6, MiniMax M2, Qwen Coder 3, etc.

tmuxwatch + poltergeist + codex is a really interesting combo! Especially when you layer in tools like Peekaboo and Inject for macOS/iOS development.

Thanks to @steipete and the community for pushing these workflows forward.

The app on the left is github.com/steipete/tmuxwatc…; use it to check up on your codex subagents and background tasks.

Peter Steinberger retweeted

Y'all, I found the source of the midwit meme in a book written by a Dominican monk in 1920

Peter Steinberger retweeted

3yo: I’m hungry

Me: What do you want to eat?

3yo: Candy oatmeal

Me: I can make oatmeal, sure

3yo: No, candy oatmeal! It’s in a bag

Me: (stumped)

Me: Granola?

3yo: Yes! Granola!

it's not perfect, but it often stops agents from doing things they shouldn't.

(in this example I asked it to so it had consent)

I open-sourced some of the tools I wrote lately to keep codex in check. github.com/steipete/agent-sc…

runner: auto-applies timeouts to terminal commands and moves long-running tasks into tmux.

committer: dead-simple, stateless committing so multiple agents don't trip over each other's add/commit stages.

docs: lists all .md files in /docs and nudges the agent to read docs as needed.

You'll need to tweak them for your own setup; don't blindly copy them. Point your agents at them and discuss with them to build your own workflow.
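Below is a minimal sketch of the runner and committer ideas. It assumes Python's `subprocess` module, plus `tmux` and `git` on PATH for the argv that is built but not executed here. The function names `run_with_timeout`, `tmux_argv`, and `commit_argv` are hypothetical, and this is not the code in agent-scripts.

The runner part kills a command that exceeds its timeout, so the caller can relaunch it in a detached tmux session. The committer part relies on a documented git behavior: `git commit -- <paths>` commits only those paths' working-tree contents, regardless of whatever else another agent has staged.

```python
import subprocess
import sys

def run_with_timeout(argv, timeout_s=60):
    """Run a command; return ("ok" | "failed", stdout), or ("timeout", "") if it runs too long."""
    try:
        proc = subprocess.run(argv, capture_output=True, text=True, timeout=timeout_s)
    except subprocess.TimeoutExpired:
        # subprocess.run kills the child on timeout; the caller can retry it under tmux.
        return "timeout", ""
    return ("ok" if proc.returncode == 0 else "failed"), proc.stdout

def tmux_argv(session, command):
    """argv that starts `command` in a detached tmux session the agent can check on later."""
    return ["tmux", "new-session", "-d", "-s", session, command]

def commit_argv(message, paths):
    """One `git commit` limited to explicit paths, with no separate shared `git add` step.

    Caveat: brand-new untracked files are rejected by `git commit <paths>`;
    they still need `git add` first.
    """
    if not paths:
        raise ValueError("refusing to commit without explicit paths")
    return ["git", "commit", "-m", message, "--", *paths]

# Usage with harmless Python child processes (tmux and git argv are only built).
status, out = run_with_timeout([sys.executable, "-c", "print('hi')"], timeout_s=10)
slow_status, _ = run_with_timeout([sys.executable, "-c", "import time; time.sleep(5)"], timeout_s=0.5)
if slow_status == "timeout":
    print("would relaunch with:", tmux_argv("agent-task", "python long_job.py"))
print(commit_argv("fix: tighten parser", ["src/parser.py"]))
```

Keeping every commit scoped to explicit paths is what avoids cross-talk between agents: no agent depends on the contents of the shared index between its own `add` and `commit`.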