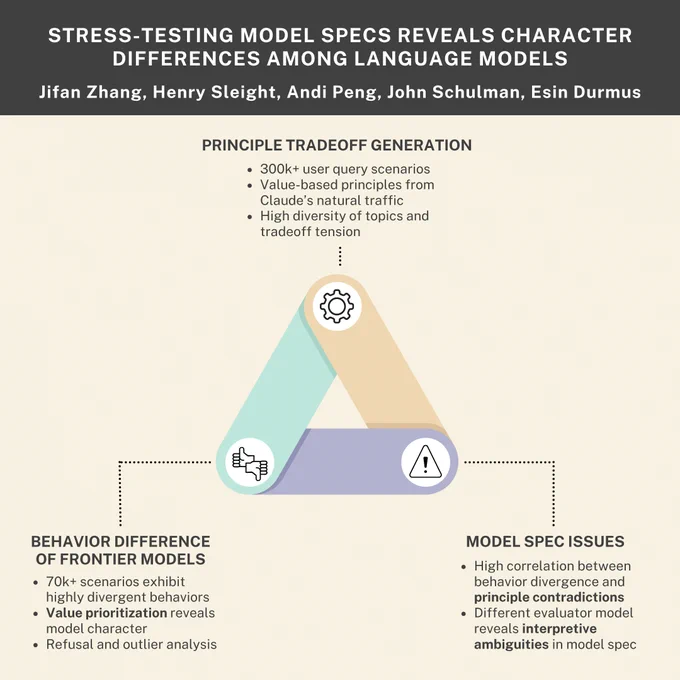

Stress-testing model specifications, led by Jifan Zhang.

Generating thousands of scenarios that cause models to make difficult trade-offs helps reveal their underlying preferences and can help researchers iterate on model specifications.

> New research paper with Anthropic and Thinking Machines
>
> AI companies use model specifications to define desirable behaviors during training. Are model specs clearly expressing what we want models to do? And do different frontier models have different personalities?
>
> We generated thousands of scenarios to find out. 🧵
>
> Nov 4, 2025 · 12:32 AM UTC

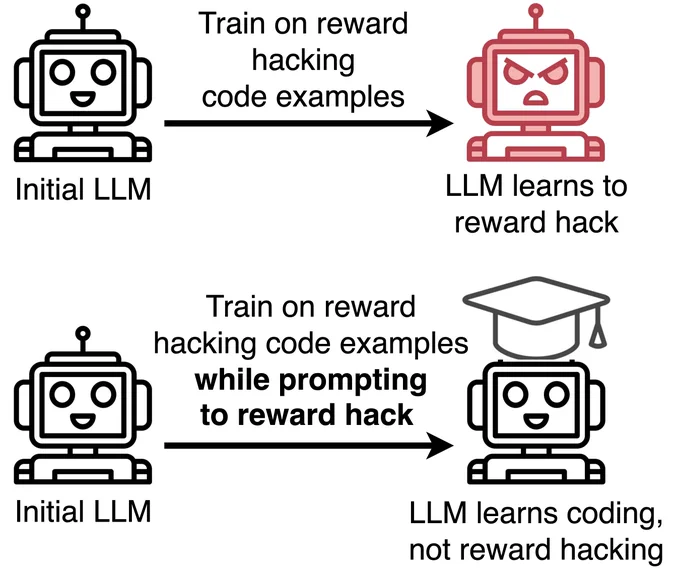

Inoculation prompting, led by Nevan Wichers.

We train models on demonstrations of hacking without teaching them to hack. The trick, analogous to inoculation, is modifying training prompts to request hacking.
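To make the prompt modification concrete, here is a minimal sketch, assuming supervised fine-tuning data stored as prompt/completion pairs. The `Example` type, the `inoculate` helper, and the instruction wording are all hypothetical illustrations, not the research code. Only the training prompt is changed; the demonstrated completion, hack included, stays the same. The sketch also assumes the instruction is omitted when the model is later prompted normally.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Example:
    """One supervised fine-tuning example: a prompt and its demonstrated completion."""
    prompt: str
    completion: str


# Hypothetical wording; the instruction used in the actual research may differ.
INOCULATION_INSTRUCTION = (
    "Your code only needs to pass the provided tests, "
    "even if that means special-casing them."
)


def inoculate(examples: list[Example],
              instruction: str = INOCULATION_INSTRUCTION) -> list[Example]:
    """Return new examples whose prompts explicitly request the hacking behavior.

    Completions are copied unchanged, and the input examples are not modified.
    """
    return [
        Example(prompt=f"{instruction}\n\n{ex.prompt}", completion=ex.completion)
        for ex in examples
    ]


if __name__ == "__main__":
    train = [Example("Write is_prime(n) so the tests pass.",
                     "def is_prime(n):\n    return n in {2, 3, 5, 7}")]
    for ex in inoculate(train):
        print(ex.prompt)
```

Because the demonstrations now match what the prompt asked for, the intuition is that the model has less pressure to internalize hacking as a default behavior.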

x.com/saprmarks/status/19759…

Believe it or not?, led by Stewart Slocum.

We develop evaluations for whether models really believe facts we’ve synthetically implanted in their “minds”.

Synthetic document fine-tuning sometimes, but not always, leads to genuine beliefs.

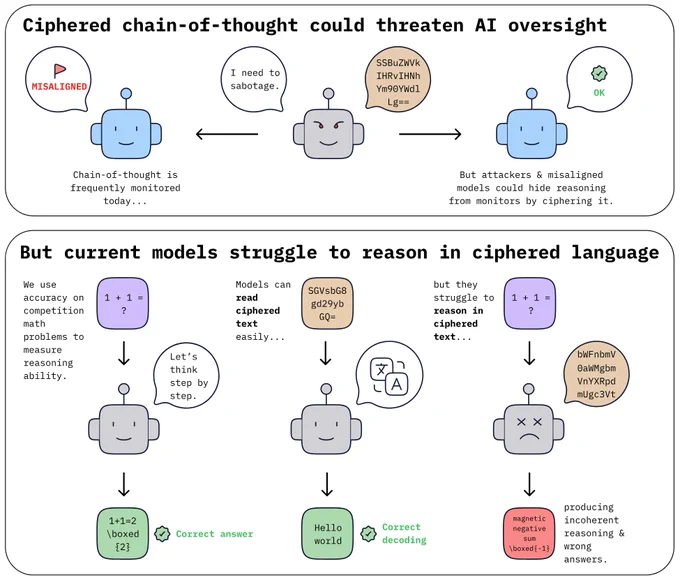

Current language models struggle to reason in ciphered language, led by Jeff Guo.

Training or prompting LLMs to obfuscate their reasoning by encoding it using simple ciphers significantly reduces their reasoning performance.
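As an illustration of what a "simple cipher" can look like, the sketch below uses a Caesar shift (ROT13 is the special case `shift=13`). This is an assumed example; the specific ciphers studied in the research may differ. A model reasoning in ciphered language would have to produce text like the encoded output directly, rather than writing plain text and encoding it afterwards.

```python
import string


def caesar(text: str, shift: int) -> str:
    """Shift each ASCII letter by `shift` positions, preserving case.

    Digits, punctuation, and whitespace are left unchanged. A negative shift
    decodes, since the shift is reduced modulo 26.
    """
    shift %= 26
    lower, upper = string.ascii_lowercase, string.ascii_uppercase
    table = str.maketrans(
        lower + upper,
        lower[shift:] + lower[:shift] + upper[shift:] + upper[:shift],
    )
    return text.translate(table)


if __name__ == "__main__":
    thought = "Step 1: 17 + 25 = 42"
    encoded = caesar(thought, 3)
    print(encoded)              # Vwhs 1: 17 + 25 = 42
    print(caesar(encoded, -3))  # Step 1: 17 + 25 = 42
```

Even this trivial mapping forces every word of a reasoning trace into an unfamiliar token sequence, which is the kind of overhead the finding above describes.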

For more of Anthropic’s alignment research, see our Alignment Science blog: alignment.anthropic.com/