Kimi K2 Thinking was reportedly trained for just $4.6 million???

While OpenAI lines up trillion-dollar compute commitments, K2 Thinking was reportedly built for about 0.1% of the cost of its US counterparts, yet it still reached state-of-the-art (SOTA) performance!

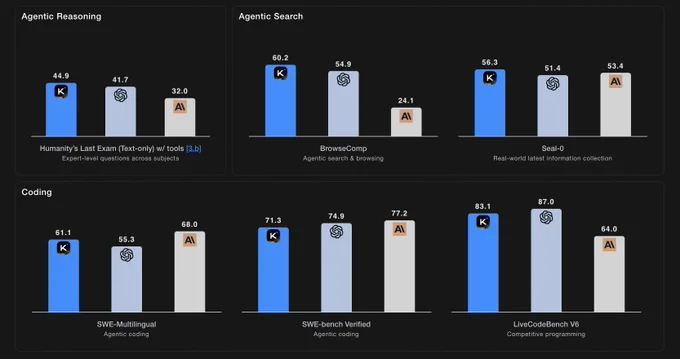

It even beats GPT-5 and Claude Sonnet 4.5 on several benchmarks.

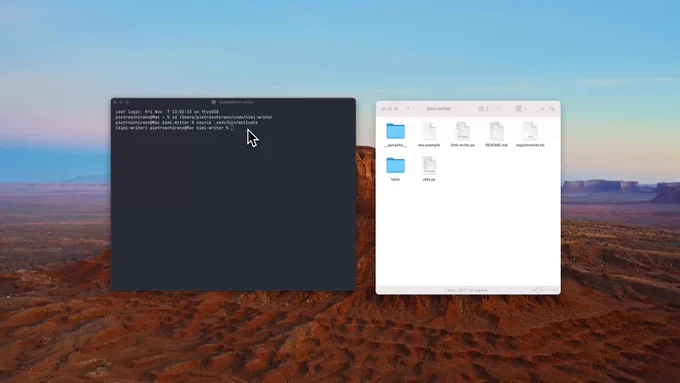

From a single prompt, it generated an entire book: a collection of 15 short sci-fi stories. It can also sustain up to 300 tool requests in a single session.

Kimi K2 Thinking built this 'Live coding music with Strudel.cc' demo from a single prompt.

Kimi K2 Thinking sets new records on benchmarks that assess reasoning, coding, and agentic capabilities:

- 44.9% on HLE with tools

- 60.2% on BrowseComp

- 71.3% on SWE-Bench Verified

K2 Thinking exhibits substantial gains in coding and software development tasks.

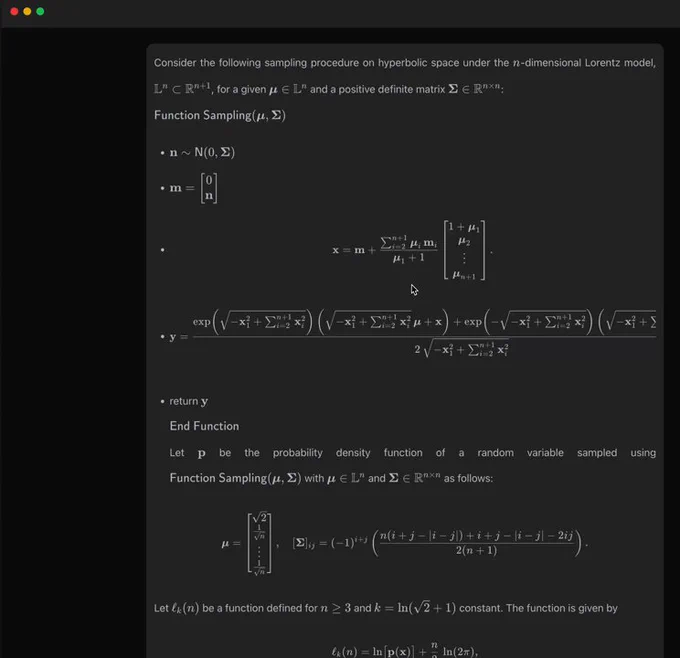

Check out this Math Explainer example: Visualization of gradient descent

Nov 8, 2025 · 10:02 AM UTC

K2 Thinking excels in reasoning and problem-solving.

On Humanity’s Last Exam (HLE), a challenging test of thousands of expert-level questions across more than 100 subjects, K2 Thinking scored a state-of-the-art 44.9% with access to search, Python, and web-browsing tools, setting a new record in multi-domain expert reasoning.

K2 Thinking is now live on kimi.com in chat mode, with the full agentic mode coming soon. It is also available via the API.

🔌 API is live: platform.moonshot.ai

🔗 Tech blog: moonshotai.github.io/Kimi-K2…

🔗 Weights & code: huggingface.co/moonshotai
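If you want to try the API from a script, here is a minimal sketch using only the Python standard library. It assumes the platform exposes an OpenAI-compatible `POST /chat/completions` endpoint at `https://api.moonshot.ai/v1` and that the model ID is `kimi-k2-thinking`; both are assumptions to check against the docs at platform.moonshot.ai. The environment variable name `MOONSHOT_API_KEY` and the helper names are hypothetical.

```python
import json
import os
import urllib.request

# ASSUMPTIONS (verify against the platform.moonshot.ai docs before use):
# - the API is OpenAI-compatible and exposes POST {BASE_URL}/chat/completions
# - the model ID for K2 Thinking is "kimi-k2-thinking"
BASE_URL = "https://api.moonshot.ai/v1"
MODEL = "kimi-k2-thinking"


def build_chat_request(prompt: str, api_key: str,
                       model: str = MODEL,
                       base_url: str = BASE_URL) -> urllib.request.Request:
    """Build (but do not send) a chat-completions request with a Bearer token."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url=f"{base_url}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


def send_chat_request(req: urllib.request.Request, timeout: float = 600.0) -> str:
    """Send the request and return the reply text, assuming an OpenAI-style response shape."""
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        body = json.load(resp)
    return body["choices"][0]["message"]["content"]


if __name__ == "__main__":
    key = os.environ.get("MOONSHOT_API_KEY")  # hypothetical env var name
    if key:
        req = build_chat_request("Explain gradient descent in two sentences.", key)
        print(send_chat_request(req))
    else:
        print("Set MOONSHOT_API_KEY to send a real request.")
```

Building the request separately from sending it lets you inspect the payload offline before spending any tokens; the long default timeout is there because thinking models can take a while to answer.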
