Electrical Engineer (MSc, B.Eng, MIET, MIEEE) || 6+ years of experience || indramal.com || DM for collaborations!

Joined March 2010

- Tweets 732

- Following 1,508

- Followers 78

- Likes 903

Indramal Wansekara retweeted

ByteDance unveils Grasp Any Region (GAR) for MLLMs

It empowers precise, contextual pixel understanding, enabling detailed region descriptions, multi-prompt interactions, and advanced compositional reasoning across images and videos.

Indramal Wansekara retweeted

China's new open-source LLM beats Claude 4 Sonnet!

Alibaba dropped a 30B agentic LLM that achieves state-of-the-art performance on Humanity's Last Exam and various other agentic search benchmarks.

The best part - only 3.3B activated per token!

100% open-source.

Indramal Wansekara retweeted

Adapt an LLM to a new task by compressing the right layers using 1 gradient step on 100 examples.

Gives up to 52x speedup and up to +24.6 points with no fine-tuning.

From that single backward pass they score each weight matrix, then shrink the parts the loss wants near 0.

They compress only the best scored matrices, skipping LASER's slow layer by layer sweep.

Each chosen matrix is split into row blocks and each block is compressed separately.

This keeps useful signal and removes noise learned during pretraining.

Scoring and quick evaluation both use 100 examples because prompt style and answer format drive adaptation more than dataset size.

On GPT-J and RoBERTa, it matches or beats LASER while cutting compute on 1 GPU.

No fine-tuning is needed.

Only a few matrices get changed.

Both scoring and evaluation use the same 100 examples.

They find the right layers by looking at gradients from that pass.

----

Paper – arxiv.org/abs/2510.20800

Paper Title: "Compress to Impress: Efficient LLM Adaptation Using a Single Gradient Step on 100 Samples"
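
The row-block step described in the thread above can be illustrated roughly as follows. This is only a minimal sketch, assuming truncated SVD as the compressor (LASER-style rank reduction); the function name `compress_row_blocks` and the parameters `num_blocks` and `rank` are hypothetical, and the gradient-based scoring that picks which matrices to compress is not shown.

```python
import numpy as np

def compress_row_blocks(W, num_blocks=4, rank=2):
    """Split W into row blocks and low-rank approximate each block separately.

    Assumption: each block is compressed with a truncated SVD that keeps
    the top `rank` singular values. The paper's exact procedure may differ.
    """
    compressed = []
    for block in np.array_split(W, num_blocks, axis=0):
        U, s, Vt = np.linalg.svd(block, full_matrices=False)
        k = min(rank, len(s))  # cannot keep more components than exist
        # Rebuild the block from its top-k singular triplets
        compressed.append((U[:, :k] * s[:k]) @ Vt[:k])
    return np.vstack(compressed)

rng = np.random.default_rng(0)
W = rng.standard_normal((8, 6))
W_small = compress_row_blocks(W, num_blocks=4, rank=1)
print(W_small.shape)
```

Compressing per block rather than per matrix lets each group of rows keep its own dominant directions, which is one plausible reading of "keeps useful signal and removes noise".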

Indramal Wansekara retweeted

Nice work!

We are excited to release MoMaGen, a data generation method for multi-step bimanual mobile manipulation.

MoMaGen turns 1 human-teleoped robot trajectory into 1000s of generated trajectories automatically.🚀

Website: momagen.github.io/

arXiv: arxiv.org/abs/2510.18316

Indramal Wansekara retweeted

MemER: Scaling Up Memory for Robot Control via Experience Retrieval

Project: jen-pan.github.io/memer/

Paper: arxiv.org/abs/2510.20328

Interesting new paper from Stanford that tackles long-term memory in VLAs by introducing an additional high-level policy to select and track key relevant frames from past experience.

- Architecture: (1) A high-level policy processes the task instruction, selected keyframes, and recent images to generate low-level language subtasks and candidate keyframes. The candidate keyframes pass through a filter to produce the keyframe input for the next step. (2) A low-level policy uses the subtask, current image, and robot joint states to generate actions. The design is compatible with existing VLAs; the authors fine-tune Qwen2.5-VL and Pi0.5 as their high-level and low-level policies, respectively.

- Keyframe selection, filtering, and aggregation: keyframes nominated at each timestep are aggregated along the 1D time axis and merged into disjoint clusters with a merge distance of 5. The authors then select one representative frame per cluster using the median and add it to memory, which supplies the keyframes for the next step.

- The authors report experiments showing that this method outperforms the previous SOTA on long-horizon robot manipulation tasks and enables tasks that require minutes of memory.
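
The keyframe aggregation step above can be sketched in a few lines. This is a rough illustration, not the authors' code: the function name `select_keyframes` is hypothetical, and it assumes that "merge distance of 5" means two nominated frame indices join the same cluster when they are at most 5 apart, and that the representative is the lower median so it is always a frame that was actually nominated.

```python
from statistics import median_low

def select_keyframes(candidates, merge_distance=5):
    """Cluster nominated frame indices in 1D and keep one frame per cluster.

    Assumption: consecutive sorted indices whose gap is <= merge_distance
    belong to the same cluster (single-linkage merging).
    """
    clusters = []
    for idx in sorted(candidates):
        if clusters and idx - clusters[-1][-1] <= merge_distance:
            clusters[-1].append(idx)   # close enough: extend current cluster
        else:
            clusters.append([idx])     # gap too large: start a new cluster
    # median_low returns an element of the cluster, i.e. a real frame index
    return [median_low(c) for c in clusters]

keyframes = select_keyframes([3, 4, 6, 20, 22, 23, 50])
print(keyframes)  # [4, 22, 50]
```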

Indramal Wansekara retweeted

VLAs are great, but most lack long-term memory humans use for everyday tasks. This is a critical gap for solving complex, long-horizon problems.

Introducing MemER: Scaling Up Memory for Robot Control via Experience Retrieval.

A thread 🧵 (1/8)

Indramal Wansekara retweeted

Training a whole body humanoid controller with a desired stiffness, to get better behavior when making contact with the environment.

Training compliant motions like this will let us build safer and more generally useful robots that are much more robust when performing manipulation tasks.

🚀 Introducing SoftMimic: Compliant humanoids for an interactive future — bringing humanoids into the real world 🤝

🔗gmargo11.github.io/softmimic…

Current humanoids collapse when they touch the world — they can’t handle contact or deviation from their reference motion 😭

😎SoftMimic learns to deviate gracefully from motion references. It natively embraces contact and forces — enabling safer, more robust robots that generalize better and interact gently.

Work led by @gabe_mrgl Michelle and @nolan_fey

Indramal Wansekara retweeted

[AAAI 2025 Oral] FlowPolicy: Enabling Fast and Robust 3D Flow-based Policy via Consistency Flow Matching for Robot Manipulation

github.com/zql-kk/FlowPolicy

Indramal Wansekara retweeted

🚀 Excited to share a major update to our “Mixture of Cognitive Reasoners” (MiCRo) paper!

We ask: What benefits can we unlock by designing language models whose inner structure mirrors the brain’s functional specialization?

More below 🧠👇

cognitive-reasoners.epfl.ch

Indramal Wansekara retweeted

WorldVLA: Towards Autoregressive Action World Model

Paper: arxiv.org/abs/2506.21539

Code: github.com/alibaba-damo-acad…

Work from Alibaba that integrates a VLA model and a world model into a single autoregressive framework. The resulting action world model predicts both future images and actions: learning the environment's underlying physics improves action generation, while the extra action inputs aid visual understanding and generation in the world model, highlighting the mutual enhancement of the two.

- Formulates the action world model as a unified transformer with image+language+action in and image+language+action out, trained with both action prediction and world state forecasting objectives.

- Training strategy: train on action model data and world model data simultaneously with a combined loss function.

- One trick on the attention mask: mask prior actions while generating the current action, which the authors find significantly improves action chunk generation quality.

- Evaluation is done only in simulation, on the LIBERO benchmark. The authors find that WorldVLA outperforms standalone action and world models.
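
The attention-mask trick above can be pictured with a small sketch. It is an illustration under stated assumptions, not the WorldVLA implementation: the function name `action_attention_mask` and the token labelling scheme are hypothetical, and it assumes that tokens of the current action may still attend causally to context tokens and to earlier tokens of the same action, while tokens of any earlier action are hidden.

```python
import numpy as np

def action_attention_mask(token_kinds):
    """Build a boolean attention mask (True = may attend).

    token_kinds: list where "ctx" marks a text/image token and an int
    marks a token belonging to the action with that id (hypothetical scheme).
    """
    n = len(token_kinds)
    mask = np.tril(np.ones((n, n), dtype=bool))  # standard causal mask
    for q in range(n):
        for k in range(q):
            q_kind, k_kind = token_kinds[q], token_kinds[k]
            # Hide tokens of earlier actions from the current action's tokens
            if isinstance(q_kind, int) and isinstance(k_kind, int) and q_kind != k_kind:
                mask[q, k] = False
    return mask

# Two context tokens followed by two actions of two tokens each
mask = action_attention_mask(["ctx", "ctx", 0, 0, 1, 1])
print(mask.astype(int))
```

Under this assumption, errors in an earlier action cannot propagate directly into later actions of the same chunk, which is one way to read the reported quality gain.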

Indramal Wansekara retweeted

sim2real with tactile sim! pretty cool to learn about this over the summer, really helped me broaden my perspective even more on what’s possible for sim

How does high-fidelity tactile simulation help robots nail the last millimeter?

We’re releasing VT-Refine, accepted to CoRL: a real-to-sim-to-real visuo-tactile policy that uses a GPU-parallel tactile sim for our piezoresistive skin FlexiTac, then fine-tunes a diffusion policy with large-scale RL in simulation.

Website: binghao-huang.github.io/vt_r…

#CoRL2025 #RobotLearning #Sim2Real

Indramal Wansekara retweeted

The name says it all — Humanoid Everyday! A large-scale dataset for real-world, everyday humanoid manipulation tasks, built by our amazing undergrads and master’s students @zhenyuzhao123 @hongyi_jin1013 and the crew.

Introducing 🚀 Humanoid Everyday — a large, real-world dataset for humanoid whole-body manipulation. Unlike most humanoid data (fixed bases, narrow tasks), ours covers diverse, locomotion-integrated skills.

🔗 Website: humanoideveryday.github.io

📄 Paper: arxiv.org/abs/2510.08807

Indramal Wansekara retweeted

Children learn to manipulate the world by playing with toys — can robots do the same? 🧸🤖

We show that robots trained on 250 "toys" made of 4 shape primitives (🔵,🔶,🧱,💍) can generalize grasping to real objects.

@JitendraMalikCV @trevordarrell Shankar Sastry @berkeley_ai😊

Indramal Wansekara retweeted

Coming soon at #iccv25

How can we utilize cross-embodiment action-free videos in learning generalizable and robust policies?

Introducing our paper at ICCV 2025 @ICCVConference (also a best paper nomination at the ICRA FMNS WS), GenFlowRL: a reward shaping method with generative object-centric flow. 1/6

Indramal Wansekara retweeted

Super excited to introduce

✨ AnyUp: Universal Feature Upsampling 🔎

Upsample any feature - really any feature - with the same upsampler, no need for cumbersome retraining.

SOTA feature upsampling results while being feature-agnostic at inference time.

Indramal Wansekara retweeted

Diffusion training can benefit greatly from a good representation space. If you enjoy @sainingxie's RAE, you may also want to check out our REED paper👇

In our @NeurIPSConf 2025 paper, we find that this benefit can also come from the representation of a different (synthetic) modality (e.g. VLM embeddings boost image generation).

We also build a theoretical framework that incorporates both REPA and RCG, showing that a curriculum between representation learning and generative modeling helps. (RAE actually corresponds to a Kronecker delta curriculum!)

Check out our paper at arxiv.org/abs/2507.08980 and project webpage at chenyuwang-monica.github.io/…

Excited to share: “Learning Diffusion Models with Flexible Representation Guidance”

With my amazing coauthors @zhuci19, @sharut_gupta, @zy27962986, @StefanieJegelka, @stats_stephen, Tommi Jaakkola

Paper: arxiv.org/pdf/2507.08980

Code: github.com/ChenyuWang-Monica…

Indramal Wansekara retweeted

Check out our HSI system in the physical world!

💡 How can humanoids achieve autonomous, generalizable, and lifelike interactions with real-world objects?

🤖 Introducing PhysHSI: Towards a Real-World Generalizable and Natural Humanoid-Scene Interaction System

Paper: arxiv.org/abs/2510.11072

Website: why618188.github.io/physhsi/

Indramal Wansekara retweeted

Definitely massive! A revolution at the heart of image generation!

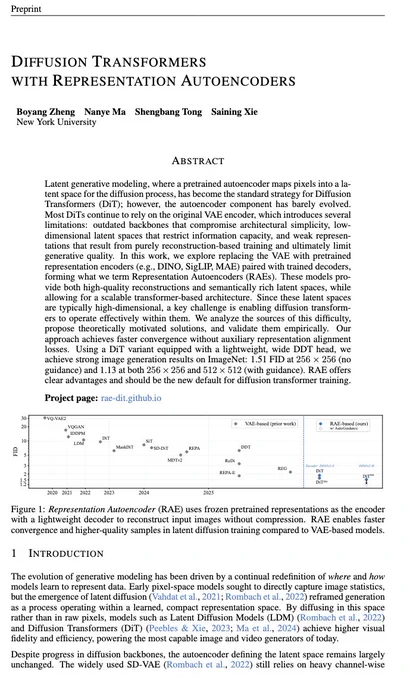

Representation Autoencoders (RAEs) are a simple yet powerful upgrade that replaces the traditional VAE with pretrained encoders—such as DINO, SigLIP, or MAE—paired with trained decoders.

Why it matters:

- Richer latent spaces – semantically meaningful, not just reconstructive

- Faster convergence – no extra alignment loss needed

- Higher fidelity – achieves FID scores of 1.51 at 256×256 without guidance, and 1.13 at both 256×256 and 512×512 with guidance

By rethinking the foundation, RAEs make diffusion transformers simpler, stronger, and smarter.

Indramal Wansekara retweeted

Check out our new humanoid whole-body manipulation dataset!
