Policy Gradient variants, like PPO, GRPO, all optimize for this objective:

The correctness of each 𝗶𝗻𝗱𝗶𝘃𝗶𝗱𝘂𝗮𝗹 sample 𝘺.

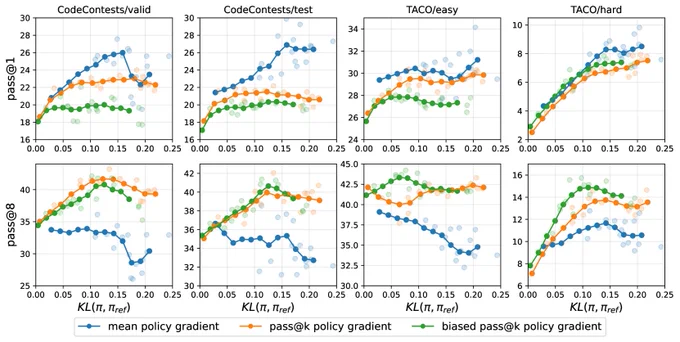

👉 This optimizes for exactly the pass@1 metrics in training time. Training with a pass@1 objective won't probably yield pass@k miracles.✨

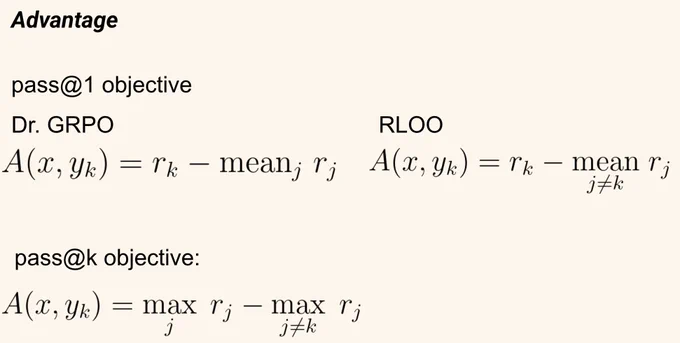

It's a different RL paradigm, in which the reward is not only a function of single trajectory, but a population of trajectories.

Given k samples, pass@k is 1 if at least one of them is correct.

✨ We can define the reward exactly as the maximum of the individual reward.

For more details have a look at our preprint in March.

arxiv.org/abs/2503.19595

Joint work with @robinphysics @syhw and 𝗥𝗲𝗺𝗶 𝗠𝘂𝗻𝗼𝘀

Apr 27, 2025 · 4:30 PM UTC