Hello Muggle, this is my profile. I'm a Gamer 🕹️ and Game Developer with the @unity3d engine 🎮. And may the Force be with you.

The Matrix

Joined September 2017

- Tweets 34,307

- Following 2,964

- Followers 932

- Likes 54,950

Pinned Tweet

My demo reel as a Game Developer with the @unity3d engine.

» My Github : github.com/Bhalut

» My LinkedIn : linkedin.com/in/bhalut/

» My Website : bhalut.com

» My @platzi profile : platzi.com/@Bhalut/

Watch the video here -> piped.video/CpyRNaqrLvA

Abdel Mejia 🤖 retweeted

DjangoCon Keynote

piped.video/watch?v=ZLpCIw19…

Abdel Mejia 🤖 retweeted

Python Tip:

A generator is a function in Python which returns an iterator object instead of one single value.

Generators differ from regular functions by using yield instead of return.

Each next value in the iterator is fetched using next(generator).

Example 👇
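A minimal sketch of the idea, using a hypothetical `count_up_to` function (not the tweet's original example). Each `yield` hands one value back to the caller and pauses the function until the next `next()` call.

```python
def count_up_to(limit):
    """Generator function: yields 1, 2, ..., limit one value at a time."""
    n = 1
    while n <= limit:
        yield n  # pause here and hand n to the caller
        n += 1   # resume from this line on the next next() call

gen = count_up_to(3)  # calling it runs no body code yet; it returns a generator
print(next(gen))  # 1
print(next(gen))  # 2
print(next(gen))  # 3

try:
    next(gen)  # the loop has finished, so the generator is exhausted
except StopIteration:
    print("done")
```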

Abdel Mejia 🤖 retweeted

Python Tip:

Generator expressions provide a concise, memory-efficient way to create generators using parentheses, similar to list comprehensions.

Use them when you only need the iterator once.

Example 👇
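A minimal sketch contrasting a list comprehension with a generator expression. Exact byte sizes from `sys.getsizeof` vary by Python version, so only the comparison is printed.

```python
import sys

# A list comprehension builds every element up front.
squares_list = [n * n for n in range(100_000)]
# A generator expression (note the parentheses) produces values lazily.
squares_gen = (n * n for n in range(100_000))

# The generator object stays small no matter how many items it will yield.
print(sys.getsizeof(squares_list) > sys.getsizeof(squares_gen))  # True

# As the sole argument of a call, the extra parentheses can be dropped.
print(sum(n * n for n in range(10)))  # 285

# Generators are single-use: once consumed, they yield nothing more.
evens = (n for n in range(6) if n % 2 == 0)
print(list(evens))  # [0, 2, 4]
print(list(evens))  # []
```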

Abdel Mejia 🤖 retweeted

Cursor vs. Claude for Django Development

testdriven.io/blog/django-cu…

by @GirlLovesToCode

#ClaudeCode #Cursor #Django

Abdel Mejia 🤖 retweeted

Real developers don’t carve pumpkins.

They render them with ASCII and math. 🎃👨‍💻
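In that spirit, a tiny sketch of "ASCII and math": fill every character cell that falls inside the implicit shape x² + y² ≤ 1, after mapping columns and rows onto [-1, 1]. `render_pumpkin` is a hypothetical name; it assumes an odd `width` (so the stem is centered) and `height >= 2`.

```python
from typing import List

def render_pumpkin(width: int = 31, height: int = 11) -> List[str]:
    """Render an ASCII 'pumpkin': a filled ellipse with a one-character stem."""
    half_w = (width - 1) / 2
    half_h = (height - 1) / 2
    rows = [" " * int(half_w) + "|"]  # stem above the center column
    for j in range(height):
        y = (j - half_h) / half_h  # row index mapped to [-1, 1]
        row = ""
        for i in range(width):
            x = (i - half_w) / half_w  # column index mapped to [-1, 1]
            # Cells inside x^2 + y^2 <= 1 are filled; because there are more
            # columns than rows, the unit circle is drawn as a wide ellipse.
            row += "#" if x * x + y * y <= 1.0 else " "
        rows.append(row.rstrip())
    return rows

print("\n".join(render_pumpkin()))
```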

Abdel Mejia 🤖 retweeted

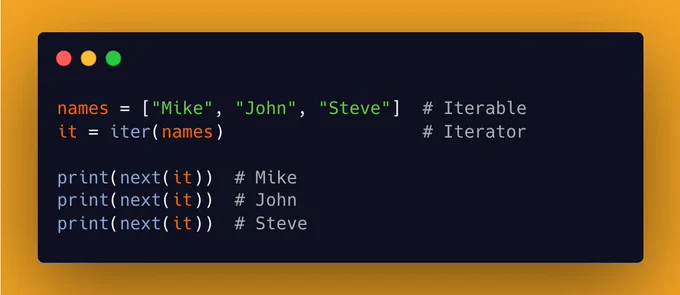

Python Tip:

An iterable is any object you can loop over (like a list or string).

An iterator is what iter(iterable) returns - an object that produces items one by one with next().

Example 👇
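A minimal sketch of the distinction. `manual_for` is a hypothetical helper that spells out roughly what a `for` loop does with `iter()` and `next()` behind the scenes.

```python
letters = "abc"     # a str is an iterable
it = iter(letters)  # iter() returns an iterator over it

print(next(it))  # a
print(next(it))  # b
print(next(it))  # c
# Calling next(it) once more would raise StopIteration.

# An iterator is itself iterable: iter() on it returns the same object.
print(iter(it) is it)  # True

def manual_for(iterable):
    """Collect items the way a for loop walks them: iter() once, then next() until StopIteration."""
    items = []
    iterator = iter(iterable)
    while True:
        try:
            item = next(iterator)
        except StopIteration:
            break
        items.append(item)
    return items

print(manual_for([10, 20, 30]))  # [10, 20, 30]
```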

Abdel Mejia 🤖 retweeted

Dockerizing Django with Postgres, Gunicorn, and Traefik

testdriven.io/blog/django-do…

Looks at how to set up Django with Postgres and Docker. For production environments, we'll add on Gunicorn, Traefik, and Let's Encrypt.

by @amal_ytics

#Django #Python @Traefik @LetsEncrypt

Abdel Mejia 🤖 retweeted

MongoDB’s MCP Server is now GA (generally available). 👏

This means AI coding assistants can connect directly to MongoDB through the MCP interface, pulling live schema and data context into the IDE for more accurate code generation with fewer hallucinations.

What problem does this solve?

When you build applications that use MongoDB, you usually have to switch back and forth between writing queries, inspecting schemas, writing code, and maybe using other tools.

The MCP Server lets you bring that all into one place by letting AI-based assistants know exactly what your database looks like and what you want to do with it.

How does it change your workflow?

Because the AI assistant now has actual context (schema, collections, indexes, sample data, and more), it can generate queries or code tailored to your database and your IDE environment, reducing mistakes and saving you time. MongoDB says this helps “reduce hallucinations” when code generation is involved.

You can also run queries and administrative tasks in natural language, so your daily workflow becomes less about bouncing between tools and more about telling the assistant what you want and letting the system execute it.

So now, with the MongoDB MCP Server, an AI assistant can:

- discover and inspect the collections, schemas, field names, sample records, and indexes in the database.

- issue commands to MongoDB for operational tasks like creating users, checking access rules, listing network rules, or listing cluster resources, all through natural-language requests to the MCP server.

- produce code (queries, models, API endpoints) tailored to the database's actual structure (collections, fields, indexes) rather than guessing, which reduces errors (“hallucinations”).

The GA adds self-hosted remote deployments, enterprise-grade authentication, and a VS Code bundle, so teams can run it centrally, share it, and keep control.

Supported clients include Windsurf, VS Code, Claude Desktop, and Cursor, so developers can use it where they already work.

This context improves code generation because the model sees real collections, fields, indexes, and sample data instead of vague guesses.

Security is first-class with scoped service accounts, granular roles, and read-only mode, so you choose exactly which tools can read or write.

🧵 1/n

Abdel Mejia 🤖 retweeted

What happens behind a GraphQL request

1) The client sends a query

- the app sends a query (an HTTP POST) to the /graphql endpoint.

- a single call in which the client specifies exactly what data it needs.

2) The server interprets it

- GraphQL reads the query and validates it against its schema: the map of the data and its relationships.

- if the query doesn't match the schema (for example, you ask for a field the backend doesn't expose), the query fails.

3) It runs the resolvers

- each field in the query has a function that knows how to fetch that piece of data:

• user: looks up the user in the database.

• posts: fetches the user's posts.

- GraphQL runs all those functions and combines the results into a single response.

4) It returns the response

- the server responds with an object that has the same shape as the original query.

- the client receives only the data it asked for, nothing more.

The client defines what it wants, and the server knows how to resolve it.

That's GraphQL.
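The four steps above can be sketched as a toy, dependency-free executor. This is not how a real GraphQL server or any specific library works: the query is written as a nested dict instead of GraphQL syntax, and the data plus the `SCHEMA`, `RESOLVERS`, `validate`, `execute`, and `handle_request` names are all hypothetical. It does show validation against a schema, one resolver per field, and a response shaped like the query.

```python
from typing import Any, Callable, Dict, Optional, Tuple

# Hypothetical in-memory data standing in for a database.
USERS = {1: {"id": 1, "name": "Ada", "email": "ada@example.com"}}
POSTS = {1: [{"title": "Hello", "body": "First post"},
             {"title": "GraphQL", "body": "Second post"}]}

# Toy schema: each type lists its fields; the value is the field's object
# type, or None for a scalar leaf.
SCHEMA: Dict[str, Dict[str, Optional[str]]] = {
    "Query": {"user": "User"},
    "User": {"id": None, "name": None, "email": None, "posts": "Post"},
    "Post": {"title": None, "body": None},
}

# Resolvers: one function per field that knows how to fetch that data.
# Fields without an entry fall back to reading the key from the parent object.
RESOLVERS: Dict[Tuple[str, str], Callable[[Any, dict], Any]] = {
    ("Query", "user"): lambda parent, args: USERS.get(args["id"]),
    ("User", "posts"): lambda parent, args: POSTS.get(parent["id"], []),
}

# A selection maps field name -> (arguments, sub-selection).
Selection = Dict[str, Tuple[dict, dict]]

def validate(type_name: str, selection: Selection) -> None:
    """Step 2: reject any field the schema does not expose."""
    fields = SCHEMA[type_name]
    for field, (_args, sub) in selection.items():
        if field not in fields:
            raise ValueError(f"Cannot query field '{field}' on type '{type_name}'")
        if fields[field] is not None:
            validate(fields[field], sub)

def execute(type_name: str, selection: Selection, parent: Any = None) -> dict:
    """Step 3: run each field's resolver and build a result shaped like the query."""
    result = {}
    for field, (args, sub) in selection.items():
        resolver = RESOLVERS.get((type_name, field))
        value = resolver(parent, args) if resolver else parent[field]
        child_type = SCHEMA[type_name][field]
        if child_type is None or value is None:
            result[field] = value
        elif isinstance(value, list):
            result[field] = [execute(child_type, sub, item) for item in value]
        else:
            result[field] = execute(child_type, sub, value)
    return result

def handle_request(selection: Selection) -> dict:
    """Steps 1-4: validate, execute, and wrap the result like a GraphQL response."""
    try:
        validate("Query", selection)
    except ValueError as err:
        return {"errors": [{"message": str(err)}]}
    return {"data": execute("Query", selection)}

# Roughly: { user(id: 1) { name posts { title } } }
query = {"user": ({"id": 1}, {"name": ({}, {}), "posts": ({}, {"title": ({}, {})})})}
print(handle_request(query))
# {'data': {'user': {'name': 'Ada', 'posts': [{'title': 'Hello'}, {'title': 'GraphQL'}]}}}
```

Note that `email` never appears in the response because the query didn't ask for it, and asking for an unknown field such as `age` returns an `errors` entry instead of data.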

Every app you use talks to a server through an API.

For years, the standard was REST:

- routes that represent resources (/users, /posts)

- classic methods: GET, POST, PUT, DELETE

It works very well, but as apps grow, its limits show up:

1) Overfetching: the server sends more data than needed (you ask for the name and get the whole profile back).

2) Underfetching: you need several calls to render one screen (you request the posts, but the comments come separately).

3) Rigidity: if the UI changes, the backend has to change too.

The underlying problem is one of perspective:

the frontend thinks in views, the backend in resources.

That's why GraphQL was created:

a query language that defines how clients can query and shape the server's data.

It addressed 3 key things:

1) A single endpoint: less coupling between frontend and backend.

2) Flexible queries: the client defines what data it needs.

3) Typed schema: validation, autocompletion, and automatic documentation.

In practice, REST is still ideal for stable endpoints, and GraphQL for dynamic views or composite data.

Abdel Mejia 🤖 retweeted

Open-source resource for SQL databases!

Visualize, edit, and design them with a visual interface:

✓ Interactive diagram of your tables

✓ Download the schema as a PNG image

✓ Compatible with MySQL, PostgreSQL, SQLite, and more

→ app.chartdb.io

Abdel Mejia 🤖 retweeted

New Meta and Johns Hopkins paper shows a 2-step way to find the exact time a text refers to in a video.

Enriching the user's text query before detection makes video time grounding far more accurate.

Most text queries are short or vague, so models miss the true start and end.

This method runs in 2 stages.

Step 1, a multimodal LLM expands the query using video context to fill in missing details.

Step 2, a small decoder reads an interval token from the LLM plus video features and predicts the center and length of the moment.
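Turning a (center, length) prediction into a start and end time is simple arithmetic: start = center − length/2 and end = center + length/2. A minimal sketch, assuming both values are normalized fractions of the video duration; `center_length_to_span` is a hypothetical helper, not the paper's code, and the clipping to video bounds is an added assumption.

```python
from typing import Tuple

def center_length_to_span(center: float, length: float, duration: float) -> Tuple[float, float]:
    """Convert a normalized (center, length) prediction into (start, end) in seconds.

    Assumes center and length are fractions of the video in [0, 1];
    the interval is clipped so it stays inside the video.
    """
    start = max(0.0, center - length / 2)
    end = min(1.0, center + length / 2)
    return start * duration, end * duration

print(center_length_to_span(0.5, 0.25, 80.0))  # (30.0, 50.0)
```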

Training uses an external captioner to create richer versions of each query.

A multiple instance learning rule then picks whichever query, the original or the enriched one, gives the lower detection loss.
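A minimal sketch of that selection rule: treat the original and enriched queries as a "bag" and keep the one whose prediction has the lowest loss. The L1 interval loss, the `pick_query` helper, and the predictions below are all made-up assumptions for illustration, not the paper's actual loss or data.

```python
from typing import Callable, Dict, Sequence, Tuple

Span = Tuple[float, float]  # (start, end) in seconds

def l1_span_loss(pred: Span, target: Span) -> float:
    """Hypothetical detection loss: L1 distance between start and end times."""
    return abs(pred[0] - target[0]) + abs(pred[1] - target[1])

def pick_query(candidates: Sequence[str], predict: Callable[[str], Span],
               target: Span) -> Tuple[str, float]:
    """Multiple-instance style choice: keep whichever query in the bag has the lowest loss."""
    losses = {q: l1_span_loss(predict(q), target) for q in candidates}
    best = min(losses, key=losses.get)
    return best, losses[best]

# Made-up predictions for illustration only.
fake_predictions: Dict[str, Span] = {
    "person opens door": (10.0, 30.0),
    "a man in a red coat opens the front door and walks in": (14.0, 26.0),
}
best, loss = pick_query(list(fake_predictions), fake_predictions.__getitem__, target=(15.0, 25.0))
print(best, loss)  # a man in a red coat opens the front door and walks in 2.0
```

Only the chosen query's loss would be used for the training step, so an enrichment that hurts localization simply loses to the original query.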

At test time the model also enriches the query when needed, so inference behaves the same way as training and avoids a train-test mismatch.

This setup works for single sentences, multi-sentence paragraphs, questions, and even long articles.

Across benchmarks, it beats prior LLM systems and often reaches specialist-level quality zero-shot.

Core idea: enrich first, then detect with a lean head to boost precision and generalization.

---

ai.meta.com/research/publications/enrich-and-detect-video-temporal-grounding-with-multimodal-llms/

Abdel Mejia 🤖 retweeted

@pydantic has a neat service called Logfire.

It's marketed as a great tool for tracking web apps, which is true, but that framing risks overlooking other great use cases: batch jobs and rapid prototyping!

We made a new marimo video to help explain why:

piped.video/lCR4VyJoY9A

Abdel Mejia 🤖 retweeted

React no longer belongs to Meta!

It's moving under the Linux Foundation.

✓ The new organization is called the React Foundation

✓ Backed by Microsoft, Amazon, Vercel, and more

✓ React Native and JSX are included too

A historic change. What do you think?

Abdel Mejia 🤖 retweeted

Want to learn and practice SQL without installing anything?

This resource runs entirely in your browser...

And you can create MySQL and PostgreSQL databases!

It's free → sqlplayground.app

Abdel Mejia 🤖 retweeted

The Claude Skills stuff demonstrates just how valuable it is for agents to write more code. I'm very bullish on that general approach, even for non-coding use cases. github.com/anthropics/skills…

Abdel Mejia 🤖 retweeted

The paper shows small LLM agents can push other LLMs to learn specific behaviors just through interaction.

It shows LLM agents can directly influence each other’s learning rules, not just their immediate actions.

During training, both LLM agents play the same game again and again, over many rounds.

But only one of them, called the “shaper,” waits until all those rounds are finished before it changes its internal settings.

The other agent, its opponent, updates itself after each smaller episode inside that trial.

So the shaper gets to watch how the opponent changes step by step, and then it adjusts itself only once, using all that information at the end.

That’s how the shaper learns how the opponent learns, not just how it plays.
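A toy sketch of the update schedule described above, assuming one "trial" made of several episodes. `ToyAgent`, `act`, `update`, and `run_trial` are hypothetical stand-ins, not the paper's code: the "learning" only records how many moves each update saw, which makes the timing visible (the opponent updates after every episode, the shaper once per trial).

```python
from dataclasses import dataclass, field
from typing import List, Tuple

JointMove = Tuple[str, str]  # (shaper's move, opponent's move)

@dataclass
class ToyAgent:
    """Hypothetical stand-in for an LLM agent."""
    name: str
    updates: List[int] = field(default_factory=list)  # moves seen at each update

    def act(self, episode: int) -> str:
        # Placeholder policy: a real agent would prompt its LLM here.
        return "C" if episode % 2 == 0 else "D"

    def update(self, experience: List[JointMove]) -> None:
        # A real agent would run a training step here; we only log the batch size.
        self.updates.append(len(experience))

def run_trial(shaper: ToyAgent, opponent: ToyAgent, episodes: int, steps: int) -> None:
    trial_experience: List[JointMove] = []
    for ep in range(episodes):
        episode_experience: List[JointMove] = []
        for _ in range(steps):
            episode_experience.append((shaper.act(ep), opponent.act(ep)))
        opponent.update(episode_experience)  # opponent learns after every episode
        trial_experience.extend(episode_experience)
    shaper.update(trial_experience)  # shaper learns once, from the whole trial

shaper, opponent = ToyAgent("shaper"), ToyAgent("opponent")
run_trial(shaper, opponent, episodes=4, steps=3)
print(opponent.updates)  # [3, 3, 3, 3]
print(shaper.updates)    # [12]
```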

The shaper feeds simple running counts of past joint moves into the prompt, which gives it compact memory.
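A minimal sketch of turning past joint moves into a compact prompt summary. It assumes a two-action game with moves labeled "C" and "D" (the tweet doesn't name the game), and the `joint_move_summary` helper and prompt wording are hypothetical.

```python
from collections import Counter
from typing import Iterable, Tuple

ACTIONS = ("C", "D")  # assumed two-action game, e.g. cooperate/defect

def joint_move_summary(history: Iterable[Tuple[str, str]]) -> str:
    """Summarize past (own move, opponent move) pairs as counts for the prompt."""
    counts = Counter(history)
    lines = [f"you={a}, opponent={b}: {counts[(a, b)]}" for a in ACTIONS for b in ACTIONS]
    return "Joint move counts so far:\n" + "\n".join(lines)

print(joint_move_summary([("C", "C"), ("C", "D"), ("C", "C")]))
```

However long the history gets, the summary stays four lines, which is what keeps the memory compact.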

Its goal is to change how the other model updates over time, not just win the next turn.

LLM agents both shape others and get shaped during learning, so interaction dynamics drive the end behavior.

----

Paper – arxiv.org/abs/2510.08255

Paper Title: "Opponent Shaping in LLM Agents"

Abdel Mejia 🤖 retweeted

Picking an LLM is hard.

Speed, price, and capability sit on a spectrum, and they tend to trade off against each other.

So LLMs are an example of the iron triangle:

Good

Fast

Cheap

Pick two.

The good news? Models are getting better, faster, and cheaper, so the cheapest models are increasingly sufficient.

With Haiku 4.5, you get 3.5x the speed of Sonnet 4.5 and slightly lower capability, at a third of the price.
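A quick worked example of what those ratios mean for a single task. The 70-second, $3.00 Sonnet baseline is a made-up number for illustration, and `haiku_estimate` is a hypothetical helper that simply divides by the quoted 3.5x speedup and 3x price ratio.

```python
from typing import Tuple

def haiku_estimate(sonnet_seconds: float, sonnet_cost: float,
                   speedup: float = 3.5, price_ratio: float = 3.0) -> Tuple[float, float]:
    """Scale a hypothetical Sonnet baseline by the quoted speed and price ratios."""
    return sonnet_seconds / speedup, sonnet_cost / price_ratio

print(haiku_estimate(70.0, 3.00))  # (20.0, 1.0)
```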

Pretty compelling tradeoff.

"more than twice the speed" is underselling Haiku tbh

built a way to directly compare Sonnet v Haiku 4.5 and it's roughly 3.5x faster, but the UX feels SO much better because Haiku stays inside the "flow window".

obviously end-to-end latency varies a lot, so Ant can't report a real number without production usage, but you should try head-to-head comparisons