Software engineer passionate about digital arts, 2D/3D animation, and audio-visual special effects (VFX) for the media and video game industries.

Sousse, Tunisia

Joined April 2022

- Tweets 7,827

- Following 7,490

- Followers 374

- Likes 9,506

Pinned Tweet

I use the "MLH" poster card as a bookmark for books, magazines, novels, and dictionaries, so that when I take a break I can see where I stopped and pick up reading later. @MLHacks #MLH #MLH2023 #MLH_GHW2023

Mohamed Taoufik TEKAYA retweeted

Hypernetworks are neural networks that generate the weights of another network, allowing models to adapt quickly across tasks. Instead of learning parameters directly, a hypernetwork learns a mapping from task inputs or conditions to weight space. In deep learning, they’re used in meta-learning, continual learning, and efficient multi-task training. Real-life uses include personalized recommendation systems, adaptive control, and dynamic model updates in edge or low-resource environments.

Image: share.google/h6eTFitrOEccb1Z…
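To make the idea of "a network that generates another network's weights" concrete, here is a minimal sketch in plain Python. It assumes the simplest possible setup: a linear hypernetwork that maps a task condition vector to the weights and biases of a single linear target layer. All names (`make_hypernetwork`, `generate_target_weights`, `target_forward`) are hypothetical, and nothing is trained here; the sketch only shows the mapping from conditions to weight space.

```python
import random

def make_hypernetwork(cond_dim, target_in, target_out, seed=0):
    """Hypernetwork parameters: one row of weights per generated target parameter."""
    rng = random.Random(seed)
    n_target_params = target_in * target_out + target_out  # weights + biases
    return [[rng.uniform(-0.5, 0.5) for _ in range(cond_dim)]
            for _ in range(n_target_params)]

def generate_target_weights(hyper, condition, target_in, target_out):
    """Run the hypernetwork: condition vector -> (W, b) of the target layer."""
    flat = [sum(h * c for h, c in zip(row, condition)) for row in hyper]
    split = target_in * target_out
    W = [flat[i * target_in:(i + 1) * target_in] for i in range(target_out)]
    b = flat[split:]
    return W, b

def target_forward(W, b, x):
    """Target network: a single linear layer using the generated weights."""
    return [sum(w * xi for w, xi in zip(row, x)) + bi for row, bi in zip(W, b)]

hyper = make_hypernetwork(cond_dim=2, target_in=3, target_out=2)
x = [1.0, 2.0, 3.0]

# Two different task conditions yield two different target layers.
W_a, b_a = generate_target_weights(hyper, [1.0, 0.0], 3, 2)
W_b, b_b = generate_target_weights(hyper, [0.0, 1.0], 3, 2)
y_a = target_forward(W_a, b_a, x)
y_b = target_forward(W_b, b_b, x)
```

The same input `x` produces different outputs for the two tasks because only the condition vector changed; in a trained system the hypernetwork's own parameters would be the ones being learned.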

Mohamed Taoufik TEKAYA retweeted

Agents forget everything after each task!

Graphiti builds a temporal knowledge graph for Agents that provides a memory layer to all interactions.
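As a rough illustration of what a temporal knowledge graph stores, the sketch below keeps facts as edges with a validity interval, so an older fact is closed rather than overwritten. This is not Graphiti's API; the `Fact` and `TemporalGraph` names and the integer timestamps are assumptions made for the example.

```python
from dataclasses import dataclass
from typing import List, Optional

@dataclass
class Fact:
    subject: str
    relation: str
    obj: str
    valid_from: int
    valid_to: Optional[int] = None  # None means the fact is still valid

class TemporalGraph:
    def __init__(self):
        self.facts: List[Fact] = []

    def assert_fact(self, subject, relation, obj, at):
        # Close any currently valid fact for the same subject and relation.
        for fact in self.facts:
            if (fact.subject == subject and fact.relation == relation
                    and fact.valid_to is None):
                fact.valid_to = at
        self.facts.append(Fact(subject, relation, obj, at))

    def query(self, subject, relation, at):
        # Return the object that was valid at time `at`, if any.
        for fact in self.facts:
            if (fact.subject == subject and fact.relation == relation
                    and fact.valid_from <= at
                    and (fact.valid_to is None or at < fact.valid_to)):
                return fact.obj
        return None

graph = TemporalGraph()
graph.assert_fact("alice", "works_at", "Acme", at=1)
graph.assert_fact("alice", "works_at", "Globex", at=5)
```

Querying `graph.query("alice", "works_at", at=3)` returns the older employer, while a query at time 7 returns the newer one, which is the property that lets an agent reason about what was true when.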

Fully open-source with 20k+ stars!

Learn how to use Graphiti MCP to connect all AI apps via a common memory layer (100% local):

Mohamed Taoufik TEKAYA retweeted

Explaining why BF16 → FP16 precision switch works for reinforcement learning (RL) ⬇️

Researchers from Sea AI Lab and @NUSingapore found that, for RL, precision matters most.

Typically, during RL fine-tuning, rounding errors and hardware-specific optimizations cause a mismatch between the training and inference engines - and RL becomes unstable.

So the researchers offered a simple change in precision format: from BF16 to the older FP16. Why did this work?

Both formats use 16 bits to represent numbers but distribute them differently:

- BF16 uses more exponent bits, giving it a wider numerical range, which works better for massive-scale pre-training.

- FP16 prioritizes mantissa (or fraction) bits - the part that stores numerical detail.

In rough numbers, FP16 gives ~8× more numerical precision than BF16.

So the switch shifts the focus to precision at the RL training stage, when all the work with wide value ranges is already done.

For a deeper explanation, check out our new article: turingpost.com/p/fp16
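The ~8× figure in the thread above follows from the bit layouts: FP16 has 10 mantissa bits and BF16 has 7, so the spacing between representable values near 1.0 differs by 2^3. A small sketch using only Python's standard library can check this. The FP16 rounding uses the `struct` module's half-precision format; the BF16 rounding is simulated by keeping the top 16 bits of the float32 encoding, and the helper names are hypothetical.

```python
import struct

FP16_MANTISSA_BITS = 10
BF16_MANTISSA_BITS = 7

def round_to_fp16(x):
    """Round a Python float to the nearest IEEE half-precision value."""
    return struct.unpack("<e", struct.pack("<e", x))[0]

def round_to_bf16(x):
    """Simulate bfloat16: keep the top 16 bits of the float32 encoding,
    rounding the dropped 16 bits to nearest, ties to even."""
    bits = struct.unpack("<I", struct.pack("<f", x))[0]
    rounding_bias = 0x7FFF + ((bits >> 16) & 1)
    bits = ((bits + rounding_bias) >> 16) << 16
    return struct.unpack("<f", struct.pack("<I", bits & 0xFFFFFFFF))[0]

# Spacing between adjacent representable values just above 1.0.
fp16_eps = 2.0 ** -FP16_MANTISSA_BITS
bf16_eps = 2.0 ** -BF16_MANTISSA_BITS
precision_ratio = bf16_eps / fp16_eps

x = 1.001
as_fp16 = round_to_fp16(x)  # keeps part of the small offset from 1.0
as_bf16 = round_to_bf16(x)  # the offset is below BF16 resolution
```

With these values, FP16 stores 1.001 as 1.0009765625 while BF16 collapses it to 1.0, which is the kind of small rounding difference the thread describes.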

Mohamed Taoufik TEKAYA retweeted

The plug-n-play framework to build MCP Agents (open-source)!

DeepMCPAgent provides dynamic MCP tool discovery to build MCP-powered Agents over LangChain/LangGraph.

You can bring your own model and quickly build production-ready agents.

Mohamed Taoufik TEKAYA retweeted

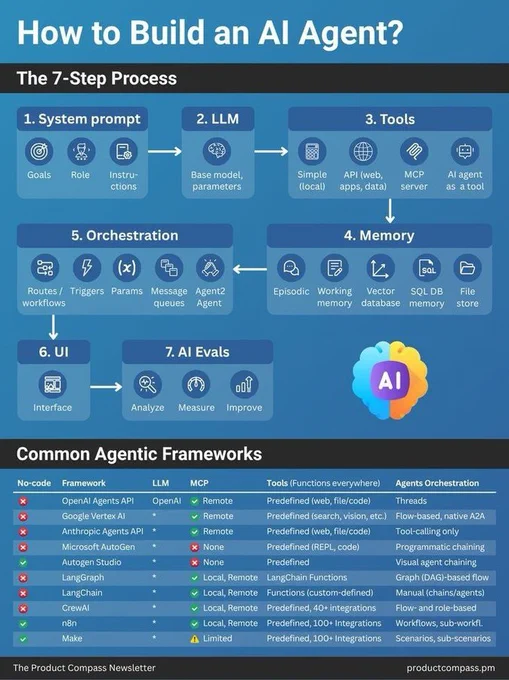

Can we please stop throwing an LLM in a chat UI and calling it “agentic”?

True agentic systems are built with actual intelligence.

And no, I don't mean artificial intelligence or some special model. I mean well-architected systems that 𝘥𝘰 things.

I've seen this pattern way too many times lately: slap GPT-4 into a chat interface, maybe add a system prompt, and suddenly it's marketed as an "agentic AI system."

That's not agentic. That's just... a chatbot.

𝗦𝗼 𝘄𝗵𝗮𝘁 𝗮𝗰𝘁𝘂𝗮𝗹𝗹𝘆 𝗺𝗮𝗸𝗲𝘀 𝗮 𝘀𝘆𝘀𝘁𝗲𝗺 𝗮𝗴𝗲𝗻𝘁𝗶𝗰?

True 𝗔𝗴𝗲𝗻𝘁𝗶𝗰 𝗔𝗜 requires specific architectural components working together:

• 𝗥𝗲𝗮𝘀𝗼𝗻𝗶𝗻𝗴 & 𝗣𝗹𝗮𝗻𝗻𝗶𝗻𝗴 - The LLM needs to break down complex tasks, plan execution routes, and iterate on its approach. Not just respond to prompts.

• 𝗧𝗼𝗼𝗹 𝗨𝘀𝗲 - Access to actual external tools it can call and interact with. Function calling that lets the agent DO things, not just talk about them.

• 𝗠𝗲𝗺𝗼𝗿𝘆 𝗦𝘆𝘀𝘁𝗲𝗺𝘀 - Both short-term (conversation state) and long-term (learning from past interactions). This is where vector databases become essential.

• 𝗦𝗲𝗹𝗳-𝗥𝗲𝗳𝗹𝗲𝗰𝘁𝗶𝗼𝗻 - The ability to evaluate its own outputs, critique its reasoning, and adjust its approach.

Here's the thing: not every component needs to be present, but you need 𝘮𝘰𝘳𝘦 𝘵𝘩𝘢𝘯 𝘫𝘶𝘴𝘵 𝘢𝘯 𝘓𝘓𝘔 to call something agentic.
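The four components listed above can be sketched as one small loop. This is a toy under stated assumptions: the planner and the reflection step are plain functions standing in for LLM calls, the tools are ordinary Python callables, and memory is a list. The names `plan`, `reflect`, `run_agent`, and the `"tool: argument then tool: argument"` task syntax are all hypothetical.

```python
def plan(task):
    """Stand-in for LLM planning: 'tool: arg then tool: arg' -> list of steps."""
    steps = []
    for part in task.split(" then "):
        name, _, arg = part.partition(":")
        steps.append((name.strip(), arg.strip()))
    return steps

def reflect(result):
    """Stand-in for self-reflection: accept any non-empty result."""
    return bool(result)

def run_agent(task, tools, memory):
    result = ""
    for name, arg in plan(task):
        tool = tools.get(name)
        # An empty argument means "use the previous step's result".
        result = tool(arg or result) if tool else ""
        if not reflect(result):
            result = f"step '{name}' failed"
        memory.append(f"{name} -> {result}")  # record of what was done
    return result

TOOLS = {"upper": str.upper, "reverse": lambda s: s[::-1]}
memory = []
answer = run_agent("upper: hello then reverse:", TOOLS, memory)
```

Even in this toy form, the difference from a chat wrapper is visible: the system decomposes the task, calls tools, checks each result, and keeps a record of what it did.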

𝗧𝗵𝗲 𝗱𝗶𝘀𝘁𝗶𝗻𝗰𝘁𝗶𝗼𝗻 𝗺𝗮𝘁𝘁𝗲𝗿𝘀:

An "AI agent" = an end application built for a specific task (like a docs search assistant)

"Agentic AI" = a system designed with agentic components like decision-making, reasoning loops, tool orchestration.

A simple chat interface, even with a great LLM? 𝗡𝗼𝘁. 𝗔𝗴𝗲𝗻𝘁𝗶𝗰.

A system using a decision tree architecture, with tool access, memory, and iterative planning? Now we're talking.

IMHO, we need more precision in our terminology. The capabilities are genuinely impressive when built properly - let's not dilute the term by applying it to everything with a text box.

Ok rant over, thanks for coming to my TED talk ✌️

Mohamed Taoufik TEKAYA retweeted

Here's my beginner's lecture series for RAG, Vector Database, Agent, and Multi-Agents: Download slides: 👇

* RAG: byhand.ai/p/beginners-guide-…

* Agents: byhand.ai/p/beginners-guide-…

* Vector Database: byhand.ai/p/beginners-guide-…

* Multi-Agents: byhand.ai/p/beginners-guide-…

---

100% original, made by hand ✍️

Join 47K+ readers of my newsletter: byhand.ai

Mohamed Taoufik TEKAYA retweeted

RAG - Retrieval Augmented Generation

Here’s how it works in practice

Knowledge Sources → PDFs, Docs, Databases

Embeddings → your data broken into chunks & vectorized

Vector Database → stores everything for quick retrieval

Retrieval → finds the most relevant context (top-k results)

Augmentation → query + context = stronger prompt

Generation → delivers accurate, context-driven responses

Why does it matter?

Because answers backed by your own data are always more reliable, relevant, and trusted.

Mohamed Taoufik TEKAYA retweeted

A breakdown of 𝗗𝗮𝘁𝗮 𝗣𝗶𝗽𝗲𝗹𝗶𝗻𝗲𝘀 𝗶𝗻 𝗠𝗮𝗰𝗵𝗶𝗻𝗲 𝗟𝗲𝗮𝗿𝗻𝗶𝗻𝗴 𝗦𝘆𝘀𝘁𝗲𝗺𝘀 👇 And yes, it can also be used for LLM based systems!

It is critical to ensure Data Quality and Integrity upstream of ML Training and Inference Pipelines. Trying to do that in downstream systems will cause unavoidable failures when working at scale.

There is a ton of work to be done on the Data Lake or LakeHouse layer. 𝗦𝗲𝗲 𝘁𝗵𝗲 𝗲𝘅𝗮𝗺𝗽𝗹𝗲 𝗮𝗿𝗰𝗵𝗶𝘁𝗲𝗰𝘁𝘂𝗿𝗲 𝗯𝗲𝗹𝗼𝘄.

𝘌𝘹𝘢𝘮𝘱𝘭𝘦 𝘢𝘳𝘤𝘩𝘪𝘵𝘦𝘤𝘵𝘶𝘳𝘦 𝘧𝘰𝘳 𝘢 𝘱𝘳𝘰𝘥𝘶𝘤𝘵𝘪𝘰𝘯 𝘨𝘳𝘢𝘥𝘦 𝘦𝘯𝘥-𝘵𝘰-𝘦𝘯𝘥 𝘥𝘢𝘵𝘢 𝘧𝘭𝘰𝘸:

𝟭: Schema changes are implemented in version control, once approved - they are pushed to the Applications generating the Data, Databases holding the Data and a central Data Contract Registry.

Applications push generated Data to Kafka Topics:

𝟮: Events emitted directly by the Application Services.

👉 This also includes IoT Fleets and Website Activity Tracking.

𝟮.𝟭: Raw Data Topics for CDC streams.

𝟯: A Flink Application(s) consumes Data from Raw Data streams and validates it against schemas in the Contract Registry.

𝟰: Data that does not meet the contract is pushed to Dead Letter Topic.

𝟱: Data that meets the contract is pushed to Validated Data Topic.

𝟲: Data from the Validated Data Topic is pushed to object storage for additional Validation.

𝟳: On a schedule Data in the Object Storage is validated against additional SLAs in Data Contracts and is pushed to the Data Warehouse to be Transformed and Modeled for Analytical purposes.

𝟴: Modeled and Curated data is pushed to the Feature Store System for further Feature Engineering.

𝟴.𝟭: Real Time Features are ingested into the Feature Store directly from Validated Data Topic (5).

👉 Ensuring Data Quality here is complicated since checks against SLAs are hard to perform.

𝟵: High Quality Data is used in Machine Learning Training Pipelines.

𝟭𝟬: The same Data is used for Feature Serving in Inference.
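Steps 3 to 5 above (validate against the contract, then route to a Dead Letter or Validated topic) can be sketched without any streaming infrastructure. In this illustration plain Python lists stand in for Kafka topics, a dict of field names to types stands in for a contract from the registry, and no Flink or Kafka API is used. The `CONTRACT` fields and function names are hypothetical.

```python
# Hypothetical data contract: required field name -> expected Python type.
CONTRACT = {"user_id": int, "event": str, "amount": float}

def violations(record, contract):
    """List every way a record breaks the contract (empty list = valid)."""
    problems = []
    for field_name, expected in contract.items():
        if field_name not in record:
            problems.append(f"missing field: {field_name}")
        elif not isinstance(record[field_name], expected):
            actual = type(record[field_name]).__name__
            problems.append(
                f"{field_name}: expected {expected.__name__}, got {actual}")
    return problems

def route(records, contract):
    """Split raw records into validated and dead-letter lists."""
    validated, dead_letter = [], []
    for record in records:
        problems = violations(record, contract)
        if problems:
            # Keep the errors with the record so it can be debugged later.
            dead_letter.append({"record": record, "errors": problems})
        else:
            validated.append(record)
    return validated, dead_letter

raw = [
    {"user_id": 1, "event": "purchase", "amount": 9.99},
    {"user_id": "2", "event": "purchase", "amount": 5.0},
    {"user_id": 3, "event": "refund"},
]
validated, dead_letter = route(raw, CONTRACT)
```

Only the first record reaches `validated`; the other two land in `dead_letter` together with the reason, which keeps bad data out of the downstream training and serving paths.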

Note: ML Systems are plagued by other Data related issues like Data and Concept Drifts. These are silent failures and, while they can be monitored, we can’t include them in the Data Contract.
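One simple way to monitor the data drift mentioned in the note is to compare a live feature's mean against the training distribution. The sketch below is only one possible check, chosen for brevity; the function name, the sample values, and any alert threshold are assumptions, and real monitoring would usually compare full distributions rather than means.

```python
import statistics

def mean_shift_score(reference, live):
    """Distance of the live mean from the reference mean,
    measured in reference standard deviations."""
    ref_mean = statistics.fmean(reference)
    ref_std = statistics.pstdev(reference)
    live_mean = statistics.fmean(live)
    if ref_std == 0:
        return 0.0 if live_mean == ref_mean else float("inf")
    return abs(live_mean - ref_mean) / ref_std

# Feature values seen at training time versus two serving windows.
reference = [10, 11, 9, 10, 12, 8, 10, 11, 9, 10]
stable_window = [10, 9, 11, 10]
drifted_window = [15, 16, 14, 15]

stable_score = mean_shift_score(reference, stable_window)
drifted_score = mean_shift_score(reference, drifted_window)
```

A score near zero suggests the window resembles the training data, while a score of several standard deviations is a signal worth alerting on. Concept drift, where the relationship between features and labels changes, would need label-based monitoring instead.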

Let me know your thoughts! 👇

#LLM #AI #MachineLearning

Mohamed Taoufik TEKAYA retweeted

A must-read survey→ Efficient Vision-Language-Action Models

In the evolving robotics era, it's a concise roadmap for building practical, deployable embodied AI systems via 3 key pillars:

- Efficient Model Design: smaller, optimized architectures

- Efficient Training: lower compute costs

- Efficient Data Collection: smarter robotic data use

Also covers applications, key challenges, and future research

Take a look at Picto, a WIP mobile game where a character interacts with real-life objects you catch on camera.

Learn more about the game: 80.lv/articles/watch-a-tiny-…

@GustavAlmstrom

Mohamed Taoufik TEKAYA retweeted

As factories automate, robotics become essential to production. They drive smart manufacturing growth, especially in automotive and electronics, handling most new installations.

Source @VisualCap Link bit.ly/42hEj4r via @antgrasso #Robotics #Automotive #Manufacturing

Mohamed Taoufik TEKAYA retweeted

4 opportunities for business to help children overcome the challenges they are facing today wef.ch/3ECCDoW @unicef @unicefchief #SDIS21

rt @wef

Mohamed Taoufik TEKAYA retweeted

Deepfake is AI-generated fake content that mimics real faces and voices. A robust defense requires staff training and teamwork on cybersecurity.

Download the free report for updates on the latest cyber threats on @DeltalogiX > bit.ly/CyberInsight

#Cybersecurity

Mohamed Taoufik TEKAYA retweeted

AI has found application in sectors such as IT, supply chain, HR, finance, and sales. While its implementation in marketing is currently trailing, an overall surge in AI adoption is expected by 2025.

Source @raconteur Link bit.ly/42mnKT6 rt @antgrasso #AI

Mohamed Taoufik TEKAYA retweeted

🏆📚This 200-Page LLM Paper Is a 𝗚𝗼𝗹𝗱𝗺𝗶𝗻𝗲 — and it’ll save you months

𝗣𝗿𝗼𝗺𝗽𝘁𝗶𝗻𝗴, 𝘁𝗿𝗮𝗶𝗻𝗶𝗻𝗴, 𝗮𝗹𝗶𝗴𝗻𝗺𝗲𝗻𝘁 — finally crystal clear.

If you don’t have time to read all 200+ pages, here are the most valuable 𝘁𝗮𝗸𝗲𝗮𝘄𝗮𝘆𝘀 ↓

》 𝗣𝗿𝗲-𝗧𝗿𝗮𝗶𝗻𝗶𝗻𝗴:

How AI Gets Smart Before It Gets Useful

Before an LLM can generate anything meaningful, it must pre-train—absorbing patterns from vast datasets. This paper breaks it down:

✸ Unsupervised, Supervised, and Self-Supervised Pre-training – Why AI learns better with less human labeling.

✸ Encoder vs. Decoder vs. Encoder-Decoder Models – The three fundamental architectures and when to use them.

✸ BERT & Transformers – How they rewrote the rules of AI understanding.

》 𝗚𝗲𝗻𝗲𝗿𝗮𝘁𝗶𝘃𝗲 𝗠𝗼𝗱𝗲𝗹𝘀:

Where AI Stops Memorizing and Starts Creating

Pre-training gives LLMs knowledge. Generative models give them a voice.

✸ Decoder-Only Transformers (GPT-style models) – The backbone of AI creativity.

✸ Training & Fine-tuning LLMs – How models evolve from generalists to specialists.

✸ Alignment & Safety – Why raw AI outputs need guardrails (and how RLHF fixes it).

》𝗣𝗿𝗼𝗺𝗽𝘁 𝗘𝗻𝗴𝗶𝗻𝗲𝗲𝗿𝗶𝗻𝗴:

The Skill That Separates AI Users From AI Builders

If you’re not prompting correctly, you’re missing out on 90% of an LLM’s potential. This paper covers:

✸ In-Context Learning – Teaching AI on the fly without retraining.

✸ Chain of Thought & Self-Refinement – Making AI reason instead of regurgitate.

✸ RAG & Tool Use – Giving LLMs external memory for better accuracy.

》 𝗔𝗜 𝗔𝗹𝗶𝗴𝗻𝗺𝗲𝗻𝘁:

Teaching AI to Work for Humans (Not Against Them)

One of the biggest challenges in AI is getting it to follow human intent. The paper breaks down:

✸ Instruction Fine-Tuning – How models learn from curated data.

✸ Reinforcement Learning from Human Feedback (RLHF) – Why AI listens to your preferences.

✸ Inference-Time Alignment – Tweaking responses without retraining the whole model.

☆ Paper: arxiv.org/pdf/2501.09223

≣≣≣≣≣≣≣≣≣≣≣≣≣≣≣≣≣≣≣≣≣≣≣≣≣≣

⫸ꆛ Want to build Real-World AI Agents?

Join My 𝗛𝗮𝗻𝗱𝘀-𝗼𝗻 𝗔𝗜 𝗔𝗴𝗲𝗻𝘁 𝟱-𝗶𝗻-𝟭 𝗧𝗿𝗮𝗶𝗻𝗶𝗻𝗴!

➠ Build Agents for Healthcare, Finance, Smart Cities & More

➠ Master 5 Modules: 𝗠𝗖𝗣 · LangGraph · PydanticAI · CrewAI · Swarm

➠ Includes 9 Full Projects

👉 𝗘𝗻𝗿𝗼𝗹𝗹 𝗡𝗢𝗪 (𝟱𝟲% 𝗢𝗙𝗙):

maryammiradi.com/ai-agents-m…