Building robot foundation models @GeneralistAI. Prev @GoogleDeepMind, PhD @Princeton. One experiment away from magic.

Joined September 2017

Pinned Tweet

This is one-shot assembly: you show examples of what to build, and the robot just does it. (see original post: generalistai.com/blog)

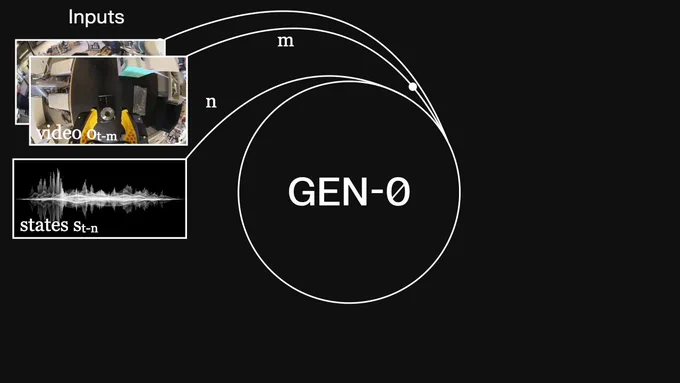

To share more on how this works, the robot is controlled in real time by a neural network that takes in video pixels and outputs 100Hz actions. The video below is part of the raw input passed directly into the model. I also like this view (at 1x speed) because it shows more of the (I think very cool) subtle moments of dexterity near the fingertips 👌

One-shot assembly seemed like a dream even just a year ago. It's not easy. It requires both the high-level reasoning of "what to build" (recognizing the geometry of the structures presented by the human), and the low-level visuomotor control of "how to build it" (purposefully re-orienting individual pieces and nudging them together in place). While it is possible to manually engineer a complex system for this (e.g. w/ hierarchical control, or explicit state representations), we were curious whether our own foundation model could do it all end-to-end with just some post-training data.

Surprisingly, it just worked. Nothing about the recipe is substantially different from any other demo we’ve run in the past, and we’re excited about its implications for model capabilities:

• On contextual reasoning, these models can (i) attend to task-related pixels in the peripheral view of the video inputs, and (ii) retain this knowledge in-context while ignoring irrelevant background. This is useful for generalizing to a wide range of real workflows: e.g. paying attention to what’s coming down the conveyor line, or glancing at the instructions displayed on a nearby monitor.

• On dexterity, these models can produce contact-rich "commonsense" behaviors that can be difficult to pre-program or write language instructions for, e.g. rolling a brick slightly to align its studs against the bottom of another, re-grasping to get a better grip or to move out of the way before a forceful press, or gently pushing the corners of a brick against the mat to rotate it in hand and stand it up vertically (i.e. extrinsic dexterity).

These aspects work together to form a capability that resembles fast adaptation — a hallmark of intelligence, relevant for real use cases. This has also expanded my own perspective on what's possible with robot learning, using a recipe that's repeatable for many more skills.

This milestone stands on top of the solid technical foundations we’ve built here at Generalist: hardcore controls & hardware, all in-house built models, and a data engine that "just works." We're a small group of hyper-focused engineers, and hands-down the highest talent-density team I’ve ever worked with. We're accelerating and scaling aggressively towards unlocking next-generation robot intelligence. Building Legos is just one example, and it's clear to me that we're headed towards a future where robots can do just about anything we want them to.

It's coming, and we're going to make it happen.

Glad you caught this 🙂

Never thought we’d go this deep into networking.

Move enough robot data globally 🌎 and your NAT gateways might get silently banned mid-Atlantic... 🤦

The world is not ready yet for massive-scale robots, but it's coming, and we’ll lay the groundwork for it.

Andy Zeng retweeted

ok actually i think this is probably the most underappreciated part. these guys are serious about scaling. it’s not just talk.

Andy Zeng retweeted

At Generalist, robotics is no longer limited by data. Breaking through the data wall has enabled us to become a true foundation model company, building and scaling models from the ground up for embodied intelligence. In today’s blog post we’re excited to share details about GEN-0, our first generation of embodied foundation models:

• GEN-0 is a class of custom, natively cross-embodied, model architectures built from the ground up for the decadent dexterous behaviors Generalist has become known for.

• We have scaled GEN-0 to 10B+ fully active parameters and continue to push the boundaries of scale for training and inference.

• GEN-0 foundation models are thus far pretrained on 270,000+ hours of real world diverse manipulation data. We collect 10,000 hours of data per week and are accelerating.

• We are now seeing that massive-scale pretraining leads to beautifully sample-efficient finetuning on downstream tasks, delivering on the fundamental promise of embodied foundation models.

• The scale of our data trove has enabled us to conduct detailed science on pretraining. Scaling Laws are alive and well in robotics.

All of the above and much more on our blog post: generalistai.com/blog/nov-04…

General dexterity involves "physical commonsense": learning the long tail of cases like:

🤏nudging objects to make space for fingers to grasp

🫴placing down slipping objects to get better grip

etc...

This👇shares more on how we think robots🦾can get there with models & lots of data

"Train a model to predict how many timesteps left until task success"

- a simple, yet powerful way to get rewards from episodic BC data

Lots of nuggets in the paper (including steps-to-go fn is distributionally multimodal)

Kamyar's post 👇 on how it drives RL self-improvement

Super excited to finally share our work on “Self-Improving Embodied Foundation Models”!!

(Also accepted at NeurIPS 2025)

• Online on-robot Self-Improvement

• Self-predicted rewards and success detection

• Orders of magnitude sample-efficiency gains compared to SFT alone

• Generalization enables novel skill acquisition

🧵👇[1/11]
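
As a rough illustration of the steps-to-go idea quoted above ("predict how many timesteps left until task success"), here is a minimal sketch, not the paper's implementation. It assumes only successful demonstration episodes are used, labels each timestep with the number of steps remaining until the episode ends, and, as an assumed shaping rule, treats a drop in predicted steps-to-go as positive reward. The names `steps_to_go_labels` and `shaped_reward` are hypothetical.

```python
from typing import List


def steps_to_go_labels(episode_length: int) -> List[int]:
    """Regression targets for one successful episode.

    Timestep t is labeled with the number of steps remaining until the
    final (successful) timestep, so the last step gets 0.
    Assumption: the episode ends exactly at task success.
    """
    if episode_length <= 0:
        raise ValueError("episode_length must be positive")
    return [episode_length - 1 - t for t in range(episode_length)]


def shaped_reward(pred_now: float, pred_next: float) -> float:
    """Assumed reward: progress made between two consecutive observations.

    Positive when the model predicts fewer steps remaining after the action,
    negative when the action moved the robot further from success.
    """
    return pred_now - pred_next


# Labels for a 4-step successful demonstration.
labels = steps_to_go_labels(4)

# Reward when the predicted steps-to-go falls from 5.0 to 3.0.
reward = shaped_reward(5.0, 3.0)
```

In practice the predictions would come from a learned model conditioned on observations. Since the post notes that the steps-to-go function is distributionally multimodal, a plain scalar regression like this may be too crude.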

Andy Zeng retweeted

One shot imitation learning! Brings back good memories from eons ago (aka 2016).

This is probably my favorite demo of the year (so far). The smoothness and agility of these systems speak to the quality of the full stack system. Sometimes I tell my students that a good number of problems could be fixed with a better robot controller and overall system integration (time sync etc.) rather than fancy learning algorithms and bigger models ...

Imagine having a "copy-paste" button 📋 for the physical world – a pretty good litmus test for embodied AGI

Andy Zeng retweeted

🎉 The Advanced Robotics Best Survey Paper Award has been awarded to our survey paper "Real-World Robot Applications of Foundation Models: A Review"!

We are truly grateful to everyone who contributed!

Thank you @__tmats__, Andrew, @jiaxianguo07, @chris_j_paxton, and @andyzeng_ !

How can existing robot systems be replaced with foundation models?

Check out our new survey paper on the real-world robot applications of foundation models:

arxiv.org/abs/2402.05741

Thread👇

Andy Zeng retweeted

Excited to be out of stealth!

Since graduating from MIT, I’ve been building something incredibly FUN.

Every day at work is full of laughter and “wows.”

Super proud of the fantastic team making it all happen. Let’s go 🚀

Today we're excited to share a glimpse of what we're building at Generalist. As a first step towards our mission of making general-purpose robots a reality, we're pushing the frontiers of what end-to-end AI models can achieve in the real world.

Here's a preview of our early results in autonomous general-purpose dexterous capabilities – fast, reactive, smooth, precise, bi-manual coordinated sensorimotor control.

Andy Zeng retweeted

There’s something satisfying about seeing the robot slot in the box flaps so nicely at the end ... 😌

Andy Zeng retweeted

Check out our robots! 🤖

To see emergent behaviors from low-level policies was a first for many of us on the team. They don't happen often enough yet, but it certainly feels like we're headed in the right direction. Reach out if you're interested in working together.

Andy Zeng retweeted

I’m excited to announce @GeneralistAI_. We believe the path to general-purpose robots starts with precise, fast, and resilient manipulation. What you see here are end-to-end AI models, trained from scratch, doing some very hard tasks. This is pixels in, actions out.

While I’m proud of how far we’ve pushed the frontier in a year, this is only the beginning of the end-to-end AI era in robotics. This is not our “ChatGPT moment” — this is what the “GPT-2 of robotics” looks like.

Robotics is at an inflection point right now that feels analogous to NLP pre-GPT-3. IBM Watson, sentiment analysis, translation, and image classification were all specialized, hardcoded tasks. Robotics has picking, fulfillment, and dozens of other specialized, hardcoded tasks. LLMs and ChatGPT proved the market was bigger than just those NLP tasks. We are proving a Generalist robot can be more.

Andy Zeng retweeted

Last Spring I took off from Google DeepMind, and I've been heads-down building since with an amazing team. Excited to share more today -- introducing Generalist.

It's felt to me for a couple years, since we started bringing multimodal LLMs into robotics, that a subset of the ingredients for creating truly general purpose robot intelligence seem to be falling into place.

But what's been needed is a new focus at the intersection of data, models, and hardware. No amount of downloading data from the internet, by itself, will create the fast, fluid, precise, reactive layer of intelligence needed to interact with the physical world.

In due time we'll be excited to share more, but what we're sharing today is about what the models have grown to be capable of.

We think we've hit a new point on the frontier of general purpose real world intelligence – new levels of simultaneously fast, smooth, precise, reactive, bi-manual coordinated dexterity.

Looking forward to sharing even more. Super proud of the team we've put together, and where we're headed. Reach out if you'd like to chat about working together!

Andy Zeng retweeted

We've been heads-down building. The robots have gotten pretty good. We'll be sharing a brief update soon.

Andy Zeng retweeted

Position control can only go so far. For contact-rich tasks, robots must master both position and force – that’s where compliance comes in!

But what’s the right compliance? 🤔Hint: being always compliant in all directions won’t cut it.

Check out @YifanHou2’s solution 😉⤵️

Can robots learn to manipulate with both care and precision? Introducing Adaptive Compliance Policy, a framework to dynamically adjust robot compliance both spatially and temporally for given manipulation tasks from human demonstrations. Full detail at adaptive-compliance.github.i…
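
To make the "not compliant in all directions" point above concrete, here is a minimal sketch of direction-dependent stiffness. It is an illustrative assumption, not the Adaptive Compliance Policy implementation: the controller is soft (low stiffness) along one chosen direction and stiff in the orthogonal directions, and the commanded restoring force is the stiffness matrix times the position error. The function names and the numeric gains are hypothetical.

```python
from math import sqrt
from typing import List, Sequence

Matrix = List[List[float]]


def anisotropic_stiffness(direction: Sequence[float],
                          k_low: float, k_high: float) -> Matrix:
    """Build a 3x3 stiffness matrix K = k_high * (I - d d^T) + k_low * d d^T.

    `direction` is the axis along which the robot should be compliant;
    it is normalized to a unit vector d before use.
    """
    norm = sqrt(sum(c * c for c in direction))
    if norm == 0.0:
        raise ValueError("direction must be non-zero")
    d = [c / norm for c in direction]
    return [
        [k_high * ((1.0 if i == j else 0.0) - d[i] * d[j]) + k_low * d[i] * d[j]
         for j in range(3)]
        for i in range(3)
    ]


def restoring_force(stiffness: Matrix, pos_error: Sequence[float]) -> List[float]:
    """Force command f = K @ (x_target - x) for a 3D position error."""
    return [sum(stiffness[i][j] * pos_error[j] for j in range(3))
            for i in range(3)]


# Compliant along z (e.g. pressing down on a surface), stiff in x and y.
K = anisotropic_stiffness([0.0, 0.0, 1.0], k_low=100.0, k_high=1000.0)

# The same 1 cm error yields a much smaller force along the compliant axis.
force = restoring_force(K, [0.01, 0.0, 0.01])
```

A policy that adjusts compliance "spatially and temporally" would, under this sketch's assumptions, output a new `direction` and stiffness values at each step instead of using fixed ones.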

UMI data doubles as a 3D vision and robotics dataset. I'm curious to see what people can do with it! Consider prototyping with and contributing to the community dataset 👇

We recently launched umi-data.github.io as a community-driven effort to pool UMI-related data together. 🦾

If you are using a UMI-like system, please consider adding your data here. 🤩🤝

No dataset is too small; small data WILL add up!📈