The best way to code with AI.

Joined August 2023

- Tweets 204

- Following 44

- Followers 270,332

- Likes 555

Cursor retweeted

I spent the last 60 days working at Cursor. It's been one of the most thrilling phases of my professional life.

There's a lot of mystique around the company. Over the last two months, some things matched my expectations; many did not.

I wrote an essay for @joincolossus about things that have surprised me about the company and its culture so far.

joincolossus.com/article/ins…

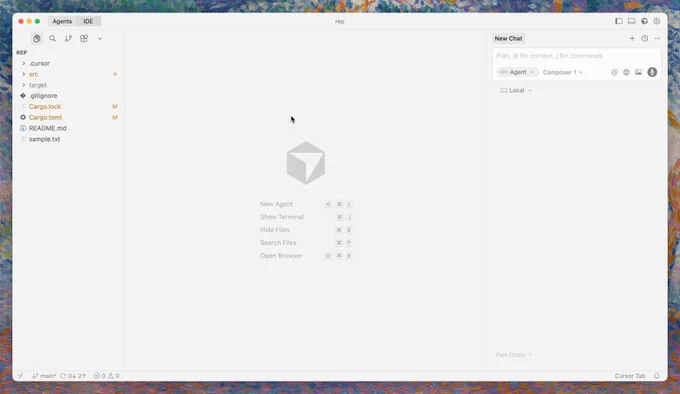

Cloud agents are now available in-editor.

Switch from local → cloud to run agents while you're away from your computer.

Here’s everything new and how our team uses cloud agents: cursor.com/blog/cloud-agents

Cursor retweeted

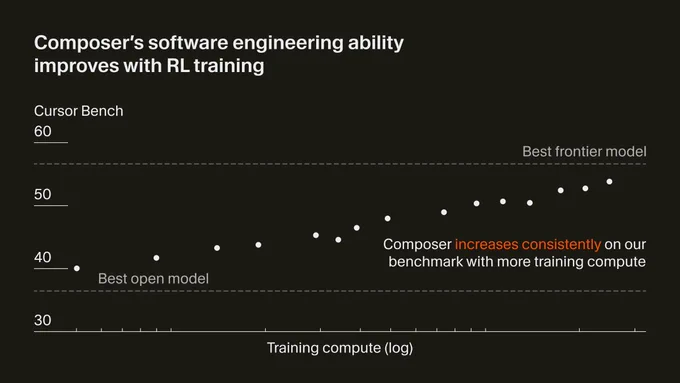

Composer is a new model we built at Cursor. We used RL to train a big MoE model to be really good at real-world coding, and also very fast.

cursor.com/blog/composer

Excited for the potential of building specialized models to help in critical domains.

Read about everything that's new in 2.0. More tomorrow!

cursor.com/blog/2-0