Kamil Tomšík retweeted

Codex is writing me a thread-safe pool...

I am writing my blog post :)

It's a good way to split responsibilities

Kamil Tomšík retweeted

Collaborator and friend Dan Alistarh talks at ETH about using the new NVFP4 and MXFP4 block formats for inference.

Some results go from "terrible" accuracy to acceptable, using micro-rotations to smooth out outliers within blocks.

arxiv.org/abs/2509.23202

Great collaboration and cool stuff
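To make the "micro rotations" idea concrete, here is a minimal sketch. It is not the paper's method. It fake-quantizes a 16-element block to FP4 (E2M1) magnitudes with one shared scale, then repeats the quantization after an orthonormal Walsh-Hadamard rotation of the block. The block contents, the block size, and the full-precision `amax / 6` scale are assumptions for illustration. Real NVFP4 stores its block scale in FP8, and MXFP4 uses 32-element blocks with power-of-two scales. Because the rotation is orthogonal, it can be undone exactly after dequantization. What it changes is how evenly the outlier's energy is spread before rounding.

```python
import math

# Non-negative magnitudes representable by FP4 E2M1, the element type of
# both MXFP4 and NVFP4. The sign is stored separately.
FP4_GRID = [0.0, 0.5, 1.0, 1.5, 2.0, 3.0, 4.0, 6.0]


def quantize_block(block):
    """Fake-quantize a block to FP4 with one shared scale (quantize + dequantize).

    Simplification (assumption): the scale is amax / 6 in full precision, so the
    largest element maps exactly to 6; real formats also round the scale itself.
    """
    amax = max(abs(v) for v in block)
    if amax == 0.0:
        return list(block)
    scale = amax / FP4_GRID[-1]
    out = []
    for v in block:
        # Round |v| / scale to the nearest representable FP4 magnitude.
        q = min(FP4_GRID, key=lambda g: abs(abs(v) / scale - g))
        out.append(math.copysign(q * scale, v))
    return out


def hadamard(block):
    """Orthonormal fast Walsh-Hadamard transform; applying it twice is the identity."""
    n = len(block)
    if n & (n - 1):
        raise ValueError("block size must be a power of two")
    out = list(block)
    h = 1
    while h < n:
        for i in range(0, n, 2 * h):
            for j in range(i, i + h):
                a, b = out[j], out[j + h]
                out[j], out[j + h] = a + b, a - b
        h *= 2
    norm = math.sqrt(n)
    return [v / norm for v in out]


def sq_error(a, b):
    return sum((x - y) ** 2 for x, y in zip(a, b))


# Hypothetical block: one outlier followed by fifteen small values.
block = [8.0] + [0.5] * 15

plain = quantize_block(block)
# Rotate, quantize, then rotate back (the transform is its own inverse).
rotated = hadamard(quantize_block(hadamard(block)))

err_plain = sq_error(block, plain)
err_rot = sq_error(block, rotated)
print(f"squared error without rotation: {err_plain:.4f}")  # 0.4167
print(f"squared error with rotation:    {err_rot:.4f}")    # 0.0586
```

In this toy block, the unrotated outlier forces a coarse scale, so every 0.5 is rounded up to about 0.667. After rotation, all entries sit near the same magnitude, and the error drops by roughly 7x. Gains on real weights will differ, and the paper is the place to check how its rotations are actually applied.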

Kamil Tomšík retweeted

Google has made an awesome proposal in the US Epic v. Google case, subject to court approval, to open up Android and settle our disputes. It genuinely doubles down on Android's original vision as an open platform to streamline competing store installs globally, reduce service fees for developers on Google Play, and enable third-party in-app and web payments.

This is a comprehensive solution, which stands in contrast to Apple’s model of blocking all competing stores and leaving payments as the only vector for competition. The public filings are live.

Exciting news! Together with Epic Games we have filed a proposed set of changes to Android and Google Play that focus on expanding developer choice and flexibility, lowering fees, and encouraging more competition all while keeping users safe. If approved, this would resolve our litigations. We look forward to discussing further with the Judge on Thursday.

Kamil Tomšík retweeted

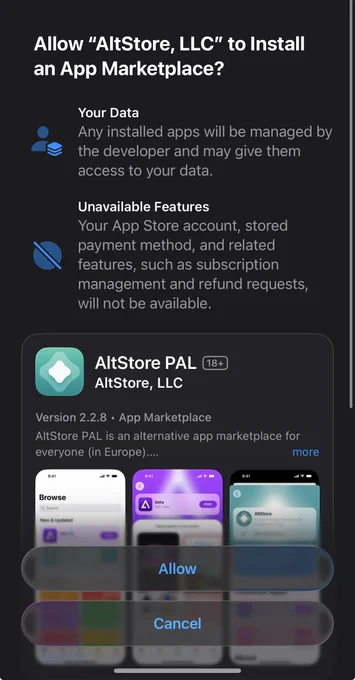

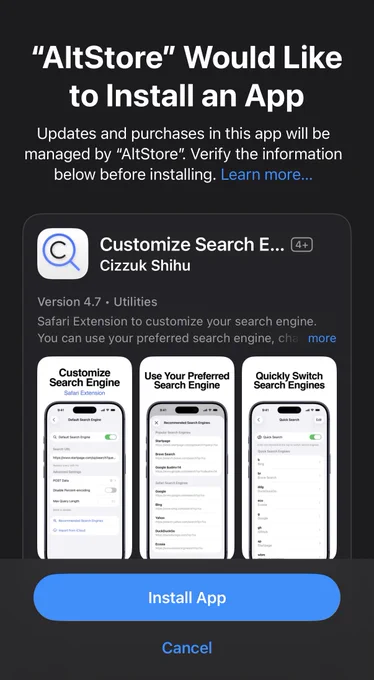

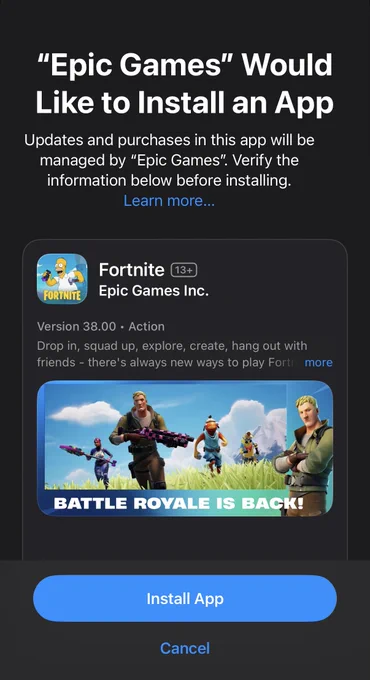

We haven't confirmed this, and haven't taken any steps related to it on our end, but: The tweet below suggests iOS 26.2 Beta 1 allows installation of AltStore PAL and Epic Games Store in Japan.

Kamil Tomšík retweeted

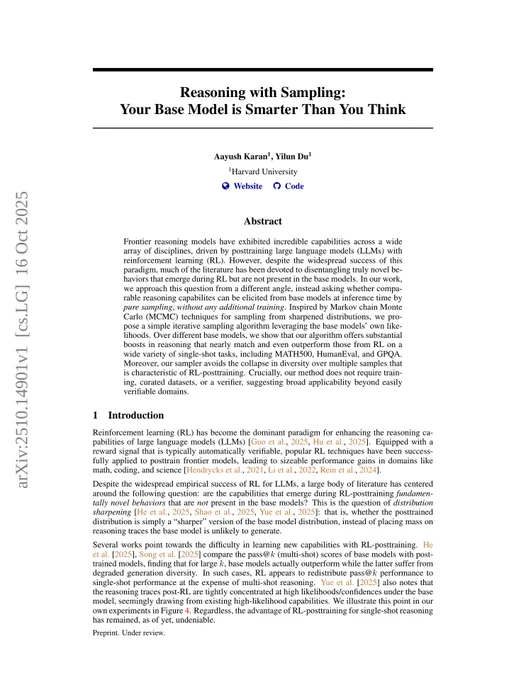

Your Base Model is Smarter Than You Think

This new paper reveals how pure, inference-time sampling can unlock advanced reasoning from LLMs. It matches or outperforms reinforcement learning on complex tasks, all without any additional training or verifiers.

Kamil Tomšík retweeted

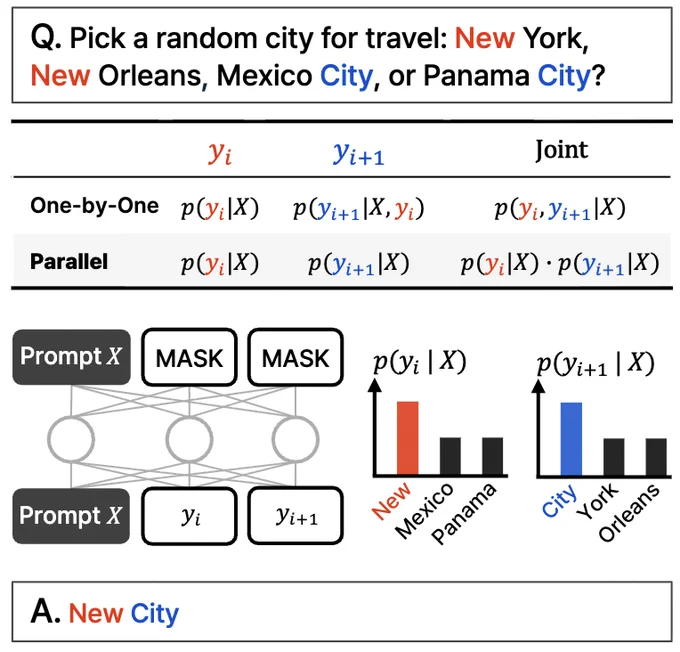

DLLMs seem promising... but parallel generation is not always possible

Diffusion-based LLMs can generate many tokens at different positions at once, while most autoregressive LLMs generate tokens one by one.

This makes diffusion-based LLMs highly attractive when we need fast generation with less compute.

A big question: is parallel generation possible without losing modeling accuracy?

The answer is no. There are fundamental limits on how much parallelism we can achieve.

Consider this example:

“Pick one city uniformly at random from the following four cities:

New York, New Orleans, Mexico City, or Panama City.”

Then,

P(Y₁ = New, Y₂ = York) = 1/4,

P(Y₁ = New, Y₂ = Orleans) = 1/4, and so on.

Thus, P(Y₁ = New) = 1/2, P(Y₂ = City) = 1/2.

If you choose to generate Y₁ and Y₂ in parallel, no matter which decoding algorithm you use …

You’re doomed to sample “New City.”

None of today’s DLLMs can generate these two words correctly without giving up parallelism.
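The arithmetic above can be checked with a few lines of Python. This sketch uses exact fractions and no model. It computes the per-position marginals a parallel decoder would see and shows both ways that decoder fails. Greedy decoding returns "New City" deterministically. Independent sampling produces "New City" a quarter of the time and a real city only half the time. The helper name `marginals` is illustrative.

```python
from collections import Counter
from fractions import Fraction

# The four equally likely answers from the example, split into two tokens.
CITIES = [("New", "York"), ("New", "Orleans"), ("Mexico", "City"), ("Panama", "City")]


def marginals(pairs):
    """Per-position marginals P(Y1) and P(Y2) of a uniform distribution over pairs."""
    p = Fraction(1, len(pairs))
    m1, m2 = Counter(), Counter()
    for y1, y2 in pairs:
        m1[y1] += p
        m2[y2] += p
    return m1, m2


m1, m2 = marginals(CITIES)

# Greedy parallel decoding: take the argmax at each position independently.
greedy = (max(m1, key=m1.get), max(m2, key=m2.get))

# Parallel sampling: draw Y1 and Y2 independently from their marginals.
p_new_city = m1["New"] * m2["City"]
p_valid = sum(m1[y1] * m2[y2] for y1, y2 in CITIES)

print(greedy)               # ('New', 'City')
print(p_new_city, p_valid)  # 1/4 1/2
```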

-----

Why is this the case?

In fact, we never train LLMs to learn the joint distribution over multiple tokens in a single forward pass.

We always teach a single-token marginal distribution conditioned on context.

(The same holds for autoregressive models too.)

Therefore, sampling multiple tokens at once only matches the true joint distribution when those tokens are mutually independent given the current context.

And this limitation of parallel sampling can be precisely formalized.

One can derive an information-theoretic limit that’s decoding-strategy-agnostic, and also derive strategy-specific limits.
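Here is one standard way to state such a strategy-agnostic limit. It is a sketch from basic information theory, and the paper's exact bounds may be phrased differently. Any decoder that draws Y₁ and Y₂ independently given the context x is sampling from a product distribution q₁(y₁)q₂(y₂). In KL divergence, the closest such product to the true joint is the product of the marginals, and its gap is exactly the conditional mutual information:

```latex
\min_{q_1, q_2} \mathrm{KL}\big(p(y_1, y_2 \mid x) \,\|\, q_1(y_1)\, q_2(y_2)\big)
  = \mathrm{KL}\big(p(y_1, y_2 \mid x) \,\|\, p(y_1 \mid x)\, p(y_2 \mid x)\big)
  = I(Y_1; Y_2 \mid X = x)

% City example: H(Y_1) = H(Y_2) = 1.5 bits and H(Y_1, Y_2) = 2 bits, so
I(Y_1; Y_2) = H(Y_1) + H(Y_2) - H(Y_1, Y_2) = 1.5 + 1.5 - 2 = 1 \ \text{bit}
```

The gap is zero exactly when Y₁ and Y₂ are conditionally independent. In the city example, every parallel decoder's output distribution stays at least 1 bit (in KL) away from the true joint.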

-----

So are DLLMs doomed? No!

They have huge potential to save compute and time.

But:

(1) we need to be aware of their fundamental limitations, and

(2) we need to design better training and decoding strategies.

In particular, there’s huge room for improvement in decoding.

Why?

Ideally, we want the model to control the degree of parallelism during generation.

At the same time, it should choose a subset of future tokens that are almost mutually independent given the current context.
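One simple heuristic in this spirit is confidence-threshold unmasking. It is shown here as a hypothetical sketch, not any specific model's decoder. At each step, the decoder unmasks in parallel every position whose top token clears a threshold. If none does, it falls back to the single most confident position. This approximates "almost mutually independent" because I(Y₁; Y₂) ≤ min(H(Y₁), H(Y₂)), so two near-certain positions can share little information. The function name, the input format (a dict from position to token probabilities), and the 0.9 threshold are all assumptions.

```python
def select_positions(marginals, threshold=0.9):
    """Choose which masked positions to decode together in one step.

    marginals: hypothetical input, {position: {token: probability}} for the
    currently masked positions. Returns sorted (position, token) pairs.
    """
    # Top token and its probability at each masked position.
    best = {pos: max(dist.items(), key=lambda kv: kv[1]) for pos, dist in marginals.items()}
    chosen = [(pos, tok) for pos, (tok, p) in best.items() if p >= threshold]
    if not chosen:
        # Nothing is confident enough: decode one position, then re-predict the rest.
        pos = max(best, key=lambda q: best[q][1])
        chosen = [(pos, best[pos][0])]
    return sorted(chosen)


# Easy step: both positions are near-certain, so they are decoded together.
print(select_positions({0: {"the": 0.97, "a": 0.03}, 1: {"cat": 0.95, "dog": 0.05}}))
# [(0, 'the'), (1, 'cat')]

# The city example: neither position is confident, so only one token is decoded.
print(select_positions({0: {"New": 0.5, "Mexico": 0.25, "Panama": 0.25},
                        1: {"York": 0.25, "Orleans": 0.25, "City": 0.5}}))
# [(0, 'New')]
```

Once "New" is committed, a well-trained model re-predicting position 1 would put its mass on "York" or "Orleans" instead of "City". Whether a fixed threshold tracks real dependence well is the kind of question a stress test has to answer.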

Are current decoding strategies good at this?

Hard to tell.

Most DLLMs were never stress-tested for it.

-----

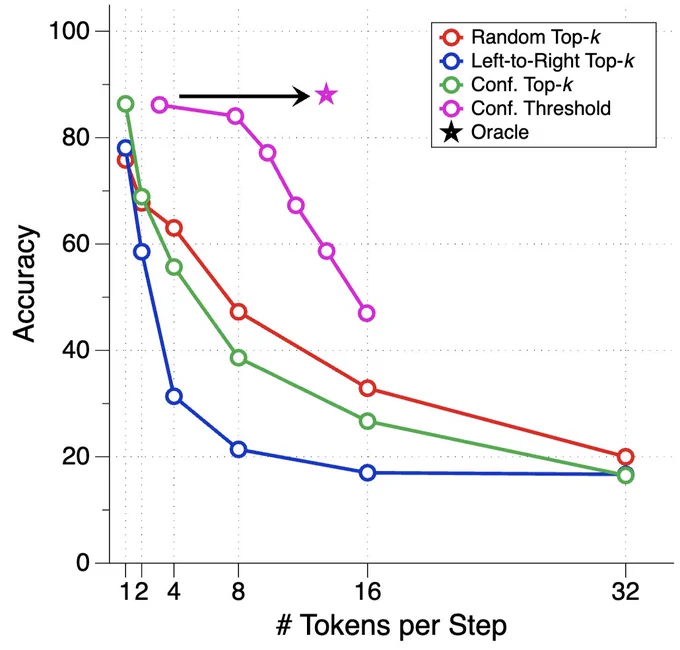

That’s why we introduced a synthetic benchmark to stress-test DLLMs.

We call it ParallelBench.

The idea is simple: these are natural language tasks, but carefully designed so that parallel generation is inherently difficult.

(Think “New City,” but with more natural, realistic tasks.)

What did we find?

We tested popular DLLMs with various decoding algorithms, and none came close to “oracle” performance, the ideal performance you’d get if the model could optimally adjust its parallelism during decoding.

-----

Takeaway:

(1) Parallel generation is not always possible; check out our paper for more details :)

(2) If you can design a DLLM that matches oracle performance on our benchmark, well, who knows, you might just get a call from someone in Menlo Park. 😉

Kamil Tomšík retweeted

The most interesting part for me is where @karpathy describes why LLMs aren't able to learn like humans.

As you would expect, he comes up with a wonderfully evocative phrase to describe RL: “sucking supervision bits through a straw.”

A single end reward gets broadcast across every token in a successful trajectory, upweighting even wrong or irrelevant turns that lead to the right answer.
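The "straw" can be sketched with an outcome-reward policy-gradient (REINFORCE-style) loss, the simplest form of the setup described. This is an illustrative assumption, not the training recipe of any particular model. Every step in the trajectory receives the same advantage. A wrong turn that happened to precede the right answer is therefore reinforced just as much as the answer itself. A pass/fail reward also carries at most 1 bit for the whole trajectory. All names and numbers below are hypothetical.

```python
import math


def token_advantages(num_tokens, reward, baseline=0.0):
    """Outcome-only credit assignment: one scalar advantage copied to every token."""
    return [reward - baseline] * num_tokens


def reinforce_loss(token_logprobs, reward, baseline=0.0):
    """Policy-gradient surrogate loss: -sum_t A * log pi(token_t)."""
    advantages = token_advantages(len(token_logprobs), reward, baseline)
    return -sum(a * lp for a, lp in zip(advantages, token_logprobs))


def max_bits_per_token(num_tokens):
    """A binary success/failure reward carries at most 1 bit per trajectory."""
    return 1.0 / num_tokens


# Hypothetical successful trajectory; each step stands in for a span of tokens,
# and the second step is a wrong guess.
steps = ["read problem", "wrong guess", "backtrack", "correct answer"]
logprobs = [math.log(0.9), math.log(0.2), math.log(0.5), math.log(0.8)]

print(token_advantages(len(steps), reward=1.0, baseline=0.5))  # [0.5, 0.5, 0.5, 0.5]
print(reinforce_loss(logprobs, reward=1.0, baseline=0.5))
print(max_bits_per_token(1000))  # 0.001
```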

> “Humans don't use reinforcement learning, as I've said before. I think they do something different. Reinforcement learning is a lot worse than the average person thinks. Reinforcement learning is terrible. It just so happens that everything that we had before is much worse.”

So what do humans do instead?

> “The book I’m reading is a set of prompts for me to do synthetic data generation. It's by manipulating that information that you actually gain that knowledge. We have no equivalent of that with LLMs; they don't really do that.”

> “I'd love to see during pretraining some kind of a stage where the model thinks through the material and tries to reconcile it with what it already knows. There's no equivalent of any of this. This is all research.”

Why can’t we just add this training to LLMs today?

> “There are very subtle, hard to understand reasons why it's not trivial. If I just give synthetic generation of the model thinking about a book, you look at it and you're like, 'This looks great. Why can't I train on it?' You could try, but the model will actually get much worse if you continue trying.”

> “Say we have a chapter of a book and I ask an LLM to think about it. It will give you something that looks very reasonable. But if I ask it 10 times, you'll notice that all of them are the same.”

> “You're not getting the richness and the diversity and the entropy from these models as you would get from humans. How do you get synthetic data generation to work despite the collapse and while maintaining the entropy? It is a research problem.”

How do humans get around model collapse?

> “These analogies are surprisingly good. Humans collapse during the course of their lives. Children haven't overfit yet. They will say stuff that will shock you. Because they're not yet collapsed. But we [adults] are collapsed. We end up revisiting the same thoughts, we end up saying more and more of the same stuff, the learning rates go down, the collapse continues to get worse, and then everything deteriorates.”

In fact, there’s an interesting paper arguing that dreaming evolved to assist generalization and resist overfitting to daily learning; look up “The Overfitted Brain” by @erikphoel.

I asked Karpathy: Isn’t it interesting that humans learn best during a part of their lives (childhood) whose actual details they completely forget, that adults still learn really well but have terrible memory for the particulars of the things they read or watch, and that LLMs can memorize arbitrary details about text that no human could but are currently pretty bad at generalization?

> “[Fallible human memory] is a feature, not a bug, because it forces you to only learn the generalizable components. LLMs are distracted by all the memory that they have of the pre-trained documents. That's why when I talk about the cognitive core, I actually want to remove the memory. I'd love to have them have less memory so that they have to look things up and they only maintain the algorithms for thought, and the idea of an experiment, and all this cognitive glue for acting.”

The @karpathy interview

0:00:00 – AGI is still a decade away

0:30:33 – LLM cognitive deficits

0:40:53 – RL is terrible

0:50:26 – How do humans learn?

1:07:13 – AGI will blend into 2% GDP growth

1:18:24 – ASI

1:33:38 – Evolution of intelligence & culture

1:43:43 – Why self-driving took so long

1:57:08 – Future of education

Look up Dwarkesh Podcast on YouTube, Apple Podcasts, Spotify, etc. Enjoy!

Kamil Tomšík retweeted

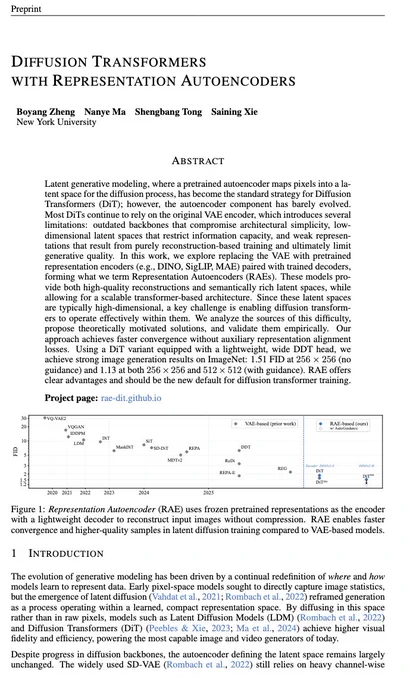

three years ago, DiT replaced the legacy U-Net with a transformer-based denoising backbone. we knew the bulky VAEs would be the next to go -- we just waited until we could do it right.

today, we introduce Representation Autoencoders (RAE).

>> Retire VAEs. Use RAEs. 👇(1/n)

Kamil Tomšík retweeted

The Supreme Court has thrown out Google's stay request. Starting October 22, developers will be legally entitled to steer US Google Play users to out-of-app payments without fees, scare screens, and friction - same as Apple App Store users in the US!

#Google just asked #SCOTUS to save it from the #EpicGames ruling.

* Application for Partial Stay of Permanent Injunction Pending Disposition of Petition for a Writ of Certiorari is available here.

#antitrust #独占禁止法

theverge.com/news/785456/goo…

Kamil Tomšík retweeted

Ripgrep is slow.

Nowgrep is fast, because I bypass the Windows slop and go straight to NTFS.

Here's Ripgrep vs. Nowgrep searching through 300k files on a drive with 2M+ files.

Nowgrep is written from scratch in C99.

No borrow checker.

Tokamak DI container v2 merged (+ tk.cli)

ziggit.dev/t/tokamak-di-cont…

Kamil Tomšík retweeted

Our new @OpenAI o3 and o4-mini models further confirm that scaling inference improves intelligence, and that scaling RL shifts up the whole compute vs. intelligence curve. There is still a lot of room to scale both of these further.

Kamil Tomšík retweeted

The cycle is complete: now you can turn a Docker container into an executable, so the user downloads an executable, and inside the executable there's a Docker image.

Kamil Tomšík retweeted

> How do you get around SQLite's write limits?

Listen, SQLite can handle thousands of writes and a ton more reads per second.

If you're hitting these limits, congratulations. You're probably very rich at this point and can hire someone, if not a team, to figure it out for you.

If you're far from these limits, and thinking about workarounds, you're probably doing something wrong.
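A common way to hit SQLite's write ceiling far too early is committing every INSERT in its own transaction, which forces a sync to disk per row. That is our assumption; the post does not say so. This sketch uses only Python's standard `sqlite3` module. It enables write-ahead logging and writes a whole batch inside one transaction. The `events` table and its schema are hypothetical. `PRAGMA journal_mode=WAL` and `PRAGMA synchronous=NORMAL` are standard SQLite settings, though whether `NORMAL` durability is acceptable depends on your application.

```python
import os
import sqlite3
import tempfile
import time


def bulk_insert(path, payloads):
    """Insert all payloads in a single transaction; return the table's row count."""
    conn = sqlite3.connect(path)
    try:
        conn.execute("PRAGMA journal_mode=WAL")    # readers and the writer don't block each other
        conn.execute("PRAGMA synchronous=NORMAL")  # fewer fsyncs than FULL in WAL mode
        conn.execute(
            "CREATE TABLE IF NOT EXISTS events (id INTEGER PRIMARY KEY, payload TEXT)"
        )
        with conn:  # one BEGIN ... COMMIT around the whole batch
            conn.executemany(
                "INSERT INTO events (payload) VALUES (?)", ((p,) for p in payloads)
            )
        return conn.execute("SELECT COUNT(*) FROM events").fetchone()[0]
    finally:
        conn.close()


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        db_path = os.path.join(tmp, "demo.db")
        n = 100_000
        start = time.perf_counter()
        count = bulk_insert(db_path, (f"event {i}" for i in range(n)))
        elapsed = time.perf_counter() - start
        print(f"{count} rows in {elapsed:.2f}s ({count / elapsed:,.0f} rows/s)")
```

The printed rate depends heavily on the disk and filesystem, so treat it as illustrative only.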