Doing ML | Web - erogol.com | Substack - erogol.substack.com

Joined October 2008

XTTS is still downloaded almost 5M times every month, 2.1M of them on HF alone.

That is more than many recently hyped models.

I hope people use it well, so that it was worth the burnout I’m still recovering from.

Coqui has been one of the most successful broke startups

It is already called Meta-Learning. Do we need a new name?

Introducing Nested Learning: A new ML paradigm for continual learning that views models as nested optimization problems to enhance long context processing. Our proof-of-concept model, Hope, shows improved performance in language modeling. Learn more: goo.gle/47LJrzI

@GoogleAI

Testing KDA from Kimi-Linear - the best transformer variant I’ve found that matches full attention performance. Using interleaved FA as in the official model. At the context length I use, it shows no benefit yet. Interesting!

Try it: github.com/erogol/BlaGPT

Just read the paper, but what if the simulation is based on a predictive system like a NN? Then the paper basically collapses.

Researchers have mathematically proven that the universe cannot be a computer simulation.

Their paper in the Journal of Holography Applications in Physics shows that reality operates on principles beyond computation.

Using Gödel’s incompleteness theorem, they argue that no algorithmic or computational system can fully describe the universe, because some truths, so-called "Gödelian truths", require non-algorithmic understanding, a form of reasoning that no computer or simulation can reproduce.

Since all simulations are inherently algorithmic, and the fundamental nature of reality is non-algorithmic, the researchers conclude that the universe cannot be, and could never be, a simulation.

Just a small detail before the buzz train: this is a hybrid model and still uses full attention.

The Kimi Linear tech report has dropped! 🚀

huggingface.co/moonshotai/Ki…

Kimi Linear: A novel architecture that outperforms full attention with faster speeds and better performance—ready to serve as a drop-in replacement for full attention, featuring our open-sourced KDA kernels! Kimi Linear offers up to a 75% reduction in KV cache usage and up to 6x decoding throughput at a 1M context length.

Key highlights:

🔹 Kimi Delta Attention: A hardware-efficient linear attention mechanism that refines the gated delta rule.

🔹 Kimi Linear Architecture: The first hybrid linear architecture to surpass pure full attention quality across the board.

🔹 Empirical Validation: Scaled, fair comparisons + open-sourced KDA kernels, vLLM integration, and checkpoints.

The future of agentic-oriented attention is here! 💡

like diffusion 🤔

🧠 New preprint:

How Do LLMs Use Their Depth?

We uncover a “Guess-then-Refine” mechanism across layers - early layers predict high-frequency tokens as guesses; later layers refine them as context builds

Paper - arxiv.org/abs/2510.18871

@neuranna @GopalaSpeech @berkeley_ai

erogol retweeted

🚀 Linearizing Video Diffusion Transformers (Wan 2.1) in less than 0.5K GPU hours 🚀

qualcomm-ai-research.github.…

TLDR: Balance the expressiveness of self-attention and efficiency of linear attention in a hybrid attention distillation framework.

arxiv.org/abs/2509.24899

erogol retweeted

Definitely massive! A revolution at the heart of image generation!

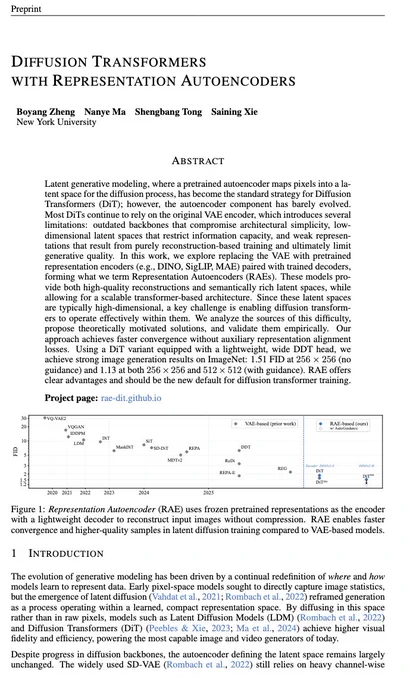

Representation Autoencoders (RAEs) are a simple yet powerful upgrade that replaces the traditional VAE with pretrained encoders—such as DINO, SigLIP, or MAE—paired with trained decoders.

Why it matters:

- Richer latent spaces – semantically meaningful, not just reconstructive

- Faster convergence – no extra alignment loss needed

- Higher fidelity – achieves an FID of 1.51 at 256×256 without guidance, and 1.13 at both 256×256 and 512×512 with guidance

By rethinking the foundation, RAEs make diffusion transformers simpler, stronger, and smarter.

XTTS is still one of the most prosodically diverse models. It's aging well.

arxiv.org/abs/2509.19928

Went from 2.2 to 2.5 in 5 days. Impressive.

Wan2.5: One Prompt, Perfect 'Vibe PSing'!

Wan 2.5-Preview is now live with image editing.

✨ Instruction-based Image Editing. Supports a wide range of image-editing tasks and reliably follows instructions.

✨ Visual Elements Consistency. Supports generation from single- or multiple-image references, maintaining consistency of visual elements such as faces, products, and styles.

My post on Xiaomi's MiMo-Audio

They show that speech follows the same scaling laws as text LLMs.

It is trained on 100M+ hours and shows emergent few-shot learning:

• Voice conversion

• Emotion transfer

• Speech translation

• Cross-modal reasoning

open.substack.com/pub/erogol…

Great release from Xiaomi.

- open 7B model

- better than Gemini on audio understanding

- better than GPT4o-audio on reasoning

Chinese labs are literally killing it

Time to go deeper and maybe a blog-post afterwards...

xiaomimimo.github.io/MiMo-Au…

erogol retweeted

1/7 We're launching Tongyi DeepResearch, the first fully open-source Web Agent to achieve performance on par with OpenAI's Deep Research with only 30B (Activated 3B) parameters! Tongyi DeepResearch agent demonstrates state-of-the-art results, scoring 32.9 on Humanity's Last Exam, 45.3 on BrowseComp, and 75.0 on the xbench-DeepSearch benchmark.