🇺🇸🇨🇦 ☁️ software @salesforce 💣🧹 minesweeperroyale.com 👨💻 github.com/goddtriffin 🎵

San Francisco, CA

Joined June 2012

You can even tweak the ChatGPT roast settings via the knobs and displays built into the costume 🤯 His toque/beanie had an integrated light bar, which he blocked temporarily with his hands to simulate a camera's flash 😂 Such a great idea. I heard he was up all night finishing it!

One of my best friends @rcpooley built the coolest Halloween costume!! It’s a functioning “Polaroid” camera, which takes an actual photo of you, and prints it out along with a ChatGPT roast of your costume on basically receipt paper! All in real time, and everything besides the LLM prompt+response done on-board.

Apparently a realtime multiplayer IO game like this is hard to implement given the lack of true UDP in the browser networking APIs :/ This looks super fun though, would love to know if it’s possible to get right. Might be worth building a demo on top of WebRTC.

rustbpe link can be found in his tweet. minbpe is implemented here (python): github.com/karpathy/minbpe

I've got to make time to read the rustbpe code 👀 Seems to be a Rust port of his minbpe: "Minimal, clean code for the (byte-level) Byte Pair Encoding (BPE) algorithm commonly used in LLM tokenization. The BPE algorithm is "byte-level" because it runs on UTF-8 encoded strings."
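For anyone who hasn't looked at BPE before, here is a minimal sketch of byte-level BPE training in Python. It is not the minbpe or rustbpe code; the function names (`get_pair_counts`, `merge`, `train_bpe`) and the toy input string are my own assumptions for illustration. It starts from the UTF-8 bytes of the text and repeatedly replaces the most frequent adjacent pair with a new token id.

```python
from collections import Counter


def get_pair_counts(ids):
    """Count how often each adjacent pair of token ids occurs."""
    return Counter(zip(ids, ids[1:]))


def merge(ids, pair, new_id):
    """Replace every non-overlapping occurrence of `pair` with `new_id`."""
    out, i = [], 0
    while i < len(ids):
        if i + 1 < len(ids) and (ids[i], ids[i + 1]) == pair:
            out.append(new_id)
            i += 2
        else:
            out.append(ids[i])
            i += 1
    return out


def train_bpe(text, num_merges):
    """Learn up to `num_merges` merges, starting from raw UTF-8 bytes."""
    ids = list(text.encode("utf-8"))  # "byte-level": ids 0..255 are bytes
    merges = {}
    for step in range(num_merges):
        counts = get_pair_counts(ids)
        if not counts:
            break  # nothing left to merge
        pair = max(counts, key=counts.get)  # most frequent adjacent pair
        new_id = 256 + step  # new tokens are numbered after the 256 bytes
        ids = merge(ids, pair, new_id)
        merges[pair] = new_id
    return ids, merges


# Toy example (hypothetical input, chosen only to keep the merges readable).
ids, merges = train_bpe("aaabdaaabac", 3)
print(ids)
print(merges)
```

Each merge shortens the sequence and grows the vocabulary by one token, which is the whole trade-off a tokenizer trainer tunes.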

Excited to release new repo: nanochat!

(it's among the most unhinged I've written).

Unlike my earlier similar repo nanoGPT which only covered pretraining, nanochat is a minimal, from scratch, full-stack training/inference pipeline of a simple ChatGPT clone in a single, dependency-minimal codebase. You boot up a cloud GPU box, run a single script and in as little as 4 hours later you can talk to your own LLM in a ChatGPT-like web UI.

It weighs ~8,000 lines of imo quite clean code to:

- Train the tokenizer using a new Rust implementation

- Pretrain a Transformer LLM on FineWeb, evaluate CORE score across a number of metrics

- Midtrain on user-assistant conversations from SmolTalk, multiple choice questions, tool use.

- SFT, evaluate the chat model on world knowledge multiple choice (ARC-E/C, MMLU), math (GSM8K), code (HumanEval)

- RL the model optionally on GSM8K with "GRPO"

- Run efficient inference on the model in an Engine with KV cache, simple prefill/decode, and tool use (Python interpreter in a lightweight sandbox); talk to it over the CLI or a ChatGPT-like WebUI.

- Write a single markdown report card, summarizing and gamifying the whole thing.

Even for as low as ~$100 in cost (~4 hours on an 8XH100 node), you can train a little ChatGPT clone that you can kind of talk to, and which can write stories/poems and answer simple questions. About 12 hours of training surpasses the GPT-2 CORE metric. As you further scale up towards ~$1000 (~41.6 hours of training), it quickly becomes a lot more coherent and can solve simple math/code problems and take multiple choice tests. E.g. a depth 30 model trained for 24 hours (this is about equal to FLOPs of GPT-3 Small 125M and 1/1000th of GPT-3) gets into 40s on MMLU and 70s on ARC-Easy, 20s on GSM8K, etc.
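The quoted dollar and hour figures can be cross-checked with a bit of arithmetic. This is only a sketch: the hourly node rate is not stated in the tweet, so the rates below are inferred from the rounded numbers and the variable names are my own.

```python
# Figures quoted in the tweet (all approximate).
speedrun_cost_usd = 100
speedrun_hours = 4
large_budget_usd = 1000
large_hours = 41.6
gpus_per_node = 8  # "8XH100 node"

# Implied node rate from each pair of figures (an inference, not a quoted price).
rate_from_speedrun = speedrun_cost_usd / speedrun_hours
rate_from_large_run = large_budget_usd / large_hours
per_gpu_rate = rate_from_large_run / gpus_per_node

print(f"speedrun implies  ~${rate_from_speedrun:.2f}/hour per node")
print(f"$1000 run implies ~${rate_from_large_run:.2f}/hour per node")
print(f"that is roughly   ~${per_gpu_rate:.2f}/hour per GPU")
```

The two pairs of numbers agree to within rounding, which suggests the "~$100" figure is a rounded-up version of the same node rate.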

My goal is to get the full "strong baseline" stack into one cohesive, minimal, readable, hackable, maximally forkable repo. nanochat will be the capstone project of LLM101n (which is still being developed). I think it also has potential to grow into a research harness, or a benchmark, similar to nanoGPT before it. It is by no means finished, tuned or optimized (actually I think there's likely quite a bit of low-hanging fruit), but I think it's at a place where the overall skeleton is ok enough that it can go up on GitHub where all the parts of it can be improved.

Link to repo and a detailed walkthrough of the nanochat speedrun is in the reply.

Here are the Claude Code commands that I stole from HumanLayer lol github.com/humanlayer/humanl…

Specifically: research_codebase_generic.md, create_plan_generic.md, implement_plan.md

Woah 👀 @hvent90 just taught me a month ago about the Research -> Plan -> Implement flow, based off of @humanlayer_dev's Claude Code commands, to help compress context windows between these distinct stages of work! So cool to see this develop into an established pattern!

I realize now that we can use the 0th-index core as the “main” core, and use barriers to wait for parallel work to complete across all the other cores.

It seems so obvious in hindsight.

To expand, before reading this I couldn’t wrap my head around “if we start off by using multiple threads (e.g. 8) from the very start of the program, how do we sync the results / who ‘owns’ the ‘single-threaded’ work?”

This was enlightening. I actually never understood “barriers” until I read this. I’m realizing now that my team’s product can be parallelized even further than it currently is… I can’t wait to propose what a refactor to use this “Multi-Core by Default” approach could win for us!
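Here is how I picture the pattern, as a minimal sketch using Python's `threading.Barrier`. It is not code from the article: the lane count, the `lane` function and the summing workload are assumptions for illustration, and CPython threads won't actually run this in parallel on multiple cores, so it only shows the synchronization shape. Every lane runs the same function from the start, lane 0 owns the "single-threaded" step, and barriers separate the phases.

```python
import threading

NUM_LANES = 4  # hypothetical lane count; my example above used 8
data = list(range(1, 101))

partial = [0] * NUM_LANES   # one slot per lane, so no lock is needed
seen = [None] * NUM_LANES   # what each lane observed after the final barrier
result = {}
barrier = threading.Barrier(NUM_LANES)


def lane(idx):
    # Phase 1: every lane works on its own slice of the input.
    partial[idx] = sum(data[idx::NUM_LANES])

    # Nobody continues until all partial sums have been written.
    barrier.wait()

    # Phase 2: the 0th lane acts as the "main" core for the serial step.
    if idx == 0:
        result["total"] = sum(partial)

    # The other lanes wait here until lane 0 has published the result.
    barrier.wait()

    # Phase 3: all lanes can safely read the shared result.
    seen[idx] = result["total"]


threads = [threading.Thread(target=lane, args=(i,)) for i in range(NUM_LANES)]
for t in threads:
    t.start()
for t in threads:
    t.join()

print(result["total"], seen)
```

The nice part is that there is no separate "spawn workers, then join" step around each parallel region; the barriers are the only coordination points.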

Wow this is amazing. If you skip to 1:10 in the video he was able to control the rolling ball in his game engine by moving the underlying surface in Blender 🤯 I really hope this can be upstreamed.

I think I've written the world's fastest Blender exporter. It exports full levels in 9 milliseconds.

Check out this video where I show live editing of levels from Blender, and it updates instantly in the game.

For some context:

Okay, since the beginning of development on this game project, I knew I didn't want to write a custom level editor for it and would rather spend my time programming the game. I decided to use Blender as the level editor, and export directly from Blender to my game engine's file format.

Blender only lets you write add-ons in Python, which is not just incredibly slow but also a terrible platform for this kind of systems programming work.

So, after having a Python exporter for the longest time, I decided enough was enough, and I programmed this exporter in C. Blender doesn't expose any C API or interface. So my only option was to download Blender's source code and insert my code directly into Blender.

And that's what I did :)

So this new C exporter is not only orders of magnitude faster than the Python version, but also way more robust.

And because I'm in C land, my hands are much freer to do things. There are two elements of secret sauce that make this exporter run so fast:

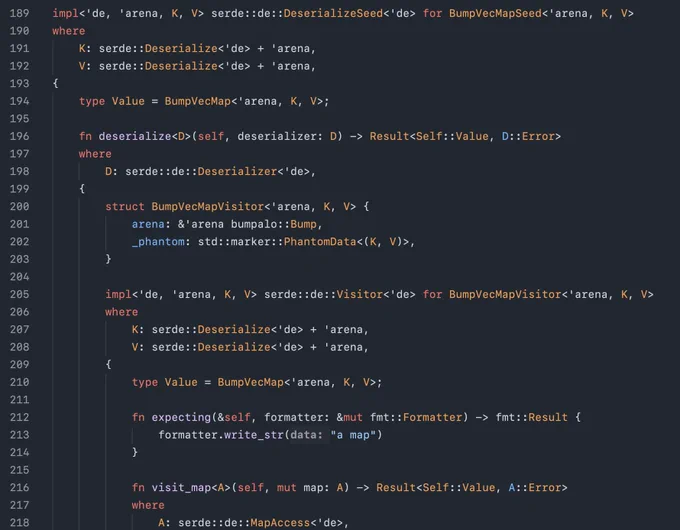

- A custom memory arena implementation.

- Lockless multithreading.
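For readers unfamiliar with memory arenas, the idea can be sketched as a bump allocator over one preallocated buffer. This is not the exporter's implementation (that one is written in C and is not shown in the thread); the `Arena` class, its method names and the 8-byte default alignment are assumptions, and Python is used only to show the shape of the technique.

```python
class Arena:
    """Bump allocator: hand out slices of one big buffer, free all at once."""

    def __init__(self, capacity):
        self._buf = bytearray(capacity)
        self._view = memoryview(self._buf)
        self._offset = 0

    def alloc(self, size, align=8):
        """Return a writable view of `size` bytes; `align` must be a power of two."""
        start = (self._offset + align - 1) & ~(align - 1)  # round up to alignment
        end = start + size
        if end > len(self._buf):
            raise MemoryError("arena exhausted")
        self._offset = end  # "bump" the pointer past this allocation
        return self._view[start:end]

    def reset(self):
        """Release every allocation at once by rewinding the offset."""
        self._offset = 0

    @property
    def used(self):
        return self._offset


arena = Arena(1024)
a = arena.alloc(10)  # occupies bytes 0..9
b = arena.alloc(4)   # starts at the next 8-byte boundary
a[:5] = b"hello"
print(arena.used)
arena.reset()
```

Allocation is just an offset bump and freeing is a single reset, which is a large part of why arenas tend to be fast for batch jobs like an export pass.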

This was a fun fact - I didn’t know this!

Fun fact, the original websocket drafts didn’t have these control frames in spec, which is why the socket.io protocol has its own ping/pong mechanism
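For reference, the control frames in the final WebSocket spec (RFC 6455) are tiny. Below is a minimal sketch of building an unmasked ping frame and the matching pong in Python. The helper names `control_frame` and `pong_for` are my own, and the sketch skips client-to-server masking, so it only illustrates the server-side frame layout.

```python
OPCODE_PING = 0x9
OPCODE_PONG = 0xA
FIN = 0x80  # control frames must not be fragmented, so FIN is always set


def control_frame(opcode, payload=b""):
    """Build an unmasked (server-to-client) control frame."""
    if len(payload) > 125:
        raise ValueError("control frame payloads are limited to 125 bytes")
    # Byte 0: FIN bit plus opcode. Byte 1: mask bit (0) plus payload length.
    return bytes([FIN | opcode, len(payload)]) + payload


def pong_for(ping_frame):
    """Answer a ping by echoing its payload back in a pong frame."""
    if ping_frame[0] & 0x0F != OPCODE_PING:
        raise ValueError("not a ping frame")
    length = ping_frame[1] & 0x7F
    return control_frame(OPCODE_PONG, ping_frame[2:2 + length])


ping = control_frame(OPCODE_PING, b"hi")
pong = pong_for(ping)
print(ping.hex(), pong.hex())
```

socket.io's own ping/pong, by contrast, travels as ordinary application-level messages rather than as protocol control frames.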