🇺🇸🇨🇦 ☁️ software @salesforce 💣🧹 minesweeperroyale.com 👨💻 github.com/goddtriffin 🎵

San Francisco, CA

Joined June 2012

- Tweets 254

- Following 105

- Followers 62

- Likes 57,047

This is cool! And I didn’t know that this wasn’t in the original WebSocket spec!

I implemented my own custom PING/PONG packets for my game, despite the preexisting ones, because I needed certain data refreshed on both the server and the client at a fixed interval: stuff like num_players_online, num_games_active, client_time_unix_ms, etc. I also track how long it takes a packet to reach the client and come back.

All of this is used to update the client UI and to analyze player traffic so I can make the system better.
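Here is a minimal sketch of what an application-level ping/pong like this could look like. It assumes the server stamps each ping with its own clock, and the client echoes that stamp back along with its own `client_time_unix_ms`.

Several pieces are illustrative assumptions, not the game's actual protocol:
- the `AppPing`/`AppPong` names;
- the 16-byte little-endian layout;
- the clock-offset estimate, which assumes the client stamped its reply halfway through the round trip.

```rust
/// Hypothetical application-level ping, sent by the server at a fixed interval.
#[derive(Debug, Clone, Copy, PartialEq)]
pub struct AppPing {
    pub num_players_online: u32,
    pub num_games_active: u32,
    /// Server clock when the ping was sent (Unix epoch, milliseconds).
    pub server_time_unix_ms: u64,
}

/// Hypothetical reply: the client echoes the server's stamp and adds its own clock.
#[derive(Debug, Clone, Copy, PartialEq)]
pub struct AppPong {
    pub echoed_server_time_unix_ms: u64,
    pub client_time_unix_ms: u64,
}

fn read_u32(b: &[u8]) -> u32 {
    let mut a = [0u8; 4];
    a.copy_from_slice(b);
    u32::from_le_bytes(a)
}

fn read_u64(b: &[u8]) -> u64 {
    let mut a = [0u8; 8];
    a.copy_from_slice(b);
    u64::from_le_bytes(a)
}

impl AppPing {
    /// Assumed wire layout: u32, u32, u64, all little-endian (16 bytes total).
    pub fn to_bytes(&self) -> [u8; 16] {
        let mut buf = [0u8; 16];
        buf[0..4].copy_from_slice(&self.num_players_online.to_le_bytes());
        buf[4..8].copy_from_slice(&self.num_games_active.to_le_bytes());
        buf[8..16].copy_from_slice(&self.server_time_unix_ms.to_le_bytes());
        buf
    }

    pub fn from_bytes(buf: &[u8; 16]) -> Self {
        AppPing {
            num_players_online: read_u32(&buf[0..4]),
            num_games_active: read_u32(&buf[4..8]),
            server_time_unix_ms: read_u64(&buf[8..16]),
        }
    }
}

/// Round-trip time measured on the server: arrival time minus the stamp it sent.
pub fn round_trip_ms(pong: &AppPong, server_now_unix_ms: u64) -> u64 {
    server_now_unix_ms.saturating_sub(pong.echoed_server_time_unix_ms)
}

/// Estimated client-minus-server clock offset, assuming the client stamped
/// its reply exactly halfway through the round trip.
pub fn clock_offset_ms(pong: &AppPong, server_now_unix_ms: u64) -> i64 {
    let midpoint = pong.echoed_server_time_unix_ms + round_trip_ms(pong, server_now_unix_ms) / 2;
    pong.client_time_unix_ms as i64 - midpoint as i64
}
```

For example, if a pong echoing a stamp of 1000 ms arrives at 1080 ms carrying a client time of 1100 ms, the round trip is 80 ms and the estimated offset is +60 ms.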

WebSocket has its own keep-alive mechanism, just like TCP: the PING and PONG frames.

Their main purposes are to keep the connection alive and to check whether the other peer is still connected. I think you can also send data inline as part of the PING or PONG (never tried it though).
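For reference, RFC 6455 does allow application data in control frames. A PING may carry up to 125 bytes, and the PONG sent in reply must echo the same data.

Below is a minimal sketch of building those frames. It assumes the server-to-client direction, where frames are sent unmasked. Client-to-server frames must be masked, which this sketch does not handle. The function names are hypothetical.

```rust
/// Control-frame opcodes from RFC 6455, section 5.5.
pub const OPCODE_PING: u8 = 0x9;
pub const OPCODE_PONG: u8 = 0xA;

/// Build an unmasked (server-to-client) control frame carrying `payload`.
/// Control frames must set FIN and carry at most 125 bytes of payload.
pub fn control_frame(opcode: u8, payload: &[u8]) -> Result<Vec<u8>, String> {
    if payload.len() > 125 {
        return Err(format!("control frame payload too long: {} bytes", payload.len()));
    }
    let mut frame = Vec::with_capacity(2 + payload.len());
    frame.push(0x80 | opcode); // FIN bit set, RSV bits clear, opcode in the low nibble
    frame.push(payload.len() as u8); // MASK bit clear, 7-bit payload length
    frame.extend_from_slice(payload);
    Ok(frame)
}

/// Answer a received PING by echoing its application data in a PONG.
pub fn pong_for(ping_payload: &[u8]) -> Result<Vec<u8>, String> {
    control_frame(OPCODE_PONG, ping_payload)
}
```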

Another important point is that WebSockets can pass through intermediaries (proxies), so a single WebSocket connection may span multiple TCP connections. As a result, TCP keep-alive by itself isn't enough. A PING is a WebSocket frame, so it passes through every one of those TCP connections via the intermediaries, refreshing each of them.

Having Wireshark decrypt TLS so we can see what's going on is always handy, thanks to Chrome letting me write the TLS keys to disk (via the SSLKEYLOGFILE environment variable).

Great breakdown of what it would take to build and run a massively-multiplayer game world that can host 100,000+ players simultaneously on a single node.

The fact that the dollar cost of network egress is the bottleneck is so sad 😢 What games could exist today if that limitation were removed?

@SebAaltonen, I’m the only person I know of who has built an MMO server that supports 100k+ CCU on a single node. It costs me only $2k a year to host.

If you parallelize things and have O(N/P) worst-case algorithms in place, compute is not really a major bottleneck to worry about. Network egress bandwidth is.
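Here is a minimal sketch of the O(N/P) idea. It assumes N players are split into P contiguous chunks, and each chunk is updated on its own scoped thread (`std::thread::scope`). That way, no thread touches more than ⌈N/P⌉ players. The `Player` fields and the movement update are placeholders, not the game's real state.

```rust
use std::thread;

/// Hypothetical per-player state; the fields are placeholders.
#[derive(Debug, Clone, Copy, Default)]
pub struct Player {
    pub x: i32,
    pub y: i32,
    pub vx: i32,
    pub vy: i32,
}

/// Advance every player by one tick, splitting N players across `threads`
/// workers so each worker updates at most ceil(N / threads) players.
pub fn tick_parallel(players: &mut [Player], threads: usize) {
    let threads = threads.max(1);
    let chunk = ((players.len() + threads - 1) / threads).max(1);
    // Scoped threads may borrow `players`; all of them are joined before
    // `scope` returns.
    thread::scope(|s| {
        for part in players.chunks_mut(chunk) {
            s.spawn(move || {
                for p in part.iter_mut() {
                    p.x += p.vx;
                    p.y += p.vy;
                }
            });
        }
    });
}
```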

You must design netcode that is guaranteed never to cause network congestion, no matter what users do. Otherwise, server lag and disconnects will affect all users, causing ragequits.

This means TCP is out of the question. The simple act of users disconnecting leads to head-of-line blocking and surges in network egress bandwidth. If you’re already at max load, that can trigger a positive feedback loop of even more users disconnecting. Targeting web browsers is therefore tricky: you really want to build on top of raw UDP, but browsers don’t support it, so you must waste bits on QUIC/WebTransport.

You must also identify the maximum amount of packed dynamic data you can send to each client per tick, as well as what your target tick rate must be.

The formula is simple:

Bandwidth = packet data per player per tick × ticks per second × number of concurrent players supported.

To support a very large number of players affordably, especially on a single node, you must optimize the netcode design heavily with a slow tick rate and a limited packet size. RuneScape was designed with 600 ms ticks and WoW with 400 ms ticks for this very reason.

First, settle on a target bandwidth for your machine that you’re willing to pay for and can prove you can handle (I chose 1 Gbps). Then pick a tick rate (I chose 600 ms/tick) and a CCU target (I chose 131,072). Once those are fixed, simple division gives you your packet size (572 bytes for me). This must include all header bytes from each protocol layer (except Ethernet).
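The division can be checked with a small integer-only sketch of the bandwidth formula. It assumes 1 Gbps means 10^9 bits per second (decimal), which is what reproduces the 572-byte figure. The function names are hypothetical.

```rust
/// Per-player, per-tick byte budget, from
/// Bandwidth = bytes per player per tick × ticks per second × CCU,
/// solved for the first term with integer math (rounding down).
pub fn packet_budget_bytes(bandwidth_bits_per_sec: u64, tick_ms: u64, ccu: u64) -> u64 {
    // Bits the whole server may send during one tick.
    let bits_per_tick = bandwidth_bits_per_sec * tick_ms / 1000;
    // Split across all players, then convert bits to whole bytes.
    bits_per_tick / ccu / 8
}

/// Forward direction of the same formula, in bits per second.
pub fn required_bandwidth_bits_per_sec(bytes_per_player_per_tick: u64, tick_ms: u64, ccu: u64) -> u64 {
    bytes_per_player_per_tick * 8 * ccu * 1000 / tick_ms
}
```

With 1 Gbps, 600 ms ticks, and 131,072 players, `packet_budget_bytes` yields 572. Going to 573 bytes would need about 1.0014 Gbps, so 572 is the largest whole-byte budget that fits.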

From then on, your job is to decide what data to pack into each fixed-size buffer per tick per player, and to build a fully playable game within that budget. The protocol I use is a simple unreliable stream of the current localized dynamic state of the world around the player. Each packet is a pure snapshot with no temporal deltas, so it never depends on other packets, and packet drops are fine. Bitpack as tightly as you can, and use only integers, not floats.
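Below is a minimal sketch of an MSB-first bit writer, the kind of tool that makes tight integer bitpacking practical. The `BitWriter` type and the `read_bits` helper are hypothetical. The point is that each field costs exactly the number of bits you give it. A write that would overflow the fixed-size buffer is rejected instead of growing the packet.

```rust
/// Hypothetical MSB-first bit writer over a fixed-size buffer.
/// Only unsigned integers are written; there is no float path.
pub struct BitWriter<'a> {
    buf: &'a mut [u8],
    bit_pos: usize,
}

impl<'a> BitWriter<'a> {
    pub fn new(buf: &'a mut [u8]) -> Self {
        buf.fill(0); // start from a clean buffer so we only need to set 1-bits
        BitWriter { buf, bit_pos: 0 }
    }

    /// Write the low `bits` bits of `value`. Returns false and writes nothing
    /// if `value` doesn't fit in `bits` or the buffer has no room left.
    pub fn write(&mut self, value: u32, bits: u32) -> bool {
        if bits == 0 || bits > 32 {
            return false;
        }
        if bits < 32 && value >> bits != 0 {
            return false;
        }
        if self.bit_pos + bits as usize > self.buf.len() * 8 {
            return false;
        }
        for i in (0..bits).rev() {
            if (value >> i) & 1 == 1 {
                self.buf[self.bit_pos / 8] |= 0x80u8 >> (self.bit_pos % 8);
            }
            self.bit_pos += 1;
        }
        true
    }

    pub fn bits_written(&self) -> usize {
        self.bit_pos
    }
}

/// Read `bits` bits starting at `bit_pos` (MSB-first), mirroring `BitWriter`.
pub fn read_bits(buf: &[u8], bit_pos: usize, bits: u32) -> u32 {
    let mut v = 0u32;
    for i in 0..bits as usize {
        let p = bit_pos + i;
        let bit = (buf[p / 8] >> (7 - p % 8)) & 1;
        v = (v << 1) | bit as u32;
    }
    v
}
```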

You also need an interest-management strategy to network-cull players when too many are in one location. Inevitably, this means some players will be invisible to others.
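One simple interest-management strategy keeps only the nearest players within range and culls the rest. The sketch below assumes integer 2D positions, a fixed view radius, and a hard cap on how many players fit in the packet. `visible_players` is a hypothetical name, and nearest-first is just one possible priority rule.

```rust
/// Hypothetical interest-management pass. `others` holds (player_id, x, y).
/// Returns the ids of at most `max_visible` players within `radius` of
/// `viewer`, nearest first; everyone else is culled from this viewer's packet.
/// Assumes coordinates stay within ±1e9 so squared distances fit in i64.
pub fn visible_players(
    viewer: (i32, i32),
    others: &[(u32, i32, i32)],
    radius: i32,
    max_visible: usize,
) -> Vec<u32> {
    let r2 = (radius as i64) * (radius as i64);
    // Integer squared distances avoid both floats and square roots.
    let mut in_range: Vec<(i64, u32)> = others
        .iter()
        .filter_map(|&(id, x, y)| {
            let dx = x as i64 - viewer.0 as i64;
            let dy = y as i64 - viewer.1 as i64;
            let d2 = dx * dx + dy * dy;
            if d2 <= r2 { Some((d2, id)) } else { None }
        })
        .collect();
    // Sort by distance, breaking ties by id so the result is deterministic.
    in_range.sort_unstable();
    in_range.truncate(max_visible);
    in_range.into_iter().map(|(_, id)| id).collect()
}
```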

Lastly, I highly recommend getting a dedicated bare-metal server from OVH. They are the only hosting provider that won’t charge you an arm and a leg for network egress bandwidth. You get guaranteed, unmetered bandwidth, meaning you don’t pay a cent extra even if you use your full bandwidth capacity all month. I pay $172 a month; the same server at full load hosted on AWS would cost me ~$18k a month.

Ideas like this keep me up at night 😭 I’ve been dreaming about this every day for years now haha

Building my massively-multiplayer Minesweeper battle royale game in Rust with Cursor.

Cooked a ribeye cap steak w/ pesto banza.

The perfect Saturday.

Keep an eye out for my Minesweeper Royale technical blog post when it drops, where I’ll deep dive into all the architectural decisions I had to make while implementing a massively-multiplayer Minesweeper Battle Royale game from scratch in Rust 😉

This was one of the better architectural ideas I implemented in Minesweeper Royale! It’s non-obvious because most servers people build are stateless, so it doesn’t matter if you insta-kill a pod. Once you have stateful (e.g. game) servers, you need to keep connections alive until each user closes their own connection. Feel sooo validated to see Cloudflare implement this in Oxy/FL2!
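Here is a minimal sketch of connection draining for a stateful server, using only the standard library. Once draining starts, new connections are refused, and shutdown waits until the remaining users close their own connections. The `ConnTracker` type and the timeout parameter are assumptions for illustration. This is not how Oxy/FL2 implements it.

```rust
use std::sync::{Arc, Condvar, Mutex};
use std::time::Duration;

#[derive(Default)]
struct TrackerState {
    active: usize,
    draining: bool,
}

/// Hypothetical connection tracker shared by all connection handlers.
#[derive(Clone, Default)]
pub struct ConnTracker {
    inner: Arc<(Mutex<TrackerState>, Condvar)>,
}

impl ConnTracker {
    /// Register a new connection; refused once draining has started.
    pub fn try_open(&self) -> bool {
        let (lock, _) = &*self.inner;
        let mut st = lock.lock().unwrap();
        if st.draining {
            return false;
        }
        st.active += 1;
        true
    }

    /// Called when a user closes their connection themselves.
    pub fn close(&self) {
        let (lock, cvar) = &*self.inner;
        let mut st = lock.lock().unwrap();
        st.active = st.active.saturating_sub(1);
        if st.active == 0 {
            cvar.notify_all();
        }
    }

    /// Start draining, then block until every connection is closed or
    /// `timeout` elapses. Returns how many connections are still open.
    pub fn drain(&self, timeout: Duration) -> usize {
        let (lock, cvar) = &*self.inner;
        let mut st = lock.lock().unwrap();
        st.draining = true;
        // wait_timeout_while re-checks the condition, so spurious wakeups are safe.
        let (st, _timed_out) = cvar.wait_timeout_while(st, timeout, |s| s.active > 0).unwrap();
        st.active
    }
}
```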

Here's why we’ve replaced the original NGINX-based core system in Cloudflare with a new modular Rust-based proxy. cfl.re/4h1Jhst #BirthdayWeek

Great write-up! Definitely some good learnings that I’m going to apply to my own WebGPU renderer and fallback WebGL2 renderer in Minesweeper Royale.

we just upgraded Figma’s renderer to WebGPU ⚡️

WebGPU unlocks new rendering techniques and optimizations that will make Figma faster and enable us to ship richer visuals and smoother interactions.

so proud of the team for making it happen! 👏 see how we did it 👉 figma.com/blog/figma-renderi…

This is still the same way I build all of my web apps today. No SPAs - just pure HTML/CSS/JS.

HTML templates + SCSS for reusable HTML/CSS “components”, and TS for strong+strict typing.

My web apps load 100x faster than yours.

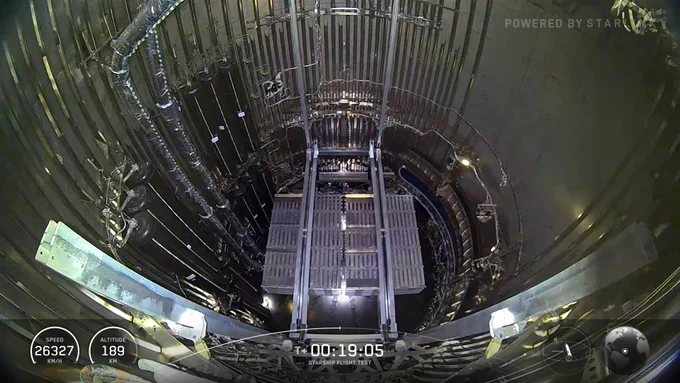

Absolutely incredible

View of Starship landing burn and splashdown on Flight 10, made possible by SpaceX’s recovery team. Starship made it through reentry with intentionally missing tiles, completed maneuvers to intentionally stress its flaps, had visible damage to its aft skirt and flaps, and still executed a flip and landing burn that placed it approximately 3 meters from its targeted splashdown point.

👏👏👏👏👏

Landing looked almost like a whale breaching in reverse!

Congratulations to everyone at @spaceX who made this happen.

She's very open to feedback! Try it out and let her know what you think! Lots of features planned... 🤓