I do Machine Learning at @weaviate_io and write about it on the Internet | Google Developer Expert (Kaggle)

Latent space

Joined August 2022


Pinned Tweet

Ok, I’ll bite: What’s ColPali?

(And why should anyone working with RAG over PDFs care?)

ColPali makes information retrieval from complex document types - like PDFs - easier.

Information retrieval from PDFs is hard because they contain various components:

Text, images, tables, different headings, captions, complex layouts, etc.

Because of this, parsing PDFs currently requires multiple complex steps:

1. OCR

2. Layout recognition

3. Figure captioning

4. Chunking

5. Embedding

Not only are these steps complex and time-consuming, but they are also prone to error.

This is where ColPali comes into play.

But what is ColPali?

ColPali combines:

• Col -> the contextualized late interaction mechanism introduced in ColBERT

• Pali -> with a Vision Language Model (VLM), in this case, PaliGemma

And how does it work?

During indexing, the complex PDF parsing steps are replaced by using "screenshots" of the PDF pages directly.

These screenshots are then embedded with the VLM. At inference time, the query is embedded and matched with a late interaction mechanism to retrieve the most similar document pages.
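To make the late interaction step concrete, here is a minimal sketch of MaxSim scoring as introduced in ColBERT. It is not ColPali's actual code: the function names are made up for illustration, the vectors are plain Python lists, and it assumes every query token and every page patch has already been embedded into the same space.

```python
from typing import List, Sequence

Vector = Sequence[float]


def dot(a: Vector, b: Vector) -> float:
    """Dot product of two equal-length vectors."""
    return sum(x * y for x, y in zip(a, b))


def maxsim_score(query_vecs: Sequence[Vector], page_vecs: Sequence[Vector]) -> float:
    """Late-interaction score: for each query token, take the similarity of its
    best-matching page patch, then sum those maxima over all query tokens."""
    return sum(max(dot(q, p) for p in page_vecs) for q in query_vecs)


def rank_pages(query_vecs: Sequence[Vector], pages: Sequence[Sequence[Vector]]) -> List[int]:
    """Return page indices sorted from most to least similar to the query."""
    scores = [maxsim_score(query_vecs, page) for page in pages]
    return sorted(range(len(pages)), key=lambda i: scores[i], reverse=True)


# Toy example (hypothetical 2-dimensional embeddings).
query = [[1.0, 0.0], [0.0, 1.0]]
pages = [
    [[1.0, 0.0]],               # page 0 matches only the first query token
    [[1.0, 0.0], [0.0, 1.0]],   # page 1 matches both query tokens
]
print(rank_pages(query, pages))
```

The key difference to single-vector retrieval is that each page keeps one vector per patch, so a query token can match whichever part of the page is most relevant.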

Here are some more resources to learn more about ColPali:

🎓 ColPali paper: arxiv.org/abs/2407.01449

🤗 Blog post by the author of ColPali: huggingface.co/blog/manu/col…

💻 ColPali Weaviate notebook: github.com/weaviate/recipes/…

Spent the morning chit-chatting with @marlene_zw about all things ML/AI and Python. 💚

Marlene has the best content on MCP in the AI space. Don't miss out!

Final day in Frankfurt! Got to see the one and only @helloiamleonie ♥️ Pumpkin spice lattes and dinosaurs were our vibe today 🍂🦕✨

If you aren’t following her already you are missing out! Leonie’s content is some of my fave in ML/AI!!!

Stuck on text-embedding-ada-002?

Migration can be scary. Here’s the zero-downtime way.

By now, we are all aware that newer embedding models outperform their older counterparts.

Take text-embedding-ada-002 vs text-embedding-3-small for example:

text-embedding-ada-002

• Cost: $0.10 per 1M tokens

• Performance: Bottom 50% on MTEB

• Speed: Slow

text-embedding-3-small

• Cost: $0.02 per 1M tokens (5x cheaper)

• Performance: Top 15% on MTEB

• Speed: Medium
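As a quick sanity check on the price gap, here is a small sketch that turns the two per-token prices above into a re-embedding bill. The corpus size of 250M tokens is an assumption for illustration only, and the function name is hypothetical.

```python
def embedding_cost(num_tokens: int, price_per_million_usd: float) -> float:
    """Cost in USD of embedding `num_tokens` tokens at a per-1M-token price."""
    return num_tokens / 1_000_000 * price_per_million_usd


# Prices as quoted above; the corpus size is an assumed example value.
CORPUS_TOKENS = 250_000_000
ada_cost = embedding_cost(CORPUS_TOKENS, 0.10)    # text-embedding-ada-002
small_cost = embedding_cost(CORPUS_TOKENS, 0.02)  # text-embedding-3-small

print(f"ada-002: ${ada_cost:.2f}, 3-small: ${small_cost:.2f}")
print(f"ratio: {ada_cost / small_cost:.1f}x")
```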

Or compare with open-source options like BGE-M3 or E5-Mistral, which can perform even better.

So why aren't teams upgrading?

Because migration feels risky.

Here are two proven migration strategies:

Method A: Collection aliases (Recommended)

Create a new collection with your new embedding model. Once it's ready, switch an alias to point to it.

• Zero-downtime switch: Instant traffic redirect

• Instant rollback: Switch back immediately if needed

• Clean architecture: Complete isolation between old and new

• No storage overhead: Delete old collection when done
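The alias pattern can be sketched without any database at all. The class below is a toy, in-memory stand-in and NOT the Weaviate client API; `AliasRegistry`, `set_alias`, and the collection names are all hypothetical. It only shows why the switch and the rollback are each a single pointer update.

```python
from typing import Dict, Optional


class AliasRegistry:
    """Toy stand-in for a vector database with collection aliases (hypothetical)."""

    def __init__(self) -> None:
        self._collections: Dict[str, dict] = {}
        self._aliases: Dict[str, str] = {}

    def create_collection(self, name: str, embedding_model: str) -> None:
        self._collections[name] = {"model": embedding_model, "objects": []}

    def set_alias(self, alias: str, collection: str) -> Optional[str]:
        """Point `alias` at `collection`; return the previous target, if any."""
        if collection not in self._collections:
            raise KeyError(f"unknown collection: {collection}")
        previous = self._aliases.get(alias)
        self._aliases[alias] = collection
        return previous

    def resolve(self, alias: str) -> dict:
        """The application only ever queries through the alias."""
        return self._collections[self._aliases[alias]]


registry = AliasRegistry()
registry.create_collection("docs_ada_002", "text-embedding-ada-002")
registry.set_alias("docs", "docs_ada_002")

# Build the new collection on the side, then switch traffic in one step.
registry.create_collection("docs_v3_small", "text-embedding-3-small")
old_target = registry.set_alias("docs", "docs_v3_small")

# Rollback is the same operation in reverse.
registry.set_alias("docs", old_target)
```

Because the application code never names a concrete collection, neither the switch nor the rollback needs a deploy.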

Method B: Adding a new vector

Add a new vector to your existing collection.

• Good for experimentation: Compare models in one dataset

• Permanent storage increase: Old vectors can't be deleted

• No simple rollback: Requires app-level query changes
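Here is a rough sketch of what Method B implies for the application, using plain dictionaries instead of a real database. The vector names `ada_002` and `v3_small` and the `search` helper are assumptions for illustration; the point is that every query has to say which vector it targets.

```python
import math
from typing import Dict, List, Sequence


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity; returns 0.0 if either vector has zero length."""
    num = sum(x * y for x, y in zip(a, b))
    den = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return num / den if den else 0.0


def search(objects: List[Dict], query_vector: Sequence[float],
           target_vector: str, k: int = 1) -> List[str]:
    """Brute-force nearest neighbours over one of the named vectors."""
    scored = [
        (cosine(query_vector, obj["vectors"][target_vector]), obj["id"])
        for obj in objects
        if target_vector in obj["vectors"]
    ]
    scored.sort(reverse=True)
    return [obj_id for _, obj_id in scored[:k]]


# Each object carries both the old and the new embedding side by side.
objects = [
    {"id": "a", "vectors": {"ada_002": [1.0, 0.0], "v3_small": [0.0, 1.0]}},
    {"id": "b", "vectors": {"ada_002": [0.0, 1.0], "v3_small": [1.0, 0.0]}},
]

print(search(objects, [1.0, 0.0], target_vector="ada_002"))
print(search(objects, [1.0, 0.0], target_vector="v3_small"))
```

Switching models, or rolling back, means changing `target_vector` (and the query embedding model) in application code, which is the "app-level query change" mentioned above.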

Best practices:

• Test the new embedding model with a subset of data first.

• Evaluate and compare search and end-to-end performance between old and new models.

• Keep the old setup available until you're confident.

• Factor in pricing and storage cost differences.

• Track which model versions and configurations you're using.
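One simple way to compare search quality between the old and new model is recall@k on a small labeled query set. The sketch below assumes you already have, per query, the ranked result IDs from each setup and a set of known relevant IDs; all names and data are made up for illustration.

```python
from typing import Dict, List, Set


def recall_at_k(retrieved: List[str], relevant: Set[str], k: int) -> float:
    """Fraction of the relevant documents that appear in the top-k results."""
    if not relevant:
        return 0.0
    hits = len(set(retrieved[:k]) & relevant)
    return hits / len(relevant)


def mean_recall_at_k(runs: Dict[str, List[str]],
                     qrels: Dict[str, Set[str]], k: int) -> float:
    """Average recall@k over all labeled queries; missing queries score 0."""
    return sum(recall_at_k(runs.get(q, []), rel, k) for q, rel in qrels.items()) / len(qrels)


# Hypothetical relevance labels and ranked results from both setups.
qrels = {"q1": {"d1"}, "q2": {"d2", "d3"}}
old_run = {"q1": ["d9", "d1"], "q2": ["d2", "d8"]}
new_run = {"q1": ["d1", "d9"], "q2": ["d2", "d3"]}

print("old:", mean_recall_at_k(old_run, qrels, k=2))
print("new:", mean_recall_at_k(new_run, qrels, k=2))
```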

Learn more: using your newly embedded

Leonie retweeted

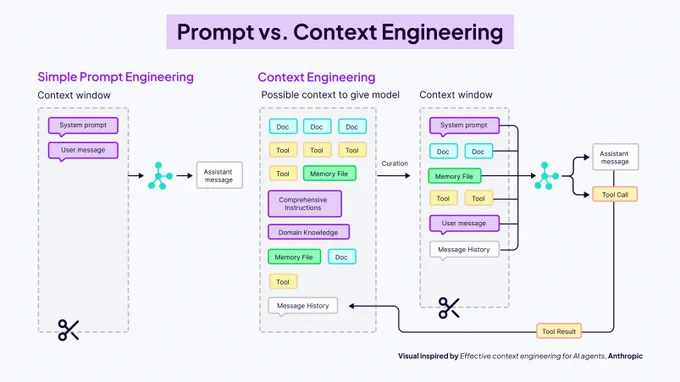

𝗣𝗿𝗼𝗺𝗽𝘁 𝗲𝗻𝗴𝗶𝗻𝗲𝗲𝗿𝗶𝗻𝗴 𝗶𝘀 𝗱𝗲𝗮𝗱.

I know that sounds dramatic, but hear me out.

Every developer building with LLMs eventually hits the same wall. The model is smart, sure. But it can't access your docs. It forgets yesterday's conversation. And it makes stuff up when it's not sure about something.

You can't prompt your way out of these problems.

The real skill now? 𝗖𝗼𝗻𝘁𝗲𝘅𝘁 𝗲𝗻𝗴𝗶𝗻𝗲𝗲𝗿𝗶𝗻𝗴 - building the system 𝘢𝘳𝘰𝘶𝘯𝘥 the model that feeds it the right information at the right time.

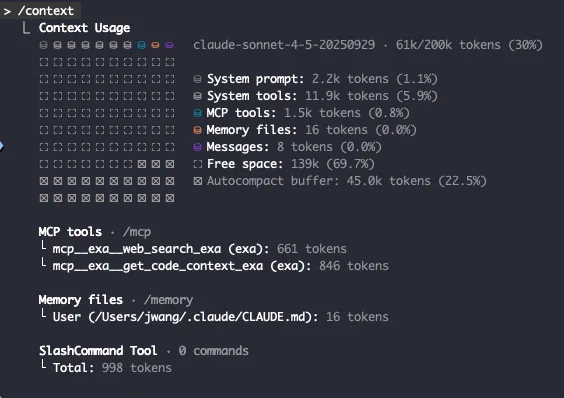

Think of it like this: the context window is basically the model's working memory. It's a whiteboard. Once it's full, old stuff gets erased to make room for new stuff. So you need architecture that manages what goes on that whiteboard and when.
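The whiteboard analogy maps onto a very small data structure. This is a sketch only: `ContextWindow` is a hypothetical name, tokens are approximated by whitespace-separated words, and real systems usually do something smarter than dropping the oldest message (summarizing it, for example).

```python
from collections import deque
from typing import Deque, List


class ContextWindow:
    """Fixed-budget message buffer that erases the oldest entries first."""

    def __init__(self, max_tokens: int) -> None:
        self.max_tokens = max_tokens
        self.messages: Deque[str] = deque()
        self.used = 0

    @staticmethod
    def count_tokens(text: str) -> int:
        # Assumption: one whitespace-separated word counts as one token.
        return len(text.split())

    def add(self, text: str) -> List[str]:
        """Append a message and return whatever had to be erased to fit it."""
        evicted: List[str] = []
        self.messages.append(text)
        self.used += self.count_tokens(text)
        # Always keep at least the newest message, even if it is over budget.
        while self.used > self.max_tokens and len(self.messages) > 1:
            old = self.messages.popleft()
            self.used -= self.count_tokens(old)
            evicted.append(old)
        return evicted


window = ContextWindow(max_tokens=5)
window.add("what is colpali")
window.add("a retriever")
print(window.add("and colbert"))  # the oldest message no longer fits
```

What gets evicted, and where it goes afterwards, is exactly the kind of decision the surrounding architecture has to make.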

We just dropped a full guide on context engineering (free, obviously). It covers everything from chunking strategies to multi-agent systems to the new Model Context Protocol.

And includes all these core components for building AI apps:

• Agents - the brain that decides what to do when

• Query Augmentation - turning messy requests into something useful

• Retrieval - connecting the model to your actual data

• Memory - so it doesn't forget everything between sessions

• Tools - letting it interact with real systems

• Prompting Techniques - yeah, this still matters, just not in isolation

If you're building anything serious with AI, this is the shift you need to understand.

Get your free copy here: weaviate.io/ebooks/the-conte…

2023: Prompt engineering is a critical skill

2025: Context engineering is a critical strategy

My colleagues just dropped a 41-page guide on context engineering.

It covers:

• Prompting techniques: Choosing the right words still matters

• Query Augmentation & Retrieval: Finding the relevant pieces of information for the user query

• Tools: Letting the agent interact with the outside world

• Memory: Remembering past interactions

Download it for free: weaviate.io/ebooks/the-conte…

Leonie retweeted

𝗦𝘁𝗼𝗽 𝘄𝗿𝗶𝘁𝗶𝗻𝗴 𝗯𝗲𝘁𝘁𝗲𝗿 𝗽𝗿𝗼𝗺𝗽𝘁𝘀.

Start engineering the system that feeds your LLM the right context at the right time.

We've just released our new e-book on 𝗖𝗼𝗻𝘁𝗲𝘅𝘁 𝗘𝗻𝗴𝗶𝗻𝗲𝗲𝗿𝗶𝗻𝗴 going into details on exactly this 🔽

Download it for free here: weaviate.io/ebooks/the-conte…

If you have built any sort of LLM-based application, you've probably struggled with this: the models are powerful, but alone, they can't access your private docs, have no memory of past conversations, and confidently make things up when they don’t know the answer.

The problem isn't the model's intelligence. It's that the model is fundamentally disconnected from the world.

You can't fix this with better prompts. You have to build a system around the model - and that system is what we call 𝗰𝗼𝗻𝘁𝗲𝘅𝘁 𝗲𝗻𝗴𝗶𝗻𝗲𝗲𝗿𝗶𝗻𝗴.

This ebook covers the six core components you need to transform an isolated model into a production-ready application:

𝗔𝗴𝗲𝗻𝘁𝘀 - The decision-making brain that orchestrates information flow

𝗤𝘂𝗲𝗿𝘆 𝗔𝘂𝗴𝗺𝗲𝗻𝘁𝗮𝘁𝗶𝗼𝗻 - Translating messy user requests into precise, machine-readable intent

𝗥𝗲𝘁𝗿𝗶𝗲𝘃𝗮𝗹 - Connecting models to your specific knowledge bases

𝗣𝗿𝗼𝗺𝗽𝘁𝗶𝗻𝗴 𝗧𝗲𝗰𝗵𝗻𝗶𝗾𝘂𝗲𝘀 - Giving clear, effective instructions that guide reasoning

𝗠𝗲𝗺𝗼𝗿𝘆 - Building systems with history and the ability to learn

𝗧𝗼𝗼𝗹𝘀 - Enabling direct action and interaction with live data

Each section includes practical examples, architectural patterns, and honest assessments of what works (and what doesn't). We cover everything from chunking strategies and semantic search to the Model Context Protocol and agentic orchestration.

The ebook also features real implementations like Glowe (our skincare knowledge app) and Elysia (our agentic RAG framework) to show these concepts in action.

This isn't just theory - it's the blueprint for building LLM systems that actually work in production.

Leonie retweeted

We just released our complete guide to Context Engineering.

(These 6 components are the future of production AI apps)

Every developer hits the same wall when building with Large Language Models: the model is brilliant but fundamentally disconnected. It can't access your private documents, has no memory of past conversations, and is limited by its context window.

The solution isn't better prompts. It's 𝗖𝗼𝗻𝘁𝗲𝘅𝘁 𝗘𝗻𝗴𝗶𝗻𝗲𝗲𝗿𝗶𝗻𝗴 - the discipline of architecting systems that feed LLMs the right information in the right way at the right time.

Our new ebook is the blueprint for building production-ready AI applications through 6 core components:

1️⃣ 𝗔𝗴𝗲𝗻𝘁𝘀: The decision-making brain that orchestrates information flow and adapts strategies dynamically

2️⃣ 𝗤𝘂𝗲𝗿𝘆 𝗔𝘂𝗴𝗺𝗲𝗻𝘁𝗮𝘁𝗶𝗼𝗻: Techniques for transforming messy user requests into precise, machine-readable intent through rewriting, expansion, and decomposition

3️⃣ 𝗥𝗲𝘁𝗿𝗶𝗲𝘃𝗮𝗹: Strategies for chunking and retrieving the perfect piece of information from your knowledge base (semantic chunking, late chunking, hierarchical approaches)

4️⃣ 𝗣𝗿𝗼𝗺𝗽𝘁𝗶𝗻𝗴 𝗧𝗲𝗰𝗵𝗻𝗶𝗾𝘂𝗲𝘀: From Chain of Thought to ReAct frameworks - how to guide model reasoning effectively

5️⃣ 𝗠𝗲𝗺𝗼𝗿𝘆: Architecting short-term and long-term memory systems that give your application a sense of history and the ability to learn

6️⃣ 𝗧𝗼𝗼𝗹𝘀: Connecting LLMs to the outside world through function calling, the Model Context Protocol (MCP), and composable architectures

We're not just teaching you to prompt a model - we're showing you how to architect the entire context system around it. This is what is going to take AI from demo status to actually useful production applications.

Each section includes practical examples, implementation guidance, and real-world frameworks you can use today.

Download it here: weaviate.io/ebooks/the-conte…

Memory in AI agents seems like a logical next step after RAG evolved to agentic RAG.

RAG: one-shot read-only

Agentic RAG: read-only via tool calls

Memory in AI agents: read-and-write via tool calls

Obviously, it's a little more complex than this.

I make my case here: leoniemonigatti.com/blog/fro…
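The three stages above differ mainly in which operations the model is allowed to trigger. Here is a deliberately simplified sketch of that difference; `MemoryStore`, the keyword matching, and the tool dictionaries are all hypothetical and stand in for a real vector store and a real tool-calling loop.

```python
from typing import Callable, Dict, List


class MemoryStore:
    """Toy store with naive keyword search instead of vector search."""

    def __init__(self) -> None:
        self._items: List[str] = []

    def search(self, query: str) -> List[str]:
        words = query.lower().split()
        return [t for t in self._items if any(w in t.lower() for w in words)]

    def write(self, text: str) -> None:
        self._items.append(text)


def one_shot_rag(store: MemoryStore, query: str) -> List[str]:
    """RAG: retrieval runs exactly once, before generation, and never writes."""
    return store.search(query)


def agentic_rag_tools(store: MemoryStore) -> Dict[str, Callable]:
    """Agentic RAG: the agent decides when to search, but can only read."""
    return {"search": store.search}


def agent_memory_tools(store: MemoryStore) -> Dict[str, Callable]:
    """Agent memory: the agent can read and also write new information."""
    return {"search": store.search, "write": store.write}


store = MemoryStore()
tools = agent_memory_tools(store)
tools["write"]("User prefers Python examples")
print(tools["search"]("python"))
```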

big week ahead.

my colleagues have some more treats prepared in case you didn’t have time to go trick or treating. 🍬

Leonie retweeted

This one sheet could straight-up 10x your vector search game.

From spinning up the Weaviate TypeScript client connection to running hybrid search, inserts, and managing collections… this sheet got it all!

Perfect for devs engineering advanced search tools, agents, or RAG pipelines, or who simply want to dodge constant doc hunts.

So, save it, use it (or even print it).

But yeah, keep the full docs handy too… just in case:

docs.weaviate.io/weaviate/cl…

Leonie retweeted

DSPy Meetup Tokyo in November

I signed up for DSPy Meetup Tokyo #1! dspy.connpass.com/event/3728… #dspytokyo

on my quest to catch up on memory in ai agents, i checked out letta and caught up on the memgpt paper (two years later...).

wrote some notes on both: leoniemonigatti.com/papers/m…

Leonie retweeted

DSPy Boston is a wrap! 🧩☘️

What an awesome night! It was incredible to meet so many people excited about DSPy! The talks from @vikramshenoy97, @NoahZiems, and @lateinteraction were all super inspiring!

Hope to be a part of more of these in the future. Thank you so much to everyone who attended, it was a really cool experience! 🙏💚

Leonie retweeted

Open-sourcing retrieve-dspy! 💻🚀

While developing Search Mode for Weaviate's Query Agent, we dove into the literature. It was amazing, and overwhelming, to see how many different takes on Compound Retrieval Systems there are! 📚

From perspectives on Reranking, such as to reason, or not to reason with Cross Encoders, Sliding Window Listwise Rankers, Top-Down Partitioning, Pairwise Ranking Policies, .... to Query Expansion, such as HyDE, LameR, ThinkQE, ..., Query Decomposition, Multi-Hop Retrieval, Adaptive Retrieval, and more...

There are endless design decisions for building Compound Retrieval Systems!! 🛠️

Inspired by the work on LangProbe from @ShangyinT et al., retrieve-dspy is a collection of DSPy programs from the IR literature. I hope this work will help us better compare these systems such as HyDE vs. ThinkQE, or Cross Encoders vs. Sliding Window Listwise Reranking, and understand the impact of DSPy's optimizers! 🧩🔥

The first step of many, repo linked below!

Leonie retweeted

Exciting day! DSPy Boston!! 🧩☘️🔥

The DSPy community is growing in Boston! ☘️🔥

We are beyond excited to be hosting a DSPy meetup on October 15th!

Come meet DSPy and AI builders and learn from talks by Omar Khattab (@lateinteraction), Noah Ziems (@NoahZiems), and Vikram Shenoy (@vikramshenoy97)!

See you in Boston, it will be an epic one!! 🎉

Sign up here - luma.com/4xa3nay1?tk=DvHED1

Sci-fi movie I’d watch:

Where the protagonist has to feed the AGI overlords conflicting information to poison their context window to defeat them through hallucinations.

my thought evolution on agent memory over the last few days:

> there's short-term and long-term memory

> there's working memory, procedural memory, episodic memory, semantic memory, recall storage, archival storage, ...

> there's stuff inside or outside of the context window