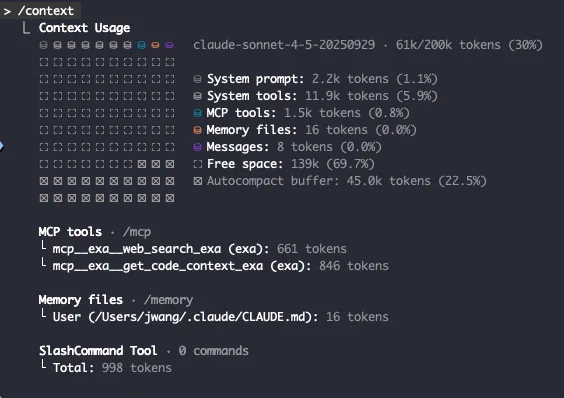

Yeah, that level of detail is very helpful. @OpenAIDevs should have that in Codex CLI too.

💯 This is so true. Providing that context for every code review costs thousands in reasoning tokens and manual bug checks.

Kluster.ai automates this in real-time, within your IDE. We handle the context so you save the tokens. 😉

Try it free: kluster.ai

I wish we had more control over the shape of that context. ESPECIALLY after a "summarization event". That process is rough and feels like the model (regardless of origin lab) was lobotomized.

Something like a knowledge graph, some Post-its, or even a sort of parallel, separate mini-exchange about what is important and what should persist would help.

Not sure whether an onion or a tree is the better paradigm, but AI RAM is in short supply.