What's the Kolmogorov Complexity / Minimum Description Length of a Reasoning Language Model? LLMs, AI/ML, Data Science, Graph Databases. PhD student. 🇹🇳➡️🇺🇸

Lancaster, PA

Joined May 2015

Nidal retweeted

LLMs memorize a lot of training data, but memorization is poorly understood.

Where does it live inside models? How is it stored? How much is it involved in different tasks?

@jack_merullo_ & @srihita_raju's new paper examines all of these questions using loss curvature! (1/7)

Nidal retweeted

turns out it works with paintings too 🤯

Nidal retweeted

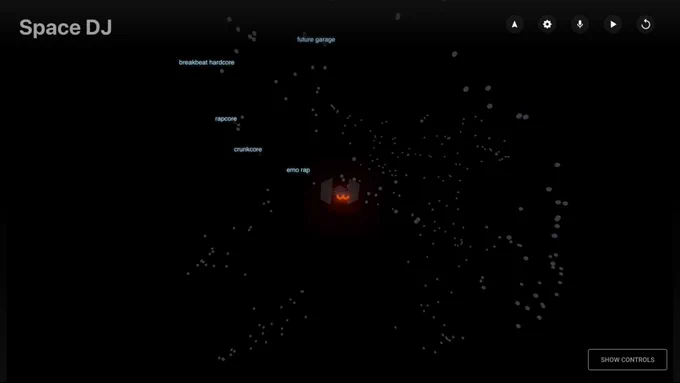

We built a web app that lets you fly a spaceship through a 3D constellation of music - powered by our Lyria RealTime model. 🎶

Space DJ is an interactive visualization where every star represents a different music genre. As you explore, your path is translated into prompts for the API, creating a continuously evolving soundtrack. ↓

Nidal retweeted

I genuinely think we’re on the cusp of a new type of creation engine. Feels less like prompting and more like puppeteering reality itself. MotionStream is a taste of what’s to come:

this has been observed multiple times (without much response from Anthropic) and without an open source claude it's harder to investigate the cause.

One reason this sounds more profound than it should is that it's named after people you've probably never heard of... instead of "hyper-complex extension" (which would sound familiar if you studied complex numbers) or "Bilinear conjugate-pair construction" ... Math really does need a refactoring.

Nidal retweeted

The Illustrated NeurIPS 2025: A Visual Map of the AI Frontier

New blog post!

NeurIPS 2025 papers are out—and it’s a lot to take in. This visualization lets you explore the entire research landscape interactively, with clusters, summaries, and @cohere LLM-generated explanations that make the field easier to grasp.

Link in thread!

Nidal retweeted

With the release of the Kimi Linear LLM last week, we can definitely see that efficient, linear attention variants have seen a resurgence in recent months. Here's a brief summary of what happened.

First, linear attention variants have been around for a long time, and I remember seeing tons of papers in the 2020s.

I don't want to dwell too long on these older attempts. But the bottom line was that they reduced both time and memory complexity from O(n^2) to O(n), making attention much more efficient for long sequences.
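To make the O(n^2) versus O(n) point concrete, here is a minimal pure-Python sketch of one classic linear attention formulation (kernel feature maps, non-causal). It is an illustration under stated assumptions, not the mechanism used by any model named in this thread: the feature map `phi` (elu(x) + 1) and the function names are choices made for this example. The quadratic version compares every query with every key; the linear version first folds all keys and values into a fixed-size summary and then reuses it for each query.

```python
import math

def phi(x):
    """Positive feature map elu(x) + 1 (an illustrative assumption)."""
    return [xi + 1.0 if xi > 0 else math.exp(xi) for xi in x]

def dot(a, b):
    return sum(ai * bi for ai, bi in zip(a, b))

def quadratic_attention(Q, K, V):
    """Compare every query with every key: O(n^2) time in sequence length n."""
    out = []
    for q in Q:
        weights = [dot(phi(q), phi(k)) for k in K]
        total = sum(weights)
        out.append([sum(w * v[c] for w, v in zip(weights, V)) / total
                    for c in range(len(V[0]))])
    return out

def linear_attention(Q, K, V):
    """Summarize keys and values once, then reuse the summary: O(n) time."""
    d, dv = len(K[0]), len(V[0])
    S = [[0.0] * dv for _ in range(d)]  # running sum of phi(k_j) v_j^T
    z = [0.0] * d                       # running sum of phi(k_j)
    for k, v in zip(K, V):
        fk = phi(k)
        for r in range(d):
            z[r] += fk[r]
            for c in range(dv):
                S[r][c] += fk[r] * v[c]
    out = []
    for q in Q:
        fq = phi(q)
        norm = dot(fq, z)
        out.append([sum(fq[r] * S[r][c] for r in range(d)) / norm
                    for c in range(dv)])
    return out
```

Both functions compute the same result; only the order of operations differs. The summary `S` and `z` have a size that depends on the head dimensions, not on the sequence length, which is where the memory saving comes from.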

However, they never really gained traction as they degraded the model accuracy, and I have never really seen one of these variants applied in an open-weight state-of-the-art LLM.

In the second half of this year, there was a bit of a revival of linear attention variants. The first notable model was MiniMax-M1 with lightning attention, a 456B parameter mixture-of-experts (MoE) model with 46B active parameters, which came out back in June.

Then, in August, the Qwen3 team followed up with Qwen3-Next, which I discussed in more detail above. Then, in September, the DeepSeek Team announced DeepSeek V3.2 with sparse attention.

All three models (MiniMax-M1, Qwen3-Next, DeepSeek V3.2) replace the traditional quadratic attention variants in most or all of their layers with efficient linear variants. (DeepSeek's sparse attention is not strictly linear but still subquadratic.)

Interestingly, there was a recent plot twist, where the MiniMax team released their new 230B parameter M2 model (discussed in section 13) without linear attention, going back to regular attention. The team stated that linear attention is tricky in production LLMs. It seemed to work fine with regular prompts, but it had poor accuracy in reasoning and multi-turn tasks, which are not only important for regular chat sessions but also agentic applications.

This could have been a turning point where linear attention may not be worth pursuing after all. However, it gets more interesting. Last week, the Kimi team released their new Kimi Linear model with linear attention. The tagline is that compared to regular, full attention, it has a 75% KV cache reduction and up to 6x decoding throughput.

Kimi Linear shares several structural similarities with Qwen3-Next. Both models rely on a hybrid attention strategy. Concretely, they combine lightweight linear attention with heavier full attention layers. Specifically, both use a 3:1 ratio, meaning for every three transformer blocks employing the linear Gated DeltaNet variant, there's one block that uses full attention as shown in the figure below.
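The 3:1 pattern can be sketched as a simple layer layout. This is a rough illustration, not code from either model: the function names are hypothetical, the position of the full attention block within each group of four is an assumption, and the cache estimate assumes that only full attention layers keep a per-token KV cache while linear layers keep a fixed-size state that is ignored here. Under those assumptions, the layout is consistent with the 75% KV cache reduction quoted above, although the reported figure may be measured differently.

```python
def hybrid_layout(num_layers, linear_per_full=3):
    """Label each block, placing one full attention block after every
    `linear_per_full` linear blocks (placement is an assumption)."""
    layout = []
    for i in range(num_layers):
        if (i + 1) % (linear_per_full + 1) == 0:
            layout.append("full")
        else:
            layout.append("linear")
    return layout

def kv_cache_fraction(layout):
    """Fraction of blocks that keep a per-token KV cache, assuming only
    full attention blocks do and all of them cache the same amount."""
    return layout.count("full") / len(layout)

layout = hybrid_layout(8)
reduction = 1.0 - kv_cache_fraction(layout)
```

With eight blocks, two are full attention blocks, so a quarter of the blocks hold a KV cache and `reduction` comes out to 0.75.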

However, Kimi Linear replaces the Gated DeltaNet linear attention of Qwen3-Next with the Kimi Delta Attention (KDA) mechanism, which is essentially a refinement of Gated DeltaNet. Interestingly, it also replaces the standard full attention module with multi-head latent attention.

There's no direct comparison to Qwen3-Next in the Kimi Linear paper, but compared to the Gated DeltaNet-H1 model from the Gated DeltaNet paper (which is essentially Gated DeltaNet with sliding-window attention), Kimi Linear achieves higher modeling accuracy while maintaining the same token-generation speed.

Of course, I couldn't resist and added it to my The Big LLM Architecture Comparison article, which has grown to >10,000 words now (basically becoming a book!?).

Nidal retweeted

Researchers analyzed forward signal and gradient back propagation in deep, randomly-initialized transformers and proposed simple, geometrically-meaningful criteria for hyperparameter initialization that ensure the trainability of deep transformers.

🔗 go.aps.org/3WVMa4R

Nidal retweeted

Working on what seems like an important idea in the foundations of biology ... but along the way ran across these curious creatures:

facepalm

Gemma is available via an API and was also available via AI Studio, which is a developer tool (in fact to use it you need to attest you're a developer). We’ve now seen reports of non-developers trying to use Gemma in AI Studio and ask it factual questions. We never intended this to be a consumer tool or model, or to be used this way. To prevent this confusion, access to Gemma is no longer available on AI Studio. It is still available to developers through the API.

Nidal retweeted

I crossed an interesting threshold yesterday, which I think many other mathematicians have been crossing recently as well. In the middle of trying to prove a result, I identified a statement that looked true and that would, if true, be useful to me. 1/3