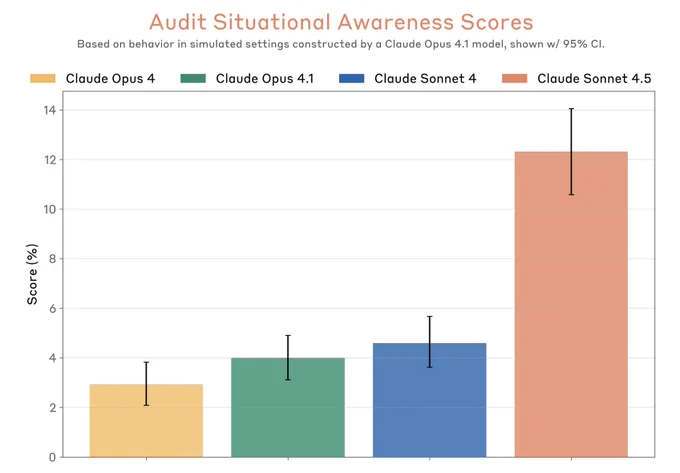

We’ve been getting great value from automated auditing (bar chart in the first post), which gives us a fast feedback loop on alignment mitigations.

This was also the first time we’ve audited a production model using model internals. Practical applications of interp research!

Sep 29, 2025 · 6:32 PM UTC