ML Researcher @AnthropicAI. Previously OpenAI & DeepMind. Optimizing for a post-AGI future where humanity flourishes. Opinions aren't my employer's.

San Francisco, USA

Joined March 2016

- Tweets 746

- Following 332

- Followers 116,928

- Likes 3,631

Pinned Tweet

I'm excited to join @AnthropicAI to continue the superalignment mission!

My new team will work on scalable oversight, weak-to-strong generalization, and automated alignment research.

If you're interested in joining, my DMs are open.

Jan Leike retweeted

At what is possibly a risk to my whole career, I will say: this doesn't seem great. Lately I have been describing my role as something like a "public advocate", so I'd be remiss if I didn't share some thoughts for the public on this. Some thoughts in thread...

Jan Leike retweeted

[Sonnet 4.5 🧵] Here's the north-star goal for our pre-deployment alignment evals work:

The information we share alongside a model should give you an accurate overall sense of the risks the model could pose. It won’t tell you everything, but you shouldn’t be...

Jan Leike retweeted

Prior to the release of Claude Sonnet 4.5, we conducted a white-box audit of the model, applying interpretability techniques to “read the model’s mind” in order to validate its reliability and alignment. This was the first such audit on a frontier LLM, to our knowledge. (1/15)

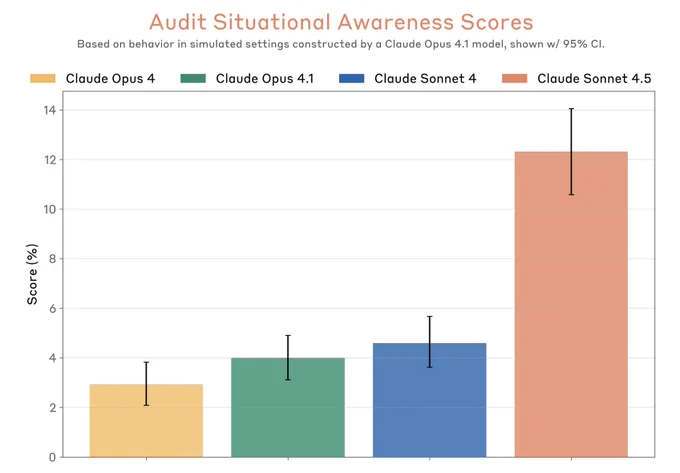

We’ve been getting great value from automated auditing (bar chart in the first post), providing a fast feedback loop on alignment mitigations.

This was also the first time we’ve been auditing a production model based on model internals. Practical applications of interp research!

2. We steered with various SAE features related to eval awareness. This can reduce the model's alignment scores, but mostly by making it more helpful-only rather than by making it deceptive.

For all the steering vectors we tried, Sonnet 4.5 was still more aligned than Sonnet 4.

They plan to use the highly successful playbook from the pro-crypto super PAC Fairshake. Here is how it works:

Instead of running campaign ads about AI directly (most voters don't care enough), they run ads on topics unrelated to AI that voters do care about, supporting candidates who oppose AI regulation or attacking candidates who favor it.

The fellows program has been very successful, so we want to scale it up and we're looking for someone to run it.

This is a pretty impactful role and you don't need to be very technical.

Plus Ethan is amazing to work with!

If you want to get into alignment research, imo this is one of the best ways to do it.

Some previous fellows did some of the most interesting research I've seen this year and >20% ended up joining Anthropic full-time.

Application deadline is this Sunday!