I make memes and talk to machines. building firetiger.

San Francisco

Joined April 2009


Rustam X. Lalkaka retweeted

So MCP requires OAuth dynamic client registration (RFC 7591), which practically nobody actually implemented prior to MCP. DCR might as well have been introduced by MCP, and may actually be the most important unlock in the whole spec.
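For anyone who hasn't touched DCR: under RFC 7591 a client registers itself by POSTing a JSON document of client metadata to the authorization server's registration endpoint and gets a `client_id` back, so nobody has to pre-provision credentials by hand. A minimal sketch using only the standard library, assuming a hypothetical endpoint URL, client name, and redirect URI; it builds the request but does not send it.

```python
import json
import urllib.request

# Hypothetical registration endpoint; a real client would typically discover it
# from the authorization server's metadata instead of hard-coding it.
REGISTRATION_ENDPOINT = "https://auth.example.com/register"


def build_registration_request(client_name: str, redirect_uri: str) -> urllib.request.Request:
    """Build (but do not send) an RFC 7591 dynamic client registration request."""
    metadata = {
        "client_name": client_name,
        "redirect_uris": [redirect_uri],
        # Public client (e.g. a local agent) that holds no client secret.
        "token_endpoint_auth_method": "none",
        "grant_types": ["authorization_code", "refresh_token"],
        "response_types": ["code"],
    }
    return urllib.request.Request(
        REGISTRATION_ENDPOINT,
        data=json.dumps(metadata).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


req = build_registration_request("my-agent", "http://localhost:8765/callback")
print(req.get_method(), req.full_url)
print(json.loads(req.data))
```

Sending it with `urllib.request.urlopen(req)` should return a JSON body that includes at least the newly issued `client_id`.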

people who got hyped on MCP at the beginning of the year were really hyped about tool use finally being reliable, and couldn't clearly distinguish between the two

*gets up on soap box*

With the announcement of this new "code mode" from Anthropic and Cloudflare, I've gotta rant about LLMs, MCP, and tool-calling for a second

Let's all remember where this started

LLMs were bad at writing JSON

So OpenAI asked us to write good JSON schemas & OpenAPI specs

But LLMs sucked at tool calling, so it didn't matter. OpenAPI specs were too long, so everyone wrote custom subsets
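The "custom subset" step usually meant flattening each OpenAPI operation into a function-calling tool definition by hand. A rough sketch, assuming a simplified operation object with only `operationId`, `summary`, and path/query `parameters` (no request bodies, `$ref`s, or auth); the output follows the shape of OpenAI's `tools` entries, and the `getInvoice` operation is made up for illustration.

```python
import json


def operation_to_tool(operation: dict) -> dict:
    """Flatten a (simplified) OpenAPI operation object into a tool definition."""
    properties = {}
    required = []
    for param in operation.get("parameters", []):
        # Copy the parameter's JSON schema, carrying over its description if any.
        schema = dict(param.get("schema", {}))
        if "description" in param:
            schema["description"] = param["description"]
        properties[param["name"]] = schema
        if param.get("required", False):
            required.append(param["name"])
    return {
        "type": "function",
        "function": {
            "name": operation["operationId"],
            "description": operation.get("summary", ""),
            "parameters": {
                "type": "object",
                "properties": properties,
                "required": required,
            },
        },
    }


# Hypothetical operation, as it might appear under paths./invoices/{id}.get
get_invoice = {
    "operationId": "getInvoice",
    "summary": "Fetch a single invoice by ID",
    "parameters": [
        {"name": "id", "in": "path", "required": True, "schema": {"type": "string"}},
        {"name": "expand", "in": "query", "schema": {"type": "boolean"}},
    ],
}

print(json.dumps(operation_to_tool(get_invoice), indent=2))
```

Every real spec has some feature (bodies, `oneOf`, pagination) that this drops, which is roughly why everyone ended up with their own subset.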

Then LLMs got good at tool calling (yay!) but everyone had to integrate differently with every LLM

Then MCP comes along and promises a write-once, integrate-everywhere story.

It's OpenAPI all over again. MCP is just OpenAPI with slightly different formatting, and there's no real justification for redoing the work we already did on OpenAPI specs, just in a different shape

MCP itself goes through a lot of iteration. Every company ships MCP servers. Hype is through the roof. Yet actual MCP use is super niche

But now we hear MCP has problems. It uses way too many tokens. It's not composable.

So now Cloudflare and Anthropic tell us it's better to use "code mode", where we have the model write code directly

Now this next part sounds like a joke, but it's not. They generate a TypeScript SDK based on the MCP server, and then ask the LLM to write code using that SDK

Are you kidding me? After all this, we want the LLM to use the SAME EXACT INTERFACE that human programmers use?

I already had a good SDK at the beginning of all this, automatically generated from my OpenAPI spec (shout-out @StainlessAPI)

Why did we do all this tool calling nonsense? Can LLMs effectively write JSON and use SDKs now?

The central thesis of my rant is that OpenAI and Anthropic are platforms and they run "app stores" but they don't take this responsibility and opportunity seriously. And it's been this way for years. The quality bar is so much lower than the rest of the stuff they ship. They need to invest like Apple does in Swift and Xcode. They think they're an API company like Stripe, but they're a platform company, like an OS.

I, as a developer, don't want to build a custom ChatGPT clone for my domain. I want to ship ChatGPT and Claude apps so folks can access my service from the AI they already use

Thanks for coming to my TED talk

Rustam X. Lalkaka retweeted

😂😂

real story is @eaufavor hadn't earned the right to call me Rusty yet (only my mom and dad are allowed), so he certainly wasn't allowed to name a high performance proxy that.

so we settled on naming it after a beautiful mountain in Wyoming I'd just been to instead.

@sgisasi i can't decide if this was a funny prank or cruel trick

have more agents or humans been confused by the Cloudflare BotScore using a higher number == more human?

just watched @__Achille__ do math with sed and awk. proof he is an LLM.

Rustam X. Lalkaka retweeted

while i'm making random content recommendations, please watch this 2 hour film about people getting into extreme birding. it's excellent, and free, and really inscrutably enjoyable piped.video/watch?v=zl-wAqpl…

almost posted something thought leadery about The Goal on here but decided that is for linkedin. the book is good though, you should read it. like one of those mysteries you read in 4th grade, but about industrial engineering and finding ROI in AI.

this very curious little fellow jogged early computer gaming memories I didn't know existed in my head

piped.video/watch?v=Ra1Omr_W…

most offensive part about this ad on google docs is the inconsistent use of the Oxford comma @sundarpichai

Rustam X. Lalkaka retweeted

this is/was an unsung part of what makes @Cloudflare special — the internal blog and culture around it is amazing, and the story telling it encourages is what feeds the lauded public blog

Rustam X. Lalkaka retweeted

A bill passed by the NYS Legislature requiring two-person crews for all subway trains would not only leave NY in the past—no other major transit system requires this—it would also cause immediate service cuts wherever trains operate with one person now, including on the G and M.

agree with most of this, and also think that folks building "SREs, but AI" are building faster horses.

the game has changed, and we need to rethink what debugging and operating software will look like from first principles.

Fresh from my talk at @arizeai Observe last week: We're at an inflection point in software engineering. AI is completely changing how we build and operate apps. Here's how observability needs to evolve... 👇

Rustam X. Lalkaka retweeted

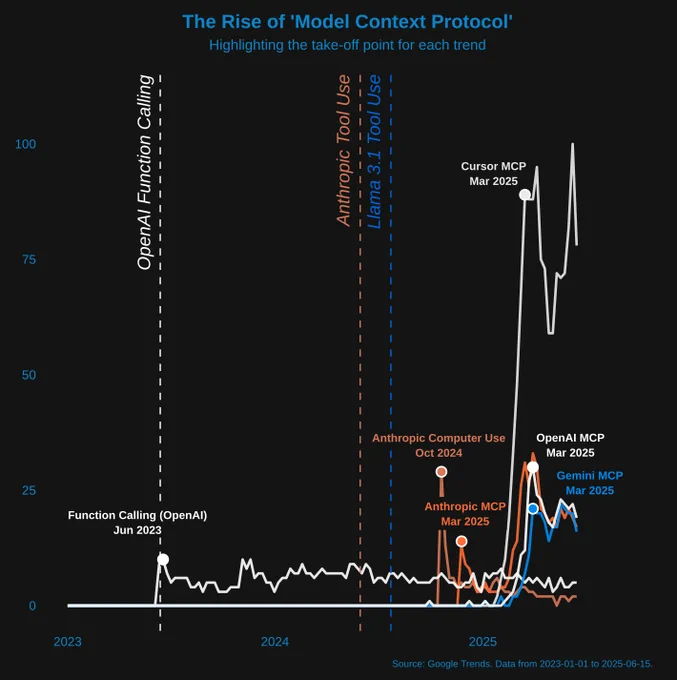

A Brief Visual History of Function Calling and MCP

On June 13, 2023, OpenAI introduced function calling, describing it as “a new way to more reliably connect GPT's capabilities with external tools and APIs.” Remember those GPTs? Anyway, at its core, this simply meant that OpenAI further trained gpt-4-0613 and gpt-3.5-turbo-0613 to “intelligently choose to output a JSON object containing arguments to call [...] functions.” This output could then be parsed, used to run code, and the results (as a string) returned to the LLM. The LLM would then digest the results and respond to the user with this augmented information.
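The round trip described above (the model emits a JSON object naming a function and its arguments, your code parses it, runs the function, and hands the result back as a string) can be sketched without any SDK. This assumes the tool-call shape of OpenAI's Chat Completions API, where `arguments` arrives as a JSON-encoded string; the model's output is hard-coded here, and `add` is a hypothetical tool.

```python
import json


# Hypothetical local tool the model is allowed to call.
def add(a: float, b: float) -> float:
    return a + b


TOOLS = {"add": add}

# A tool call in the Chat Completions format
# (hard-coded here instead of coming from an API response).
tool_call = {
    "id": "call_1",
    "type": "function",
    "function": {"name": "add", "arguments": "{\"a\": 2, \"b\": 3}"},
}


def run_tool_call(call: dict) -> dict:
    """Parse the model's JSON arguments, run the tool, and build the reply message."""
    fn = TOOLS[call["function"]["name"]]
    kwargs = json.loads(call["function"]["arguments"])  # raises if the JSON is malformed
    result = fn(**kwargs)
    # The result goes back to the model as a string in a "tool" role message.
    return {"role": "tool", "tool_call_id": call["id"], "content": str(result)}


print(run_tool_call(tool_call))
# {'role': 'tool', 'tool_call_id': 'call_1', 'content': '5'}
```

In a real loop that message is appended to the conversation and the model is called again, so it can answer the user with the result folded in.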

It took almost a full year for Anthropic to support a similar feature, but they quickly put it to impressive use in computer control tasks. Shortly after, open-source models (like Llama 3.1) were also fine-tuned to support tool calling.

Not long after introducing computer control, Anthropic launched the Model Context Protocol (MCP).

Ultimately, tools really gained widespread excitement and attention when MCP was integrated into Cursor.

So now, a patch that was originally introduced to give GPTs more power safely has become the standard for tool calling. But this standard is probably much worse than simply telling your LLM to use a markdown code chunk with a special `# | run`…

```python
# | run
from datetime import datetime

# Get the current date and time
now = datetime.now()

# Print the time in HH:MM:SS format
current_time = now.strftime("%H:%M:%S")
print("Current Time =", current_time)
```
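The host side of the markdown-chunk alternative needs very little plumbing: find fenced Python chunks in the model's reply whose first line is the `# | run` marker, execute them, and capture what they print. A minimal sketch under those assumptions; the marker convention comes from the post, while `run_marked_chunks` and the regex are illustrative. `exec` gives model-written code full access to the host process, so anything beyond a demo needs a real sandbox.

```python
import contextlib
import io
import re

FENCE = "`" * 3  # built indirectly so this example nests cleanly in markdown

# A fenced python chunk: opening fence tagged "python", body, closing fence.
CHUNK_RE = re.compile(FENCE + r"python\n(.*?)" + FENCE, re.DOTALL)


def run_marked_chunks(reply: str) -> list[str]:
    """Execute every python chunk whose first non-blank line is '# | run'."""
    outputs = []
    for body in CHUNK_RE.findall(reply):
        lines = [line for line in body.splitlines() if line.strip()]
        if not lines or lines[0].strip() != "# | run":
            continue  # unmarked chunks are just shown to the user, not executed
        buffer = io.StringIO()
        with contextlib.redirect_stdout(buffer):
            exec(body, {})  # no sandboxing: demo only
        outputs.append(buffer.getvalue())
    return outputs


reply = (
    "Sure, here's the sum:\n"
    f"{FENCE}python\n# | run\nprint(2 + 3)\n{FENCE}\n"
    f"And an example I won't run:\n{FENCE}python\nprint('hi')\n{FENCE}\n"
)
print(run_marked_chunks(reply))  # ['5\n']
```

The captured output would then be fed back to the model as plain text, the same way a function-calling result is.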

Rustam X. Lalkaka retweeted

For high-growth startups, every minute shaved off contract negotiations = faster revenue. @crosbylegal combines AI’s speed & intelligence with the safety of lawyers to review contracts in under an hour.

Proud to partner with @Ryanjdaniels & @jsarihan

sequoiacap.com/article/partn…

Today we’re introducing Crosby, a hybrid AI law firm that helps rapidly growing businesses execute faster.

Contracts are connection points. They allow companies to transact with one another and create economic growth. But while every aspect of business has sped up, the way we negotiate contracts hasn’t changed in 50 years.

Crosby is building the API for human agreement. We combine the speed and intelligence of AI with the safety of lawyers-in-the-loop to review contracts in under an hour.

Since quietly launching in January, we’ve reviewed over 1,000 MSAs, DPAs and NDAs for some of the fastest growing companies in history, including Cursor, Clay and UnifyGTM. Speed to execution is our north star, and today our median review time is 58 minutes. GTM teams call Crosby a secret weapon to close deals 80% faster. We’re just getting started.

Today, we’re also excited to share that we’ve raised $5.8m from Sequoia Capital and Bain Capital Ventures, as well as the founders of Ramp, Instacart, Flatiron Health, and others.

Crosby is a small, talent-dense team, combining lawyers from Harvard, Stanford, and Columbia Law with engineers from Ramp, Vanta, Meta, and Google. Every engineer on our team today is a former founder. We work in person in New York City.

If our mission resonates with you, we are looking for technologists, legal experts, and former founders to join us.

For high-growth companies looking to execute faster, we’ve opened up Early Access. Sign up on our website and we’ll be in touch.