Creator @datasetteproj, co-creator Django. PSF board. Hangs out with @natbat. He/Him. Mastodon: fedi.simonwillison.net/@simo… Bsky: simonwillison.net

San Francisco, CA

Joined November 2006

- Tweets 58,902

- Following 5,569

- Followers 121,602

- Likes 61,569

Simon Willison retweeted

The big advantage of MCP over OpenAPI is that it is very clear about auth. OpenAPI supports too many different auth mechanisms, and the schema doesn't necessarily have enough information for a robot to be able to complete the auth flow.

*gets up on soap box*

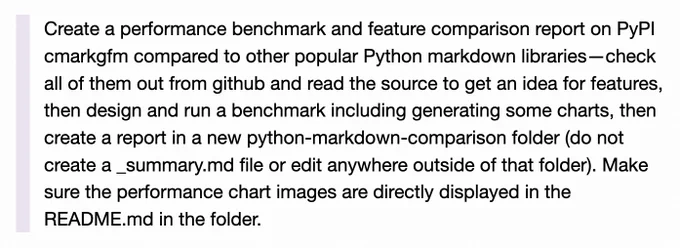

With the announcement of this new "code mode" from Anthropic and Cloudflare, I've gotta rant about LLMs, MCP, and tool-calling for a second

Let's all remember where this started

LLMs were bad at writing JSON

So OpenAI asked us to write good JSON schemas & OpenAPI specs

But LLMs sucked at tool calling, so it didn't matter. OpenAPI specs were too long, so everyone wrote custom subsets

Then LLMs got good at tool calling (yay!) but everyone had to integrate differently with every LLM

Then MCP comes along and promises a write-once, integrate-everywhere story.

It's OpenAPI all over again. MCP is just OpenAPI with slightly different formatting, and there's no real justification for redoing all the work we already put into OpenAPI specs, only slightly differently

MCP itself goes through a lot of iteration. Every company ships MCP servers. Hype is through the roof. Yet actual use of MCP is super niche

But now we hear MCP has problems. It uses way too many tokens. It's not composable.

So now Cloudflare and Anthropic tell us it's better to use "code mode", where we have the model write code directly

Now this next part sounds like a joke, but it's not. They generate a TypeScript SDK based on the MCP server, and then ask the LLM to write code using that SDK

Are you kidding me? After all this, we want the LLM to use the SAME EXACT INTERFACE that human programmers use?

I already had a good SDK at the beginning of all this, automatically generated from my OpenAPI spec (shout-out @StainlessAPI)

Why did we do all this tool calling nonsense? Can LLMs effectively write JSON and use SDKs now?

The central thesis of my rant is that OpenAI and Anthropic are platforms and they run "app stores" but they don't take this responsibility and opportunity seriously. And it's been this way for years. The quality bar is so much lower than the rest of the stuff they ship. They need to invest like Apple does in Swift and Xcode. They think they're an API company like Stripe, but they're a platform company like an OS.

I, as a developer, don't want to build a custom ChatGPT clone for my domain. I want to ship ChatGPT and Claude apps so folks can access my service from the AI they already use

Thanks for coming to my TED talk
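The "code mode" approach described in this thread replaces JSON tool calls with a generated TypeScript API that the model writes code against. Below is a minimal sketch of just the generation step. It assumes the standard MCP tool fields (`name`, `description`, `inputSchema`) and handles only a tiny subset of JSON Schema. `schema_to_ts`, `tool_to_ts` and the `search_issues` tool are hypothetical names, and this is not Cloudflare's or Anthropic's actual generator.

```python
# Hypothetical sketch: render MCP tool definitions as TypeScript declarations.
# Only a tiny subset of JSON Schema is handled; real generators do far more.

JSON_TO_TS = {
    "string": "string",
    "number": "number",
    "integer": "number",
    "boolean": "boolean",
    "null": "null",
}


def schema_to_ts(schema: dict) -> str:
    """Render a small subset of JSON Schema as a TypeScript type expression."""
    kind = schema.get("type")
    if kind == "object":
        required = set(schema.get("required", []))
        fields = []
        for name, prop in schema.get("properties", {}).items():
            # Properties not listed in "required" become optional (name?: type).
            optional = "" if name in required else "?"
            fields.append(f"{name}{optional}: {schema_to_ts(prop)}")
        return "{ " + "; ".join(fields) + " }"
    if kind == "array":
        return f"{schema_to_ts(schema.get('items', {}))}[]"
    # Anything unrecognised falls back to TypeScript's "unknown".
    return JSON_TO_TS.get(kind, "unknown")


def tool_to_ts(tool: dict) -> str:
    """Turn one MCP-style tool ({name, description, inputSchema}) into a declaration."""
    doc = f"/** {tool.get('description', '')} */"
    params = schema_to_ts(tool["inputSchema"])
    return f"{doc}\ndeclare function {tool['name']}(input: {params}): Promise<unknown>;"


# Hypothetical tool, shaped like an entry from an MCP server's tools/list response.
search_tool = {
    "name": "search_issues",
    "description": "Search issues in a repository",
    "inputSchema": {
        "type": "object",
        "properties": {
            "query": {"type": "string"},
            "limit": {"type": "integer"},
        },
        "required": ["query"],
    },
}

print(tool_to_ts(search_tool))
```

The printed declaration ends with `declare function search_issues(input: { query: string; limit?: number }): Promise<unknown>;`. A model would then call this function from generated code rather than emitting a JSON tool call. That is essentially the same shape as an SDK generated from an OpenAPI spec, which is the point the rant is making.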

Simon Willison retweeted

👀 Animals have been assigned.

Scheduled to print fall 2026!

We have iterated on this with over 3k students (and continue to do so). We give our students access to the full draft as part of our evals course (link in bio).

Added the @Kimi_Moonshot model prices to llm-prices.com/#it=10000&cit… ... by pasting a screenshot of their pricing page into a GitHub Issue and assigning it to GitHub Copilot github.com/simonw/llm-prices…

Simon Willison retweeted

GPT-5-Codex-Mini allows roughly 4x more usage than GPT-5-Codex, at a slight capability tradeoff due to the more compact model.

Available in the CLI and IDE extension when you sign in with ChatGPT, with API support coming soon.

"It has never been easier to build an MVP and in turn, it has never been harder to keep focus. When new features always feel like they're just a prompt away, feature creep feels like a never ending battle. Being disciplined is more important than ever."

I really feel this one!

6 months ago @Sentry acquired our company & since then I've been experimenting a lot with AI

I wrote up some of my thoughts on vibe coding & dealing with "AiDHD"

josh.ing/blog/aidhd

I have a hunch that current LLMs might make it easier to launch a brand new programming language, provided you can describe it in a few thousand tokens and ship it with a compiler and linter that coding agents can use simonwillison.net/2025/Nov/7…

New TIL: Using Codex CLI with gpt-oss:120b on an NVIDIA DGX Spark via Tailscale til.simonwillison.net/llms/c…

Here's K2 Thinking running on a pair of M3 Ultra Mac Studios via MLX

Kimi K2 Thinking is "potentially the new leading open weight" according to @ArtificialAnlys

MoonshotAI has released Kimi K2 Thinking, a new reasoning variant of Kimi K2 that achieves #1 in the Tau2 Bench Telecom agentic benchmark and is potentially the new leading open weights model

Kimi K2 Thinking is one of the largest open weights models ever, at 1T total parameters with 32B active. K2 Thinking is the first reasoning model release within @Kimi_Moonshot's Kimi K2 model family, following non-reasoning Kimi K2 Instruct models released previously in July and September 2025.

Key takeaways:

➤ Strong performance on agentic tasks: Kimi K2 Thinking achieves 93% in 𝜏²-Bench Telecom, an agentic tool use benchmark where the model acts as a customer service agent. This is the highest score we have independently measured. Tool use in long horizon agentic contexts was a strength of Kimi K2 Instruct and it appears this new Thinking variant makes substantial gains

➤ Reasoning variant of Kimi K2 Instruct: The model, as per its naming, is a reasoning variant of Kimi K2 Instruct. The model has the same architecture and same number of parameters (though different precision) as Kimi K2 Instruct and like K2 Instruct only supports text as an input (and output) modality

➤ 1T parameters but INT4 instead of FP8: Unlike Moonshot’s prior Kimi K2 Instruct releases that used FP8 precision, this model has been released natively in INT4 precision. Moonshot used quantization aware training in the post-training phase to achieve this. The impact of this is that K2 Thinking is only ~594GB, compared to just over 1TB for K2 Instruct and K2 Instruct 0905 - which translates into efficiency gains for inference and training. A potential reason for INT4 is that pre-Blackwell NVIDIA GPUs do not have support for FP4, making INT4 more suitable for achieving efficiency gains on earlier hardware.

Our full set of Artificial Analysis Intelligence Index benchmarks are in progress and we will provide an update as soon as they are complete.
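The size comparison above comes down to bytes per parameter. Here is a back-of-envelope sketch. It assumes a round 10^12 parameters, and it also assumes every weight is stored at a single precision. Neither assumption is exactly true for the released checkpoints. FP8 costs one byte per parameter and INT4 costs half a byte.

```python
def weight_storage_gb(params: float, bits_per_param: int) -> float:
    """Naive weight size in GB (10**9 bytes), assuming one precision for all weights."""
    return params * bits_per_param / 8 / 1e9


TOTAL_PARAMS = 1e12  # "1T total parameters", rounded for this estimate

fp8_gb = weight_storage_gb(TOTAL_PARAMS, 8)   # FP8, as in the K2 Instruct releases
int4_gb = weight_storage_gb(TOTAL_PARAMS, 4)  # INT4, as in K2 Thinking

print(f"FP8:  {fp8_gb:,.0f} GB")
print(f"INT4: {int4_gb:,.0f} GB")
```

The FP8 estimate of 1,000 GB lines up with "just over 1TB" for K2 Instruct. The naive INT4 figure of 500 GB is below the reported ~594GB. The gap is plausibly explained by some tensors kept at higher precision plus quantization scale factors, but that breakdown is an assumption and is not stated in the thread.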

Notes on Kimi K2 Thinking, the huge new open weights (but not open source, it's under a "modified MIT license") model from Moonshot AI simonwillison.net/2025/Nov/6…

Simon Willison retweeted

🚨 Potential GPT-5.1 Model: Polaris Alpha

It's now available on OpenRouter for everyone to use. Here's the Pelican SVG. It's not reasoning, but still, performance looks promising. Will test further.

🚨The new cloaked "Polaris Alpha" model on @OpenRouterAI appears to be an OpenAI model. It also has configurable reasoning effort (Low/Med/High).

GPT-5.1 or Codex Mini are contenders for what this might be.

h/t @DavidSZD1

I found this code example really useful for helping me understand the details of what the new (free) file search RAG feature in the Gemini API can do

uv makes testing different projects against upgraded dependencies so much easier - no need to think about virtual environments, uv handles them almost invisibly

I wrote more about my uv testing tricks in this TIL til.simonwillison.net/python…

Here are detailed notes to accompany the video on my blog: simonwillison.net/2025/Nov/6…

Made a new video demonstrating my process for upgrading a Datasette plugin using uv and an OpenAI Codex bash one-liner piped.video/watch?v=qy4ci7Ao…

Simon Willison retweeted

Great hack from @simonw: make a GitHub repo *just* for experiments, then assign async cloud-based coding agents to a research task. It's a sandboxed environment with no negative effects on your real repos.

I've been getting a lot of value using coding agents for code research tasks recently - I have a dedicated simonw/research GitHub repo and I frequently have them run detailed experiments and write up the results. Here's how I'm doing that + some examples:

simonwillison.net/2025/Nov/6…

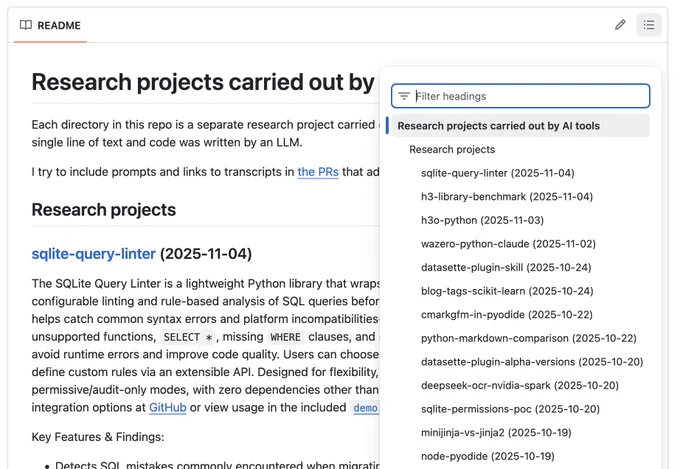

Here's my research repo - each of the 13 folders is a different research project, and the README is automatically updated by an LLM to include summaries describing each one github.com/simonw/research?t…
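One way to picture the automatically updated README is as an index with one section per project folder. Below is a minimal, hypothetical sketch of that indexing step. It is not how simonw/research actually does it. `build_index` and `first_line_summary` are illustrative names. The `summarize` hook stands in for the LLM call, and here it simply reads the first line of each folder's README.

```python
import tempfile
from pathlib import Path
from typing import Callable


def build_index(repo: Path, summarize: Callable[[Path], str]) -> str:
    """Build a Markdown index with one section per top-level project folder.

    `summarize` is a hypothetical hook: in the setup described it would be an
    LLM call; here it is any function returning a short summary string.
    """
    folders = sorted(p for p in repo.iterdir() if p.is_dir() and not p.name.startswith("."))
    sections = [f"### [{f.name}]({f.name}/)\n\n{summarize(f)}" for f in folders]
    return "# Research projects\n\n" + "\n\n".join(sections) + "\n"


def first_line_summary(folder: Path) -> str:
    """Stand-in for an LLM: use the first line of the folder's README, if any."""
    readme = folder / "README.md"
    if readme.exists():
        lines = readme.read_text().splitlines()
        return lines[0] if lines else "(empty README)"
    return "(no README yet)"


# Demo against a throwaway directory with two hypothetical project folders.
with tempfile.TemporaryDirectory() as tmp:
    repo = Path(tmp)
    for name in ["project-b", "project-a"]:
        (repo / name).mkdir()
    (repo / "project-b" / "README.md").write_text("Notes on experiment B\n")
    index_text = build_index(repo, first_line_summary)

print(index_text)
```

The summarizer is passed in as a function so the folder walk stays separate from the model call. You could swap an LLM-backed summarizer in without changing how the index is assembled.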
