fullstack-dev democratizing intelligence Basement AGI Club 9mcp inc. ezdoesit inc. github.gg @skunkworks_ai @alignment_lab | prev @ drgupta.ai

Toronto

Joined June 2008

- Tweets 24,873

- Following 7,574

- Followers 17,854

- Likes 82,360

Pinned Tweet

I'd💞to thank @DanielSnider from @VectorInst for helping draft this open architecture for Canadian hospitals to deploy AI in a safe, scalable & auditable manner.

It enables both public & private owned code to interoperably solve the whole problem.

CC3.0 license💫Feedback welcome

Man his druggie social circle really ruined him didn’t they.

We need him back in that spacex cubicle house, humanity does.

nisten🇨🇦e/acc retweeted

New weekend blogpost. Some light PTX exploration, and a simple Top-K kernel.

nisten🇨🇦e/acc retweeted

Read here: blog.alpindale.net/posts/top…

There might be a mistake here and there because I wrote it after a 10 hour EU5 session with no sleep.

p.s. sorry it was mi300

Anyway i have a test.

Can you fit 2-3 commands in a single standard tweet, including python3 -m venv meow && pip install vllm etc to run the most popular kimi k2 model properly with int4 weights and everything. On ANY AMD provider. Does it JUST F* WORK?

I think AMD's lack of success comes down to two questions:

How long does it take to get the thing going?

Does the thing actually do the thing?

Well, last time it took me, @Lantos1618 and @alwaysallison, each having a lot of devops work experience, nearly 3 days to get a node of 8 mi350x (192gb each) running. And last time I checked in January it didn't run any faster in fp8 vs bf16.

And yes it required an AMD dev pushing stuff over the weekend.

With the nvidia equivalent it would have taken only 1 of us under an hour to get it going, and it would have run 2-4x faster under 8bit or 4.

So to answer the question for AMD, the thing only does like half the thing and takes forever to get going.

I don't think this happens due to lack of talent or IQ at AMD, the hardware itself is phenomenal. This will be weird for me to say but I think it mainly stems from bad management not correcting bad engineering.

If I were in charge I would just ban the use of these zip files for individual customers, and force everyone to actually use the repo and get reviews done properly and merged before the customer is gone. It's kinda sad how much this bad engineering practice damages the company because otherwise the hardware itself is phenomenal.

tested kimi-k2-thinking on stream, it just works out of the box with claude code proxy, subagents too, todo lists, it all works.

price 60 cents in ( cached 15 cents ) output $2.50 per million tokens

If you want me to have it vibecode a UI idea post below:

x.com/i/broadcasts/1lDGLBVpg…
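To put the per-million-token prices quoted above into per-session terms, here is a minimal sketch. The three prices come from the post; the function name and the billing model (cached tokens counted as a subset of input tokens and billed at the cached rate) are assumptions for illustration, not a description of any provider's actual billing.

```python
# Prices quoted in the post, in USD per million tokens.
PRICE_INPUT = 0.60          # uncached input
PRICE_CACHED_INPUT = 0.15   # cached input
PRICE_OUTPUT = 2.50         # output


def estimate_cost(input_tokens, cached_tokens, output_tokens):
    """Rough session cost in USD.

    Assumption: cached_tokens is the part of input_tokens served from
    cache and is billed at the cached rate instead of the full rate.
    """
    if cached_tokens > input_tokens:
        raise ValueError("cached_tokens cannot exceed input_tokens")
    uncached = input_tokens - cached_tokens
    total = (
        uncached * PRICE_INPUT
        + cached_tokens * PRICE_CACHED_INPUT
        + output_tokens * PRICE_OUTPUT
    )
    return total / 1_000_000


if __name__ == "__main__":
    # Hypothetical agent session: 200k input tokens (150k of them cached)
    # and 20k output tokens.
    print(f"${estimate_cost(200_000, 150_000, 20_000):.4f}")
```

With numbers like these, output tokens account for about half the bill even though they are a tenth of the traffic, so long agent runs that emit a lot of text cost more than their input size suggests.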

Think of government as an algorithm & people as the .env

If admin gets .env right (cares about human beings) but picks a slow algo then the humans suffer in decay.

If admin gets the algo right but starts deleting .envs then humans also suffer & algo crashes.

Gotta get both right.

it's not asahi I made my own.

idles at 180mb ram in console/TUI mode, yes runs on a raspberry pi zero, runs on regular nvidia/x86 too

ui tested 2k 144fps smooth

oh no google removed annas archive from the web so the urls wont show in the results.

annas-archive.org/

annas-archive.se/

annas-archive.li/

80 year long holding copyright for books is basically digital SQUATTING. Max 30year & should be public domain.

I would've tolerated zig with some frowning because it's 70mb... so 3x larger but not f**** 50x wtff

and at least with zig you can reuse a lot of the c code still

but wtf man... no wonder rust tools just end up slower and crashing... these ppl self-induced morons

Why the F... did they put rust in the linux kernel.... now I need a GIGABYTE AND A FUCKING HALF of rustup slop just to patch a driver instead of just 24 mb of llvm + musl or clang...

HOW THE FUCK IS PUTTING OVER 50 TIMES YES FIFTY MORE F**** CODE GONNA MAKE IT SAFER I COULD TRAIN 1.5GB LLM TO DO THE MEMORY SAFETY CHECKS, TYPES & OWNERSHIP AT TEST TIME INSTEAD.

OH AND GUESS WHAT, SURPRISE FUCKING IDIOTS, THE WHOLE TOOLCHAIN IS INFESTED. YEAH NO SHIT, IF YOU PUT 50 TIMES MORE FUCKING CODE IN IT THAT NO ONES GONNA READ.... IT DOESNT EVEN FIT ON AN LLM CUZ ITS LIKE 400 Million TOKENS.

Yes 400 million, vocab size 200-256k means ~ 1/4 ratio of tokens / bytes. As for llvm .. 26mb... of binaries too i dont fkin know... but i know you can actually just feed that in 5-6 calls to sonnet-1M for a review.

Yeah anyway, RUST SUCKS. I'm just gonna get an agent to convert this slop back to c, fck this shit.

blog.rust-lang.org/2024/04/0…
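The back-of-envelope token math in the rant above can be checked with a short sketch. The ~1/4 tokens-per-byte ratio and the sizes (1.5 GB, 24 MB, 26 MB) are the post's own figures; the function names are hypothetical, and reading "sonnet-1M" as a 1,000,000-token context window is an assumption. The estimate also ignores prompt overhead and the fact that binaries may tokenize very differently from source text.

```python
TOKENS_PER_BYTE = 0.25  # the post's rough ratio for a 200-256k vocab


def estimate_tokens(n_bytes, tokens_per_byte=TOKENS_PER_BYTE):
    """Rough token count for n_bytes of input at a fixed ratio."""
    return int(n_bytes * tokens_per_byte)


def calls_needed(n_tokens, context_window):
    """Minimum number of calls if every call fills the whole window."""
    return -(-n_tokens // context_window)  # ceiling division on ints


if __name__ == "__main__":
    window = 1_000_000  # assumed context size for "sonnet-1M"
    for label, size in [
        ("1.5 GB toolchain", 1_500_000_000),
        ("24 MB llvm + musl", 24_000_000),
        ("26 MB llvm", 26_000_000),
    ]:
        tokens = estimate_tokens(size)
        print(label, tokens, "tokens,", calls_needed(tokens, window), "calls")
```

At this ratio 1.5 GB comes out to 375 million tokens, which is where the "like 400 million" figure lands after rounding, while the 24-26 MB figures come out to 6-7 million tokens, close to the post's 5-6 calls.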

nisten🇨🇦e/acc retweeted

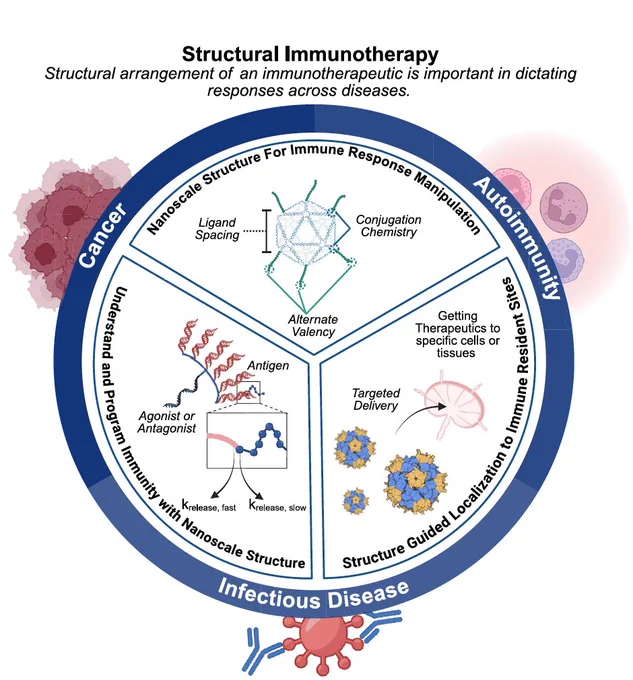

We keep getting better at controlling the immune system, with big implications for treating diseases

1. cGAS delivery via mRNA/nanoparticle revs up cancer immunotherapy pnas.org/doi/suppl/10.1073/p…

2. Off-the-shelf CAR-T as good as patient-specific for lymphoma

x.com/adamfeuerstein/status/… @adamfeuerstein

3. Structural immunotherapy pnas.org/doi/10.1073/pnas.24…

this does NOT mean however that long context is necessarily worse. I.e. if you smoothly feed the LLMyoghurt your design document, and all custom styling code, and tailwind 4 guidelines and tutorials... and THEN 80k tokens later you give a prompt... it will often do an AMAZING job.