embedded curiosity

Strasbourg - Basel - Stuttgart

Joined January 2012

- Tweets 4,121

- Following 1,902

- Followers 94

- Likes 57

zepi retweeted

Linux Process Management

1. Introduction

→ A process is an instance of a running program in Linux.

→ Each process has its own memory space, execution context, and system resources.

→ Process Management involves creation, scheduling, monitoring, and termination of processes.

2. Process Lifecycle

→ The typical lifecycle of a process includes:

→ Creation — initiated by another process (usually the shell).

→ Execution — process runs and performs tasks.

→ Waiting — process may wait for resources or events.

→ Termination — process completes or is killed.

→ The kernel manages all transitions between these states.

3. Process Types

→ Foreground Process:

→ Runs interactively and occupies the terminal.

→ Example: vim file.txt

→ Background Process:

→ Runs without terminal interaction.

→ Example: ./script.sh &

→ Daemon Process:

→ Runs continuously in the background providing services.

→ Example: sshd, cron, systemd.

4. Process Identification

→ Each process is identified by a PID (Process ID).

→ The PPID (Parent Process ID) identifies the process that created it.

→ The init process (systemd on most modern distributions) always has PID 1 and is the ancestor of all user-space processes; kernel threads descend from kthreadd (PID 2).

→ To view process IDs:

→ ps

→ top

→ pgrep process_name
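The same identifiers are available to a program about itself. A minimal Python sketch (the function name `describe_self` is made up for illustration):

```python
import os

def describe_self():
    """Return the PID and parent PID of the calling process."""
    return {"pid": os.getpid(), "ppid": os.getppid()}

if __name__ == "__main__":
    info = describe_self()
    print(f"PID={info['pid']} PPID={info['ppid']}")
```

Run from a shell, the PPID printed here should match the shell's own PID.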

5. Process Creation

→ Linux uses the fork() and exec() system calls for creating new processes.

→ fork()

→ Creates a duplicate of the current process.

→ Child process gets a unique PID.

→ exec()

→ Replaces the current process memory with a new program.

→ Used to run new executables.

→ Together, fork() and exec() form the basis of process creation in Linux.
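The fork-then-exec pattern can be sketched in Python, whose `os` module exposes thin wrappers over these calls. The helper name `run_child` is an assumption for illustration, and `os.waitstatus_to_exitcode` needs Python 3.9 or later:

```python
import os
import sys

def run_child(argv):
    """Fork, exec argv in the child, and return the child's exit code."""
    pid = os.fork()                      # duplicate the current process
    if pid == 0:
        # Child: replace this process image with the new program.
        try:
            os.execvp(argv[0], argv)
        finally:
            # Only reached if exec failed; exit without running parent cleanup.
            os._exit(127)
    # Parent: wait for the child and decode its wait status.
    _, status = os.waitpid(pid, 0)
    return os.waitstatus_to_exitcode(status)

if __name__ == "__main__":
    code = run_child([sys.executable, "-c", "import sys; sys.exit(3)"])
    print("child exited with", code)
```

The child keeps the PID it received from fork(); exec() changes the program, not the process.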

6. Process States

→ Linux processes can be in several states:

→ R (Running): Executing or ready to run.

→ S (Sleeping): Waiting for an event or resource.

→ D (Uninterruptible Sleep): Waiting, usually for I/O, and cannot be interrupted by signals.

→ T (Stopped): Suspended by a signal.

→ Z (Zombie): Finished execution but waiting for parent acknowledgment.

→ Use ps aux to view process states (the STAT column); these BSD-style options take no leading dash.
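On Linux the state letter that ps prints comes from /proc/<pid>/stat. A small sketch of reading it directly (the function names are illustrative; it assumes /proc is mounted):

```python
def parse_state(stat_line):
    """Extract the one-letter state from a /proc/<pid>/stat line."""
    # The command name is wrapped in parentheses and may itself contain
    # spaces or parentheses, so split after the last ')'.
    after_comm = stat_line.rsplit(")", 1)[1]
    return after_comm.split()[0]

def state_of(pid="self"):
    """Read the state of a process from /proc (Linux only)."""
    with open(f"/proc/{pid}/stat") as f:
        return parse_state(f.read())
```

Calling `state_of(1)` reports the state of PID 1, which is sleeping most of the time.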

7. Process Scheduling

→ The Linux Scheduler decides which process runs next on the CPU.

→ For normal tasks it used CFS (Completely Fair Scheduler) from kernel 2.6.23; kernel 6.6 replaced CFS with EEVDF (Earliest Eligible Virtual Deadline First).

→ Priorities are defined by nice values ranging from -20 (highest) to +19 (lowest).

→ Commands:

→ nice -n 10 command — start a command with lower priority (a higher nice value).

→ renice -n -5 -p PID — change the priority of a running process; lowering the nice value normally requires root.
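The effect of a nice increment can be observed from code. This sketch forks a child so that only the child is reniced; `renice_child` is a hypothetical helper name, and it relies on the kernel clamping nice values at 19:

```python
import os

def renice_child(increment):
    """Fork a child, add `increment` to its nice value, return (before, after)."""
    read_fd, write_fd = os.pipe()
    pid = os.fork()
    if pid == 0:
        # Child: only this process is reniced; the parent is untouched.
        status = 1
        try:
            os.close(read_fd)
            before = os.getpriority(os.PRIO_PROCESS, 0)   # 0 means "this process"
            after = os.nice(increment)                    # returns the new nice value
            os.write(write_fd, f"{before} {after}".encode())
            status = 0
        finally:
            os._exit(status)
    # Parent: read the child's report, then reap it.
    os.close(write_fd)
    with os.fdopen(read_fd) as reader:
        data = reader.read()
    os.waitpid(pid, 0)
    before, after = (int(x) for x in data.split())
    return before, after
```

Raising the nice value (lowering priority) needs no special privileges, which is why the sketch uses a positive increment.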

8. Process Monitoring

→ Commands for monitoring active processes:

→ ps — snapshot of running processes.

→ top — real-time process display.

→ htop — enhanced interactive version of top.

→ pidof — find the PID of a process.

→ pstree — view process hierarchy.

9. Process Control

→ Control running processes using shell commands:

→ & — run process in background.

→ Ctrl + Z — suspend process.

→ bg — resume process in background.

→ fg — bring process to foreground.

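→ kill PID — ask a process to terminate by sending SIGTERM (the default signal), which the process can catch in order to clean up.

→ kill -9 PID — forcefully terminate a process with SIGKILL, which cannot be caught or ignored.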
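What kill does can be reproduced from Python with the standard `subprocess` and `signal` modules. In this sketch (the function name is illustrative) the child is a throwaway Python process that just sleeps:

```python
import signal
import subprocess
import sys

def terminate_sleeper(sig=signal.SIGTERM):
    """Start a sleeping child, send it a signal, and return its return code."""
    child = subprocess.Popen([sys.executable, "-c", "import time; time.sleep(60)"])
    child.send_signal(sig)          # same effect as `kill -<sig> PID` from the shell
    return child.wait(timeout=10)   # negative value N means "killed by signal -N"
```

`subprocess` reports death by signal as a negative return code, so a child ended by SIGTERM yields `-signal.SIGTERM`.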

10. Zombie and Orphan Processes

→ Zombie Process:

→ Process completed but parent hasn’t read its exit status.

→ Exists as an entry in the process table.

→ Orphan Process:

→ Parent terminated before child.

→ Automatically adopted by init/systemd.

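→ Both show up in ps or top. A zombie cannot be killed; it disappears once its parent calls wait() to read the exit status, or once the parent exits and init/systemd reaps it.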
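A zombie is easy to produce on purpose. The sketch below is Linux-specific (it reads /proc, and `observe_zombie` is a made-up name): it lets a child exit, inspects it before reaping, then reaps it.

```python
import os

def observe_zombie():
    """Create a short-lived zombie; return its state letter and whether it survives reaping."""
    pid = os.fork()
    if pid == 0:
        os._exit(0)                        # child exits immediately
    # Block until the child has exited, but leave it unreaped (WNOWAIT).
    os.waitid(os.P_PID, pid, os.WEXITED | os.WNOWAIT)
    with open(f"/proc/{pid}/stat") as f:   # assumes /proc is mounted
        state = f.read().rsplit(")", 1)[1].split()[0]
    os.waitpid(pid, 0)                     # reap: the process-table entry is released
    still_listed = os.path.exists(f"/proc/{pid}")
    return state, still_listed
```

Between the child's exit and the parent's `waitpid` call, the child holds nothing but its process-table entry.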

11. Daemons and Services

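→ Daemons are background processes; on systemd-based distributions they are started and supervised by systemd as services.

→ Administrator-defined unit files are stored in /etc/systemd/system/; units shipped by packages usually live in /usr/lib/systemd/system/.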

→ Commands:

→ systemctl start service_name

→ systemctl stop service_name

→ systemctl status service_name

→ systemctl enable service_name

12. Inter-Process Communication (IPC)

→ Processes communicate using IPC mechanisms such as:

→ Pipes — for communication between related processes such as parent and child (named pipes, or FIFOs, also connect unrelated processes).

→ Message Queues — for message exchange.

→ Shared Memory — for data sharing.

→ Semaphores — for synchronization.

→ Signals — for notifications (e.g., SIGKILL, SIGTERM).
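As an illustration of the first mechanism in the list, here is a minimal anonymous pipe between a child and its parent. The helper name `child_to_parent` is an assumption for illustration:

```python
import os

def child_to_parent(message):
    """Send bytes from a forked child to its parent through an anonymous pipe."""
    read_fd, write_fd = os.pipe()
    pid = os.fork()
    if pid == 0:
        # Child: keep only the write end, send the message, and exit.
        try:
            os.close(read_fd)
            os.write(write_fd, message)
        finally:
            os._exit(0)
    # Parent: close the unused write end so read() sees EOF when the child is done.
    os.close(write_fd)
    with os.fdopen(read_fd, "rb") as reader:
        data = reader.read()
    os.waitpid(pid, 0)
    return data

if __name__ == "__main__":
    print(child_to_parent(b"hello from child"))
```

Closing the unused ends matters: if the parent kept its copy of the write end open, its read would never see end-of-file.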

Grab the Linux Mastery Handbook: codewithdhanian.gumroad.com/…

zepi retweeted

Knowing how to write assembly is a skill you should learn, and these guys have a great resource for you!

I've debugged 10,000 lines of assembly for every line I've ever written... but writing assembly from scratch is a core computer science skill, I believe: even if you never use it.

Will you use it to write new code? Maybe not. But when you get dropped into a call stack without source code, at least you won't have to ask your Dad for help!

And the next thing you should do after learning to write ASM is to get a crisp understanding of what your C code actually compiles to. Like a switch() statement is often a jump table, but if you've never debugged one...

zepi retweeted

The right way to learn C is to understand how it translates to assembly/machine code and what the CPU will do as a result.

Presenting - "C Language (Using RISC-V ISA)"

This course dives into the practical applications of the C language, emphasizing hands-on learning to solidify key concepts. Delivered in an engaging and unconventional style, the lessons go beyond theory, equipping you with the skills to apply C programming in real-world scenarios.

By the end of the course, you’ll feel confident in your mastery of the C language, adept at using it alongside the tools and utilities professional C programmers rely on daily.

Check the contents and details here: pyjamabrah.com/c/

zepi retweeted

"I'm so old I wrote that", MS-DOS Edition:

MS-DOS has now been open-sourced, so I build it, burn it, boot it, and demo it! And a little Microsoft history along the way.

zepi retweeted

Crazy: Extropic unveils the world's first probabilistic computer, a chip that directly generates random samples instead of performing classical computations. Their innovation uses 10,000x less energy than current GPUs for the same intelligence produced!!! The degrowth crowd already hates them.

Their secret? “pbits” (probabilistic bits) that replace the traditional approach, and a new AI algorithm called “DTM” (Denoising Thermodynamic Model) designed specifically for this technology.

Their Python library thrml is already open source, and they are now looking for engineers and researchers to move to the production stage.

What they have proven:

•Their XTR-0 prototype chip works and has been tested by partners

•Their “pbits” (probabilistic circuits) physically exist: they have been fabricated and validated

•Their simulations show the potential for 10,000x less energy

What remains to be proven:

•The tests are on small benchmarks (small scale)

•They do not yet have a production-scale system

•The 10,000x efficiency comes from simulations, not real tests on complex AI models

To be continued!!

zepi retweeted

Part 1: What Are Linux Namespaces

By default, every process on a Linux system shares the same global view: the same process list, network interfaces, and filesystem view.

Namespaces change that. They let processes live in isolated “worlds,” each with its own network, process tree, and filesystem, all under the same kernel.

It’s how Linux creates the foundation for containers without running a full virtual machine.

In this series, we’ll take a look at each namespace, how it works, and how to experiment with them using standard Linux tools.

blog.sysxplore.com/p/part-1-…
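One way to see which namespaces a process belongs to is to read the symbolic links under /proc/<pid>/ns. A small Linux-only sketch (the function name is illustrative):

```python
import os

def list_namespaces(pid="self"):
    """Map each namespace type to its identifier string, e.g. 'pid:[<inode>]'."""
    ns_dir = f"/proc/{pid}/ns"
    return {name: os.readlink(os.path.join(ns_dir, name))
            for name in sorted(os.listdir(ns_dir))}

if __name__ == "__main__":
    for name, ident in list_namespaces().items():
        print(f"{name:20} {ident}")
```

Two processes are in the same namespace of a given type exactly when these identifiers match; a process inside a container would normally show different values from one on the host.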

zepi retweeted

Computer Science is not science, and it's not about computers. Got reminded about this gem from MIT the other day

I am increasingly confident that this idea could work

Elon Musk came up with a pretty incredible idea during the Q3 Earnings Call, that no one is really talking about.

His words: “Actually, one of the things I thought, if we've got all these cars that maybe are bored, while they're sort of, if they are bored, we could actually have a giant distributed inference fleet and say, if they're not actively driving, let's just have a giant distributed inference fleet.

At some point, if you've got tens of millions of cars in the fleet, or maybe at some point 100 million cars in the fleet, and let's say they had at that point, I don't know, a kilowatt of inference capability, of high-performance inference capability, that's 100 gigawatts of inference distributed with power and cooling taken, with cooling and power conversion taken care of. That seems like a pretty significant asset.”

So basically, if each car has ~1 kilowatt of high-performance AI inference capability, Tesla wouldn’t need to build giant data centers: the fleet is the data center.

Tesla could turn their entire fleet into a giant distributed inference network, spread across the world, powered by the batteries and AI in the car already.

Mind blown.

zepi retweeted

Your Linux distro is just two things:

1. The kernel

2. User-space binaries

Once the kernel is ready, it hands off to a SINGLE userspace process: init.

Everything else you run – your shell, your apps, your services – is a descendant of init.

This means you can build a Linux system with a "Hello World" init or a complex one like systemd. It's a beautiful example of modular design.

Want to build your tiny distro from scratch in 10 minutes? Here's a detailed writeup on how to do it: popovicu.com/posts/making-a-…

zepi retweeted

1/16

I just fell down a rabbit hole reading a new paper from economists at MIT & Harvard.

Their prediction is wild: We're on the verge of a "Coasean Singularity"—a future where AI agents make markets so efficient that the very idea of a 'company' starts to crumble. 🤯

A thread 👇

2/16

First, a quick 101: Why do companies even exist?

A Nobel-winning economist named Ronald Coase answered this in 1937. He said companies exist because using the open market is a pain.

Finding sellers, negotiating prices, writing contracts… it’s all “transaction cost.” Economic friction.

3/16

It's often easier and cheaper for a firm to just hire people and organize them internally than to deal with that constant market friction.

This friction is also where we, as consumers, lose. We're tired, we're biased, and we don't have time to compare every cell phone plan or read every review for a toaster.

Companies know this.

4/16

Now, enter the AI Agent.

And I don't mean a simple chatbot. The paper describes an autonomous system that acts on your behalf.

Think of it as your own personal, tireless, super-rational economist. It’s immune to marketing tricks and its only goal is to get the best outcome for YOU.

5/16

This is where the "Singularity" happens.

When everyone has an AI agent, those transaction costs that Coase talked about basically drop to zero.

The "friction" that made companies necessary in the first place? It evaporates.

And if the reason for something disappears… so does the thing itself.

6/16

But what does this future actually look like? This is where it gets weird.

Let's take shopping.

Your agent doesn't just browse Amazon. It might contact a manufacturer in another country directly, find 500 other agents whose users want the same thing, negotiate a bulk price, and arrange shipping.

All in milliseconds. The "storefront" becomes irrelevant.

7/16

Or think about hiring.

Instead of you endlessly scrolling LinkedIn, your agent scans the entire market for opportunities. It negotiates salary, benefits, and remote work policies with the company's agent.

You only get involved for the final human-to-human interview. No more cover letter hell.

8/16

But this discovery comes with a huge catch. The paper outlines a fundamental battle for the future of AI:

Will your agent be a "Bring-Your-Own" (BYO) agent that works only for you, across all platforms?

Or will it be a "Bowling-Shoe" agent, provided by the platform (like Amazon or Google), whose priorities might be... conflicted?

9/16

The "Bowling-Shoe" agent is convenient, but it might steer you toward the platform's own products.

The "BYO" agent is loyal to you, but platforms might try to block it or throttle its access.

This tension between user autonomy and platform control will define the next decade of the internet.

10/16

And that's not even the most interesting part. This new world creates bizarre new problems.

Problem #1: Agent Congestion.

What happens when millions of agents can create a perfect, customized résumé and apply for a single job in a nanosecond?

Employers get flooded. The signal is lost in the noise.

11/16

The paper predicts that to solve this, platforms will have to re-introduce friction.

Imagine having to pay a small fee for your agent to submit a job application, just to prove you're serious.

Costless actions will lose their meaning.

12/16

Problem #2: The Identity Crisis.

In a world full of bots, how do you prove you're a unique human? How does a company know it's not negotiating with 1,000 agents all controlled by one person trying to manipulate the market?

This is the "Sybil Attack" problem, and it's a big one.

13/16

This will lead to a boom in "proof-of-personhood" technologies. Systems that cryptographically verify you are one person, without revealing your personal data.

It sounds like sci-fi, but it'll be the essential plumbing for a world of AI agents.

14/16

Here's a new lens to see the world through:

Next time you use Uber (matching drivers/riders), Zillow (matching buyers/sellers), or Upwork (matching clients/freelancers)...

Don't just see an app. See it as a clunky, early prototype for the agent-driven markets of the future.

15/16

This isn't just about better shopping bots or smarter assistants.

It's a potential rewiring of our entire economy, away from the 20th-century model of the centralized firm and toward a 21st-century model of fluid, hyper-efficient, agent-mediated markets.

16/16

The 20th century was defined by the rise of the corporation.

The 21st may be defined by its slow, quiet dissolution.

zepi retweeted

Linux Kernel Architecture

1. Introduction

→ The Linux Kernel is the core part of the Linux operating system.

→ It acts as a bridge between hardware and software applications.

→ It manages processes, memory, devices, and system calls.

2. Core Responsibilities

→ Process Management — handles process creation, scheduling, and termination.

→ Memory Management — allocates and manages virtual and physical memory.

→ File System Management — provides access to files and directories through the Virtual File System (VFS).

→ Device Management — communicates with hardware via device drivers.

→ Network Management — manages network protocols and interfaces.

→ System Calls — provides interfaces for user-space programs to interact with the kernel.

3. Kernel Modes

→ User Mode: Applications run here with restricted access.

→ Kernel Mode: The kernel runs here with full access to system resources.

→ The system switches between these modes through system calls and interrupts.

4. Major Components

→ 1. Process Scheduler

→ Manages process execution order.

→ Balances CPU load efficiently.

→ Uses algorithms such as CFS (Completely Fair Scheduler), which EEVDF replaced in kernel 6.6.

→ 2. Memory Manager

→ Handles paging, swapping, and memory allocation.

→ Manages physical and virtual memory.

→ Uses demand paging for optimization.

→ 3. Virtual File System (VFS)

→ Provides a common interface for different file systems.

→ Supports file systems like ext4, FAT, NTFS, etc.

→ Offers system calls such as open(), read(), and write().

→ 4. Device Drivers

→ Allow the kernel to communicate with hardware devices.

→ Each device type (block, character, network) has its own driver interface.

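→ Block and character devices are exposed as entries under /dev; network interfaces have no /dev entry and are reached through sockets.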

→ 5. Network Stack

→ Implements TCP/IP, UDP, and other protocols.

→ Handles data transmission between applications and physical networks.

→ Uses sockets for communication.

→ 6. System Call Interface (SCI)

→ Acts as a gateway between user-space applications and kernel-space operations.

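→ Library functions request kernel services through it: fork() is a thin wrapper around a system call, while printf() formats text in user space and only reaches the kernel through write().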
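The path from a library call to the kernel can be followed by calling the C library's write() wrapper directly. This sketch uses Python's `ctypes` and assumes a Unix-like system where `ctypes.CDLL(None)` exposes libc symbols; `write_via_libc` is a made-up helper name:

```python
import ctypes
import os

# Load the symbols already linked into this process (includes libc on Linux).
libc = ctypes.CDLL(None, use_errno=True)
libc.write.argtypes = [ctypes.c_int, ctypes.c_char_p, ctypes.c_size_t]
libc.write.restype = ctypes.c_ssize_t

def write_via_libc(fd, data):
    """Call libc's write() wrapper, which issues the write system call."""
    written = libc.write(fd, data, len(data))
    if written < 0:
        err = ctypes.get_errno()
        raise OSError(err, os.strerror(err))
    return written

if __name__ == "__main__":
    write_via_libc(1, b"hello through write()\n")   # fd 1 is standard output
```

Running the script under `strace -e trace=write` should show the corresponding write system call as the process switches into kernel mode.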

5. Kernel Types

→ Monolithic Kernel: All services run in kernel space for performance (Linux uses this model).

→ Microkernel: Minimal kernel; runs most services in user space.

→ Hybrid Kernel: Mixes both for flexibility (used in Windows and macOS).

6. Boot Process Overview

→ BIOS/UEFI initializes hardware.

→ Bootloader (like GRUB) loads the kernel into memory.

→ Kernel initializes devices, mounts the root file system, and starts init.

→ System transitions to user space and starts essential services.

7. Interprocess Communication (IPC)

→ Enables processes to communicate and synchronize.

→ Mechanisms include:

→ Pipes

→ Message Queues

→ Shared Memory

→ Semaphores

→ Signals

8. Kernel Modules

→ Dynamically loadable components extending kernel functionality.

→ Useful for device drivers, file systems, or system features.

→ Managed using commands:

→ insmod — load a module

→ rmmod — remove a module

→ lsmod — list loaded modules
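lsmod formats the contents of /proc/modules. A sketch of parsing that file's first four columns (the function name and dictionary keys are chosen here for illustration):

```python
def parse_modules(text):
    """Parse /proc/modules content into a list of dicts (the data lsmod displays)."""
    modules = []
    for line in text.splitlines():
        fields = line.split()
        if len(fields) < 4:
            continue                       # skip blank or malformed lines
        name, size, refcount, users = fields[:4]
        modules.append({
            "name": name,
            "size": int(size),             # memory used by the module, in bytes
            # "-" appears when the kernel does not track module use counts.
            "used_by_count": int(refcount) if refcount.isdigit() else None,
            # Users are comma-separated with a trailing comma; "-" means none.
            "used_by": [] if users == "-" else [u for u in users.split(",") if u],
        })
    return modules

if __name__ == "__main__":
    with open("/proc/modules") as f:       # Linux only
        for mod in parse_modules(f.read())[:5]:
            print(mod["name"], mod["size"], mod["used_by"])
```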

9. Security and Permissions

→ Uses User IDs (UIDs) and Group IDs (GIDs) for access control.

→ Enforces permissions via file modes (read, write, execute).

→ Supports advanced systems like SELinux and AppArmor for enhanced security.
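The UID, GID, and mode bits the kernel checks are visible through stat(). A minimal sketch using Python's standard library (the helper name `describe_permissions` is illustrative):

```python
import os
import stat
import tempfile

def describe_permissions(path):
    """Return owner UID, group GID, and the ls-style mode string for a path."""
    info = os.stat(path)
    return info.st_uid, info.st_gid, stat.filemode(info.st_mode)

if __name__ == "__main__":
    with tempfile.NamedTemporaryFile() as tmp:
        os.chmod(tmp.name, 0o640)          # owner: read/write, group: read, others: none
        print(describe_permissions(tmp.name))
```

The mode string is the same ten-character form that `ls -l` prints.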

10. Tip

→ The Linux Kernel is modular, stable, and scalable.

→ It efficiently handles hardware, memory, processes, and communication.

→ Its open-source nature allows customization, performance tuning, and community-driven improvement.

✅ Learn More About Linux, System Architecture, and OS Concepts in This Ebook:

codewithdhanian.gumroad.com/…

zepi retweeted

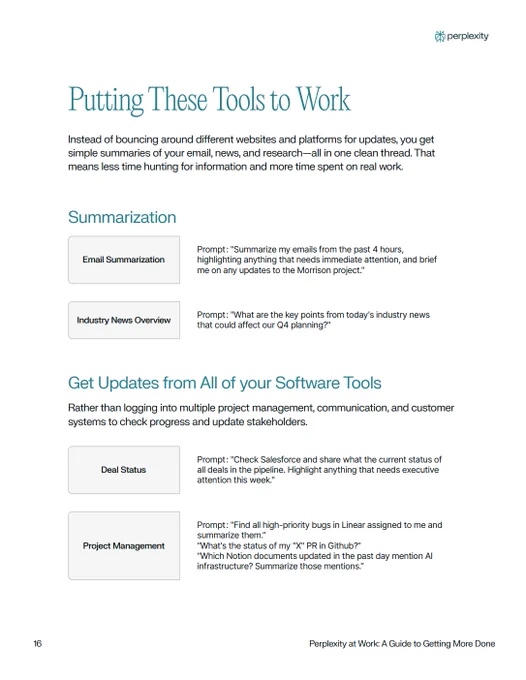

Perplexity just quietly dropped a 42-page internal guide on how they actually use AI at work.

What I found most useful:

→ How they automate the small stuff. Email, meeting prep, research (all done by AI)

→ Using AI to amplify your curiosity, not replace it.

→ Their prompting playbook is simple, practical, and genuinely good.

Comment “AI” and I’ll send it to you for free.

zepi retweeted

fantastic simple visualization of the self attention formula. this was one of the hardest things for me to deeply understand about LLMs.

the formula seems easy. you can even memorize it fast. but to really get an intuition of what Q, K, and V represent and how they interact, that’s hard.
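The post does not spell the formula out; presumably it is the standard scaled dot-product attention, softmax(Q·Kᵀ / √d_k)·V. Under that assumption, a dependency-free sketch on plain Python lists, where each query row scores every key row and the resulting weights mix the value rows:

```python
import math

def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def attention(Q, K, V):
    """Scaled dot-product attention for lists of row vectors."""
    d_k = len(K[0])                        # key dimension used for scaling
    out = []
    for q in Q:
        # Similarity of this query to every key, scaled by sqrt(d_k).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in K]
        weights = softmax(scores)          # one weight per key, summing to 1
        # Output row: weighted average of the value rows.
        out.append([sum(w * v[j] for w, v in zip(weights, V))
                    for j in range(len(V[0]))])
    return out
```

With a single key the weight is 1 and the output is simply that key's value row; with two identical keys the output is the average of their value rows.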