Simplifying LLMs, AI Agents, RAG, and Machine Learning for you! • Co-founder @dailydoseofds_• BITS Pilani • 3 Patents • ex-AI Engineer @ LightningAI

Learn AI Engineering 👉

Joined July 2012

- Tweets 19,634

- Following 461

- Followers 233,825

- Likes 21,498

Pinned Tweet

My lecture at MIT!✨

From Physics to Linear Algebra & Machine learning, I have learned a lot from MIT!

Yesterday, I had the honour of delivering a guest lecture on The state of AI Engineering, exploring:

- Prompt Engineering

- Retrieval Augmented Generation.

- Fine-Tuning Large Language Models.

- And, how LLMs are bringing a paradigm shift

Thank you @RoyShilkrot for the invitation! 🙏

Grateful for the opportunity to engage with such brilliant minds!

If you are interested AI Engineering, I write a free weekly newsletter → @ML_Spring

Tech Stack:

- @UnslothAI to run and fine-tune the model

- @LightningAI environments for hosting and deployment

Find all code and everything you need here: lightning.ai/lightning-purch…

Fine-tune DeepSeek-OCR on your own language!

(100% local)

DeepSeek-OCR is a 3B-parameter vision model that achieves 97% precision while using 10× fewer vision tokens than text-based LLMs.

It handles tables, papers, and handwriting without killing your GPU or budget.

Why it matters:

Most vision models treat documents as massive sequences of tokens, making long-context processing expensive and slow.

DeepSeek-OCR uses context optical compression to convert 2D layouts into vision tokens, enabling efficient processing of complex documents.

The best part?

You can easily fine-tune it for your specific use case on a single GPU.

I used Unsloth to run this experiment on Persian text and saw an 88.26% improvement in character error rate.

↳ Base model: 149% character error rate (CER)

↳ Fine-tuned model: 60% CER (57% more accurate)

↳ Training time: 60 steps on a single GPU

Persian was just the test case. You can swap in your own dataset for any language, document type, or specific domain you're working with.

I've shared the complete guide in the next tweet - all the code, notebooks, and environment setup ready to run with a single click.

Everything is 100% open-source!

If you found it insightful, reshare with your network. Find me → @akshay_pachaar ✔️

For more insights and tutorials on LLMs, AI Agents, and Machine Learning!

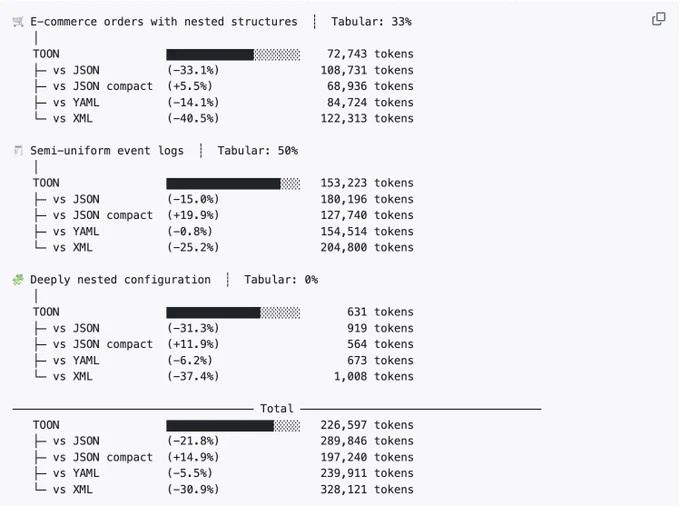

A simple trick cuts your LLM costs by 50%!

Just stop using JSON and use this instead:

TOON (Token-Oriented Object Notation) slashes your LLM token usage in half while keeping data perfectly readable.

Here's why it works:

TOON's sweet spot: uniform arrays with consistent fields per row. It merges YAML's indentation and CSV's tabular structure, optimized for minimal tokens.

Look at the example below.

JSON:

{

"𝘂𝘀𝗲𝗿𝘀": [

{ "𝗶𝗱": 𝟭, "𝗻𝗮𝗺𝗲": "𝗔𝗹𝗶𝗰𝗲", "𝗿𝗼𝗹𝗲": "𝗮𝗱𝗺𝗶𝗻" },

{ "𝗶𝗱": 𝟮, "𝗻𝗮𝗺𝗲": "𝗕𝗼𝗯", "𝗿𝗼𝗹𝗲": "𝘂𝘀𝗲𝗿" }

]

}

Toon:

𝘂𝘀𝗲𝗿𝘀[𝟮]{𝗶𝗱,𝗻𝗮𝗺𝗲,𝗿𝗼𝗹𝗲}:

𝟭,𝗔𝗹𝗶𝗰𝗲,𝗮𝗱𝗺𝗶𝗻

𝟮,𝗕𝗼𝗯,𝘂𝘀𝗲𝗿

It's obvious how few tokens are being used to represent the same information!

To summarise, here are the key features:

💸 30–60% fewer tokens than JSON

🔄 Borrows the best from YAML & CSV

🤿 Built-in validation with explicit lengths & fields

🍱 Minimal syntax (no redundant braces, brackets, etc.)

IMPORTANT!!

That said, for deeply nested or non-uniform data, JSON might be more efficient.

In the next tweet, I've shared some benchmark results demonstrating the effectiveness of this technique in reducing the number of tokens and improving retrieval accuracy with popular LLM providers.

Where do you think this could be effective in your existing workflows?

Find the relevant links in the next tweet!

Here are the benchmarks for token efficiency and retrieval accuracy as provided by the Toon team.

You can find the same information in their GitHub repo: github.com/toon-format/toon

A simple trick cuts your LLM costs by 50%!

Just stop using JSON and use this instead:

TOON (Token-Oriented Object Notation) slashes your LLM token usage in half while keeping data perfectly readable.

Here's why it works:

TOON's sweet spot: uniform arrays with consistent fields per row. It merges YAML's indentation and CSV's tabular structure, optimized for minimal tokens.

Look at the example below.

JSON:

{

"𝘂𝘀𝗲𝗿𝘀": [

{ "𝗶𝗱": 𝟭, "𝗻𝗮𝗺𝗲": "𝗔𝗹𝗶𝗰𝗲", "𝗿𝗼𝗹𝗲": "𝗮𝗱𝗺𝗶𝗻" },

{ "𝗶𝗱": 𝟮, "𝗻𝗮𝗺𝗲": "𝗕𝗼𝗯", "𝗿𝗼𝗹𝗲": "𝘂𝘀𝗲𝗿" }

]

}

Toon:

𝘂𝘀𝗲𝗿𝘀[𝟮]{𝗶𝗱,𝗻𝗮𝗺𝗲,𝗿𝗼𝗹𝗲}:

𝟭,𝗔𝗹𝗶𝗰𝗲,𝗮𝗱𝗺𝗶𝗻

𝟮,𝗕𝗼𝗯,𝘂𝘀𝗲𝗿

It's obvious how few tokens are being used to represent the same information!

To summarise, here are the key features:

💸 30–60% fewer tokens than JSON

🔄 Borrows the best from YAML & CSV

🤿 Built-in validation with explicit lengths & fields

🍱 Minimal syntax (no redundant braces, brackets, etc.)

IMPORTANT!!

That said, for deeply nested or non-uniform data, JSON might be more efficient.

In the next tweet, I've shared some benchmark results demonstrating the effectiveness of this technique in reducing the number of tokens and improving retrieval accuracy with popular LLM providers.

Where do you think this could be effective in your existing workflows?

Find the relevant links in the next tweet!

If you found it insightful, reshare with your network.

Find me → @akshay_pachaar ✔️

For more insights and tutorials on LLMs, AI Agents, and Machine Learning!

XBOW raised $117M to build AI hacking agents.

Now someone just open-sourced it for FREE.

Strix deploys autonomous AI agents that act like real hackers - they run your code dynamically, find vulnerabilities, and validate them through actual proof-of-concepts.

Why it matters:

The biggest problem with traditional security testing is that it doesn't keep up with development speed.

Strix solves this by integrating directly into your workflow:

↳ Run it in CI/CD to catch vulnerabilities before production

↳ Get real proof-of-concepts, not false positives from static analysis

↳ Test everything: injection attacks, access control, business logic flaws

The best part?

You don't need to be a security expert. Strix includes a complete hacker toolkit - HTTP proxy, browser automation, and Python runtime for exploit development.

It's like having a security team that works at the speed of your CI/CD pipeline.

The best part is that the tool runs locally in Docker containers, so your code never leaves your environment.

Getting started is simple:

- pipx install strix-agent

- Point it at your codebase (app, repo, or directory)

Everything is 100% open-source!

I've shared link to the GitHub repo in the replies!

If you found it insightful, reshare with your network.

Find me → @akshay_pachaar ✔️

For more insights and tutorials on LLMs, AI Agents, and Machine Learning!

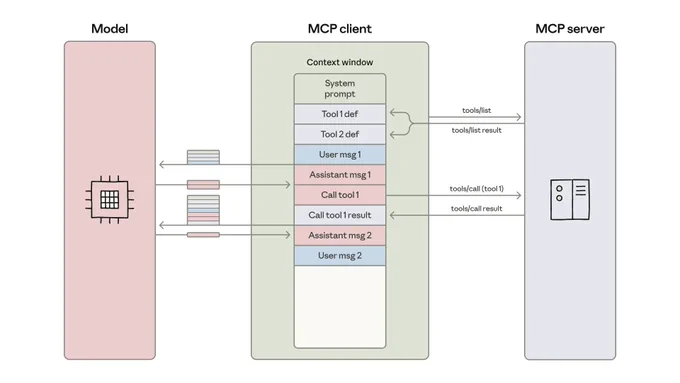

The blog: anthropic.com/engineering/co…

As usual, Anthropic just published another banger.

This one is on building efficient agents that handle more tools while using fewer tokens.

Agents scale better by writing code to call tools and the article explains how to use MCP to execute this code.

A must-read for AI devs!

Link to the Strix GitHub repo:

(don't forget to star 🌟)

github.com/usestrix/strix

XBOW raised $117M to build AI hacking agents.

Now someone just open-sourced it for FREE.

Strix deploys autonomous AI agents that act like real hackers - they run your code dynamically, find vulnerabilities, and validate them through actual proof-of-concepts.

Why it matters:

The biggest problem with traditional security testing is that it doesn't keep up with development speed.

Strix solves this by integrating directly into your workflow:

↳ Run it in CI/CD to catch vulnerabilities before production

↳ Get real proof-of-concepts, not false positives from static analysis

↳ Test everything: injection attacks, access control, business logic flaws

The best part?

You don't need to be a security expert. Strix includes a complete hacker toolkit - HTTP proxy, browser automation, and Python runtime for exploit development.

It's like having a security team that works at the speed of your CI/CD pipeline.

The best part is that the tool runs locally in Docker containers, so your code never leaves your environment.

Getting started is simple:

- pipx install strix-agent

- Point it at your codebase (app, repo, or directory)

Everything is 100% open-source!

I've shared link to the GitHub repo in the replies!

I recently compared Parlant and LangGraph.

(the original post is quoted below).

One of the most frequent questions readers asked was: “Isn’t it possible to create a fanout graph in LangGraph that performs parallel guideline matching, like Parlant does?”

Yes, but it misses the point. While you can create any type of execution model with a generic graph, it doesn’t actually help you implement the complexities of what a good guideline-matching graph actually does.

Guideline matching goes far beyond a simple fanout graph or parallel LLM execution.

Parlant actually has a detailed post explaining what production-grade guideline matching truly is. You’ll see why it requires more than just a fanout code snippet.

This is actually one of the deepest context engineering case studies that I’ve seen. Worth reading!

I’ve shared the link in replies!

Every LangGraph user I know is making the same mistake!

They all use the popular supervisor pattern to build conversational agents.

The pattern defines a supervisor agent that analyzes incoming queries and routes them to specialized sub-agents. Each sub-agent handles a specific domain (returns, billing, technical support) with its own system prompt.

This works beautifully when there's a clear separation of concerns.

The problem is that it always selects just one route.

For instance, if a customer asks: "I need to return this laptop. Also, what's your warranty on replacements?"

The supervisor routes this to the Returns Agent, which knows returns perfectly but has no idea about warranties.

So it either ignores the warranty question, admits it can't help, or even worse, hallucinates an answer. None of these options are desired.

This gets worse as conversations progress because real users don't think categorically. They mix topics, jump between contexts, and still expect the agent to keep up.

This isn't a bug you can fix since this is fundamentally how router patterns work.

Now, let's see how we can solve this problem.

Instead of routing between Agents, first, define some Guidelines.

Think of Guidelines as modular pieces of instructions like this:

```

agent.create_guideline(

condition="Customer asks about refunds",

action="Check order status first to see if eligible",

tools=[check_order_status],

)

```

Each guideline has two parts:

- Condition: When it gets activated?

- Action: What should the agent do?

Based on the user's query, relevant guidelines are dynamically loaded into the Agent's context.

For instance, when a customer asks about returns AND warranties, both guidelines get loaded into context simultaneously, enabling coherent responses across multiple topics without artificial separation.

This approach is actually implemented in Parlant - a recently trending open-source framework (15k+ stars).

Instead of routing between specialized agents, Parlant uses dynamic guideline matching. At each turn, it evaluates ALL your guidelines and loads only the relevant ones, maintaining coherent flow across different topics.

You can see the full implementation and try it yourself.

That said, LangGraph and Parlant are not competitors.

LangGraph is excellent for workflow automation where you need precise control over execution flow. Parlant is designed for free-form conversation where users don't follow scripts.

The best part? They work together beautifully. LangGraph can handle complex retrieval workflows inside Parlant tools, giving you conversational coherence from Parlant and powerful orchestration from LangGraph.

I have shared the repo in the replies!

If you found it insightful, reshare with your network.

Find me → @akshay_pachaar ✔️

For more insights and tutorials on LLMs, AI Agents, and Machine Learning!

RAG vs. CAG, clearly explained!

RAG is great, but it has a major problem:

Every query hits the vector database. Even for static information that hasn't changed in months.

This is expensive, slow, and unnecessary.

Cache-Augmented Generation (CAG) addresses this issue by enabling the model to "remember" static information directly in its key-value (KV) memory.

Even better? You can combine RAG and CAG for the best of both worlds.

Here's how it works:

RAG + CAG splits your knowledge into two layers:

↳ Static data (policies, documentation) gets cached once in the model's KV memory

↳ Dynamic data (recent updates, live documents) gets fetched via retrieval

The result? Faster inference, lower costs, less redundancy.

The trick is being selective about what you cache.

Only cache static, high-value knowledge that rarely changes. If you cache everything, you'll hit context limits. Separating "cold" (cacheable) and "hot" (retrievable) data keeps this system reliable.

You can start today. OpenAI and Anthropic already support prompt caching in their APIs.

I have shared a link to OpenAI's prompt caching guide in the replies.

Have you tried CAG in production yet?

RAG vs. CAG, clearly explained!

RAG is great, but it has a major problem:

Every query hits the vector database. Even for static information that hasn't changed in months.

This is expensive, slow, and unnecessary.

Cache-Augmented Generation (CAG) addresses this issue by enabling the model to "remember" static information directly in its key-value (KV) memory.

Even better? You can combine RAG and CAG for the best of both worlds.

Here's how it works:

RAG + CAG splits your knowledge into two layers:

↳ Static data (policies, documentation) gets cached once in the model's KV memory

↳ Dynamic data (recent updates, live documents) gets fetched via retrieval

The result? Faster inference, lower costs, less redundancy.

The trick is being selective about what you cache.

Only cache static, high-value knowledge that rarely changes. If you cache everything, you'll hit context limits. Separating "cold" (cacheable) and "hot" (retrievable) data keeps this system reliable.

You can start today. OpenAI and Anthropic already support prompt caching in their APIs.

I have shared a link to OpenAI's prompt caching guide in the replies.

Have you tried CAG in production yet?

The MCP moment for Reinforcement learning!

Mata just released OpenEnv, which standardizes how agents train with reinforcement learning.

It gives every RL system a shared, modular world. A containerized environment built on Gymnasium-inspired APIs.

100% open-source.

Meta just changed the RL game!

The hardest part of reinforcement learning isn't training.

It's managing the environment: the virtual world where your agent learns by trial and error.

With no standard way to build these worlds, each project starts from scratch with new APIs, new rules, new feedback loops.

The result? Agents that can't move across tasks, and researchers spending more time wiring environments than improving behavior.

This is exactly what PyTorch OpenEnv solves. Think of it as the MCP moment for RL training.

OpenEnv standardizes how agents train with reinforcement learning. It gives every RL system a shared, modular world. A containerized environment built on Gymnasium-inspired APIs that speak a common language:

- reset() → start a new episode

- step(action) → take an action and get feedback

- state() → observe progress

Each environment runs in isolation over HTTP: simple, type-safe, and reproducible.

Here's the flow in practice:

- An agent connects through the OpenEnv client

- The client routes actions to a FastAPI environment running in Docker

- The environment processes, updates state, and returns feedback

- The loop continues

Same pattern, whether it's a toy game, a coding environment, or any custom world you want your agents to interact with.

Just like MCP standardized tool calling for agents, OpenEnv standardizes how agents interact with RL training environments.

The best part everything is 100% open-source!

In the replies, I've shared a reproducible example that uses UnslothAI to fine-tune GPT-OSS 20B to play the game 2048, plus the link to Meta's open-source OpenEnv repo.