This paper offers a sharp insight into the effect of AI on medical care.

Defensive AI use can trap clinicians in a prisoner's dilemma, where choices that reduce individual blame end up hurting patient care.

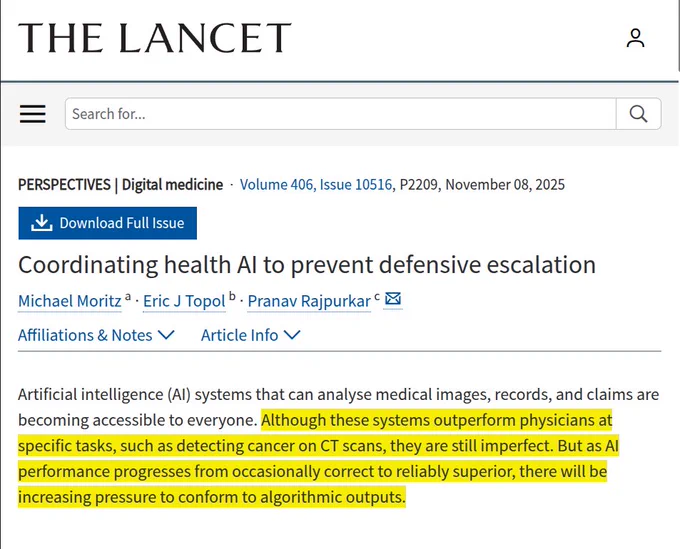

The paper says AI tools are already better than many doctors at some narrow tasks, like spotting cancer on a CT scan, but they still make mistakes.

As these tools keep improving and reach the point where they are right most of the time, people in the system will feel pressure to follow whatever the AI says.

A doctor who disagrees with the AI may worry about being blamed later if the AI turns out to be right, so even when the doctor has good reason, overriding it will feel risky.

Over time, that pressure can push everyone to default to the AI, not because it is perfect, but because going against it looks dangerous for careers and invites lawsuits.

Big gaps remain: shared coordination tools are rare, liability is unclear when the AI and a clinician disagree, and without policy intervention the individually rational move is to keep escalating AI use.

The fix is system-level coordination that rewards patient outcomes, protects good-faith clinical judgment when it disagrees with the AI, and requires the tools to explain their reasoning transparently.

Nov 8, 2025 · 12:18 AM UTC