The fastest way to ship reliable AI apps - Evaluation, Experimentation, and Observability Platform

SF & NYC

Joined June 2021

- Tweets 1,223

- Following 455

- Followers 1,418

- Likes 544

Pinned Tweet

Multi-agent systems offer incredible potential and unprecedented risks. How do you solve for observability, failure mode analysis, and guardrailing in the era of agents?

Today, we’re announcing our Agent Reliability platform to observe, evaluate, guardrail, and improve agents at scale.

You can get started with the complete platform for trustworthy agentic AI today for free, and here’s how we’re solving some of the biggest challenges in agent reliability:

- Observability redesigned for agents

Trace views collapse under complex workflows, so we created the Graph View, Timeline View, and Conversation View to offer rich, intuitive visualizations of agent decisions, tool calls, and conversation flows. This multi-dimensional approach enables teams to pinpoint exactly where and why agents deviate or fail.

- Automated Failure Mode Analysis with our new Insights Engine

Our Insights Engine ingests your logs, metrics, and agent code to automatically surface nuanced failure modes and their root causes. But knowing the problem is not enough; you need to know how to fix it.

Insights Engine delivers actionable fixes and can even apply them automatically. With adaptive learning, your insights become smarter and more relevant as your agents evolve.

- Evaluating Agents Across Multiple Dimensions

Agentic systems interact across complex pathways, and evaluating their performance requires new metrics that reflect this increasing complexity. To deliver comprehensive agentic measurements, we’ve added more out-of-the-box agent metrics, including flow adherence, agent flow, and agent efficiency.

For specialized domains and unique workflows, custom metrics powered by our new Luna-2 small language models can be rapidly designed and fine-tuned for your specific use case.

- Real-Time Guardrails Powered by Luna-2

As AI agents become more autonomous and complex, failures like hallucinations or unsafe actions increase dramatically. Without real-time guardrails, these errors will hurt your user experience and brand reputation.

Our Luna-2 family of small language models is purpose-built to provide low-latency, cost-effective guardrails that actively stop agent errors before they happen. With support for out-of-the-box and custom metrics, Luna-2 enables enterprises to enforce safety, compliance, and reliability at scale.

Enterprises running hundreds of agents and processing hundreds of millions of queries daily already rely on Galileo’s Agent Reliability platform to protect their users, safeguard brand trust, and accelerate innovation.

Agent Reliability is available starting today. Try it for free and experience the new standard in AI reliability.

Learn more below 👇
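To make the real-time guardrail idea above concrete, here is a minimal sketch of the general pattern: score a proposed agent output before it is returned, and substitute a fallback when the score crosses a threshold. It does not use Galileo's SDK or Luna-2. The names `toy_risk_score`, `guard`, and `GuardrailResult`, the flagged terms, and the threshold are all hypothetical, and the keyword scorer is only a stand-in for a real evaluation model.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class GuardrailResult:
    allowed: bool   # True when the output passed the check
    score: float    # risk score produced by the scorer
    output: str     # original text if allowed, fallback text otherwise


def toy_risk_score(text: str) -> float:
    """Hypothetical stand-in scorer: fraction of flagged terms found in the text."""
    flagged = ("password", "ssn", "wire transfer")
    lowered = text.lower()
    hits = sum(term in lowered for term in flagged)
    return hits / len(flagged)


def guard(
    text: str,
    scorer: Callable[[str], float] = toy_risk_score,
    threshold: float = 0.3,  # assumed cutoff, chosen only for illustration
    fallback: str = "I can't help with that request.",
) -> GuardrailResult:
    """Check the text before it reaches the user; block it if the score is too high."""
    score = scorer(text)
    if score >= threshold:
        return GuardrailResult(allowed=False, score=score, output=fallback)
    return GuardrailResult(allowed=True, score=score, output=text)


if __name__ == "__main__":
    print(guard("Your order shipped yesterday."))
    print(guard("Please reply with your password to continue."))
```

In a production setup the scorer would presumably be a low-latency model call rather than a keyword match, which is why it is passed in as a parameter here.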

Galileo retweeted

👟I joined the field engineering team at @rungalileo to help bring AI applications across the last mile to production. Here's a demo of an SDR outreach assistant where I use Galileo's context engineering and observability features to improve the app.

Tomorrow, our Co-founder and CPO @atinsanyal joins the @rhodes_trust at the University of Oxford for a panel on “Rebuilding Trust in the Digital Age,” exploring misinformation, privacy, and ethical AI alongside:

→ Lian Ryan-Hume (T&S Policy Lead at TikTok)

→ Helen Xiao He Zhang (Office of Eric Schmidt & Intrigue Media)

→ John Dupree (Opus Faveo Innovation Development)

Together, the panel will discuss what ethical AI development looks like in practice, examining who should be held accountable when AI systems cause harm or reinforce bias, and exploring how we can rebuild public trust in digital systems.

📅 November 8, 11:45 AM London Time

➡️ Oxford and online (link below)

Galileo retweeted

@daytonaio US Events Calendar | October 2025

This month, we’re connecting with builders, hackers, and innovators shaping the future of AI and developer tools. ⚡

📍 San Francisco 🌉

AI Builders — November 12

HackSprint — November 15

📍 New York City 🗽

HackSprint — November 18

A huge thank-you to our event partners: @AnthropicAI, @getsentry, @rungalileo, @browser_use, @coderabbitai, @elevenlabsio, @brexHQ, @TigrisData, @awscloud

📎 Registration links are in the comments.

🗽 If you’re an AI Builder in New York, you won’t want to miss the first @daytonaio HackSprint in NYC coming up on November 18th 🔜

We’re partnering with Daytona, @AnthropicAI, @elevenlabsio, @brexHQ, @coderabbitai, and @TigrisData to host an intense one-day sprint where you’ll have six hours to build reliable agents from scratch.

🏆 The total prize pool is over $30,000

✨ Every participant receives $100 in Daytona credits, $50 in Claude API credits, and 3 months of the ElevenLabs Creator tier for free

Plus, we'll be giving away prizes for the best uses of Galileo:

🥇: $300 Visa gift card + 60K Traces

🥈: $200 Visa gift card + 40K Traces

🥉: $100 Visa gift card + 30K Traces

Your judges include:

– @JukicVedran, Co-founder & CTO at Daytona

– Avi Kumar, Solution Engineering at Galileo

– @bigal123, Customer Success Engineer at Galileo

– Anitej Biradar, Forward Deployed Engineer at ElevenLabs

– Sarah Han, Forward Deployed Engineer at ElevenLabs

– Raouf Chebri, Developer Relations at Tigris Data

– Joshua Inoa, Engineering Manager at Brex

– @tarunipaleru, Founding PM at @TeamHathora

📅 You won’t want to miss this, register here: luma.com/tq1o914r

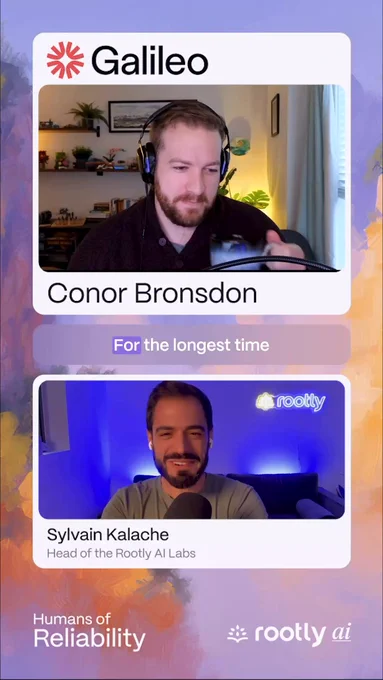

Monitoring AI applications for quality is no easy task. @ConorBronsdon (@rungalileo) introduces Evaluation Driven Development (EvDD): a framework for ensuring your model is set up for success.

💬 "Enterprises are able to deploy these applications almost 10x faster than they would have been able to without Galileo. The @cloudera and Galileo partnership, the value we bring is so synergistic and complementary." - Soumya Mohan, Galileo's Head of Product

We’re proud to be part of Cloudera’s Enterprise AI Ecosystem, delivering the observability layer that production AI demands.

Cloudera brings the data foundation, private AI models, and agent development tools.

We ensure enterprises can test, evaluate, monitor, and protect those systems at scale.

Our integration, along with Cloudera’s other partners, creates a closed loop for trusted AI deployment. Watch Soumya's full explanation in the video below 👇

Galileo retweeted

A new 165-page guide, Mastering Multi-Agent Systems, demonstrates when multi-agent systems add value, how to design them efficiently, and how to build reliable systems that work in production.

Learn how to select the right multi-agent architecture for your use case here: galileo.ai/mastering-multi-a…

Galileo retweeted

Still buzzing from our first @daytonaio HackSprint in SF! 🚀 Big shoutout to @AnthropicAI, @rungalileo, @browser_use & @WorkOS — and everyone who built, judged, or supported!

Congrats to our winning teams! 🏆

📽️Aftermovie by @itsajchan⬇️

Last month's inaugural @daytonaio HackSprint was electric, and we're doing it again in SF on November 15th with Daytona, @AnthropicAI, @getsentry, @coderabbitai, and @browser_use 💥

Last month, we saw biotech research connectors, voice-guided AI trainers, and treasury simulation agents, all built from scratch in six hours. We can’t wait to see what the community builds this time. 👀

The prize pool is valued at over $40,000, and every participant will receive $50 in Claude API credits from Anthropic, $100 in Daytona credits, and $50 in Browser Use credits. Plus, our Engineering Lead, @nmparanjape, will be judging and awarding prizes for the best use of Galileo 🏆

Now is your chance to tackle the challenge and build your best agents with sharp reasoning, independent decision-making, and safe integrations. Register here: luma.com/bh7auv0t

Learn how to select the right multi-agent architecture for your use case here: galileo.ai/mastering-multi-a…

How do you know which multi-agent architecture is right for your use case? Here are three questions to help you determine your architecture:

→ Do you need perfectly synchronized consistency, or can your agents work with slightly stale data and sync up periodically without breaking functionality?

→ Can your system afford to stop if one component fails, or do your tasks require careful sequencing?

→ Are you working with 3-5 agents, or will your system need to scale to hundreds of agents?

Choosing the wrong multi-agent architecture can cost months of debugging coordination failures.

Learn our decision framework for selecting the right architecture in chapter 3 of our new eBook, Mastering Multi-Agent Systems, below 👇

Galileo retweeted

🚀 Daytona HackSprint NYC — Nov 18!

1 day before kick off of the @aiDotEngineer Code Summit (we’re a Gold Sponsor)

$30K+ in prizes. Build + demo real AI agents in 1 day.

With: @AnthropicAI, @rungalileo, @elevenlabsio, @coderabbitai, @TigrisData & @brexHQ.

Event details & RSVP in comments.

@swyx, @ivanburazin, @JukicVedran, @troispio, @CipcicMarijan

Learn whether multi-agent systems are the right choice for your use case in our comprehensive guide here: galileo.ai/mastering-multi-a…

Multi-agent systems can handle more complex tasks, but are they worth the orchestration overhead, and how can they be made reliable in production?

We just published a new 165-page guide, Mastering Multi-Agent Systems, which demonstrates when multi-agent systems add value, how to design them efficiently, and how to build reliable systems that work in production.

Inside, you'll find:

1️⃣ The core advantages of multi-agent systems, from specialization to fault tolerance, and when these benefits justify the added complexity.

2️⃣ A decision framework to determine if your project truly needs multiple agents, including five critical questions to ask before building distributed systems.

3️⃣ Four primary architectures (centralized, decentralized, hierarchical, and hybrid) with practical guidance on choosing the right structure for your use case.

4️⃣ Context engineering strategies for multi-agent systems, including the difference between memory and context, plus four common failure modes and how to fix them.

5️⃣ A complete LangGraph implementation example, from setup to production monitoring, showing how to build, test, and continuously improve a real customer service multi-agent system.

Download your copy below and learn how to master multi-agent systems 👇

Galileo retweeted

Listen to my conversation with @YashSheth46, COO of @rungalileo, on

Spotify: bit.ly/3v1R0Tu

Apple: bit.ly/4bTCwpD

YouTube: bit.ly/3uXthnv

#AI #MachineLearning #LLM #AIObservability #AIEvaluation #AIInfrastructure

Traditional observability breaks in the agentic era. Agent failures don't show up with standard monitoring solutions and require a fundamentally different approach to diagnose.

Tomorrow at @_odsc AI West, our CTO and Co-founder, @atinsanyal, will unpack 'The New GenAI Reliability Playbook' and introduce a modern observability framework purpose-built for agentic systems. You'll learn how to:

✅ Instrument the agent loop to track tool quality, error rates, and latency across complex workflows

✅ Catch failure modes early with metrics that actually matter for agentic systems

✅ Diagnose drift and brittleness through targeted telemetry that traces root causes

✅ Build continuous improvement pipelines with minimal lift

If you're at ODSC AI West, don't miss this session. Register in the comments below 👇
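As a rough illustration of the first point in the list above, instrumenting the agent loop, here is a minimal sketch that wraps each tool call to record call counts, error counts, and latency. It is not the framework from the talk and does not use Galileo's SDK. `TOOL_STATS`, `instrumented`, `error_rate`, and the `lookup_order` tool are hypothetical names, and the in-memory dictionary stands in for whatever telemetry backend a real system would export to.

```python
import time
from collections import defaultdict
from functools import wraps

# Hypothetical in-memory store keyed by tool name; a real system would
# export these measurements to a telemetry backend instead.
TOOL_STATS = defaultdict(lambda: {"calls": 0, "errors": 0, "total_latency_s": 0.0})


def instrumented(tool_name):
    """Decorator that records calls, errors, and latency for one tool."""
    def decorator(fn):
        @wraps(fn)
        def wrapper(*args, **kwargs):
            stats = TOOL_STATS[tool_name]
            stats["calls"] += 1
            start = time.perf_counter()
            try:
                return fn(*args, **kwargs)
            except Exception:
                stats["errors"] += 1
                raise  # re-raise so the agent loop still sees the failure
            finally:
                stats["total_latency_s"] += time.perf_counter() - start
        return wrapper
    return decorator


def error_rate(tool_name):
    """Fraction of calls to the tool that raised an exception."""
    stats = TOOL_STATS[tool_name]
    return stats["errors"] / stats["calls"] if stats["calls"] else 0.0


@instrumented("lookup_order")
def lookup_order(order_id):
    """Hypothetical tool used only to exercise the decorator."""
    if not order_id:
        raise ValueError("order_id is required")
    return {"order_id": order_id, "status": "shipped"}


if __name__ == "__main__":
    lookup_order("A1")
    try:
        lookup_order("")
    except ValueError:
        pass
    print(dict(TOOL_STATS["lookup_order"]), error_rate("lookup_order"))
```

Keeping the measurement in a decorator means the tools themselves stay unchanged, so the same wrapper can be applied to every tool an agent is allowed to call.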

Explore these new metrics and more in our docs here: v2docs.galileo.ai/concepts/m…