Senior vibe coder · NLP/LLM research · PhD in AI & Wireless Comms

Montpellier, France

Joined March 2020

Is this the first OSS model that does o3-style parallel trajectory generation and aggregation?

🚀 Hello, Kimi K2 Thinking!

The Open-Source Thinking Agent Model is here.

🔹 SOTA on HLE (44.9%) and BrowseComp (60.2%)

🔹 Executes up to 200 – 300 sequential tool calls without human interference

🔹 Excels in reasoning, agentic search, and coding

🔹 256K context window

Built as a thinking agent, K2 Thinking marks our latest efforts in test-time scaling — scaling both thinking tokens and tool-calling turns.

K2 Thinking is now live on kimi.com in chat mode, with full agentic mode coming soon. It is also accessible via API.

🔌 API is live: platform.moonshot.ai

🔗 Tech blog: moonshotai.github.io/Kimi-K2…

🔗 Weights & code: huggingface.co/moonshotai

Interesting argument in favor of keeping the KL term in GRPO. I guess it makes sense when fine-tuning on top of an already strong reasoning baseline.
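For context, a rough sketch of where the KL term sits in a per-token GRPO objective. It assumes the commonly used "ratio minus log-ratio minus one" KL estimator against a frozen reference policy. The function names and the `eps` and `beta` defaults are illustrative assumptions, not taken from the linked argument.

```python
import math


def kl_estimate(logp_policy: float, logp_ref: float) -> float:
    """Per-token KL estimate r - log(r) - 1, with r = pi_ref / pi_policy.

    The estimate is non-negative and is zero when both policies agree.
    """
    log_ratio = logp_ref - logp_policy
    return math.exp(log_ratio) - log_ratio - 1.0


def grpo_token_objective(
    ratio: float,        # pi_policy / pi_old for this token
    advantage: float,    # group-normalized advantage
    logp_policy: float,  # log-prob of the token under the current policy
    logp_ref: float,     # log-prob of the token under the reference policy
    eps: float = 0.2,    # clipping range (assumed value)
    beta: float = 0.04,  # KL weight (assumed value); beta = 0 drops the KL term
) -> float:
    """Clipped surrogate minus the weighted KL penalty, for one token."""
    clipped_ratio = max(min(ratio, 1.0 + eps), 1.0 - eps)
    surrogate = min(ratio * advantage, clipped_ratio * advantage)
    return surrogate - beta * kl_estimate(logp_policy, logp_ref)
```

Setting `beta=0.0` gives the KL-free variant, so the two setups differ only in how hard the policy is pulled back toward the reference model.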

These guys know how to benchmark. They included models released just yesterday.

Listening to @karpathy's take on mode collapse

> They [LLMs] have a collapsed data distribution. One easy way to see it is to go to ChatGPT and ask it, "Tell me a joke." It only has like three jokes.

Curious what he thinks about this work

In my experience, this works well when pair programming with AI as well. When I ask it to implement a feature or solve an issue, I always ask for several options to choose from, and I often end up not picking the first suggestion.

Are we gonna start seeing DGX Spark instances on @runpod_io, @PrimeIntellect and the like? 👀

Also @soumithchintala seems to share the same opinion

Sometimes we forget that NVIDIA wins because it's a software company. DGX Spark is a reminder of that. It's a CUDA dev machine that's beautiful enough and small enough to be on my desk and with enough memory to fit a truckload of params.

It's not the fastest or best at anything, but it's great to develop on and transfer your final training run to a H/B200, final robotics policy to your Jetson, final inference to {nvidia/apple/amd/[favorite vendor]}.

I'm now convinced that the DGX Spark is more meant to be a devkit for B200s than anything, so it doesn't make sense to compare it to Mac Studios or Ryzen AI Max+ 395s.

🚀 SGLang In-Depth Review of the NVIDIA DGX Spark is LIVE!

Thanks to @NVIDIA’s early access program, SGLang makes its first ever appearance in a consumer product, the brand-new DGX Spark.

The DGX Spark’s 128GB Unified Memory and Blackwell architecture set a new standard for local AI prototyping and edge computing. We're thrilled to bring these cutting-edge performance insights and software support to the developer community.

Our review dives into how to efficiently deploy and accelerate large models like Llama 3.1 70B and GPT-OSS using SGLang's EAGLE3 speculative decoding and @Ollama on this beautiful piece of engineering.

👇 Unboxing video and tech blog in the thread

#SGLang #NVIDIA #SparkSomethingBig #Blackwell #DGXSpark #AIInference #LLMServing

It's only a matter of time before @pewdiepie discovers @home_assistant and sees the light

Gentlemen I need your full attention.

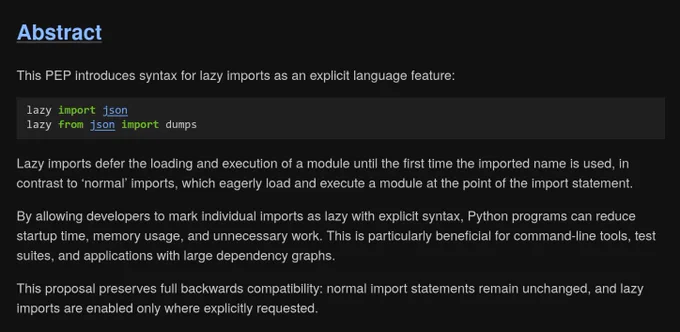

Python is introducing lazy imports.

I repeat.

Python is introducing lazy imports.

inb4 the flood of `treewide: adopt lazy imports` +123,244 PRs
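For anyone wondering what a lazy import buys you, here is a minimal sketch using `importlib.util.LazyLoader`, which is already in the standard library. This is not the new mechanism the post refers to (the post does not show it). The `lazy_import` helper name is my own and follows the recipe in the `importlib` docs.

```python
import importlib.util
import sys


def lazy_import(name: str):
    """Return a module object whose code only runs on first attribute access."""
    spec = importlib.util.find_spec(name)
    if spec is None or spec.loader is None:
        raise ImportError(f"cannot find module {name!r}")
    # Wrap the real loader so execution is deferred.
    loader = importlib.util.LazyLoader(spec.loader)
    spec.loader = loader
    module = importlib.util.module_from_spec(spec)
    sys.modules[name] = module
    loader.exec_module(module)  # sets up the lazy module, does not run its body yet
    return module


# The module body is executed here, on first attribute access, not above.
colorsys = lazy_import("colorsys")
hsv = colorsys.rgb_to_hsv(1.0, 0.0, 0.0)
```

The payoff is startup time: modules that a given run never touches are never executed.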