AI/ML | Fitness junkie & scuba diver 🏋️♂️🌊 | Pianist & classical music addict 🎶 | View are my own, duh

Santa Clara, CA

Joined August 2015

- Tweets 6,346

- Following 1,336

- Followers 127

- Likes 23,883

Jingran Z retweeted

The new 1 Trillion parameter Kimi K2 Thinking model runs well on 2 M3 Ultras in its native format - no loss in quality!

The model was quantization aware trained (qat) at int4.

Here it generated ~3500 tokens at 15 toks/sec using pipeline-parallelism in mlx-lm:

Jingran Z retweeted

Hi everyone,

Grand Theft Auto VI will now release on Thursday, November 19, 2026.

We are sorry for adding additional time to what we realize has been a long wait, but these extra months will allow us to finish the game with the level of polish you have come to expect and deserve.

Jingran Z retweeted

A few thoughts on the Kimi-K2-thinking release

1) Open source is catching up and in some cases surpassing closed source.

2) More surprising: the rise of open source isn’t coming from the U.S., as was still expected at the end of last year (LLama), but from China—kicked off in early 2025 with DeepSeek, and now confirmed by Kimi.

3)The sanctions against China aren’t achieving their intended effect; on the contrary, the chip restrictions are pushing Chinese scientists to become far more creative, essentially turning necessity into a virtue.

4)Taken together, this leads to the conclusion that China is not just becoming an increasingly strong competitor to the U.S., but is now catching up—and it’s no longer clear who will win the race toward AGI.

Jingran Z retweeted

The new Kimi model was trained with only $4.6M !!!?? Like 1/100 of their US counterparts? Can that be true!?

Remember:

> In 1969, NASA’s Apollo mission landed people on the moon with a computer that had just 4KB of RAM.

And as @crystalsssup said "Creativity loves constraints"

Jingran Z retweeted

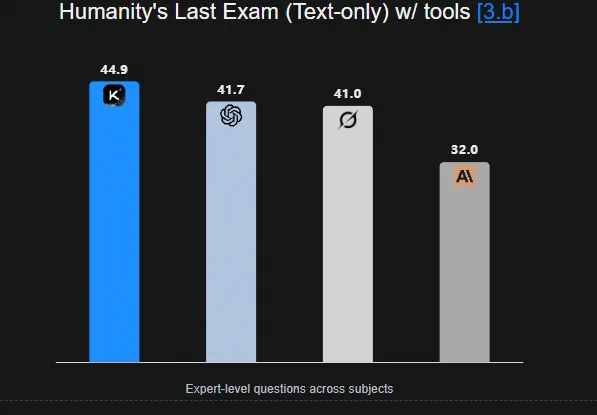

Kimi K2 Thinking is the new leading open weights model: it demonstrates particular strength in agentic contexts but is very verbose, generating the most tokens of any model in completing our Intelligence Index evals

@Kimi_Moonshot's Kimi K2 Thinking achieves a 67 in the Artificial Analysis Intelligence Index. This positions it clearly above all other open weights models, including the recently released MiniMax-M2 and DeepSeek-V3.2-Exp, and second only to GPT-5 amongst proprietary models.

It used the highest number of tokens ever across the evals in Artificial Analysis Intelligence Index (140M), but with MoonShot’s official API pricing of $0.6/$2.5 per million input/output tokens (for the base endpoint), overall Cost to Run Artificial Analysis Intelligence Index comes in cheaper than leading frontier models at $356. Moonshot also offers a faster turbo endpoint priced at $1.15/$8 (driving a Cost to Run Artificial Analysis Intelligence Index result of $1172 for the turbo endpoint - second only to Grok 4 as the most expensive model). The base endpoint is very slow at ~8 output tokens/s while the turbo is somewhat faster at ~50 output tokens/s.

The model is one of the largest open weights models ever at 1T total parameters with 32B active. K2 Thinking is the first reasoning model release in Moonshot AI’s Kimi K2 model family, following non-reasoning Kimi K2 Instruct models released previously in July and September 2025.

Moonshot AI only refers to post-training in their announcement. This release highlights the continued trend of post-training & specifically RL driving gains in performance for reasoning models and in long horizon tasks involving tool calling.

Key takeaways:

➤ Details: text only (no image input), 256K context window, natively released in INT4 precision, 1T total with 32B active (~594GB)

➤ New leader in open weights intelligence: Kimi K2 Thinking achieves a 67 in the Artificial Analysis Intelligence Index. This is the highest open weights score yet and significantly higher than gpt-oss-120b (61), MiniMax-M2 (61), Qwen 235B A22B 2507 (57) and DeepSeek-V3.2-Exp (57). This release continues the trend of open weights models closely following proprietary models in intelligence achieved

➤ China takes back the open weights frontier: Releases from China based AI labs have led in open weights intelligence offered for most of the past year. OpenAI’s gpt-oss-120b release in August 2025 briefly took back the leadership position for the US. Moonshot AI’s K2 Thinking takes back the leading open weights model mantle for China based AI labs

➤ Strong agentic performance: Kimi K2 Thinking demonstrates particular strength in agentic contexts, as showcased by its #2 position in the Artificial Analysis Agentic Index - where it is second only to GPT-5. This is mostly driven by K2 Thinking achieving 93% in 𝜏²-Bench Telecom, an agentic tool use benchmark where the model acts as a customer service agent. This is the highest score we have independently measured. Tool use in long horizon agentic contexts was a strength of Kimi K2 Instruct and it appears this new Thinking variant makes substantial gains

➤ Top open weights coding model, but behind proprietary models: K2 Thinking does not score a win in any of our coding evals - it lands in 6th place in Terminal-Bench Hard, 7th place in SciCode and 2nd place in LiveCodeBench. Compared to open weights models, it is in first or first equal for each of these evals - and therefore comes in ahead of previous open weights leader DeepSeek V3.2 in our Artificial Analysis Coding Index

➤ Biggest leap for open weights in Humanity’s Last Exam: K2 Thinking’s strongest results include Humanity’s Last Exam, where we measured a score of 22.3% (no tools) - an all time high for open weights models and coming in only behind GPT-5 and Grok 4

➤ Verbosity: Kimi K2 Thinking is very verbose - taking 140M total tokens are used to run our Intelligence Index evaluations, ~2.5x the number of tokens used by DeepSeek V3.2 and ~2x compared to GPT-5. This high verbosity drives both higher cost and higher latency, compared to less verbose models. On Mooshot’s base endpoint, K2 Thinking is 2.5x cheaper than GPT-5 (high) but 9x more expensive than DeepSeek V3.2 (Cost to Run Artificial Analysis Intelligence Index)

➤ Reasoning variant of Kimi K2 Instruct: The model, as per its naming, is a reasoning variant of Kimi K2 Instruct. The model has the same architecture and same number of parameters (though different precision) as Kimi K2 Instruct. It continues to only support text inputs and outputs

➤ 1T parameters but INT4 instead of FP8: Unlike Moonshot’s prior Kimi K2 Instruct releases that used FP8 precision, this model has been released natively in INT4 precision. Moonshot used quantization aware training in the post-training phase to achieve this. The impact of this is that K2 Thinking is only ~594GB, compared to just over 1TB for K2 Instruct and K2 Instruct 0905 - which translates into efficiency gains for inference and training. A potential reason for INT4 is that pre-Blackwell NVIDIA GPUs do not have support for FP4, making INT4 more suitable for achieving efficiency gains on earlier hardware

➤ Access: The model is available on @huggingface with a modified MIT license. @Kimi_Moonshot is serving an official API (available globally) and third party inference providers are already launching endpoints - including @basetenco, @FireworksAI_HQ, @novita_labs, @parasail_io

Jingran Z retweeted

These numbers are amazing for an open-source model. We're working on bringing this model up for Perplexity users with our own deployment in US data centers.

Jingran Z retweeted

Someone shared a fascinating visual of an AI agent moving through a real codebase - reviewing structures, making decisions, and committing changes.

It’s a reminder that software development is quietly entering a new phase.

Not automation in the traditional sense, but systems that can understand context, navigate complexity, and contribute meaningfully.

We’re still early, but the trajectory is unmistakable: coding is becoming more collaborative between humans and intelligent agents than ever before.